Based on the latest Pascal architecture, Nvidia claim the GTX 1080 is the most advanced graphics card ever made. The GTX 1080 is built on a new 16nm FinFET manufacturing process allowing the chip to incorporate more transistors – subsequently allowing for higher clock speeds and improved power efficiency.

The GTX 1080 also adopts GDDR5X memory which enhances bandwidth significantly over previous GDDR5 designs. Is Nvidia's GTX 1080 the new GPU king?

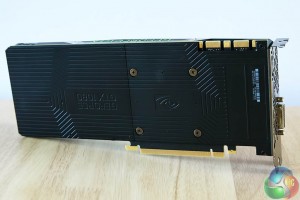

The GTX 1080 ‘Founders Edition’ is crafted by Nvidia engineers and is built around a die cast aluminium body with a low profile backplate. This is all machine finished and heat treated for strength.

Interestingly I have read some negative comments from KitGuru readers in the last week saying they prefer the appearance of the older Nvidia reference cooler, but I must say, I quite like the angular shapes and patterns in the design.

| GPU | GeForce GTX 960 | GeForce GTX 970 | GeForce GTX 980 | GeForce GTX 980 Ti | GeForce GTX Titan X | GeForce GTX 1080 |
| --- | --- | --- | --- | --- | --- | --- |
| Streaming Multiprocessors | 8 | 13 | 16 | 22 | 24 | 20 |
| CUDA Cores | 1024 | 1664 | 2048 | 2816 | 3072 | 2560 |
| Base Clock | 1126 MHz | 1050 MHz | 1126 MHz | 1000 MHz | 1000 MHz | 1607 MHz |
| GPU Boost Clock | 1178 MHz | 1178 MHz | 1216 MHz | 1075 MHz | 1076 MHz | 1733 MHz |
| Total Video Memory | 2GB | 4GB | 4GB | 6GB | 12GB | 8GB |
| Texture Units | 64 | 104 | 128 | 176 | 192 | 160 |
| Texture Fill-Rate | 72.1 Gigatexels/sec | 109.2 Gigatexels/sec | 144.1 Gigatexels/sec | 176 Gigatexels/sec | 192 Gigatexels/sec | 257.1 Gigatexels/sec |
| Memory Clock | 7010 MHz | 7000 MHz | 7000 MHz | 7000 MHz | 7000 MHz | 5005 MHz |
| Memory Bandwidth | 112.16 GB/s | 224 GB/s | 224 GB/s | 336.5 GB/s | 336.5 GB/s | 320 GB/s |
| Bus Width | 128bit | 256bit | 256bit | 384bit | 384bit | 256bit |
| ROPs | 32 | 56 | 64 | 96 | 96 | 64 |
| Manufacturing Process | 28nm | 28nm | 28nm | 28nm | 28nm | 16nm |
| TDP | 120 watts | 145 watts | 165 watts | 250 watts | 250 watts | 180 watts |

The Nvidia GTX 1080 ships with 2560 CUDA cores and 20 SM units. The 8GB of GDDR5X memory is connected via a 256 bit memory interface. This new G5X memory offers a huge step up in bandwidth compared with the older GDDR5 standard. It runs at a data rate of 10Gbps, giving 43% more bandwidth than the GTX 980.
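The 43% figure can be checked with a little arithmetic. This is a minimal sketch, assuming peak bandwidth is simply the per-pin data rate multiplied by the bus width and divided by eight; the function name is my own and purely illustrative.

```python
def peak_bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s: per-pin data rate (Gbps) x bus width (bits) / 8."""
    return data_rate_gbps * bus_width_bits / 8


gtx_980 = peak_bandwidth_gb_s(7.0, 256)    # GDDR5 at 7 Gbps on a 256 bit bus
gtx_1080 = peak_bandwidth_gb_s(10.0, 256)  # GDDR5X at 10 Gbps on a 256 bit bus
uplift_percent = (gtx_1080 / gtx_980 - 1) * 100

print(f"GTX 980:  {gtx_980:.0f} GB/s")
print(f"GTX 1080: {gtx_1080:.0f} GB/s")
print(f"Uplift:   {uplift_percent:.0f}%")
```

Both results line up with the bandwidth row in the table above: 224 GB/s for the GTX 980 and 320 GB/s for the GTX 1080.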

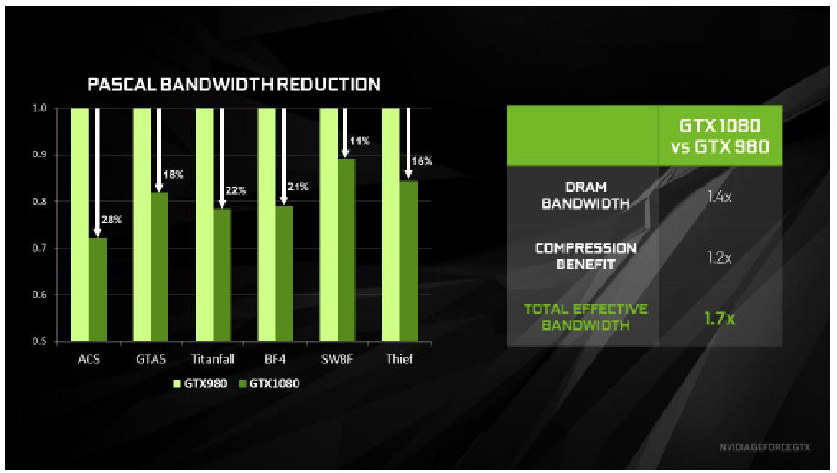

Architectural improvements in memory compression give an effective memory bandwidth increase of 1.7x when compared against the previous Nvidia flagship. There is a lot more to this performance than the figures above, so I recommend our readers spend a little time reading Page 2 of this review today, where I try to break the technology advancements down as simply as possible.

For the last 10 days or so I have been retesting many graphics cards with the latest AMD and Nvidia drivers. We list all the partnering hardware on the testing methodology page of the review. First let's have a quick look at the new Nvidia technology.

The Nvidia GTX 1080 is based on the Pascal GPU architecture that was first introduced in the high end, datacenter class GP100 GPU. Nvidia's new Pascal GPU (GP104) is designed to deal with upcoming DirectX 12 games and Vulkan graphics. Nvidia will be placing a lot of focus on VR going forward as well.

Nvidia have been at the forefront of power efficiency for some time now. I was a huge fan of the Maxwell architecture and I still remember the first GTX 750 Ti I reviewed way back in February 2014.

This was the first Maxwell card we tested on launch day and it delivered solid 1080p performance, all without even requiring a single PCIe power connector – and best of all it required only 60 watts when gaming. When the higher end Maxwell cards were released later in the year, Nvidia dominated the market.

The GTX 1080's GPU packs a whopping 7.2 billion transistors and 2560 single precision CUDA cores. It is built on the 16nm FinFET manufacturing process which will drive higher levels of performance while increasing power efficiency – critical when operating at very high clock speeds.

Nvidia GameWorks is a key focus for Nvidia, although it would be fair to say that it has been the centre point of plenty of controversy over the last year. Nvidia state that the GTX 1080's performance, combined with GameWorks libraries, enables developers to ‘readily implement more interactive and cinematic experiences’. Nvidia claim that the Pascal architecture, 16nm FinFET manufacturing process and GDDR5X memory give the GTX 1080 a total 70% lead over the previous GTX 980 flagship.

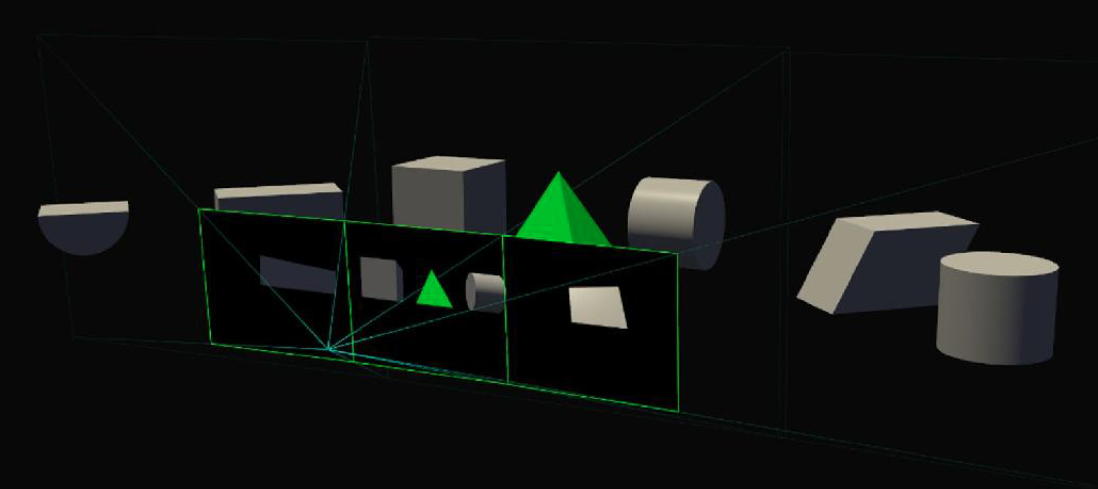

Perspective Surround is a new technology. It uses Simultaneous Multi Projection (SMP), described below, to render a wider field of view with the correct image perspective across all three monitors in a single geometry pass.

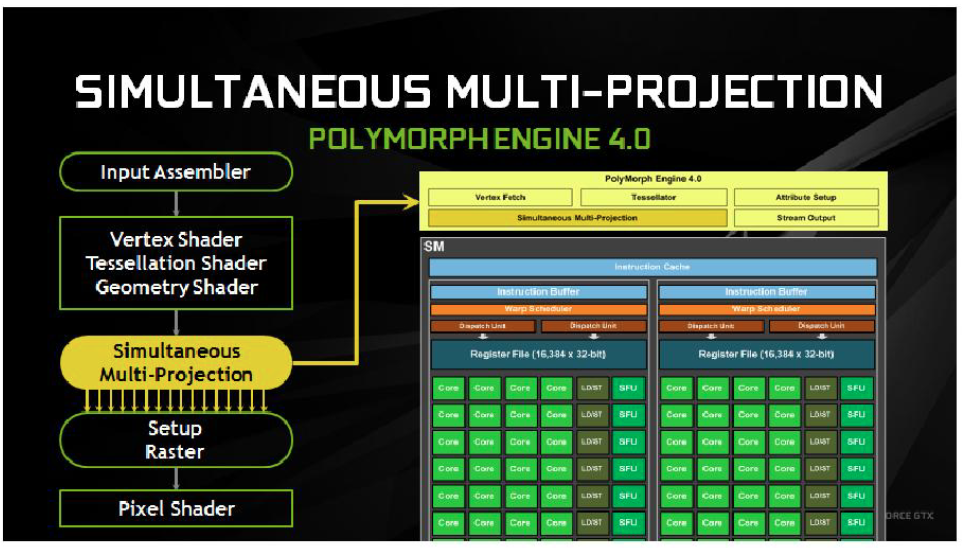

Nvidia have also developed a new Simultaneous Multi Projection technology, which for the first time allows the GPU to simultaneously map a single primitive onto up to sixteen different projections from the same viewpoint.

Each of these projections can be either mono or stereo and it will allow the GTX 1080 to accurately match the curved projection required for VR displays as well as the multiple projection angles required for surround display configurations.

Pascal graphics cards are based around different configurations of Graphics Processing Clusters (GPCs), Streaming Multiprocessors (SMs) and memory controllers. Each Streaming Multiprocessor is paired with a PolyMorph Engine that handles tessellation, vertex fetch, viewport transformation, perspective correction and vertex attribute setup. The GP104 PolyMorph Engine includes the Simultaneous Multi Projection unit discussed above. The combination of an SM plus one PolyMorph Engine is referred to as a Texture Processing Cluster (TPC).

The GP104 powered GTX 1080 consists of four GPCs, twenty Pascal Streaming Multiprocessors and eight memory controllers. Inside the GTX 1080 each GPC ships with a dedicated raster engine and five SMs. Each of these SMs contains 128 CUDA cores, 256KB of register file capacity, a 96KB shared memory unit, 48KB of total L1 cache storage and eight texture units.

The SM is critical: it is a highly parallel multiprocessor which schedules warps (groups of 32 threads) onto CUDA cores and other execution units within the SM. Almost all operations flow through the SM during the rendering pipeline. With 20 SMs enabled, the GTX 1080 has 2560 CUDA cores and 160 texture units.

The GTX 1080 has eight 32 bit memory controllers (256 bit in total). Connected to each 32 bit memory controller are eight ROP units and 256KB of Level 2 cache. The full GP104 chip in the GTX 1080 has a total of 64 ROPs and 2,048KB of Level 2 cache.
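All of the headline totals fall out of the per-unit counts quoted above. The sketch below just multiplies them through; the class and field names are my own and are not taken from any Nvidia tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gp104Layout:
    """Per-unit counts for the GTX 1080, as quoted in the text above."""
    gpcs: int = 4
    sms_per_gpc: int = 5
    cuda_cores_per_sm: int = 128
    texture_units_per_sm: int = 8
    memory_controllers: int = 8
    controller_width_bits: int = 32
    rops_per_controller: int = 8
    l2_kb_per_controller: int = 256

    def totals(self) -> dict:
        """Multiply the per-unit counts up to whole-chip totals."""
        sms = self.gpcs * self.sms_per_gpc
        return {
            "sms": sms,
            "cuda_cores": sms * self.cuda_cores_per_sm,
            "texture_units": sms * self.texture_units_per_sm,
            "bus_width_bits": self.memory_controllers * self.controller_width_bits,
            "rops": self.memory_controllers * self.rops_per_controller,
            "l2_cache_kb": self.memory_controllers * self.l2_kb_per_controller,
        }


gtx_1080_totals = Gp104Layout().totals()
for name, value in gtx_1080_totals.items():
    print(f"{name:>15}: {value}")
```

The output matches the specification table: 20 SMs, 2560 CUDA cores, 160 texture units, a 256 bit bus, 64 ROPs and 2,048KB of Level 2 cache.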

| GPU | GeForce GTX 980 (Maxwell) | GeForce GTX 1080 (Pascal) |
| --- | --- | --- |
| Streaming Multiprocessors | 16 | 20 |
| CUDA Cores | 2048 | 2560 |
| Base Clock | 1126 MHz | 1607 MHz |
| GPU Boost Clock | 1216 MHz | 1733 MHz |
| GFLOPS | 4981 | 8873 |
| Texture Units | 128 | 160 |
| Texture Fill-Rate | 155.6 Gigatexels/sec | 277.3 Gigatexels/sec |
| Memory Clock (Data Rate) | 7,000 MHz | 10,000 MHz |
| Memory Bandwidth | 224 GB/s | 320 GB/s |
| ROPs | 64 | 64 |
| L2 Cache Size | 2048 KB | 2048 KB |
| TDP | 165 watts | 180 watts |
| Transistors | 5.2 billion | 7.2 billion |
| Die Size | 398 mm² | 314 mm² |
| Manufacturing Process | 28nm | 16nm |
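The GFLOPS and texture fill-rate rows can be reproduced from the core counts and boost clocks. This is a rough sketch, assuming the usual convention that each CUDA core retires one fused multiply-add (counted as two floating point operations) per clock and each texture unit delivers one texel per clock; the function names are illustrative.

```python
def peak_gflops(cuda_cores: int, boost_mhz: int) -> float:
    """Peak single precision GFLOPS, assuming 2 FLOPs (one FMA) per core per clock."""
    return 2 * cuda_cores * boost_mhz / 1000


def texel_rate_gtexels(texture_units: int, clock_mhz: int) -> float:
    """Peak texture fill-rate in Gigatexels/sec, assuming 1 texel per unit per clock."""
    return texture_units * clock_mhz / 1000


gtx_980_gflops = peak_gflops(2048, 1216)
gtx_1080_gflops = peak_gflops(2560, 1733)
gtx_980_texels = texel_rate_gtexels(128, 1216)
gtx_1080_texels = texel_rate_gtexels(160, 1733)

print(f"GTX 980:  {gtx_980_gflops:.0f} GFLOPS, {gtx_980_texels:.1f} Gigatexels/sec")
print(f"GTX 1080: {gtx_1080_gflops:.0f} GFLOPS, {gtx_1080_texels:.1f} Gigatexels/sec")
```

Note that this table quotes fill-rate at the boost clock. The table on the first page uses the base clock instead, which is why the GTX 1080 is listed there at 257.1 Gigatexels/sec (160 x 1607MHz).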

GDDR5X is a critical performance improvement that Nvidia wanted to bring with Pascal. It is a faster and more advanced interface standard, achieving 10 Gbps transfer rates, or 100 picoseconds (ps) between data bits. To put this into context, light travels only about an inch in 100 ps; the GDDR5X IO circuit has less than half that time available to sample a bit as it arrives, or the data will be lost as the bus transitions to a new set of values.
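For anyone who wants to check the timing claim, the sums are short. The only value below that does not come from the text is the speed of light in a vacuum.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # in a vacuum

data_rate_bits_per_s = 10e9               # 10 Gbps per pin
bit_time_s = 1 / data_rate_bits_per_s     # time between successive data bits
bit_time_ps = bit_time_s * 1e12

distance_cm = SPEED_OF_LIGHT_M_PER_S * bit_time_s * 100
distance_inches = distance_cm / 2.54

print(f"Bit time: {bit_time_ps:.0f} ps")
print(f"Light travels {distance_cm:.2f} cm ({distance_inches:.2f} inches) in that time")
```

That works out at a shade under 3cm, or a little over an inch, per bit.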

Coping with such a tremendous speed of operation required the development of a new IO circuit architecture, which took Nvidia years to implement. Nvidia have been focusing on reducing power consumption since early 2014, and these new circuit developments, the lower 1.35V GDDR5X standard and newer process technologies together allowed for a 43% higher operating frequency without increasing power demand.

If you were paying attention on the first page of this review, you will have seen that the memory interface on the GTX 1080 is 256 bit, whereas the earlier GTX 980 Ti and GTX Titan X were both 384 bit. All of these cards use a system of enhanced memory compression, utilising lossless memory compression techniques to reduce DRAM bandwidth demands. Memory compression helps in three key areas; it:

- Reduces the amount of data written out to memory.

- Reduces the amount of data transferred from memory to L2 cache, effectively providing a capacity increase for the L2 cache, as a compressed tile (block of frame buffer pixels or samples) has a smaller memory footprint than an uncompressed tile.

- Reduces the amount of data transferred between clients such as the texture unit and the frame buffer.

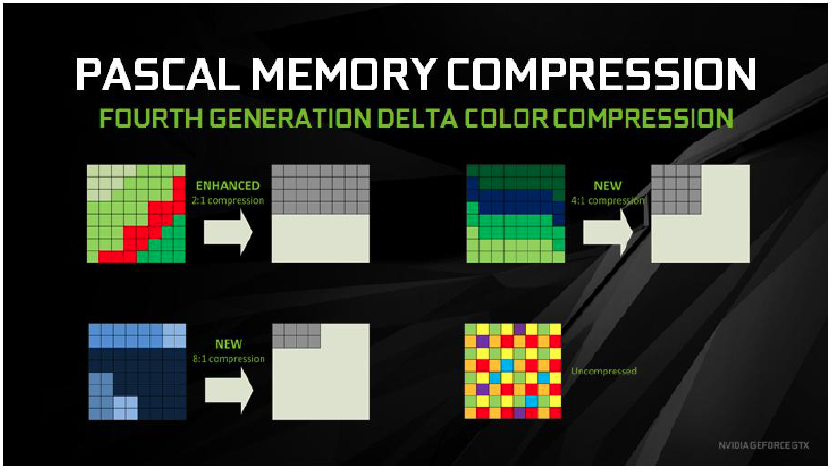

Of all the algorithms the GPU compression pipeline has to handle, one of the most important is delta colour compression. The GPU will calculate the differences between pixels in a block and then store that block as a set of reference pixels as well as the delta values from the reference. If the deltas are small then only a few bits per pixel are required. If the packed result of reference values and the delta values is less than half the uncompressed storage size, then delta colour compression is successful and the data is stored at 2:1 compression, which is half size.

The GTX 1080 benefits from a significantly enhanced delta colour compression system.

- 2:1 compression has been improved to be more effective than before.

- A new 4:1 delta colour compression mode is added to cover cases where the per pixel deltas are very small and can be packed into 25% of the original storage.

- A new 8:1 delta colour compression mode is added to combine 4:1 constant colour compression of 2×2 pixel blocks with 2:1 compression of the deltas between those blocks.
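To make the idea more concrete, here is a toy sketch of delta compression on a single 16 pixel block of 8 bit values. It is emphatically not Nvidia's hardware algorithm: I am assuming one reference value per block (the smallest pixel), fixed width unsigned deltas, and only the 2:1 and 4:1 outcomes. The 8:1 mode is left out, and every name here is hypothetical.

```python
def pack_block(pixels: list, bits_per_pixel: int = 8) -> tuple:
    """Pick a compression mode for one non-empty block of single channel pixels.

    Toy model: store one reference value (the block minimum) plus a fixed
    width delta for every pixel, then see what fraction of the raw size
    the packed result fits into.
    """
    reference = min(pixels)
    deltas = [pixel - reference for pixel in pixels]
    delta_bits = max(deltas).bit_length()  # width needed for the largest delta
    packed_bits = bits_per_pixel + delta_bits * len(pixels)
    raw_bits = bits_per_pixel * len(pixels)

    if packed_bits * 4 <= raw_bits:
        mode = "4:1"           # fits in a quarter of the raw storage
    elif packed_bits * 2 <= raw_bits:
        mode = "2:1"           # fits in half of the raw storage
    else:
        mode = "uncompressed"  # deltas too large, store the block as-is
    return mode, packed_bits


flat_sky = [200] * 16                               # identical pixels
gentle_gradient = [100 + (i % 4) for i in range(16)]  # deltas of 0 to 3
noisy_texture = [(i * 37) % 256 for i in range(16)]   # large deltas

for name, block in [("flat sky", flat_sky),
                    ("gentle gradient", gentle_gradient),
                    ("noisy texture", noisy_texture)]:
    mode, packed_bits = pack_block(block)
    print(f"{name:>15}: {mode} ({packed_bits} bits packed vs {8 * len(block)} raw)")
```

The flat block packs into a quarter of its raw size, the gentle gradient into half, and the noisy block has to be stored uncompressed, which mirrors the point that the scheme only pays off when neighbouring pixels are similar.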

The latest games place a dramatic workload on a graphics card, often running with multiple independent or asynchronous workloads that ultimately work together to create the final rendered image. For overlapping workloads, such as when a GPU is dealing with physics and audio processing, Pascal has new ‘dynamic load balancing’ support.

The Maxwell architecture dealt with overlapping workloads by statically partitioning the GPU into a subset that runs graphics and a subset that runs compute. This was only efficient provided that the balance of work between the two loads roughly matched the partitioning ratio. New hardware dynamic load balancing solves the problem by allowing either workload to expand into idle resources.
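A very rough model shows why the partitioning ratio matters. This sketch assumes perfectly divisible work, no switching overhead and arbitrary work units, so treat it as an idealised illustration rather than a description of Nvidia's scheduler; the function names and workload numbers are made up.

```python
def static_partition_time(graphics_work: float, compute_work: float,
                          total_units: int, graphics_share: float) -> float:
    """Time to finish both workloads when the GPU is split at a fixed ratio."""
    graphics_units = total_units * graphics_share
    compute_units = total_units - graphics_units
    # Each partition works alone; the frame is done when the slower one finishes.
    return max(graphics_work / graphics_units, compute_work / compute_units)


def dynamic_balance_time(graphics_work: float, compute_work: float,
                         total_units: int) -> float:
    """Idealised time when idle units can always pick up the other workload."""
    return (graphics_work + compute_work) / total_units


SM_COUNT = 20  # the GTX 1080's SM count, split 50/50 in the static case

balanced = static_partition_time(100, 100, SM_COUNT, 0.5)
lopsided_static = static_partition_time(160, 40, SM_COUNT, 0.5)
lopsided_dynamic = dynamic_balance_time(160, 40, SM_COUNT)

print(f"Balanced work, static split:  {balanced:.1f}")
print(f"Lopsided work, static split:  {lopsided_static:.1f}")
print(f"Lopsided work, dynamic split: {lopsided_dynamic:.1f}")
```

When the work matches the split, static partitioning loses nothing. When it is lopsided, the compute half sits idle while graphics is still busy, and in this idealised case the static split takes 60% longer than dynamic balancing.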

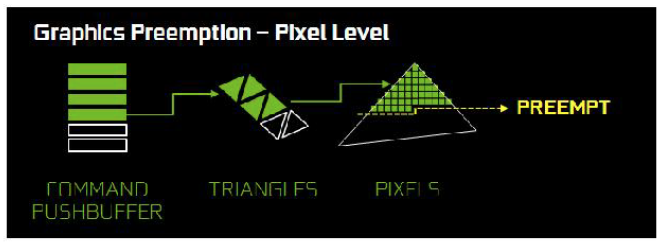

Time critical workloads are another important asynchronous compute scenario. An asynchronous timewarp operation for instance must complete before scanout starts, or a frame is dropped. The GPU therefore in this scenario needs to support very fast, low latency preemption to move the less critical workload from the GPU, so the more critical workload can run as soon as possible.

A single rendering command from a game engine can potentially contain hundreds of draw calls, with each draw call containing hundreds of triangles, and each triangle containing hundreds of pixels which all have to be shaded and rendered. Traditionally, a GPU that implements preemption at a high level in the graphics pipeline would have to complete all of this work before switching tasks, which could theoretically result in a very long delay.

Nvidia wanted to improve this situation so they implemented Pixel Level Preemption in the GPU architecture of Pascal. The graphics units in Pascal are superior in that they can now keep track of their intermediate progress on rendering work, so when preemption is requested they can stop at that point, save off context information to start up later, and preempt quickly.

When we last ran a poll on KitGuru, less than 5% of readers who responded were running with an SLI configuration. There is no doubt that only a tiny percentage of enthusiast gamers have a system with more than one graphics card, and even fewer with 3 or 4.

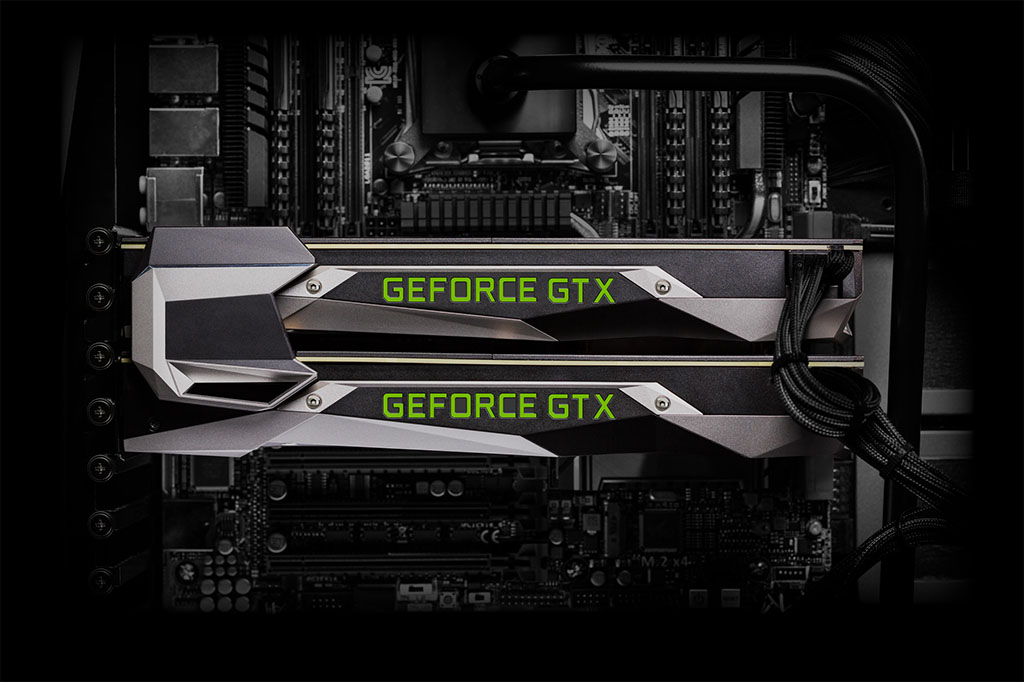

With the release of Pascal, Nvidia are making some big changes to SLI, so we felt it was important to highlight them in our analysis today.

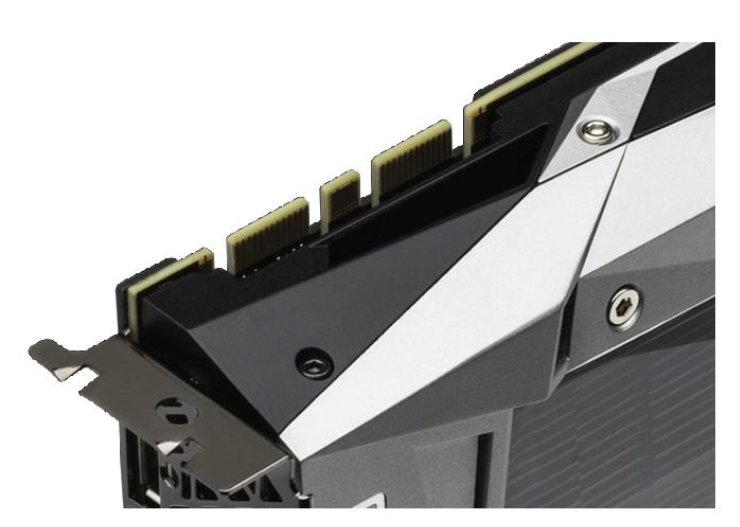

While AMD have adopted a ‘bridgeless Crossfire’ system in recent years, Nvidia SLI gamers have always had to rely on a physical SLI bridge and this won't change. What does change, however, is the implementation of the bridging system.

Two of the SLI interfaces have historically been used to enable communications between three or more GPUs in a system. The second SLI interface is required for 3 and 4 way SLI as all the GPUs in a system need to transfer their rendered frames to the display connected to the master GPU. Up to this point each interface has been independent.

With Pascal, the two interfaces are now linked together to improve bandwidth between the graphics cards. The new dual link SLI mode allows both SLI interfaces to be used together to feed one high resolution panel or multiple displays for Nvidia surround.

Dual Link SLI mode is supported with a new SLI bridge which Nvidia are calling SLI HB. This new bridging system allows for higher speed data transfer between the two GPUs by connecting both interfaces.

So, do the old SLI connectors work with the new Pascal architecture? Nvidia say the GTX 1080 cards are compatible with ‘legacy SLI bridges, however the GPU will be limited to the maximum speed of the bridge being used'.

Sadly Nvidia didn't send us two cards so we have been unable to test any of this but they do say ‘Connecting both SLI interfaces is the best way to achieve full SLI clock speeds with GTX 1080's in SLI'.

The new SLI HB Bridge with a pair of GTX 1080s runs at 650MHz, compared to 400MHz in previous GeForce GPUs using a ‘legacy’ SLI bridge. To complicate matters even further, Nvidia claim that older SLI bridges will get a ‘speed boost’ when paired up with Pascal hardware. Custom bridges that include LED lighting will now operate at up to 650MHz when used with the GTX 1080, utilising the faster IO capabilities of Pascal. Nvidia recommend the use of the new HB Bridge for 4K, 5K and surround panel resolutions.

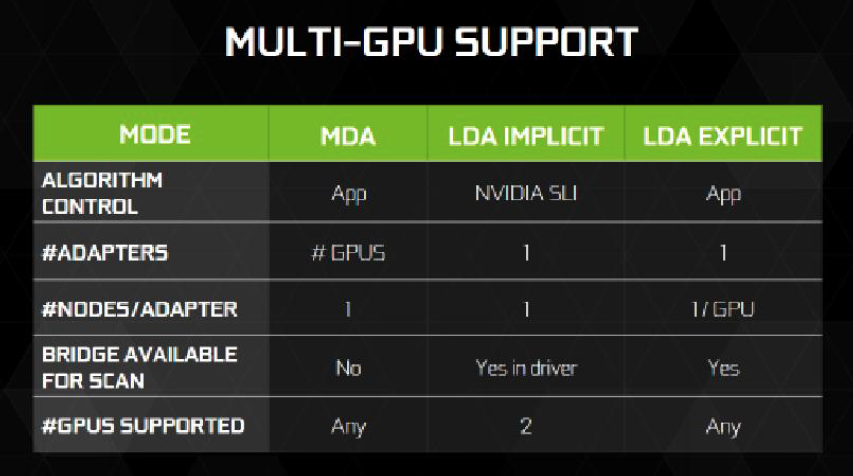

Microsoft have changed multi GPU functionality in the DirectX 12 API. There are two basic options for developers when using Nvidia hardware in multi GPU mode on DirectX 12. These are known as Linked Display Adapter (LDA) mode and Multi Display Adapter (MDA) mode.

LDA Mode has two forms: Implicit LDA Mode, which Nvidia uses for SLI, and Explicit LDA Mode, which allows game developers to handle much of the responsibility for multi GPU operations. MDA and LDA Explicit Mode were created to give game developers more control over the game engine as well as multi GPU performance.

In LDA mode each GPU's memory can be combined to appear as one large pool of memory for the developer, although there are some exceptions regarding peer-to-peer memory. There is a performance penalty to be paid if the data needed resides in the other GPU's memory, since the memory is accessed through inter GPU peer-to-peer communication, such as over PCIe. MDA Mode is different in that each GPU's memory is accessed independently of the other GPU, so the graphics cards cannot access each other's memory.

LDA is a mode for multi GPU systems that have GPUs that are similar, while MDA has fewer limitations – discrete GPUs can be paired with integrated GPUs for instance, or even discrete GPUs from another manufacturer.

The GeForce GTX 1080 supports up to two GPUs in SLI. 3 Way and 4 Way SLI modes are no longer recommended by Nvidia. Nvidia claim that as games have evolved it is becoming more difficult for 3 and 4 way SLI modes to provide beneficial performance scaling. They add that games are becoming more bottlenecked by the CPU when running 3 and 4 way SLI and that games are using techniques that make it difficult to extract frame to frame parallelism.

Nvidia recommend that systems are built to target MDA or LDA Explicit mode, or 2 Way SLI with a dedicated PhysX GPU.

Enthusiast Key

Nvidia are clearly moving away from 3 and 4 way SLI systems, however they are still offering some support for hard core gamers who demand 3 or 4 graphics cards. Nvidia do admit that some games will still benefit from more than 2 graphics cards.

To accommodate those gamers with 3 or 4 Nvidia graphics cards the company are incorporating a new system based around the concept of an ‘Enthusiast Key'. The process requires:

1: Run an app locally to generate a signature for the GPU you own.

2: Request an Enthusiast Key from an upcoming Enthusiast Key website.

3: Download the key.

4: Install the key to unlock the 3 and 4 way function.

Nvidia have yet to release details of the Enthusiast Key website, but they claim this will become public knowledge after the GTX 1080 cards are officially launched.

This seems rather convoluted to me but perhaps it will work better than I fear it will. I have also found SLI to be rather problematic in the last year.

Rise Of The Tomb Raider required a hack to get SLI working properly at all which I found quite shocking considering Nvidia were involved in the development of the game. At Ultra HD 4k resolutions the extra video card makes a huge difference to the overall gaming experience. More information on this over HERE.

Far Cry Primal ran very badly at Ultra HD 4K with our reference overclocked 5960X system featuring 3x Titan X cards in SLI. The best way around this was to manually force the Tri-SLI configuration into 2 way SLI by adjusting the Nvidia control panel to use the third Titan X as a dedicated PhysX card.

With two cards in SLI the game was perfectly smooth at Ultra HD 4K, although a Titan X was complete overkill for simple PhysX duties. Clearly there was no effort invested in getting 3 way SLI working at all, which is disappointing to see, especially with a high profile AAA title.

Perhaps this rather public move to focus on simple 2 way SLI might help developers and Nvidia produce more working SLI profiles. Time will tell, although I have my doubts.

Fast Sync

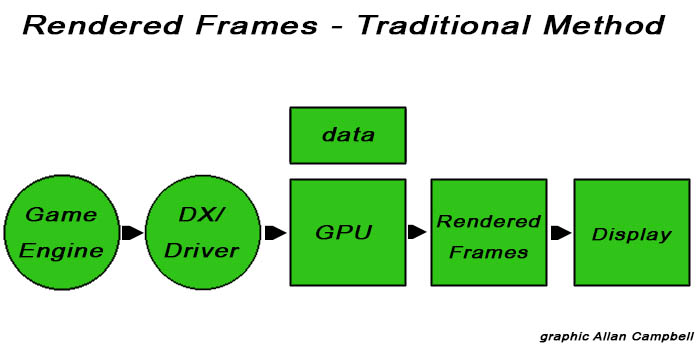

Nvidia have implemented a new ‘latency conscious alternative' to traditional Vertical Sync (V-SYNC). It eliminates tearing while allowing the GPU to render unrestrained by the refresh rate to reduce input latency.

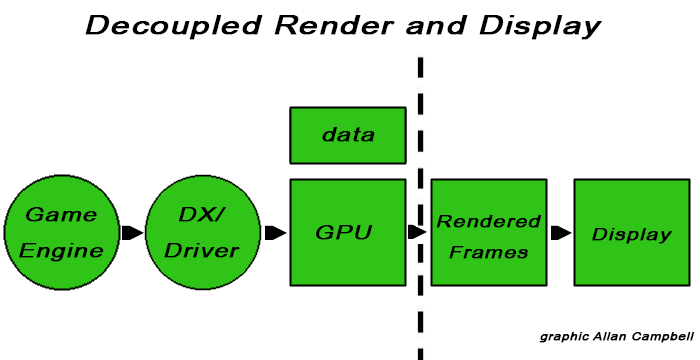

Above, a diagram showing how traditional frame rendering works through the Nvidia graphics pipeline. The game engine has to calculate animation time and the encoding inside the frame which eventually gets rendered. The draw calls and information are communicated forward, and the Nvidia driver and GPU convert them into the actual rendering and then produce a rendered frame in the GPU frame buffer. The last step is to scan the frame out to the display.

Nvidia have adapted their thinking with Pascal.

They mentioned Counter Strike: Global Offensive as one such test subject. Nvidia say the Pascal hardware is able to power that game at hundreds of frames per second and currently there are two choices for most people – VSYNC on, or OFF.

A lot of people use VSYNC to eliminate frame tearing. With V-SYNC on however the pipeline gets back-pressured all the way to the game engine and the pipeline slows down to the refresh rate of the display. When enabled the display is basically telling the game engine to slow down because only one frame can be generated for each display refresh interval.

With VSYNC off the pipeline is told to ignore the display refresh rate and to produce game frames as fast as the hardware will allow. With VSYNC off the latency is reduced as there is no backpressure but frame tearing can rear its ugly head. Many eSports gamers are playing with it disabled as they don't want to deal with increased input latencies. Frame tearing at high frame rates can lead to jittering which can cause issues for gameplay.

Nvidia have decided to look at the traditional process and are decoupling the rendering and display from the pipeline. This allows the rendering stage to continually generate new frames from data sent by the game engine and driver at full speed – meaning those frames can be temporarily stored in the GPU frame buffer.

Fast Sync removes flow control, meaning that the game engine works as if VSYNC is off, and with the removal of back pressure input latency is almost as low as with VSYNC off. Frame tearing is removed because Fast Sync selects which of the rendered frames to scan to the display.

| | V-SYNC ON | V-SYNC OFF | FAST SYNC |
| --- | --- | --- | --- |
| Flow Control | Backpressure | None | None |
| Input Latency | High | Low | Low |
| Frame Tearing | None | Tearing | None |

Fast Sync allows the front of the pipeline to run as fast as it can, while only selected, complete frames are scanned out to the display so they can be shown without tearing. Nvidia claim that turning Fast Sync on delivers only 8ms more latency than V-SYNC off, while producing entire frames without tearing.

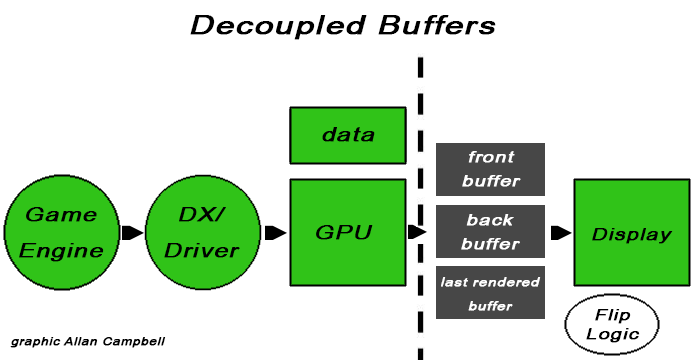

Imagine three areas in the frame buffer that have been assigned in different ways. The first two buffers are very similar to double buffered VSYNC in classic GPU pipelines. The Front Buffer (FB) is the buffer scanned out to the display. The Back Buffer (BB) is the buffer that is currently being rendered, and it can't be scanned out until it is completed. Standard VSYNC in high render rate games has a negative impact on latency, as the game has to wait for the display refresh interval to flip the back buffer to become the front buffer before another frame can be rendered into the back buffer. The process is slowed down, and adding more back buffers only increases latency further.

Fast Sync adds a third buffer called the Last Rendered Buffer (LRB), which holds a copy of the most recently completed back buffer until the front buffer has finished scanning. At this point the Last Rendered Buffer is copied to the Front Buffer and the process continues. Direct buffer copies would be inefficient, so in practice the buffers are simply renamed. The buffer being scanned to the display is the FB, the buffer being actively rendered is the BB and the buffer holding the most recently rendered frame is the LRB.
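The buffer renaming is easier to follow as a small simulation. The sketch below is my own simplified model of the behaviour described above, not Nvidia's flip logic: time advances in whole milliseconds, a frame completes every `render_interval_ms`, the display refreshes every `refresh_interval_ms`, and the three physical buffers A, B and C only ever swap roles.

```python
def simulate_fast_sync(render_interval_ms: int, refresh_interval_ms: int,
                       duration_ms: int) -> list:
    """Return the frame number shown at each display refresh (None = nothing yet)."""
    # Role -> physical buffer. Flips rename roles; pixel data is never copied.
    roles = {"front": "A", "back": "B", "last_rendered": "C"}
    # Physical buffer -> number of the frame it currently holds.
    contents = {"A": None, "B": None, "C": None}
    shown = []
    next_frame = 0

    for now_ms in range(1, duration_ms + 1):
        if now_ms % render_interval_ms == 0:
            # The GPU finishes a frame in the back buffer. That buffer becomes
            # the LRB and the old LRB is recycled as the new back buffer, so
            # rendering never waits for the display.
            contents[roles["back"]] = next_frame
            next_frame += 1
            roles["back"], roles["last_rendered"] = (
                roles["last_rendered"], roles["back"])

        if now_ms % refresh_interval_ms == 0:
            # Scanout of the front buffer has finished. Flip to the LRB only
            # if it holds a newer complete frame than the one on screen.
            newest = contents[roles["last_rendered"]]
            current = contents[roles["front"]]
            if newest is not None and (current is None or newest > current):
                roles["front"], roles["last_rendered"] = (
                    roles["last_rendered"], roles["front"])
            shown.append(contents[roles["front"]])

    return shown


# A game finishing a frame every 4 ms on a display refreshing every 16 ms.
fast_game = simulate_fast_sync(render_interval_ms=4, refresh_interval_ms=16,
                               duration_ms=48)
print(fast_game)
```

With those numbers the display shows frames 3, 7 and 11: the renderer never stalls, the intermediate frames are simply discarded, and because the back buffer only ever trades places with the LRB, nothing is written into the buffer being scanned out.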

Flip Logic in the Pascal architecture controls the entire process.

High Dynamic Range

The new GTX 1080 supports all of the HDR display capabilities of the Maxwell range of hardware with the display controller capable of 12 bit colour, BT.2020 wide colour gamut, SMPTE 2084 (Perceptual Quantization) and HDMI 2.0b 10/12 bit for 4K HDR.

Pascal adds the following features:

- 4k@60hz 10/12b HEVC Decode (for HDR Video)

- 4k@60hz 10b HEVC Encode (for HDR recording or streaming).

- DP 1.4 ready HDR Metadata Transport (to connect to HDR displays using DisplayPort).

Nvidia are working on bringing HDR to games, including Rise Of the Tomb Raider, Paragon, The Talos Principle, Shadow Warrior 2, Obduction, The Witness and Lawbreakers.

Pascal introduces full PlayReady 3.0 (SL3000) support and HEVC decode in hardware, bringing the capability to watch 4K Premium video on the PC for the first time. Soon, enthusiast users will be able to stream 4K Netflix content and 4k content from other content providers on Pascal GPUs.

| | GeForce GTX 980 (Maxwell) | GeForce GTX 1080 (Pascal) |
| --- | --- | --- |
| Number of Active Heads | 4 | 4 |
| Number of Connectors | 6 | 6 |
| Max Resolution | 5120 x 3200 @ 60Hz (requires 2 DP 1.2 connectors) | 7680 x 4320 @ 60Hz (requires 2 DP 1.3 connectors) |
| Digital Protocols | LVDS, TMDS/HDMI 2.0, DP 1.2 | HDMI 2.0b with HDCP 2.2, DP (DP 1.2 certified, DP 1.3 ready, DP 1.4 ready) |

The GTX 1080 is DisplayPort 1.2 certified, DisplayPort 1.3 and 1.4 ready – enabling support for 4k displays at 120hz, 5k displays at 60hz and 8k displays at 60hz (using two cables).
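A back-of-the-envelope check shows why 8K at 60Hz needs two cables. This sketch assumes uncompressed 24 bit colour, ignores blanking intervals, and uses the four lane link rates from the DisplayPort standards (5.4 Gbps per lane for HBR2 in DP 1.2, 8.1 Gbps per lane for HBR3 in DP 1.3/1.4) with 8b/10b encoding overhead removed. Real display timings need a little more than the raw pixel rate, so treat the results as a lower bound.

```python
import math

# Usable payload of a four lane link after 8b/10b encoding (80% efficiency).
DP_1_2_HBR2_GBPS = 4 * 5.4 * 0.8
DP_1_3_HBR3_GBPS = 4 * 8.1 * 0.8


def pixel_rate_gbps(width: int, height: int, refresh_hz: int,
                    bits_per_pixel: int = 24) -> float:
    """Raw pixel data rate in Gbps, ignoring blanking intervals."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


def cables_needed(mode_gbps: float, link_gbps: float) -> int:
    """Minimum number of links required to carry the mode."""
    return math.ceil(mode_gbps / link_gbps)


modes = {
    "4K @ 120Hz": pixel_rate_gbps(3840, 2160, 120),
    "5K @ 60Hz": pixel_rate_gbps(5120, 2880, 60),
    "8K @ 60Hz": pixel_rate_gbps(7680, 4320, 60),
}

for name, rate in modes.items():
    cables = cables_needed(rate, DP_1_3_HBR3_GBPS)
    print(f"{name}: {rate:.1f} Gbps -> {cables} cable(s) at DP 1.3/1.4 rates")
```

4K at 120Hz and 5K at 60Hz both squeeze into a single DP 1.3/1.4 link, while 8K at 60Hz comes in at close to 48 Gbps and has to be split across two. It also shows that 4K at 120Hz is beyond a single DP 1.2 link.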

| | GeForce GTX 980 (Maxwell) | GeForce GTX 1080 (Pascal) |
| --- | --- | --- |
| H.264 Encode | Yes | Yes (2x 4K @ 60Hz) |
| HEVC Encode | Yes | Yes (2x 4K @ 60Hz) |
| 10-bit HEVC Encode | No | Yes |
| H.264 Decode | Yes | Yes (4K @ 120Hz up to 240 Mbps) |
| HEVC Decode | No | Yes (4K @ 120Hz / 8K @ 30Hz up to 320 Mbps) |
| VP9 Decode | No | Yes (4K @ 120Hz up to 320 Mbps) |
| MPEG2 Decode | Yes | Yes |
| 10-bit HEVC Decode | No | Yes |
| 12-bit HEVC Decode | No | Yes |

Our GTX 1080 ‘Founders Edition’ review sample was shipped directly from Nvidia. The box artwork is very cool indeed.

I removed the anti static wrap for the picture above. More manufacturers should actually ship their cards like this – it's very space saving. This is not a retail sample so all we get is the card itself.

The new angular shape of the cooler has split opinion with our readers on Facebook. I quite like the appearance and the Nvidia reference designs in recent years have been top notch. The GTX 1080 is built on the new 16nm FinFET manufacturing process and contains 7.2 billion transistors.

The GTX 1080 uses a vapour chamber cooling system, which is a first for a <250W Nvidia designed graphics card.

The new design is classed as ‘industrial', with a faceted body and a new low profile backplate. The backplate features a removable section to allow better airflow between multiple graphics cards in adjacent SLI configurations.

In hand the graphics card feels substantial. This ‘Founders Edition’ was ‘crafted by Nvidia engineers with premium materials and components, including a die cast aluminum body and low profile backplate, all machine finished and heat treated for strength and rigidity’. I am glad Nvidia didn't remove the glowing green ‘GeForce GTX’ branding along the edge of the card.

The thermal solution has been created to deliver consistent performance, and we know the reference design scores highly in multi GPU environments as it exhausts most of the heat out the rear of the case. The card ships with a single fan and an advanced vapour chamber cooler. It ships with a metal backplate on top of low profile components to help improve heat removal from the PCB.

The SLI connectors are shown in the image above. If you haven't already, then I recommend you head back to the third page of this review to get detailed information on the new SLI configuration introduced today with Pascal hardware.

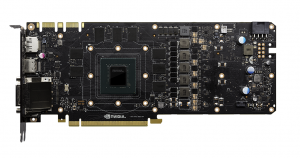

The GTX 1080 gets all the power it needs from the slot and a single 8 Pin PCIe connector. Yes, the GTX 1080 only requires one connector.

The GeForce GTX 1080 is DisplayPort 1.2 certified and DisplayPort 1.3 and 1.4 ready, enabling support for 4K displays at 120Hz, 5K displays at 60Hz and 8K displays at 60Hz (with two cables). The GTX 1080 Founders Edition includes three DisplayPort connectors, one HDMI 2.0b connector and one dual link DVI connector. Up to four display heads can be driven simultaneously from a single card.

As we mentioned on the previous pages, the GTX 1080 also supports HDR gaming, as well as video encoding and decoding. New to Pascal is HDR Video (4k@60 10/12b HEVC Decode), HDR Record/Stream (4k@60hz 10b HEVC Encode) and HDR Interface Support (DP 1.4).

The GTX 1080 has been designed with a low impedance power delivery network, custom voltage regulators and a 5 phase dual-FET power supply which is optimised for clean power delivery. Nvidia claim the GTX 1080 Founders Edition delivers increased power efficiency, reliability and exceptional overclocking capabilities.

If you have been paying attention you will know that Nvidia have upgraded their power delivery from a 4 phase to a 5 phase dual-FET design, tuned for bandwidth, phase balancing and acoustics. Nvidia added extra capacitance to their filtering network and optimised the power delivery network on the PCB for low impedance. They claim power efficiency has increased by around 6% compared with the GTX 980. Peak to peak voltage noise has been reduced from 209mV to 120mV for improved overclocking.

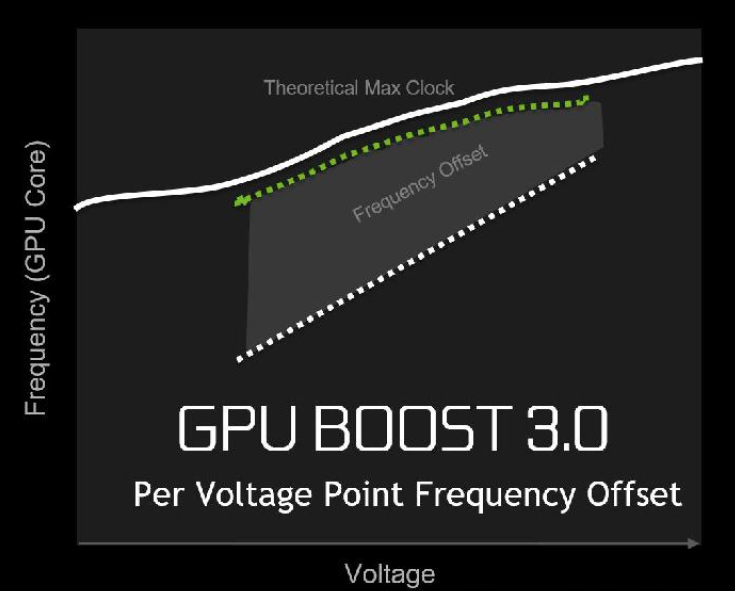

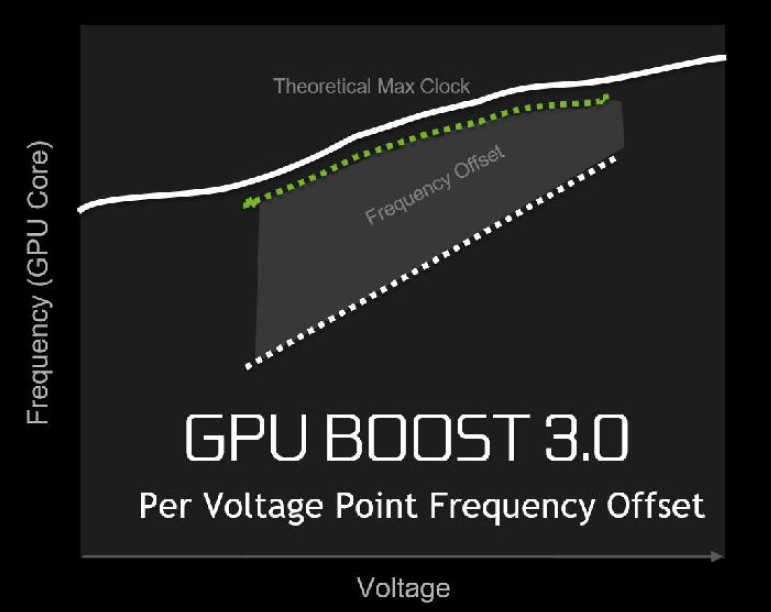

A new feature in GPU Boost 3.0 is the ability to set frequency offsets for individual voltage points. The previous version of GPU Boost 2.0 could only apply a fixed frequency offset, shifting the existing V/F curve upward by the defined offset amount. More on this later.
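The difference between the two approaches can be sketched with a toy voltage/frequency curve. Every number below is made up for illustration and none of it comes from Nvidia or from any overclocking tool; I am also assuming that a single fixed offset has to be small enough for the least tolerant voltage point to remain stable.

```python
def apply_fixed_offset(curve: dict, offset_mhz: int) -> dict:
    """GPU Boost 2.0 style: shift every point on the V/F curve by the same amount."""
    return {millivolts: mhz + offset_mhz for millivolts, mhz in curve.items()}


def apply_per_point_offsets(curve: dict, offsets: dict) -> dict:
    """GPU Boost 3.0 style: each voltage point gets its own frequency offset."""
    return {millivolts: mhz + offsets.get(millivolts, 0)
            for millivolts, mhz in curve.items()}


# Hypothetical stock curve: voltage (mV) -> frequency (MHz).
stock_curve = {800: 1500, 900: 1650, 1000: 1750, 1050: 1800}
# Hypothetical stable headroom found at each voltage point (MHz).
stable_headroom = {800: 150, 900: 120, 1000: 80, 1050: 50}

# Assumption: the fixed offset is capped by the weakest point on the curve.
fixed = apply_fixed_offset(stock_curve, min(stable_headroom.values()))
per_point = apply_per_point_offsets(stock_curve, stable_headroom)

for millivolts in stock_curve:
    print(f"{millivolts} mV: stock {stock_curve[millivolts]} MHz, "
          f"fixed {fixed[millivolts]} MHz, per point {per_point[millivolts]} MHz")
```

In this made-up example the per-point curve picks up extra frequency at the lower voltage points that a single offset has to leave on the table.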

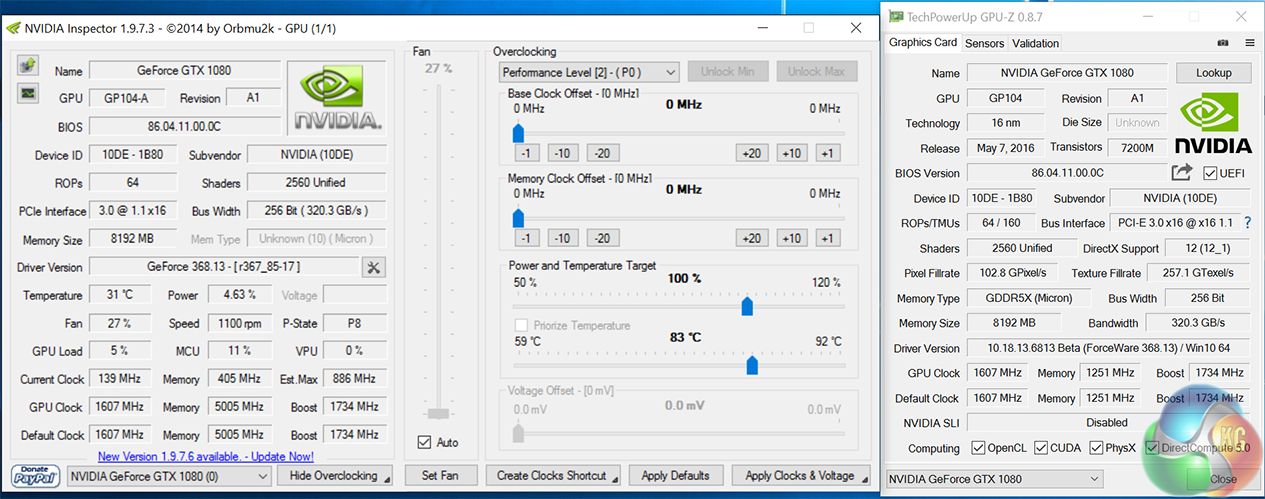

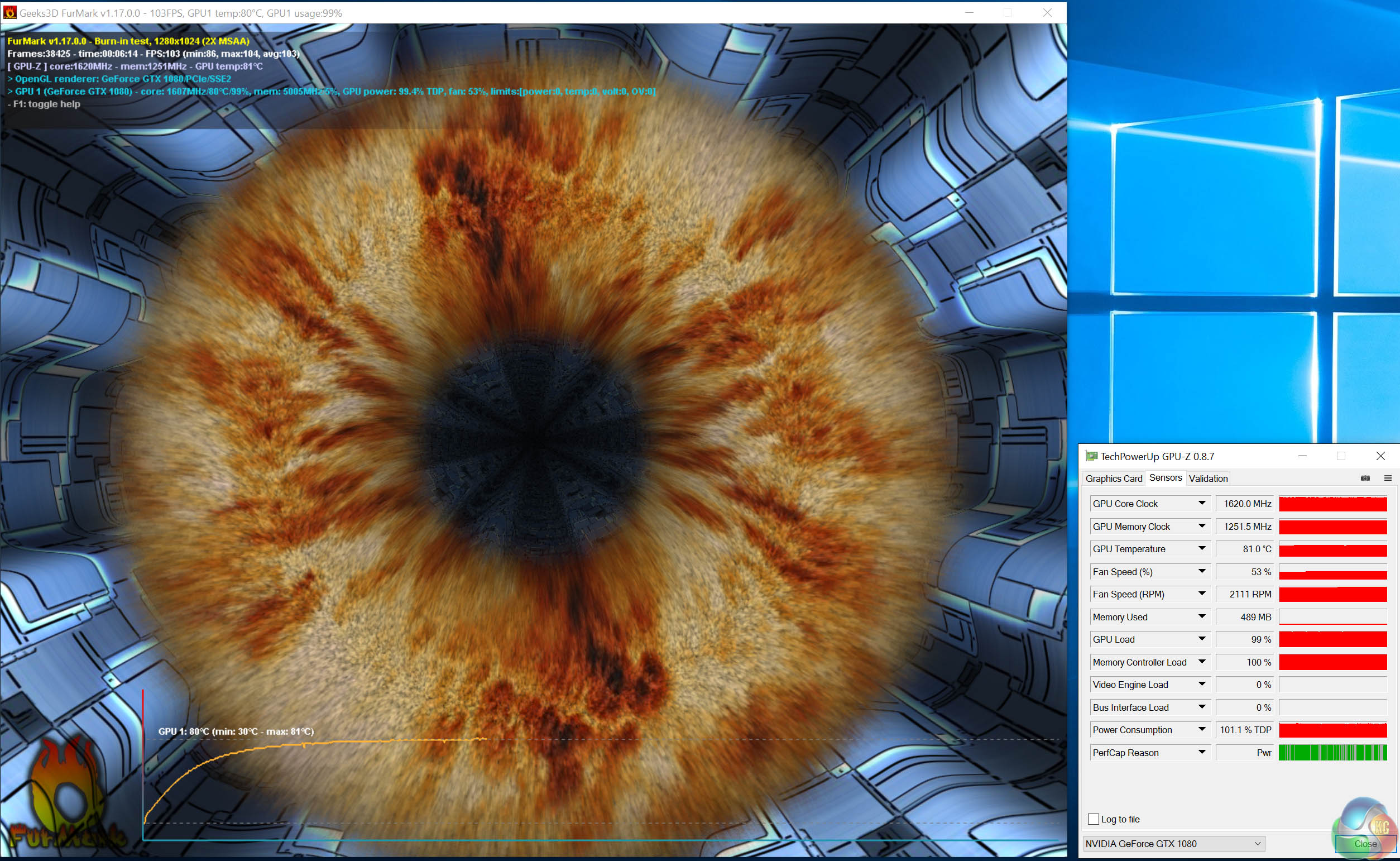

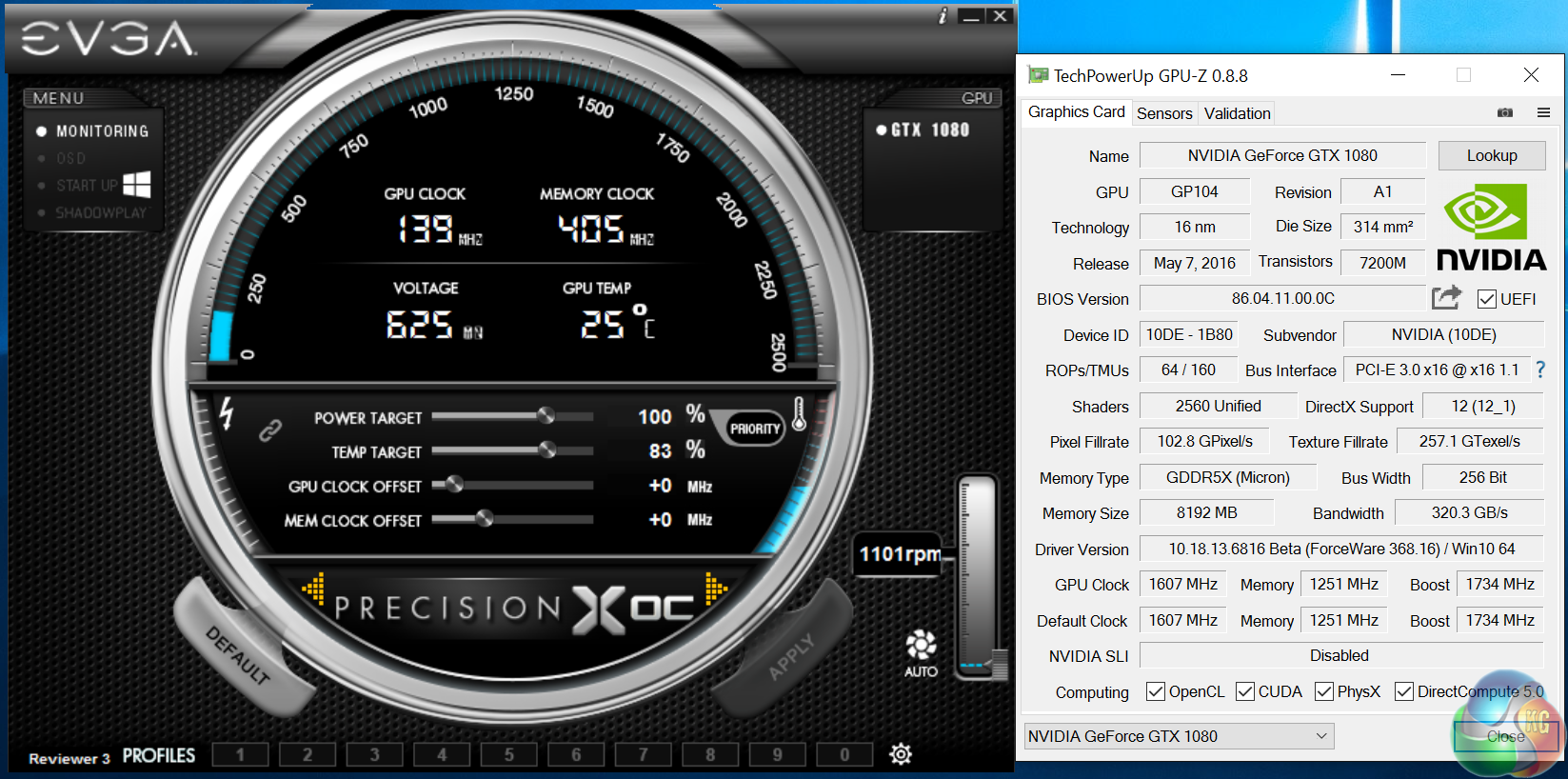

An overview of the hardware, as shown in GPU-Z. Special thanks to Mike over at TechPowerUp for sending his latest beta version. The GP104 core is built on the 16nm manufacturing process. It has 64 ROPs, 160 texture units and 2560 CUDA cores. The 8GB of GDDR5X memory is connected via a 256 bit memory interface.

The core clock is set at 1,607MHz with a boost frequency set at 1,734MHz – a massive increase over previous generation Maxwell hardware. We do recommend you take a look earlier in the review at the Tech overview page to get to grips with some of the new changes and enhancements in the Pascal architecture.

I have spent the last week benchmarking a selection of AMD and NVIDIA cards with the latest drivers on one of our new 6700k test beds. We are using the AMD Crimson Edition Display Driver, Version 16.15.2211 and Nvidia ForceWare 368.13 driver. Due to public demand we also add in a range of tests at 1080p to supplement the results at 1440p and Ultra HD 4K resolutions.

We list each resolution test for every game on its own page – meaning if you are just interested in 4K resolutions for instance, you can skip the other resolutions without effort. If you want to read the whole review and find all the page changes annoying – click on our menu system top right of these pages, and head to '34. view all pages'.

We are using a custom Titan Bayonet system supplied by Overclockers UK as the basis of our test system today. Read more on this system over HERE.

Case: Phanteks Enthoo Evolv ATX Mid Tower

Processor: Intel 6700K @ 4.4GHz

Memory: Corsair Vengeance LPX 16GB (2x8GB) @ 3000MHz

Motherboard: ASUS Z170-E DDR4 ATX Motherboard

Power Supply: Super Flower Leadex 850W Gold Certified

Software: Microsoft Windows 10 64 Bit

SSD: Samsung 250GB 850 EVO

HDD: Seagate 1TB 7,200 rpm 64MB Cache.

If you want to purchase this system yourself head to THIS page on OCUK.

Graphics cards:

Nvidia GTX 1080 (1,607 MHz core / 1,733 MHz boost / 5,005 MHz memory)

Comparison Cards on test:

Sapphire R9 390 Nitro 8GB (Rev 2 w/ backplate) (1,040 MHz core / 1,500 MHz memory)

Sapphire R9 295X2 (1,018 MHz core / 1,250 MHz memory)

AMD R9 Fury X (1,050 MHz core / 500 MHz memory)

AMD R9 Nano (1,000 MHz core / 500 MHz memory)

Gigabyte GTX 980 Ti XTREME Gaming (1,216 MHz core / 1,800 MHz memory)

Nvidia GTX Titan Z (706 MHz core / 1,753 MHz memory)

Nvidia GTX Titan X (1,000 MHz core / 1,753 MHz memory)

Asus GTX 980 Strix (1,178 MHz core / 1,753 MHz memory)

Nvidia GTX 980 Ti (1,000 MHz core / 1,753 MHz memory)

Sapphire R9 390X Tri-X 8GB (1,055 MHz core / 1,500 MHz memory)

Sapphire R9 390 Nitro 8GB (1,010 MHz core / 1,500 MHz memory)

Software:

Windows 10 64 bit

Unigine Heaven Benchmark

3DMark 11

3DMark

Fraps Professional

Steam Client

FurMark

Games:

Ashes Of the Singularity

Dirt Rally

Hitman 2016

Middle Earth: Shadow Of Mordor

Rise Of the Tomb Raider

Grand Theft Auto 5

Metro Last Light Redux

We test under real world conditions, meaning KitGuru runs each game across five closely matched runs and then averages the results to get an accurate figure. If we use scripted benchmarks, they are mentioned on the relevant page.
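As a quick illustration of how five closely matched runs collapse into a single figure, here is a minimal sketch using Python's standard `statistics` module. The frame rates are made up and are not results from this review.

```python
from statistics import mean, median

# Hypothetical average frame rates from five runs of the same test.
runs_fps = [61.8, 62.4, 62.1, 61.9, 62.3]

average_fps = mean(runs_fps)
median_fps = median(runs_fps)
spread_fps = max(runs_fps) - min(runs_fps)

print(f"Mean:   {average_fps:.1f} fps")
print(f"Median: {median_fps:.1f} fps")
print(f"Spread: {spread_fps:.1f} fps")
```

When the runs are this tightly grouped the mean and the median land on practically the same number. A large spread would be the cue to throw the runs away and test again.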

Game descriptions edited with courtesy from Wikipedia.

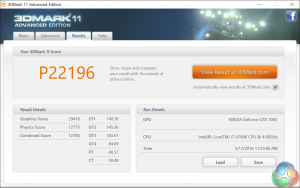

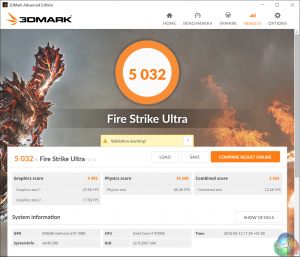

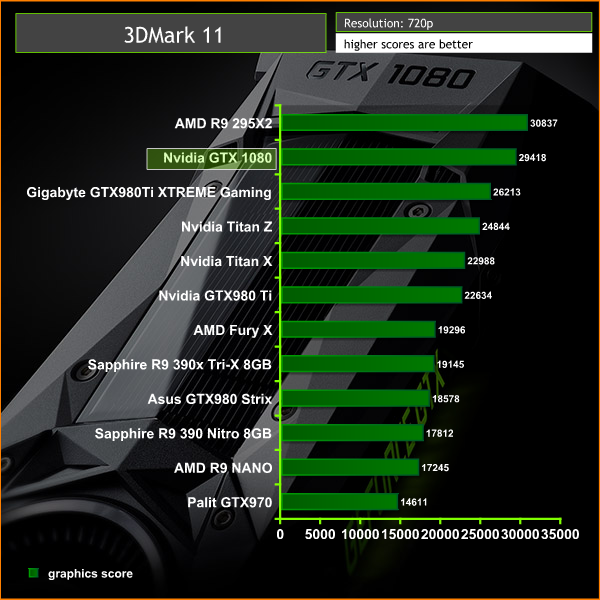

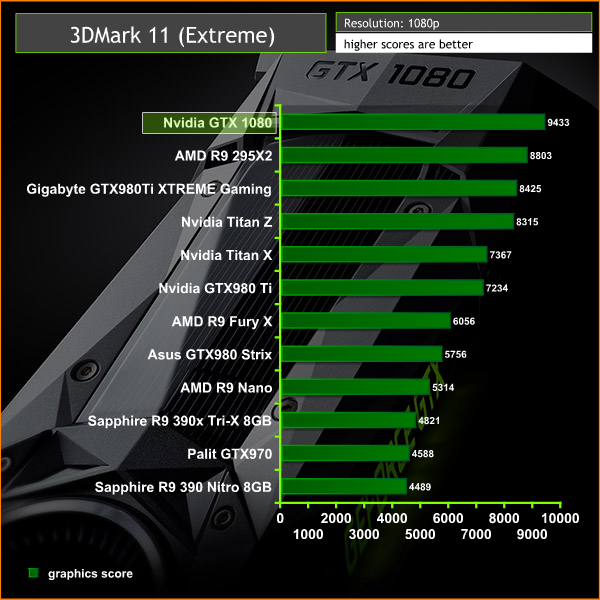

3DMark 11 is designed for testing DirectX 11 hardware running on Windows 7 and Windows Vista. The benchmark includes six all new benchmark tests that make extensive use of all the new features in DirectX 11 including tessellation, compute shaders and multi-threading. After running the tests 3DMark gives your system a score with larger numbers indicating better performance. Trusted by gamers worldwide to give accurate and unbiased results, 3DMark 11 is the best way to test DirectX 11 under game-like loads.

The Nvidia GTX 1080 is the fastest single GPU on test today with this last generation Futuremark benchmark. I was shocked to see the GTX 1080 outscoring the AMD R9 295X2 at Ultra HD 4k resolutions.

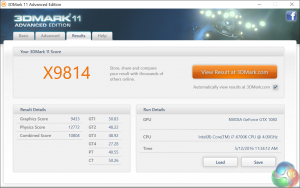

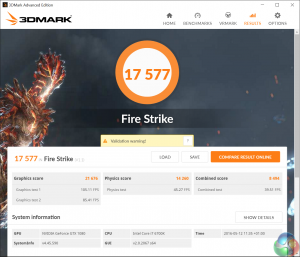

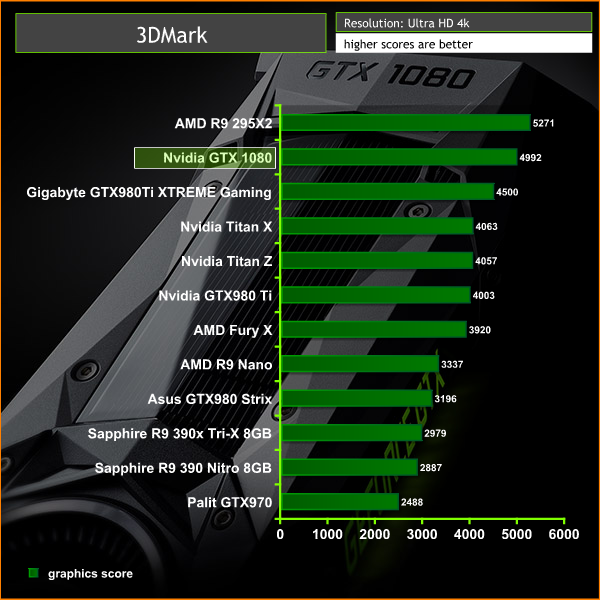

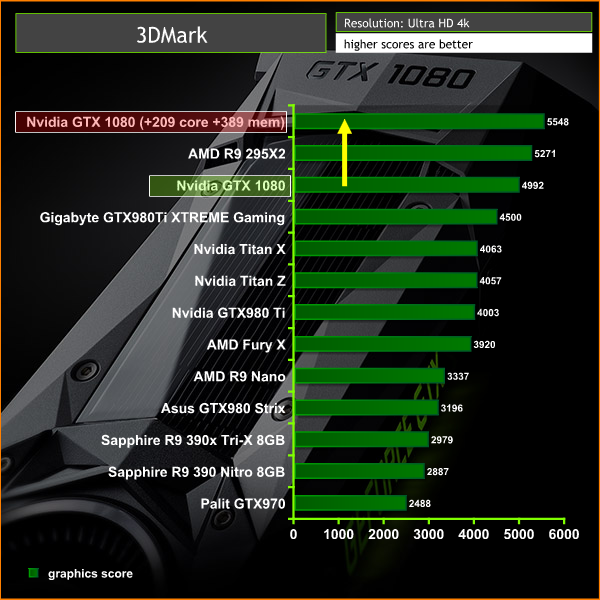

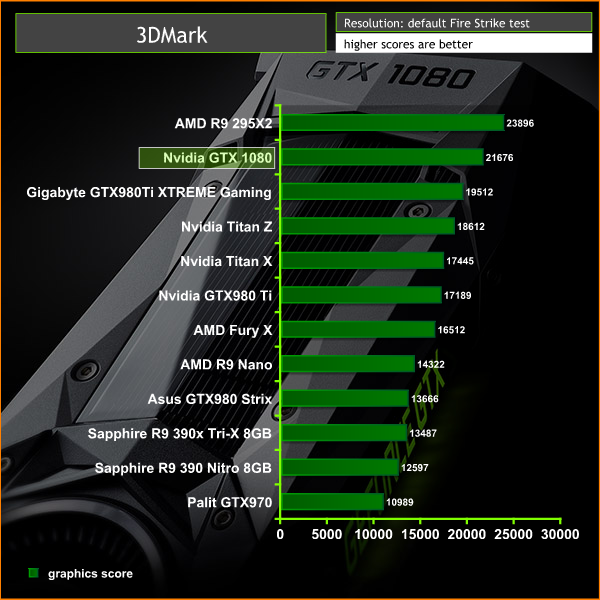

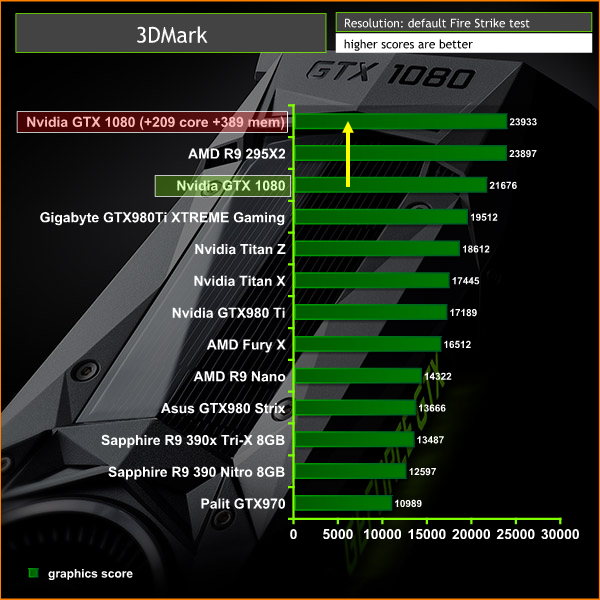

3DMark is an essential tool used by millions of gamers, hundreds of hardware review sites and many of the world’s leading manufacturers to measure PC gaming performance.

Futuremark say “Use it to test your PC’s limits and measure the impact of overclocking and tweaking your system. Search our massive results database and see how your PC compares or just admire the graphics and wonder why all PC games don’t look this good.

To get more out of your PC, put 3DMark in your PC.”

Nvidia's GTX 1080 takes a position near the very top of the graphs, just behind the dual GPU R9 295X2. At Ultra HD 4K resolutions, the GTX 1080 scores close to 500 points more than the massively overclocked Gigabyte GTX980 Ti XTREME Gaming.

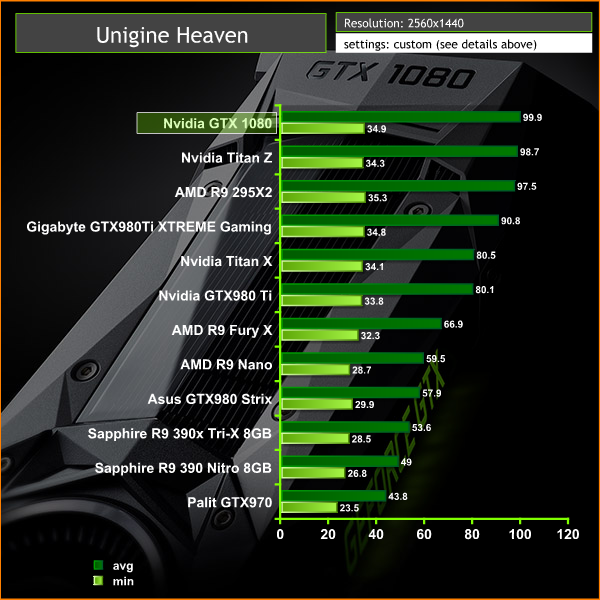

Unigine provides an interesting way to test hardware. It can be easily adapted to various projects due to its elaborated software design and flexible toolset. A lot of their customers claim that they have never seen such extremely-effective code, which is so easy to understand.

Heaven Benchmark is a DirectX 11 GPU benchmark based on advanced Unigine engine from Unigine Corp. It reveals the enchanting magic of floating islands with a tiny village hidden in the cloudy skies. Interactive mode provides emerging experience of exploring the intricate world of steampunk. Efficient and well-architected framework makes Unigine highly scalable:

- Multiple API (DirectX 9 / DirectX 10 / DirectX 11 / OpenGL) render

- Cross-platform: MS Windows (XP, Vista, Windows 7) / Linux

- Full support of 32bit and 64bit systems

- Multicore CPU support

- Little / big endian support (ready for game consoles)

- Powerful C++ API

- Comprehensive performance profiling system

- Flexible XML-based data structures

We test at 2560×1440 with quality setting at ULTRA, Tessellation at NORMAL, and Anti-Aliasing at x2.

Nvidia have always had strong performance with Tessellation and their optimisations for Unigine Heaven ensure the GTX 1080 claims top spot – even above the dual GPU Titan Z and R9 295X2.

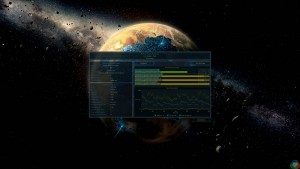

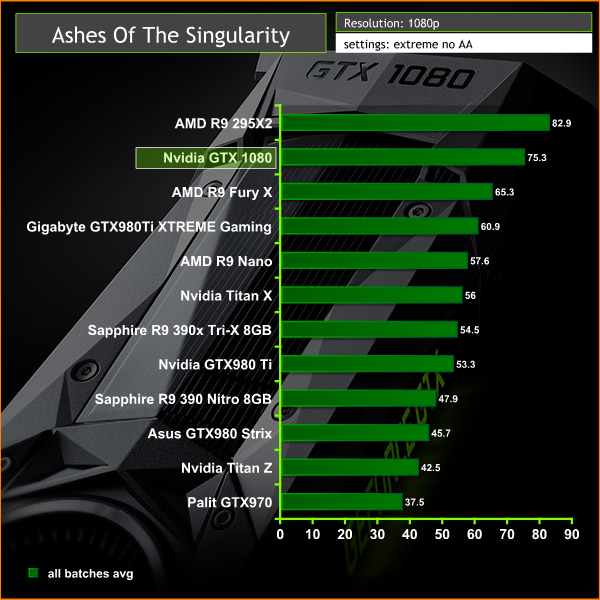

Ashes of the Singularity is a real-time strategy game set in the future where descendants of humans (called Post-Humans) and a powerful artificial intelligence (called the Substrate) fight a war for control of a resource known as Turinium.

Players will engage in massive-scale land/air battles by commanding entire armies of their own design. Each game takes place on one area of a planet, with each player starting with a home base (known as a Nexus) and a single construction unit.

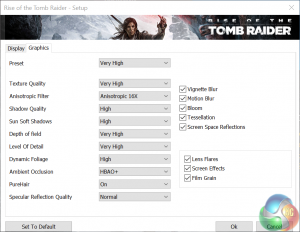

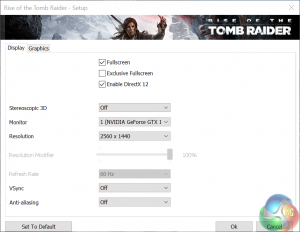

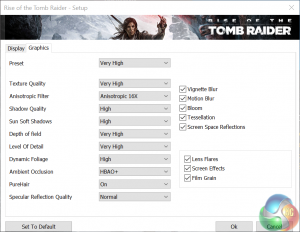

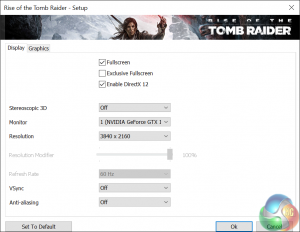

We test the final retail game at 1080p resolution and with EXTREME image quality settings, shown above.

When we tested this game in Alpha state, the performance was constantly changing between builds. As the title has gone to retail we have noticed that general AMD performance has increased over our earlier findings.

The AMD Fury X scores extremely well in this test – with the average frame rate around 4fps ahead of the Gigabyte GTX980 Ti XTREME Gaming. The new GTX 1080 outperforms the R9 Fury X by around 10 frames per second, falling into second place behind the dual GPU R9 295X2.

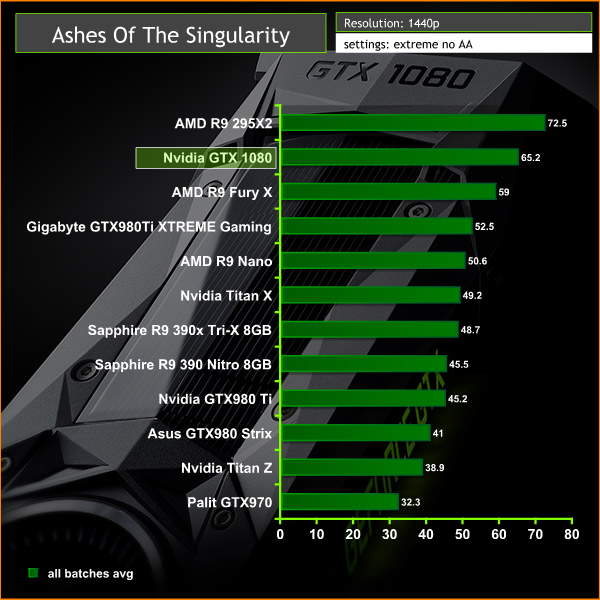

We test the final retail game at 1440p resolution and with EXTREME image quality settings, shown above.

At 1440p the GTX 1080's lead over the R9 Fury X drops to around 6 fps – from a 10 fps lead at 1080p. AMD's R9 295X2 still holds the top performance spot.

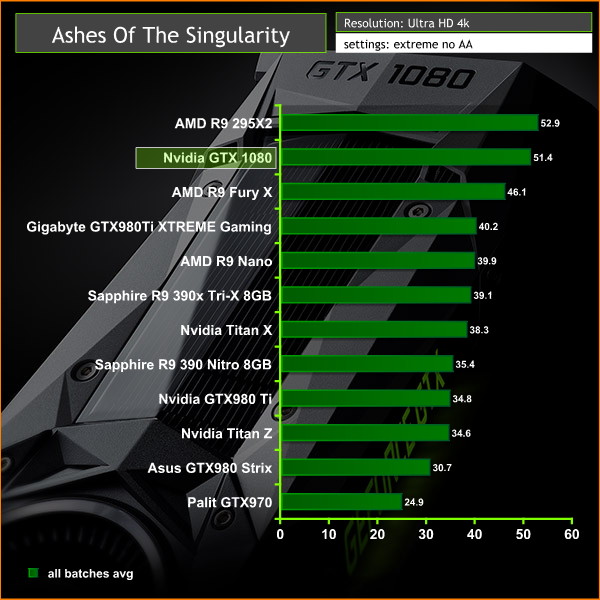

We test the final retail game at 4k resolution and with EXTREME image quality settings, shown above.

AMD's Fury X scores particularly well at Ultra HD 4k, holding a lead of 6 frames per second over the Gigabyte GTX980 Ti XTREME Gaming. The Nvidia GTX 1080 is the star of the show however, as it closes the gap to the R9 295X2 to only 1.5 frames per second.

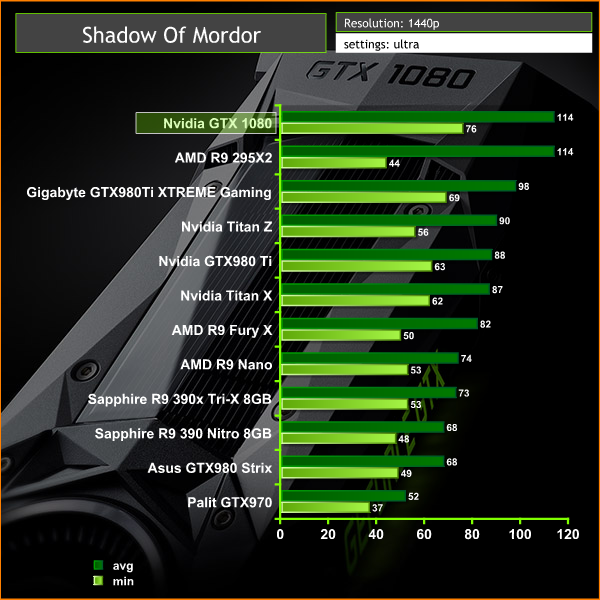

Middle-earth: Shadow of Mordor is a third-person open world video game, where the player controls a ranger by the name of Talion who seeks revenge on the forces of Sauron after his family, including his wife, are killed. Players can travel across locations in the game through parkour, riding monsters, or accessing Forge Towers, which serve as fast travel points.

We test at 1440p with the image quality settings at Ultra.

The Nvidia GTX 1080 takes top position in our performance graph, averaging 114 frames per second. While the R9 295X2 posts the same average, the AMD hardware suffers from lower minimum frame rates throughout the test.

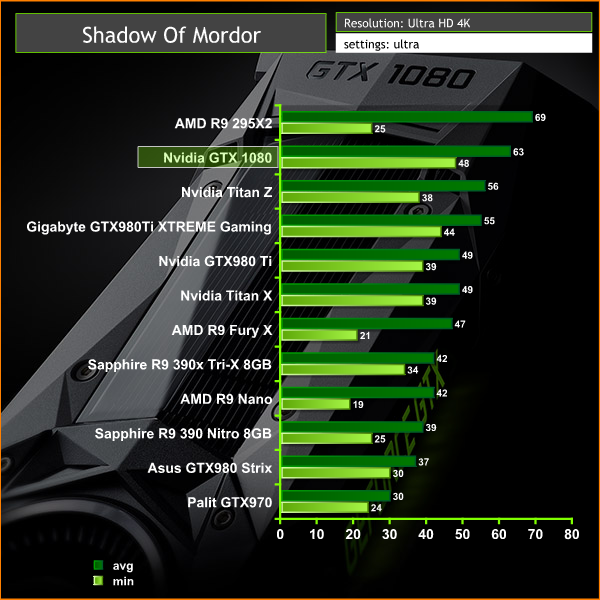

We test at Ultra HD 4k resolution with Ultra image settings enabled.

At Ultra HD 4k resolution, the AMD R9 295X2 claims top position over the GTX 1080, although as before, the minimum frame rate is noticeably worse.

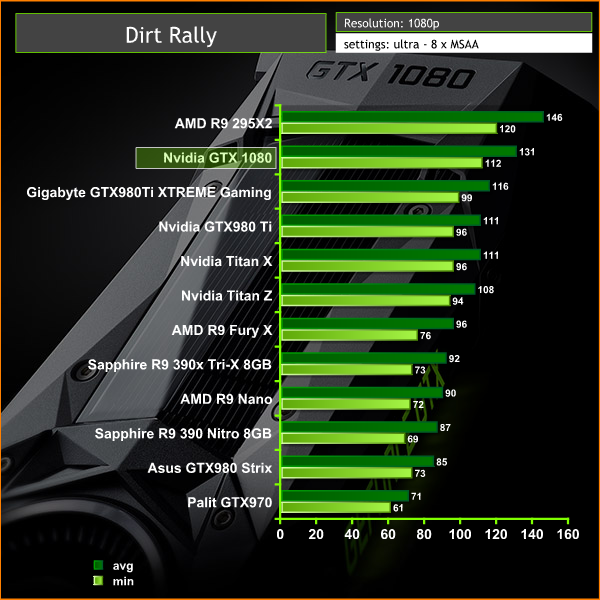

Dirt Rally is developed by British video game developer Codemasters using the in house Ego engine. Development began with a small team of individuals following the release of their 2012 video game Dirt: Showdown. Codemasters have emphasised a desire to create a simulation with Dirt Rally. They started by prototyping a handling model and creating tracks based on map data. The game employs a different physics model from previous titles, rebuilt from the ground up.

We test at 1080p with 8x MSAA and the ultra image quality setting enabled.

No problems powering this game at 1080p. The Nvidia GTX 1080 takes second position behind the R9 295X2 which holds both higher average and minimum frame rates. We recorded a 15 frame per second advantage for the Nvidia GTX 1080 against the overclocked Gigabyte GTX980 Ti XTREME Gaming solution.

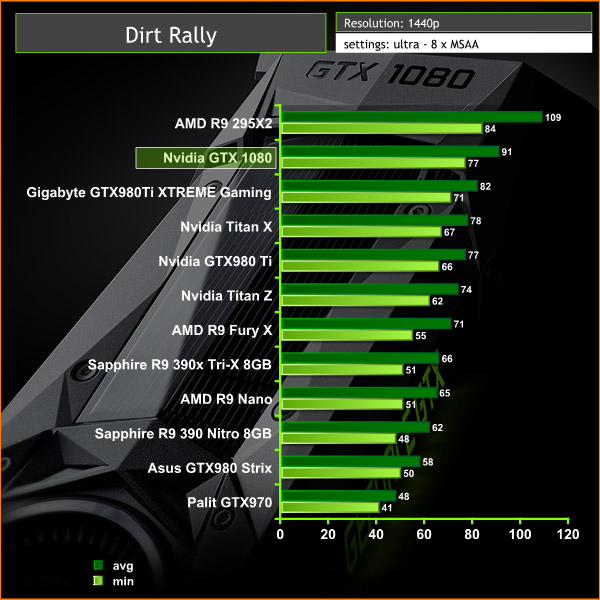

We test at 1440p with 8x MSAA and the ultra image quality setting enabled.

At 1440p the Nvidia GTX 1080 is the fastest single GPU card on test, holding a 9fps lead over the Gigabyte GTX980 Ti XTREME Gaming card.

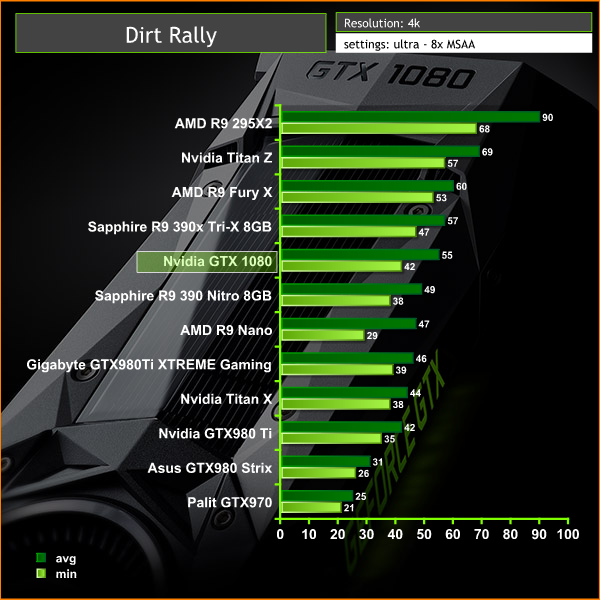

We test at Ultra HD 4k with 8x MSAA and the ultra image quality setting enabled.

At Ultra HD 4k, the AMD R9 295X2 is clearly the performance leader, averaging 90 frames per second. It is the only card on test that can hold a constant 60 frames per second at these settings at 4k. For some reason the GTX 1080 dropped down the list a little at Ultra HD 4k with 8x MSAA enabled, which we can only assume is due to poor optimisation within the driver or game. We tested this with both the 368.13 and 368.16 drivers.

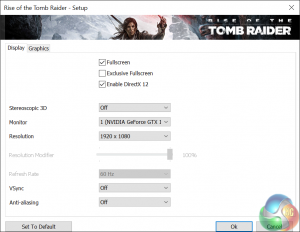

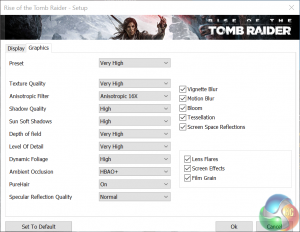

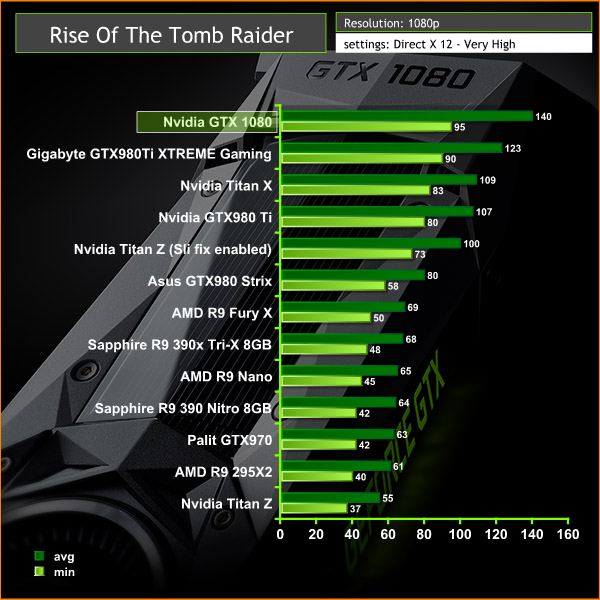

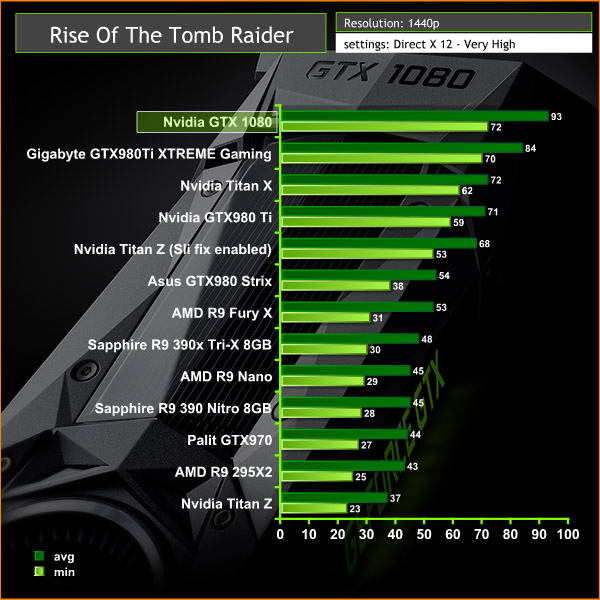

Rise of the Tomb Raider is a third-person action-adventure game that features similar gameplay found in 2013's Tomb Raider. Players control Lara Croft through various environments, battling enemies, and completing puzzle platforming sections, while using improvised weapons and gadgets in order to progress through the story. It uses a Direct X 12 capable engine.

We enable Direct X 12, Vsync is off and graphics are set to the ‘very high' profile, shown above. We test the Nvidia Titan Z with the SLi optimisation both disabled, then enabled. This is clearly marked in the graph. See more HERE.

Nvidia hardware dominates in this game, and the GTX 1080 takes top spot in the performance chart at 1080p, averaging 140 frames per second. AMD hardware does not fare so well with this game engine.

At 1440p, the Nvidia GTX 1080 dominates with this engine, averaging 93 frames per second, against 84 from the Gigabyte GTX 980 Ti XTREME Gaming solution. AMD hardware does not fare so well with this game engine.

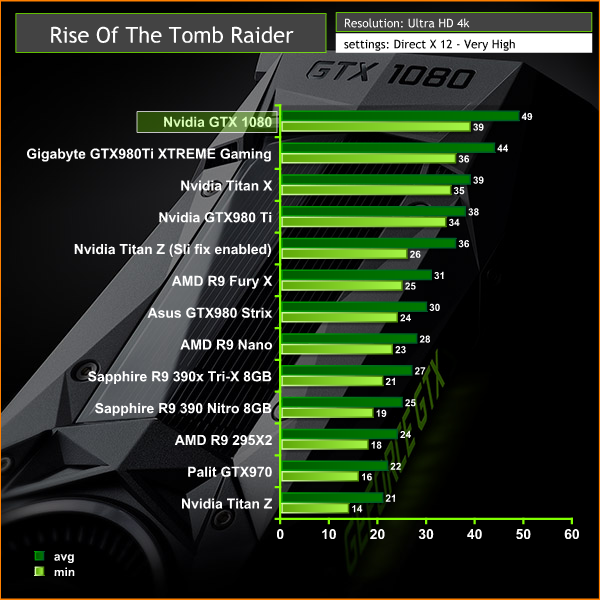

The Nvidia GTX 1080 manages to maintain the top performance position at Ultra HD 4k, averaging almost 50 frames per second, and holding a constant frame rate close to 40 fps at all times. AMD hardware does not fare so well with this game engine.

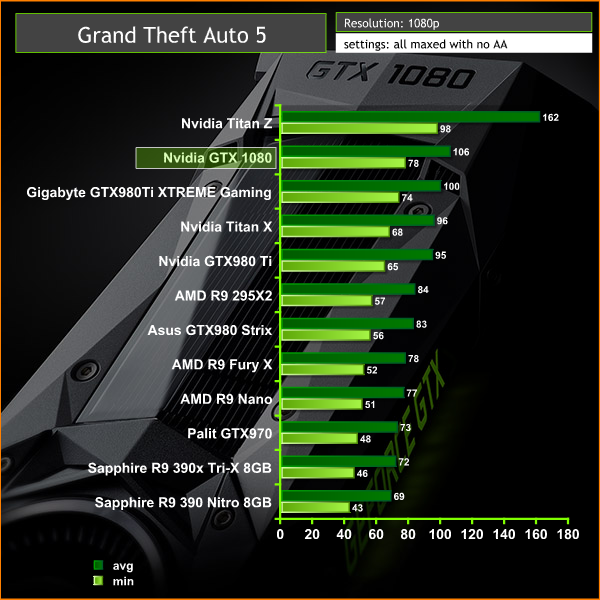

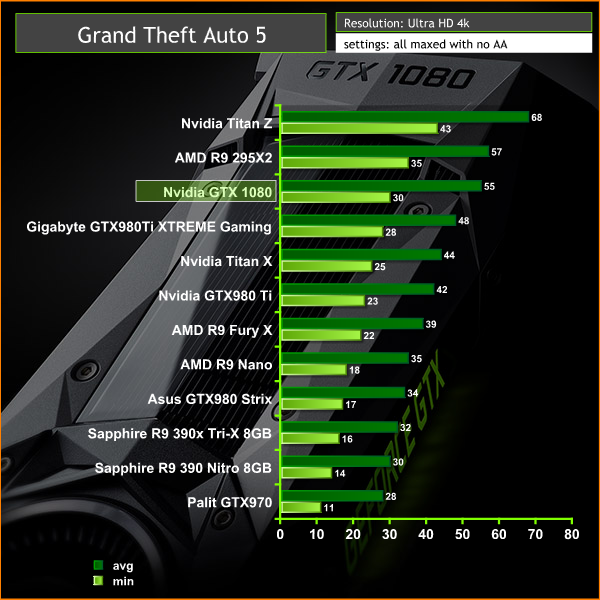

Grand Theft Auto V is an action-adventure game played from either a first-person or third-person view. Players complete missions—linear scenarios with set objectives—to progress through the story. Outside of missions, players may freely roam the open world. Composed of the San Andreas open countryside area and the fictional city of Los Santos, the world is much larger in area than earlier entries in the series. It may be fully explored after the game's beginning without restriction, although story progress unlocks more gameplay content.

We maximise all the image quality settings, but leave anti aliasing turned off, as it dramatically impacts performance.

The Grand Theft Auto 5 engine is very advanced and we can see the Nvidia hardware claiming all of the top performance spots at 1080p. The Titan Z is the clear performance leader in this engine, averaging 162 frames per second. The Nvidia GTX 1080 claims the top position for single GPU graphics cards.

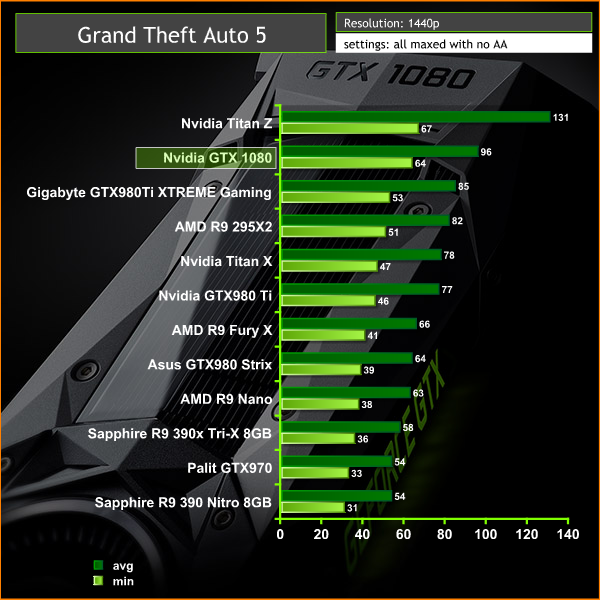

Scaling from 1080p shows the same performance positions at the top of the graph, with the Nvidia Titan Z holding a clear lead at the top of the chart.

At Ultra HD 4k, the AMD R9 295X2 comes screaming from below to claim second performance position, behind Nvidia's GTX Titan Z. The Nvidia GTX 1080 holds the highest performance slot for single GPU cards.

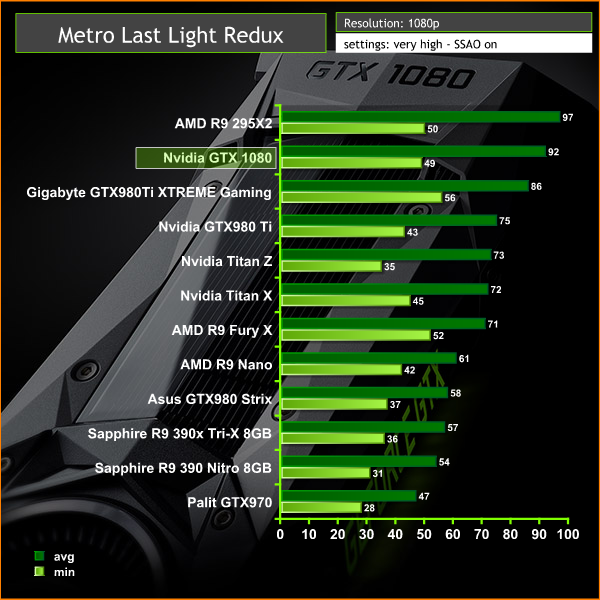

Just like the original game Metro 2033, Metro: Last Light is played from the perspective of Artyom, the player-character. The story takes place in post-apocalyptic Moscow, mostly inside the metro system, but occasionally missions bring the player above ground. Metro: Last Light takes place one year after the events of Metro 2033, following the canonical ending in which Artyom chose to proceed with the missile strike against the Dark Ones (this happens regardless of your actions in the first game). Redux adds all the DLC and graphical improvements.

Quality-Very High, SSAA-on, Texture Filtering-16x, Motion Blur-Normal, Tessellation-Normal, Advanced Physx-off.

Nvidia's GTX 1080 performs exceptionally well at 1080p, holding an average of 92 frames per second. It is around 6 frames per second faster than the Gigabyte GTX980 Ti Xtreme Gaming card.

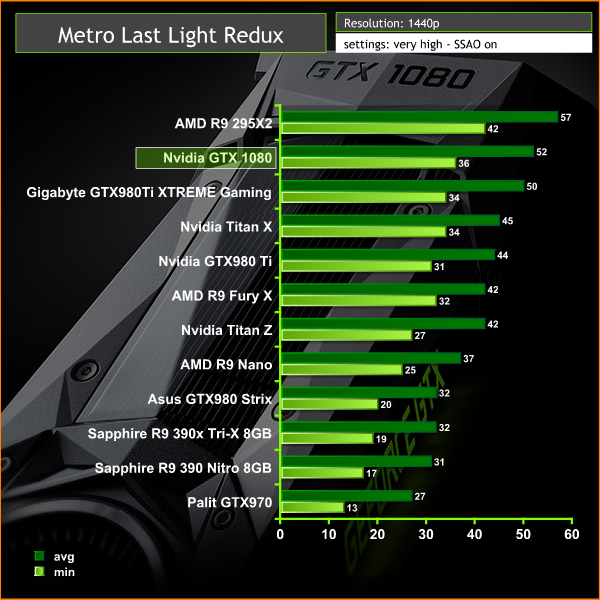

Quality-Very High, SSAA-on, Texture Filtering-16x, Motion Blur-Normal, Tessellation-Normal, Advanced Physx-off.

At 1440p the gap between the overclocked Gigabyte GTX980ti and GTX 1080 is only 2 frames per second. The R9 295X2 claims top spot, averaging 57 frames per second.

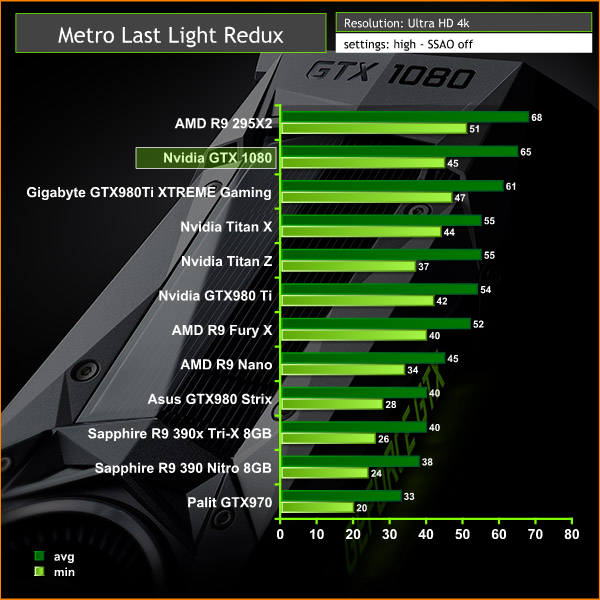

Quality-High, SSAA-off, Texture Filtering-16x, Motion Blur-Normal, Tessellation-Normal, Advanced Physx-off.

At Ultra HD 4k resolution, the R9 295X2 claims top position, although the GTX 1080 pulls back the differential a little, falling only 3 frames per second behind the flagship dual GPU AMD part. The Gigabyte GTX980 Ti Xtreme Gaming puts in a good showing, only 4 frames per second behind the GTX 1080.

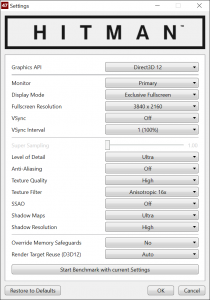

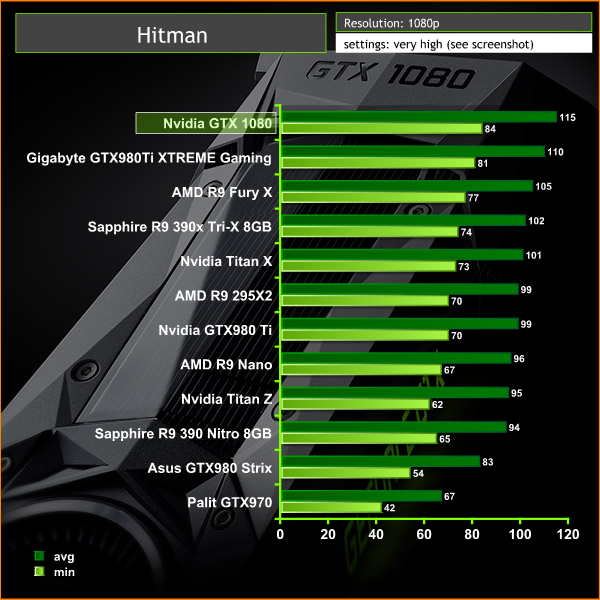

Hitman (2016) is a third-person stealth video game in which players take control of Agent 47, a genetically enhanced, superhuman assassin, travelling to international locations and eliminating contracted targets. As in other games in the Hitman series, players are given a large amount of room for creativity in approaching their assassinations. The game is being released in stages, which hasn't proved too popular with a large audience.

We test with many of the settings maximised. SSAO is disabled, however, as I found it causes some crashing. I have to say that Hitman is a little unstable at times under the best of situations, but we managed to get it running well enough to benchmark. The built in benchmark is very flaky indeed, however, so real world runs had to be used.

This engine proves quite straightforward to power at 1080p, even for an Nvidia GTX970. The GTX 1080 claims top position in the benchmark graph.

At 1440p, the Nvidia GTX 1080 averages 94 frames per second, holding top spot. The AMD R9 295X2 climbs the leaderboard into second place, just ahead of the Gigabyte GTX980 Ti Xtreme Gaming.

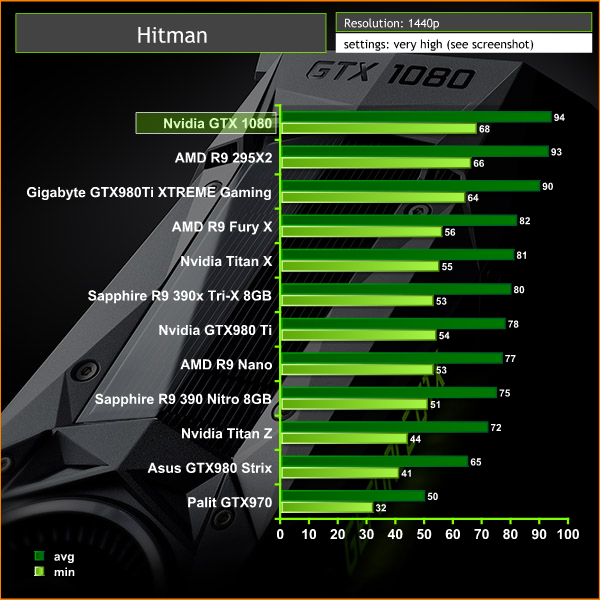

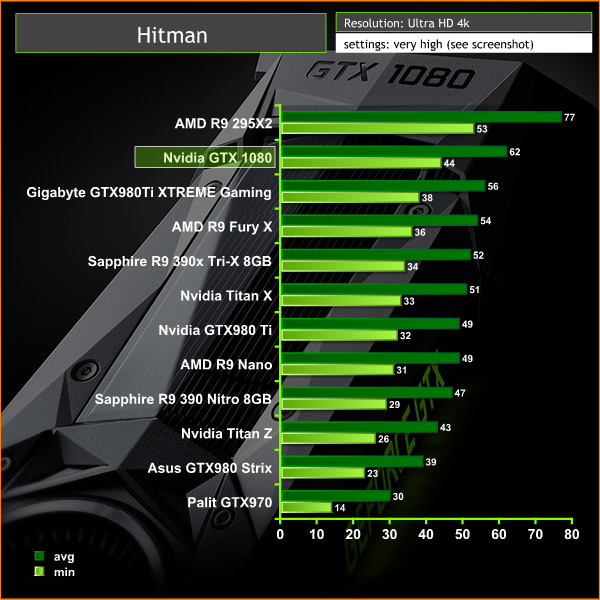

At Ultra HD 4k, the R9 295X2 claims top position, averaging 77 frames per second. The GTX 1080 drops into second place, 6 frames per second ahead of the Gigabyte GTX980 ti Xtreme Gaming.

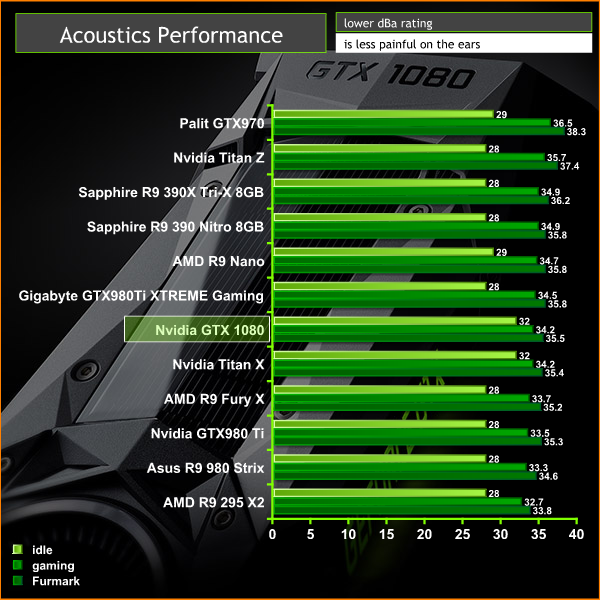

We have built a system inside a Lian Li chassis with no case fans and have used a fanless cooler on our CPU. The motherboard is also passively cooled. This gives us a build with almost completely passive cooling and it means we can measure noise of just the graphics card inside the system when we run looped 3DMark tests.

We measure from a distance of around 1 meter from the closed chassis and 4 feet from the ground to mirror a real world situation. Ambient noise in the room measures close to the limits of our sound meter at 28dBA. Why do this? Well this means we can eliminate secondary noise pollution in the test room and concentrate on only the video card. It also brings us slightly closer to industry standards, such as DIN 45635.

KitGuru noise guide

10dBA – Normal Breathing/Rustling Leaves

20-25dBA – Whisper

30dBA – High Quality Computer fan

40dBA – A Bubbling Brook, or a Refrigerator

50dBA – Normal Conversation

60dBA – Laughter

70dBA – Vacuum Cleaner or Hairdryer

80dBA – City Traffic or a Garbage Disposal

90dBA – Motorcycle or Lawnmower

100dBA – MP3 player at maximum output

110dBA – Orchestra

120dBA – Front row rock concert/Jet Engine

130dBA – Threshold of Pain

140dBA – Military Jet takeoff/Gunshot (close range)

160dBA – Instant Perforation of eardrum

I have always been a fan of the Nvidia reference coolers. They work great for expelling all the hot air outside the case – and unless you are watercooling, for SLi configurations they work better than any of the third party cooling systems from Nvidia partners.

The GTX 1080 when idle is basically inaudible, and under load, it sounds just like the other high end (single GPU) Nvidia reference cards we have tested in recent years. The Nvidia Titan Z card is louder, as it is dealing with two GPUs on the one PCB. It is worth pointing out that the fan is always active on the GTX1080.

The tests were performed in a controlled air conditioned room with temperatures maintained at a constant 21c – a comfortable environment for the majority of people reading this. Idle temperatures were measured after sitting at the desktop for 30 minutes. Load measurements were acquired by playing Rise Of The Tomb Raider for 90 minutes and measuring the peak temperature. We also have included Furmark results, recording maximum temperatures throughout a 10 minute stress test. All fan settings were left on automatic.

The single fan copes well, holding gaming temperatures around 77c under extended load.

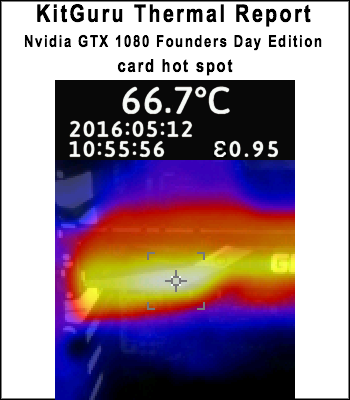

We install the graphics card into the system and measure temperatures on the back of the PCB with our Fluke Visual IR Thermometer/Infrared Thermal Camera. This is a real world running environment playing Rise Of The Tomb Raider for extended periods of time.

The image above shows the Nvidia GTX 1080 remains relatively cool, thanks to the backplate. Load temperatures rise to give a reading of 66.7C under extended conditions. The backplate helps stop the build up of hot spots on the PCB which is exactly what we want to see.

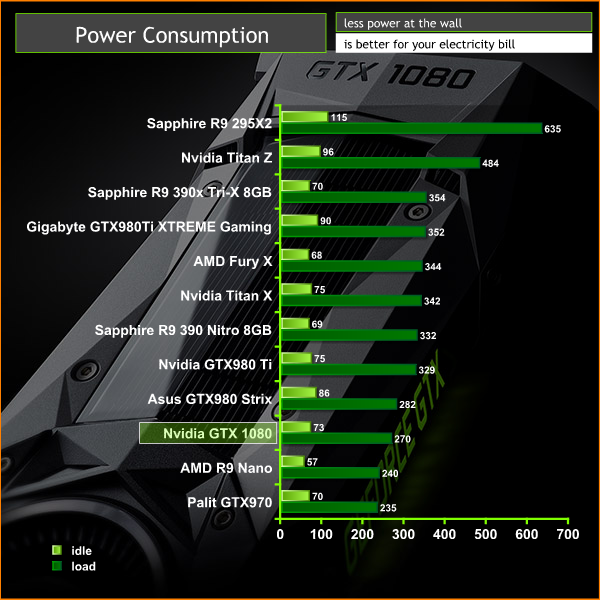

We measure power consumption from the whole system when idle and when gaming, excluding the monitor.

The Nvidia GTX 1080 is a very efficient card considering the rendering power. System power draw with the GTX1080 installed is around 270 watts when fully loaded.

The Gigabyte GTX980Ti XTREME Gaming card from the previous generation raises system demand to just over 350 watts under the same conditions. With the R9 295X2 card installed, the system power demand rises to a staggering 635 watts.
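To put those whole-system figures side by side, here is a small Python sketch that expresses each reading relative to the GTX 1080 system. The wattages are the approximate values quoted above ("around 270", "just over 350" and 635), so the ratios are only as precise as those roundings; the `relative_to` helper is our own illustrative name.

```python
# Approximate whole-system load figures quoted in the text (watts, monitor excluded).
system_load_watts = {
    "GTX 1080": 270,
    "Gigabyte GTX980 Ti XTREME Gaming": 350,
    "R9 295X2": 635,
}

def relative_to(baseline, readings):
    """Express every reading as a multiple of the baseline system's draw."""
    base = readings[baseline]
    return {name: watts / base for name, watts in readings.items()}

ratios = relative_to("GTX 1080", system_load_watts)
for name, ratio in ratios.items():
    extra = system_load_watts[name] - system_load_watts["GTX 1080"]
    print(f"{name}: {ratio:.2f}x the GTX 1080 system ({extra:+d} W)")
```

On these rounded numbers the R9 295X2 system draws well over twice what the GTX 1080 system does under load.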

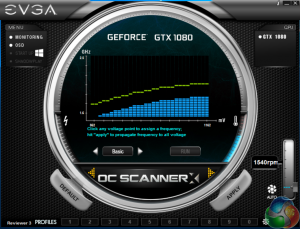

Nvidia have enhanced their GPU Boost System with their latest iteration – V3.0. The new version features custom ‘per voltage' point frequency offsets.

GPU Boost 3.0 offers the ability to set frequency offsets for individual voltage points. Older versions could only hold a fixed frequency offset, essentially shifting the existing V/F curve upward by the defined offset amount.

Above is a theoretical custom V/F curve set in GPU Boost 3.0. The offset at each voltage point is set just below the theoretical maximum clock for that point, making full use of the available headroom so that no performance is left untapped for the GPU in question.
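The difference between a single fixed offset and per-voltage-point offsets is easier to see with numbers. The Python sketch below is purely illustrative: the stock curve, the stable limits and the 10 MHz safety margin are all hypothetical values we made up, and the function names are ours, not part of any Nvidia or EVGA tool. It assumes a fixed offset is limited by whichever voltage point has the least headroom.

```python
# Hypothetical stock V/F curve as (millivolts, MHz) points. Illustrative only,
# not measured from a GTX 1080.
STOCK_CURVE = [(800, 1600), (900, 1750), (1000, 1850), (1050, 1900)]

# Hypothetical highest stable clock (MHz) at each of those voltage points.
MAX_STABLE_MHZ = [1850, 1960, 2020, 2050]

def headroom(curve, max_stable, margin_mhz=10):
    """Usable offset per point, kept a small margin below the stable limit."""
    return [limit - mhz - margin_mhz for (_, mhz), limit in zip(curve, max_stable)]

def fixed_offset_curve(curve, max_stable, margin_mhz=10):
    """Older behaviour: one offset shifts the whole curve, so it is limited
    by the voltage point with the least headroom."""
    offset = min(headroom(curve, max_stable, margin_mhz))
    return [(mv, mhz + offset) for mv, mhz in curve]

def per_point_curve(curve, max_stable, margin_mhz=10):
    """GPU Boost 3.0 style: each voltage point gets its own offset."""
    offsets = headroom(curve, max_stable, margin_mhz)
    return [(mv, mhz + off) for (mv, mhz), off in zip(curve, offsets)]

fixed = fixed_offset_curve(STOCK_CURVE, MAX_STABLE_MHZ)
custom = per_point_curve(STOCK_CURVE, MAX_STABLE_MHZ)
for (mv, f_mhz), (_, c_mhz) in zip(fixed, custom):
    print(f"{mv} mV: fixed offset {f_mhz} MHz, per-point {c_mhz} MHz")
```

With these made-up numbers the two approaches only agree at the most constrained voltage point. Everywhere else the per-point curve sits higher, which is the headroom a fixed offset leaves on the table.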

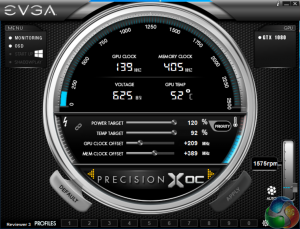

We overclock the Nvidia GTX 1080 with a beta version of EVGA Precision XOC, which Nvidia supplied. We used an updated driver for overclocking, version 368.16, which works specifically with this beta software.

This software is beta right now and is not quite finished. Nvidia sent it out a couple of days before the review was due to go live which didn't give us a lot of time to test, as the review was well under way at that stage.

Ideally I would have liked to present each game in this review with the GTX 1080 running at maximum manually overclocked speeds as well as the default clock results, but time was against us.

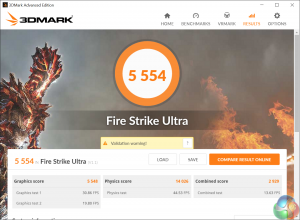

We increased power target to 120% and temperature target to 92c to increase the OC potential. We managed to get the card stable with a +209 core and +389 mem clock boost. This is very substantial indeed and a good indication of how advanced the new 16nm FinFET manufacturing process is.

Watch via our VIMEO Channel (below) or over on YouTube HERE

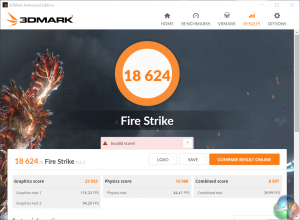

As we can see from the video, when running 3DMark the GTX 1080 core clocks held steady at over 2,000mhz – but how did this impact the performance?

Overclocking the Nvidia GTX 1080 pushes performance even higher. We can see that when the core is running at over 2ghz the GTX 1080 is able to outperform the AMD R9 295X2 in the latest 3DMARK, even at Ultra HD 4k. A truly remarkable result.

It has been some time since I have reviewed a new graphics card, never mind one based on a new architecture and fabricated on the new 16nm FinFET process. I have to admit that after Maxwell I had high hopes for Nvidia's Pascal architecture and I certainly can't say that I have been disappointed handling this analysis for KitGuru. Pascal has actually surpassed all my preconceptions.

The Nvidia GTX1080 is without question a truly phenomenal graphics card. I have spent more than 100 hours analysing the hardware in the last week and can only come to the conclusion that the GTX 1080 is probably the finest GPU Nvidia have ever created.

If you have read KitGuru's analysis from the start, you will already be aware of all the technological enhancements Nvidia have brought to the Pascal platform – if you haven't then check out the first couple of pages in the review today. Nvidia have created a completely new GPU circuit architecture and changed the board channel design. The power supply has been upgraded to 5 phase dualFET and they have tuned it to maximise bandwidth and enhance phase balancing. Nvidia have added extra capacitance to their filtering network and optimised the power delivery on the PCB for low impedance.

Following on from the highly efficient Maxwell architecture, Nvidia have managed to take efficiency optimisations to the next level with Pascal. Power efficiency is increased, even when compared against the GTX980, and peak to peak voltage is reduced from 209mV to 120mV to help ensure stellar overclocks.

The adoption of GDDR5X memory has certainly helped drive new levels of performance. It is a faster and more advanced interface standard, achieving 10 Gbps transfer rates, or roughly only 100 picoseconds (ps) between data bits. In real world terms, this card is able to drive very high levels of bandwidth, ideal when pushing a lot of texture data at Ultra HD 4k resolutions. According to Nvidia, the memory subsystem of the GTX 1080 delivers around 1.7 times the effective bandwidth of the GTX 980.
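The headline memory numbers can be checked with some simple arithmetic. The Python sketch below derives the time between data bits from the 10 Gbps rate and the raw bandwidth from the data rate and bus width listed in the specification table (256bit for both cards, with the GTX 980's GDDR5 running at 7 Gbps). The function names are our own. Note that the raw ratio comes out at roughly 1.43, so the 1.7 times figure quoted above presumably reflects more than interface speed alone; that reading is our assumption and is not something this calculation proves.

```python
def bit_time_ps(data_rate_gbps):
    """Time between data bits on one pin, in picoseconds."""
    return 1000.0 / data_rate_gbps  # 1 / (Gbit/s) is in ns; x1000 gives ps

def bandwidth_gb_per_s(data_rate_gbps, bus_width_bits):
    """Raw memory bandwidth in GB/s: per-pin rate x bus width / 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8

gtx1080 = bandwidth_gb_per_s(10, 256)  # GDDR5X at 10 Gbps on a 256bit bus
gtx980 = bandwidth_gb_per_s(7, 256)    # GDDR5 at 7 Gbps on a 256bit bus

print(f"bit time at 10 Gbps: {bit_time_ps(10):.0f} ps")
print(f"GTX 1080: {gtx1080:.0f} GB/s, GTX 980: {gtx980:.0f} GB/s")
print(f"raw bandwidth ratio: {gtx1080 / gtx980:.2f}x")
```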

This is the first time that Nvidia have introduced a vapour chamber cooling system on a reference card, and it has certainly helped to ensure that there is plenty of potential for overclocking.

While all cards will deliver slightly different results, we were able to get the core speed running at just over 2ghz, which helped push performance of the GTX 1080 past even the dual GPU AMD R9 295X2. This in itself is a remarkable achievement, but it becomes staggering when you factor in that the GTX 1080 system demands only 270 watts, against 635 watts for the same system using the R9 295X2.

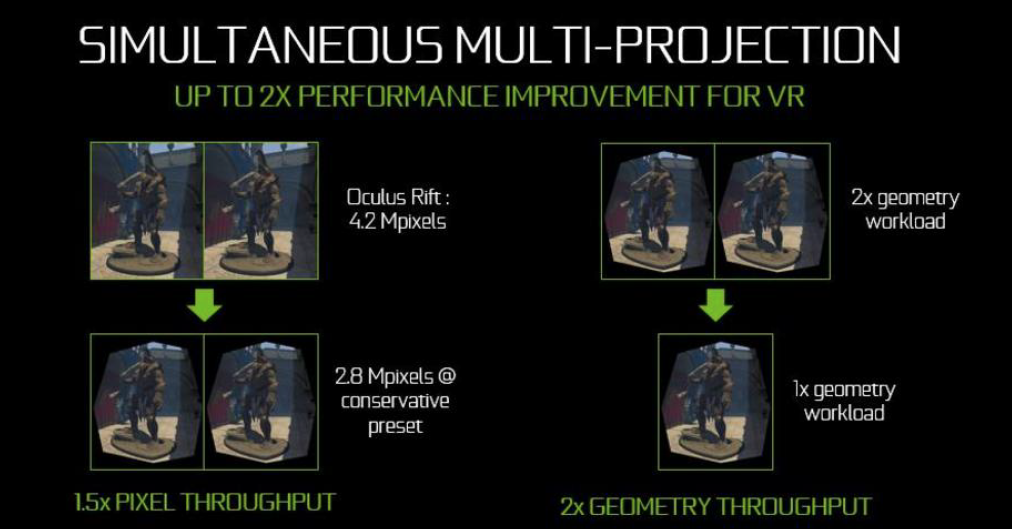

Nvidia have also placed a focus on technologies to improve the VR experience. Simultaneous Multi-Projection provides performance for new display technologies. The GTX 1080 GPU can simultaneously render to unique viewports that are designed specifically for VR headsets and triple display users. Lens Matched Shading improves pixel shading performance by rendering up to 16 viewports that will better support the design of the latest VR headsets. This helps to avoid rendering pixels that would be trashed before the final imagery is sent to the VR headset.

Additionally, the new Single Pass Stereo feature uses Simultaneous Multi Projection to render the geometry required for both eyes in a VR headset – all in a single rendering pass rather than a pass for each eye. This obviously halves the geometry workload when directly compared to a more traditional VR rendering situation. This will also help support gamers with three screen configurations as Perspective Surround gets the GPU to simultaneously render projections for each of the three displays with proper perspective views. We haven't been able to test this yet, but it seems like a good move for enthusiast users who enjoy multi screen systems for gaming.

If you own an older Nvidia graphics card and are building a new system this year for Ultra HD 4k gaming then the GTX 1080 is the graphics card to get. So how much will the GTX 1080 set you back? Nvidia confirmed £619 inc VAT as the launch price today – so while very expensive, it is cheaper than the Nvidia Titan X, which is still available at £869.99 today. We hope to see prices of the GTX980 Ti and GTX 980 dropping in the near future.

It would be fair to say that as an overall package the Nvidia GTX 1080 has exceeded all our expectations. We do hope that AMD can answer the challenge and deliver the goods with Polaris, even in the lower sector. Thankfully, we won't have too long to wait now to give you all an answer.

Discuss on our Facebook page, over HERE.

Pros:

- New performance leader.

- Class leading efficiency.

- Cooler design is very good.

- Overclocks to 2ghz+.

- GDDR5X improves bandwidth.

Cons:

- Could be quieter.

- Likely very expensive in the UK.

KitGuru says: It is difficult to find fault with Nvidia's GTX 1080. This is one of the finest cards the company have ever produced and sets a big challenge for AMD's Polaris.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards
