Despite the strides made in AI technology, artificial intelligence still carries a heavy stigma, prompting fears of machines rising above humans. This might seem the stuff of fiction, but those in the field understand the implications of misplaced values in a system designed to think and act like a human. In a small experiment, researchers at the Massachusetts Institute of Technology (MIT) deliberately trained an AI on biased data to see what the result would be.

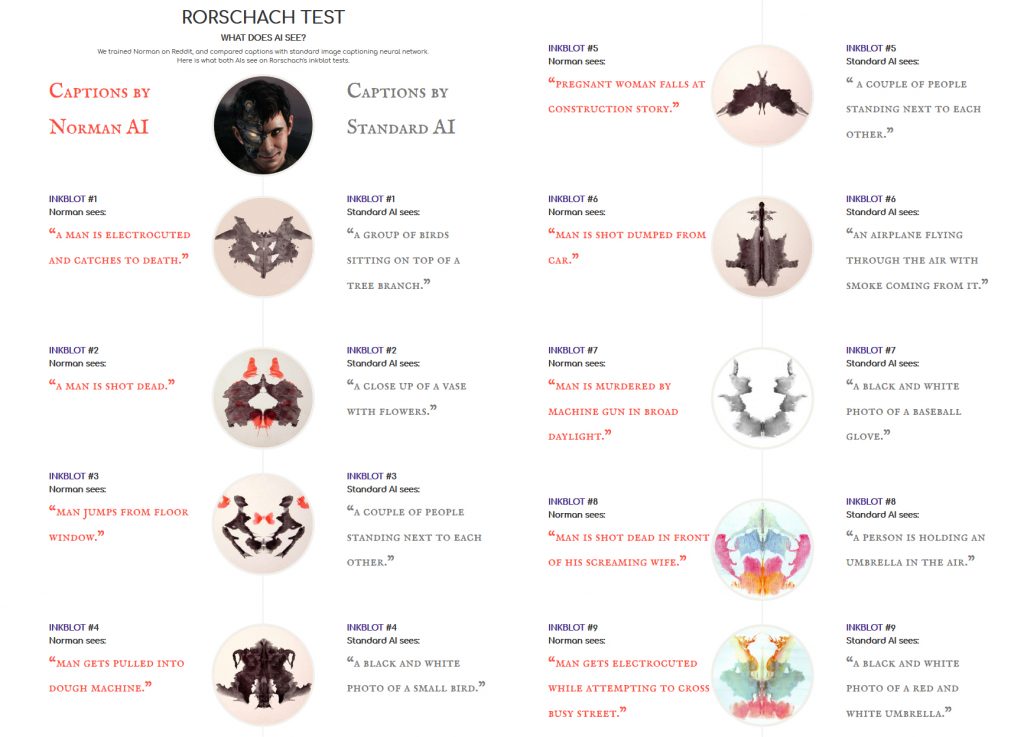

Enter Norman, the AI in question, named after Norman Bates, the central character of Alfred Hitchcock’s Psycho. Subjected to the deepest and darkest depths of Reddit, Norman was trained using “a popular deep learning method of generating a textual description of an image.” The image captions used in this process came from an infamous subreddit dedicated to “the disturbing reality of death.”

“Then, we compared Norman’s responses with a standard image captioning neural network (trained on MSCOCO dataset) on Rorschach inkblots; a test that is used to detect underlying thought disorders,” explain the researchers.

Although the Rorschach test’s validity as a measure of human psychological state is disputed, the results are sure to ring alarm bells for the general reader about the mentality an AI can adopt. After all, prominent figures such as the late Stephen Hawking warned developers to be careful with artificial intelligence as its popularity grew.

Testing is important, though, and even necessary to advance artificial intelligence in a meaningful way, whereas ignorance could lead to the catastrophic consequences depicted in dystopian science fiction. In fact, the team at MIT has conducted numerous experiments using AI to craft horror stories, judge moral decisions for self-driving cars, and even induce empathy.

Bias in artificial intelligence has been consistently criticised throughout its development, and Norman is the latest project to highlight the importance of recognising how the data a machine learning system is trained on shapes its behaviour.

KitGuru Says: While this is a pretty basic test, it is a scary look at what could happen if AI were to take a wrong turn due to human error in teaching it. It also draws attention to machine learning as a whole, and to the question of whether AI might eventually deem humanity unworthy. Do you trust the future of AI?

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards