After months – even years – of leaks, teasers and false-starts, Intel's Arc GPUs are finally hitting the market. Today we assess the mid-range Arc A750 Limited Edition, offering performance that is allegedly on-par with the RTX 3060, but priced at $289. On paper, it certainly sounds like Intel could be the saviour of the sub-$300 GPU market, but as always there is plenty more to the story…

This review will focus on the Intel Arc A750 Limited Edition graphics card, but we do also have a day-1 review of the Arc A770 Limited Edition that you can find over HERE.

We've taken a look at the Xe HPG architecture in the past, but to remind ourselves of what we are looking at today, above we can see a comparison of the core spec of the A750 and A770. Both SKUs are built on Intel's ACM-G10 silicon, but the A750 is shaved down slightly. While the full die offers 32 Xe cores, the A750 ships with 28 Xe cores, where each core offers 16 vector engines, with each vector engine housing 8 FP32 ALUs, for a grand total of 3584.

Each Xe core is accompanied by a Ray Tracing Unit, while we also find 224 TMUs and 112 ROPs. A 256-bit memory interface is used for both A750 and A770, but 16Gbps GDDR6 memory is used for the A750, giving total memory bandwidth of 512 GB/s. Intel also rates the A750 for 2050MHz graphics clock, and 225W total board power, something we look at closely in this review using our in-depth power testing methodology.
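The headline numbers above fall out of simple arithmetic, sketched below. The function and variable names are our own, chosen for illustration, and the inputs are just the spec-sheet values quoted in this review.

```python
# Spec arithmetic for the Arc A750, using the figures quoted above.
# Function and variable names are illustrative, not anything Intel publishes.


def fp32_alus(xe_cores: int, vector_engines_per_core: int = 16,
              alus_per_engine: int = 8) -> int:
    """Total FP32 ALUs = Xe cores x vector engines per core x ALUs per engine."""
    return xe_cores * vector_engines_per_core * alus_per_engine


def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s = bus width in bytes x per-pin data rate in Gbps."""
    return bus_width_bits / 8 * data_rate_gbps


a750_alus = fp32_alus(28)                       # 28 Xe cores on the A750
full_die_alus = fp32_alus(32)                   # full ACM-G10 die, 32 Xe cores
a750_bandwidth = memory_bandwidth_gbs(256, 16)  # 256-bit bus, 16Gbps GDDR6

print(a750_alus)       # 3584
print(full_die_alus)   # 4096
print(a750_bandwidth)  # 512.0
```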

It's also worth clarifying that today we are reviewing the Limited Edition cards. Despite the name, these are not limited in quantity and you can instead think of the Limited Edition as the equivalent to Nvidia's Founders Edition – boards manufactured by Intel and sold directly to consumers. Intel was firm with us that the Limited Edition models will be available at the announced prices, so $289 for the A750 and $349 for the A770, with the A770 Limited Edition only available with 16GB VRAM. Partner A770 cards with 8GB VRAM will start at $329.

The Arc A750 Limited Edition ships in a compact blue box, with the Arc branding positioned prominently on the front.

Inside, a quick start guide and ‘thank you' note are the only included accessories.

We've already seen a positive reaction to the design of Intel's Limited Edition cards from our unboxing video and we have to say Intel has done a great job with the aesthetic here. The card is almost entirely matte black, with no gaudy design elements or aggressive angles, and on the underside of the shroud we just get a look at the two 90mm axial fans. It's a very simple, but elegant design and it makes a refreshing change from the RGB monsters we have become used to over the last few years.

That's also true in terms of dimensions, as the Limited Edition is just 2-slots thick and a standard 10.5″, or 266.7mm, length. A metallic silver strip runs the length of the card, replacing the RGB strip that is present on the A770. There is no RGB lighting on the A750, just a white LED that illuminates the Intel Arc logo on the front of the card.

That logo is visible above, along with the front of the shroud. Intel's backplate design is also eye-catching, with a pin-stripe design. The backplate itself is made from plastic and doesn't feel particularly sturdy, but it certainly looks the part.

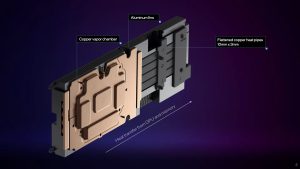

I've not yet disassembled the cards themselves but above we can see the renders supplied by Intel. The cards use a copper vapour chamber and aluminium fin stack, with a total of four flattened 10mm heat pipes. Air from the two fans blows down onto the cooler and escapes through the back and sides of the card.

Power requirements consist of one 8-pin and one 6-pin PCIe connector – no 12VHPWR here. Meanwhile for display outputs, credit to Intel for coming to market with three DisplayPort 2.0 connectors, the first of any GPU manufacturer to do so, while there's a single HDMI 2.1 port as well.

Driver Notes

- All AMD GPUs were benchmarked with the public Adrenalin 22.9.1 driver.

- All Nvidia GPUs were benchmarked with the 516.94 driver.

- All Intel GPUs were benchmarked with the 101.3433 driver supplied to press.

Test System:

We test using a custom-built system powered by MSI, based on Intel’s Alder Lake platform. You can read more about this system HERE and check out MSI on the CCL webstore HERE.

| Component | Specification |
| --- | --- |
| CPU | Intel Core i9-12900K |
| Motherboard | MSI MEG Z690 Unify |
| Memory | 32GB (2x16GB) ADATA XPG Lancer DDR5 6000MHz CL 40-40-40 |
| Graphics Card | Varies |
| SSD | 2TB MSI Spatium M480 |
| Chassis | MSI MPG Velox 100P Airflow |
| CPU Cooler | MSI MEG CoreLiquid S360 |
| Power Supply | Corsair 1200W HX Series Modular 80 Plus Platinum |
| Operating System | Windows 11 Pro 22H2 |
| Monitor | MSI Optix MPG321UR-QD |
| Resizable BAR | Enabled for all supported GPUs |

Comparison Graphics Cards List

- AMD RX 6800 XT 16GB

- Gigabyte RX 6600 XT Gaming OC Pro 8GB

- Gigabyte RX 6600 Eagle 8GB

- ASUS RTX 3080 TUF Gaming 10GB

- Nvidia RTX 3070 FE 8GB

- Nvidia RTX 3060 Ti FE 8GB

- Palit RTX 3060 StormX 12GB

- Nvidia RTX 2060 FE 6GB

All cards were tested at reference specifications.

Software and Games List

- 3DMark Fire Strike & Fire Strike Ultra (DX11 Synthetic)

- 3DMark Time Spy (DX12 Synthetic)

- Assassin's Creed Valhalla (DX12)

- Cyberpunk 2077 (DX12)

- Days Gone (DX11)

- Dying Light 2 (DX12)

- Far Cry 6 (DX12)

- Forza Horizon 5 (DX12)

- God of War (DX11)

- Horizon Zero Dawn (DX12)

- Marvel's Spider-Man Remastered (DX12)

- Metro Exodus Enhanced Edition (DXR)

- Red Dead Redemption 2 (DX12)

- Resident Evil Village (DX12)

- Total War: Warhammer III (DX11)

We run each benchmark/game three times, and present mean averages in our graphs. We use FrameView to measure average frame rates as well as 1% low values across our three runs.
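As a rough illustration of how such figures can be derived from raw frame-time logs, here is a minimal sketch. It assumes each run is a list of per-frame times in milliseconds, and it takes the 1% low to be the frame rate at the 99th-percentile frame time, which is one common definition; FrameView's exact calculation may differ. The function names and the sample numbers are made up for the example and are not our measurements.

```python
from statistics import mean


def average_fps(frame_times_ms):
    """Average FPS over a run: frames rendered divided by total time in seconds."""
    return len(frame_times_ms) / (sum(frame_times_ms) / 1000.0)


def one_percent_low_fps(frame_times_ms):
    """FPS at the 99th-percentile frame time (nearest-rank method)."""
    ordered = sorted(frame_times_ms)
    # ceil(0.99 * n) done in integer maths to avoid floating-point surprises.
    rank = max(1, -(-99 * len(ordered) // 100))
    return 1000.0 / ordered[rank - 1]


def summarise(runs):
    """Mean of the per-run averages and of the per-run 1% lows."""
    return (
        mean(average_fps(run) for run in runs),
        mean(one_percent_low_fps(run) for run in runs),
    )


# Synthetic run: 98 smooth frames at 10 ms plus two slow frames.
synthetic_run = [10.0] * 98 + [20.0, 40.0]
avg, low = summarise([synthetic_run, synthetic_run, synthetic_run])

print(f"{avg:.1f}")  # 96.2
print(f"{low:.1f}")  # 50.0
```

Note how two slow frames out of a hundred barely dent the average but roughly halve the 1% low, which is why we report both.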

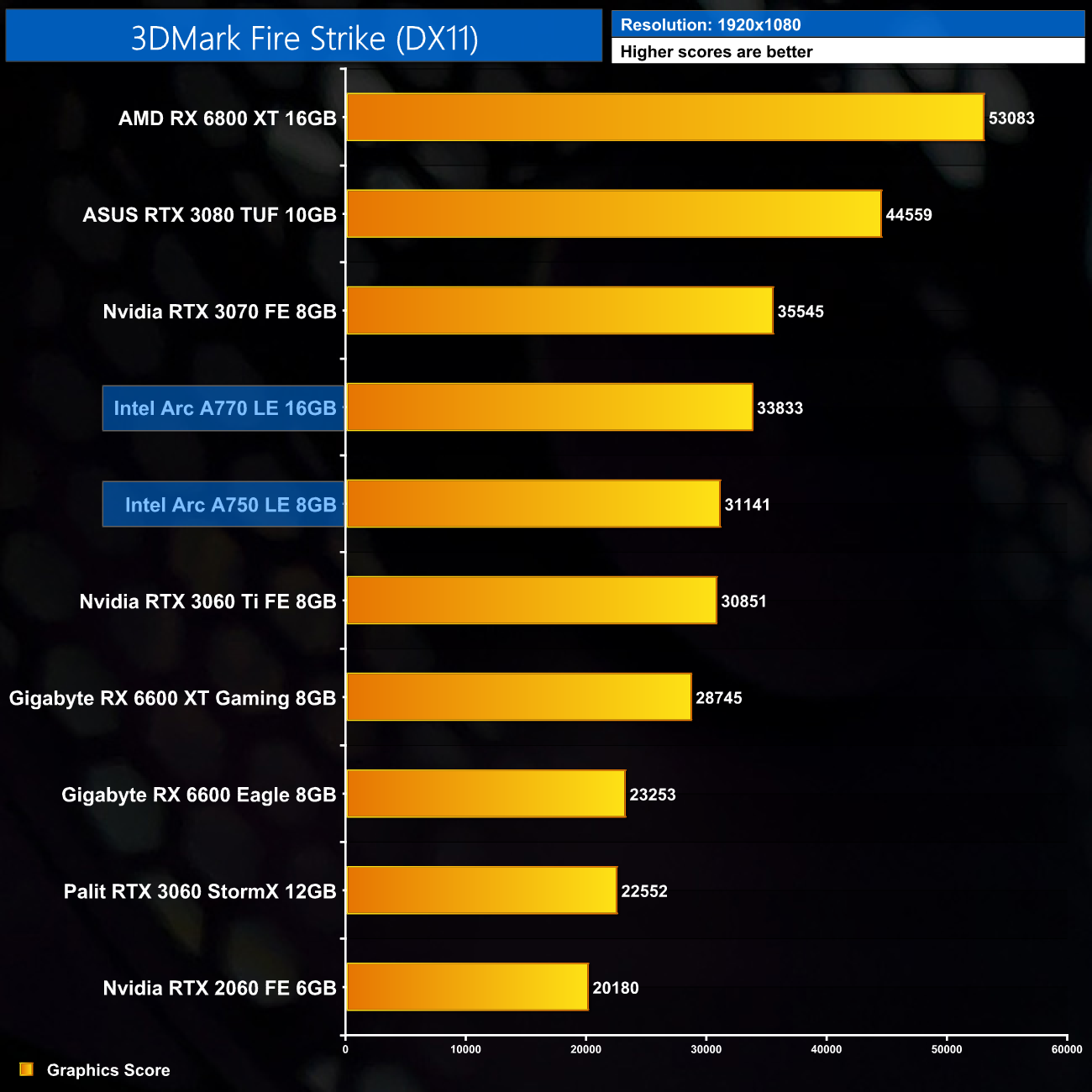

Fire Strike is a showcase DirectX 11 benchmark for modern gaming PCs. Its ambitious real-time graphics are rendered with detail and complexity far beyond other DirectX 11 benchmarks and games. Fire Strike includes two graphics tests, a physics test and a combined test that stresses the CPU and GPU. (UL).

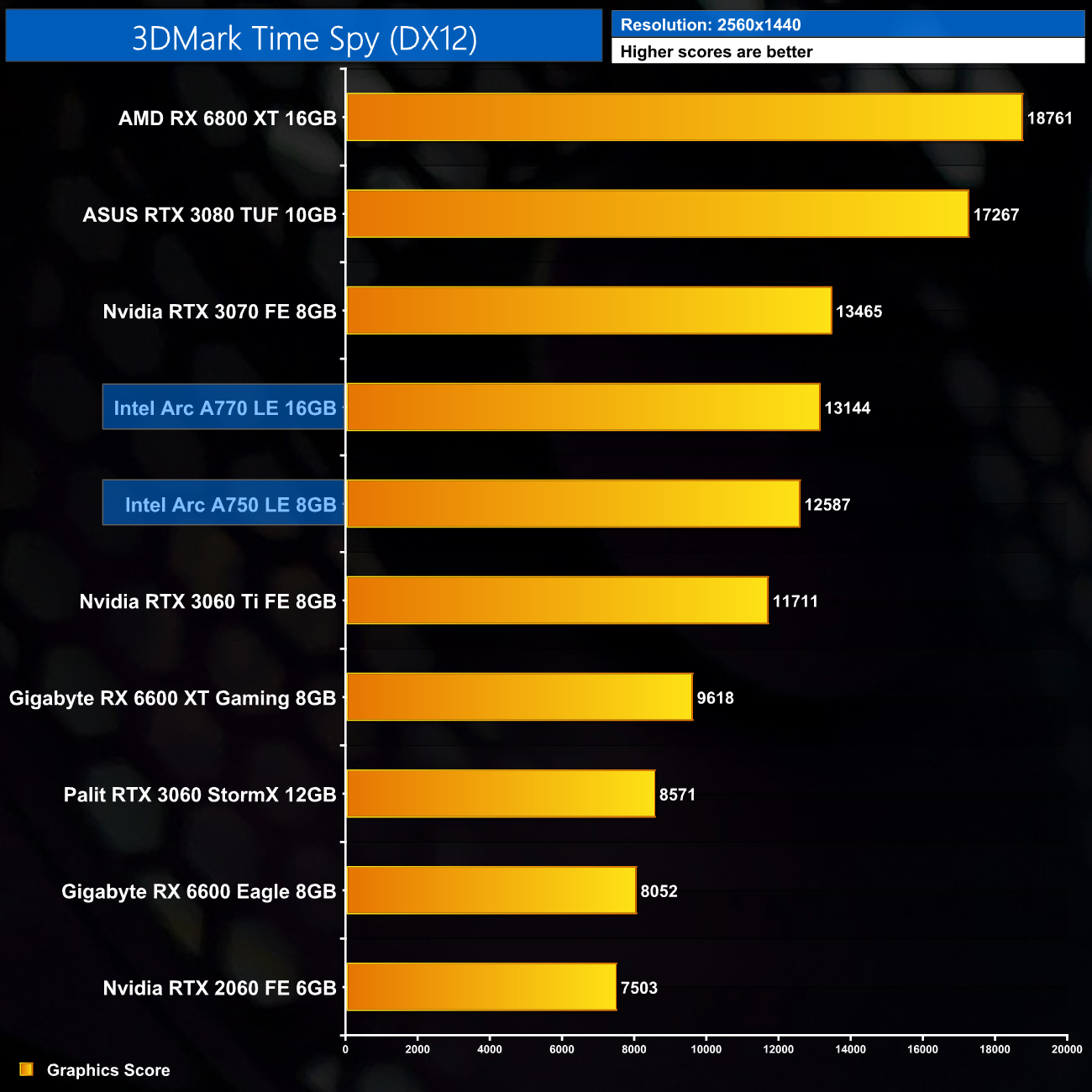

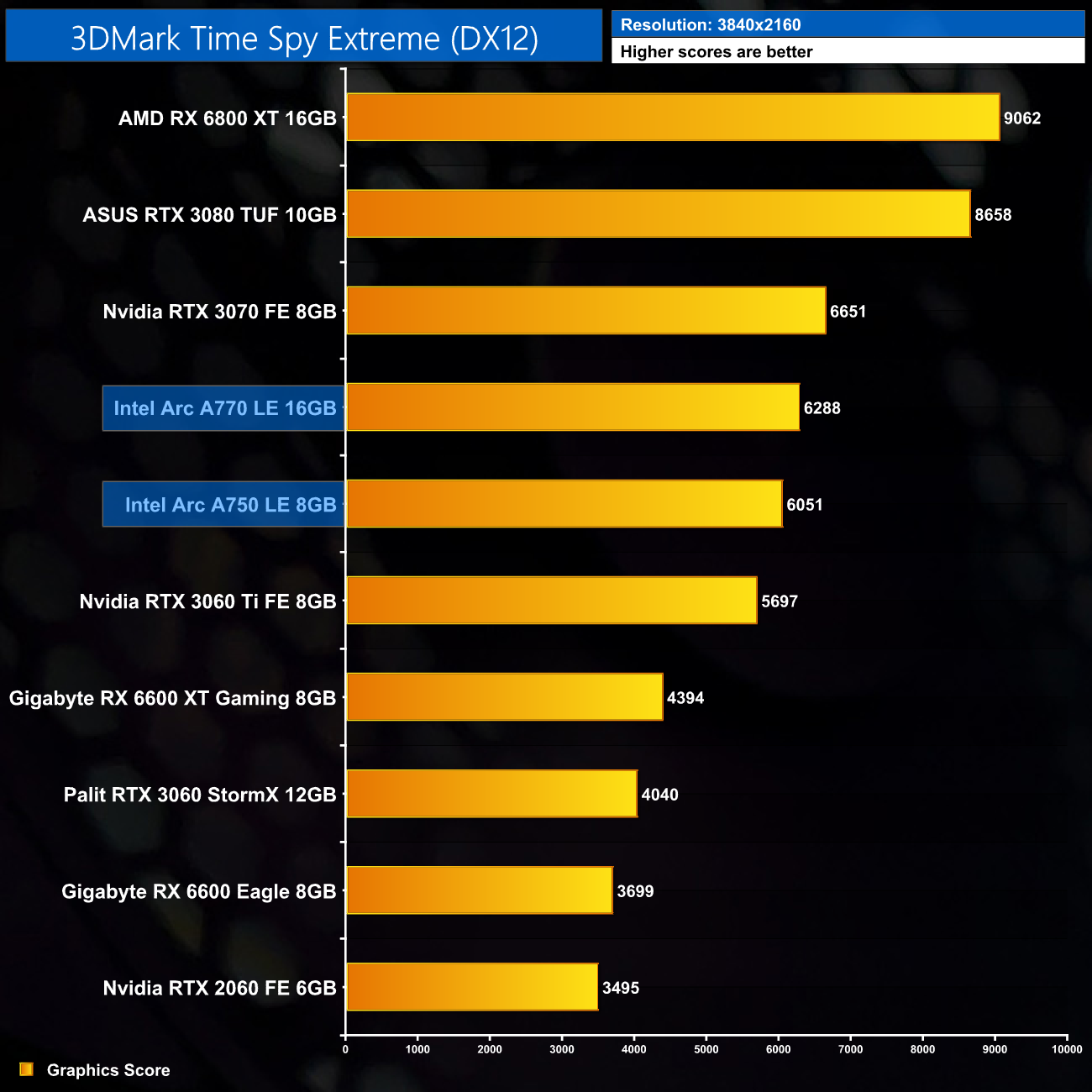

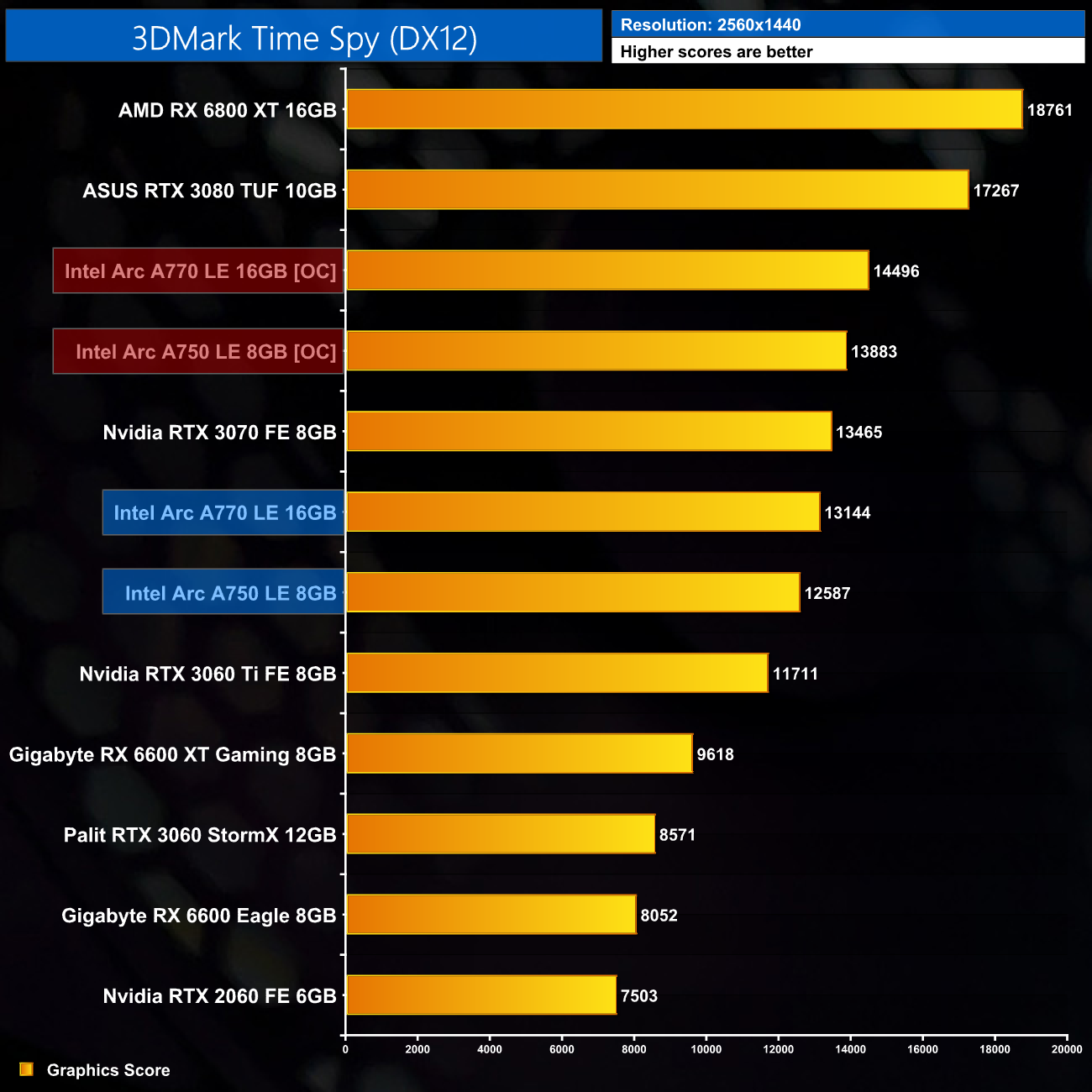

3DMark Time Spy is a DirectX 12 benchmark test for Windows 10 gaming PCs. Time Spy is one of the first DirectX 12 apps to be built the right way from the ground up to fully realize the performance gains that the new API offers. With its pure DirectX 12 engine, which supports new API features like asynchronous compute, explicit multi-adapter, and multi-threading, Time Spy is the ideal test for benchmarking the latest graphics cards. (UL).

3DMark performance is strong for the Arc A750. It's slightly faster than the RTX 3060 Ti in Fire Strike, and does even better in the DX12 Time Spy and Time Spy Extreme benchmarks, where it out-points the 3060 Ti in every test.

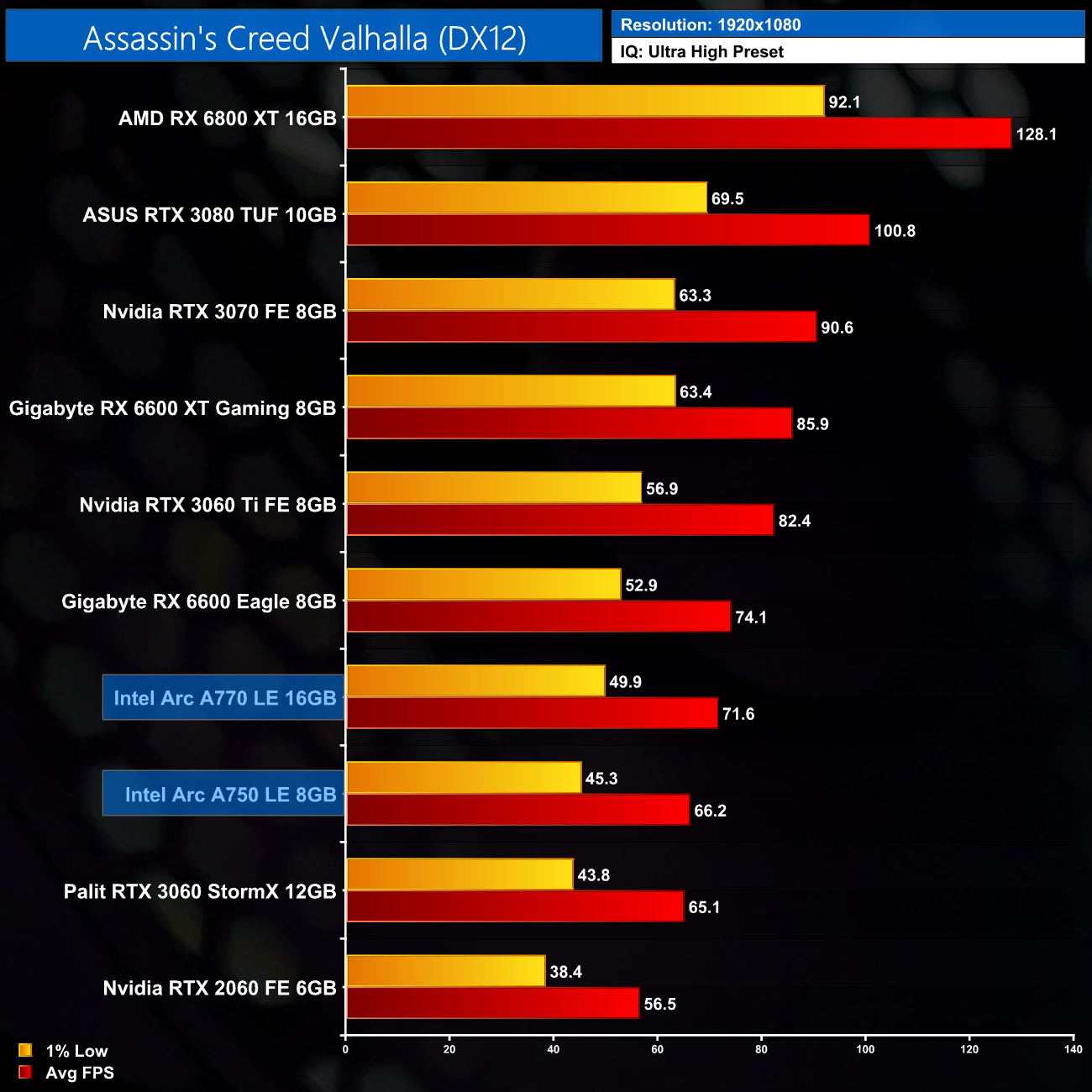

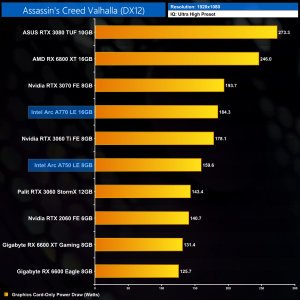

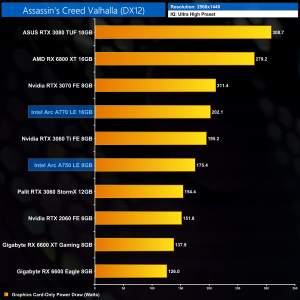

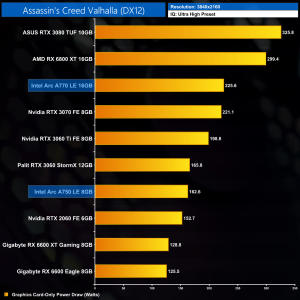

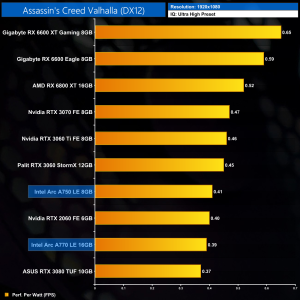

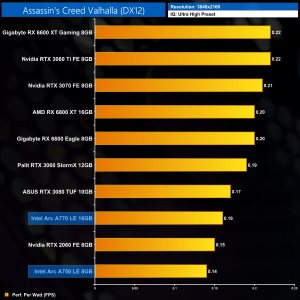

Assassin's Creed Valhalla is an action role-playing video game developed by Ubisoft Montreal and published by Ubisoft. It is the twelfth major installment and the twenty-second release in the Assassin's Creed series, and a successor to 2018's Assassin's Creed Odyssey. The game was released on November 10, 2020, for Microsoft Windows, PlayStation 4, Xbox One, Xbox Series X and Series S, and Stadia, while the PlayStation 5 version was released on November 12. (Wikipedia).

Engine: AnvilNext 2.0. We test using the Ultra High preset, DX12 API.

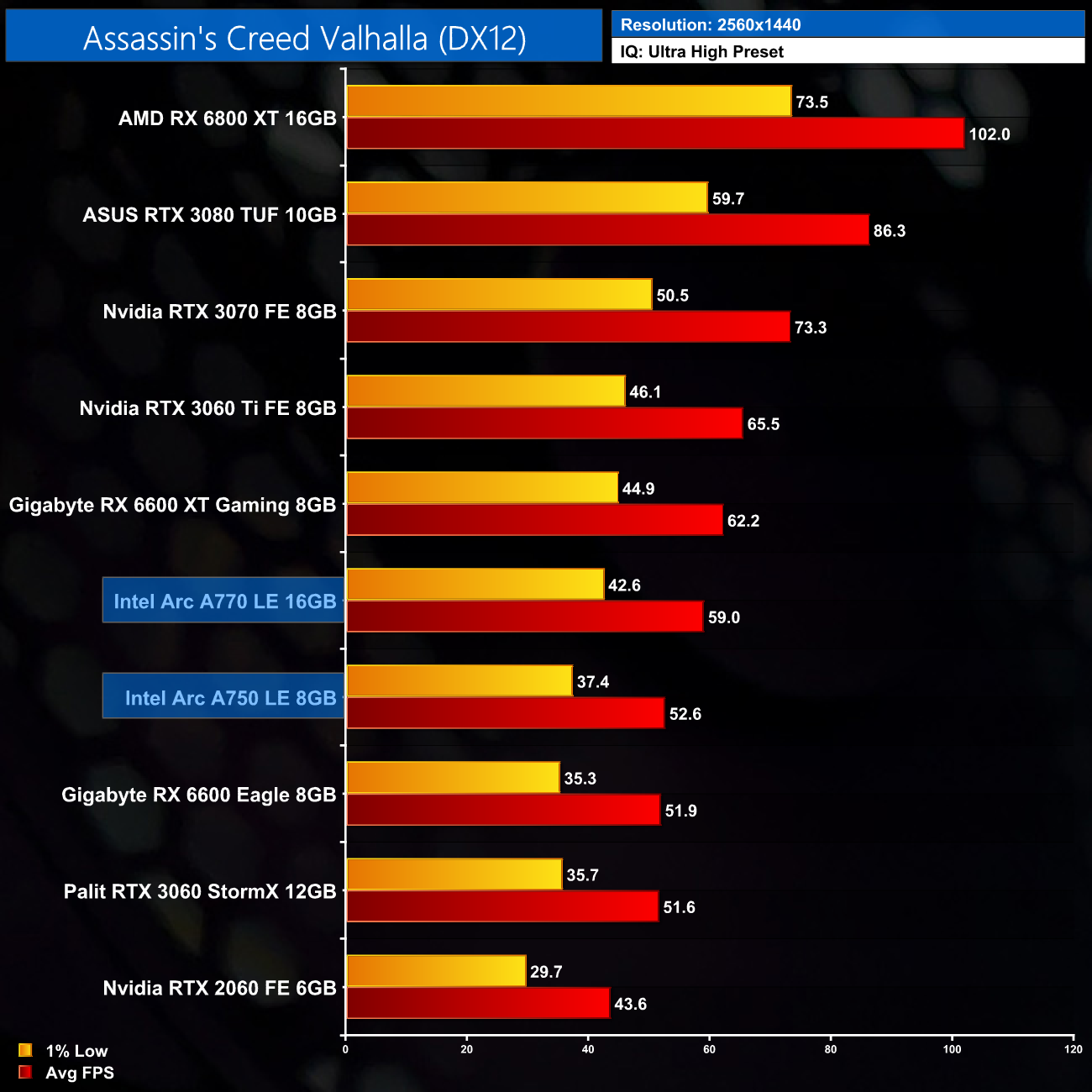

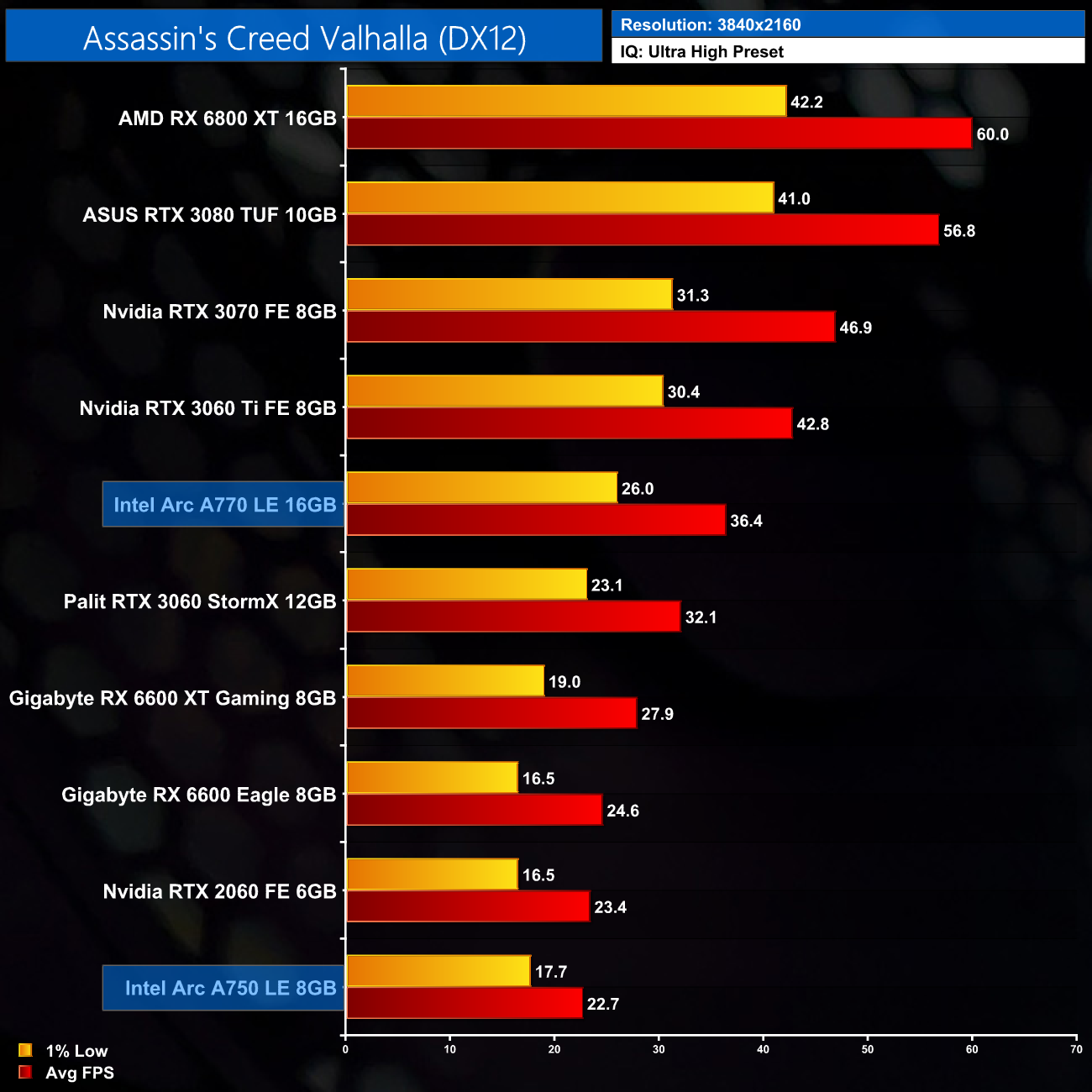

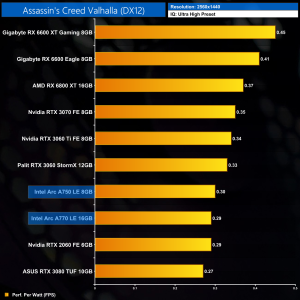

Our first game is Assassin's Creed Valhalla, where the A750 averages 66FPS at 1080p, putting it neck-and-neck with the RTX 3060. At 1440p it manages 52.6FPS, so it is exactly 1 frame ahead of the RTX 3060, but 15% slower than the RX 6600 XT.

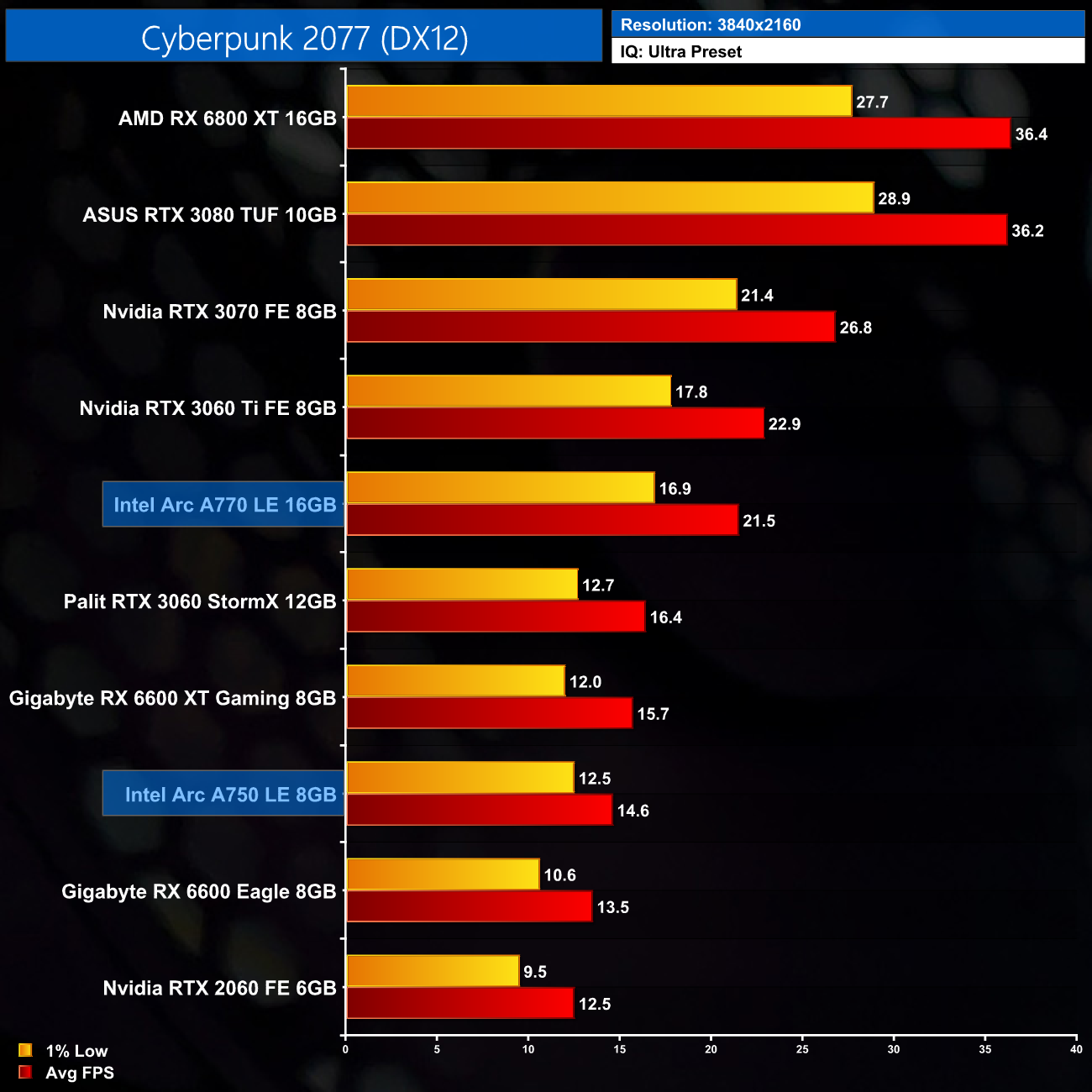

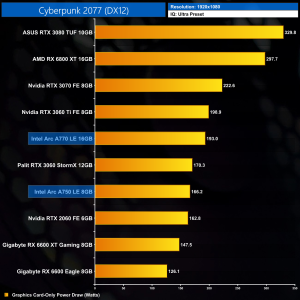

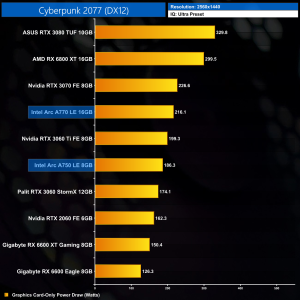

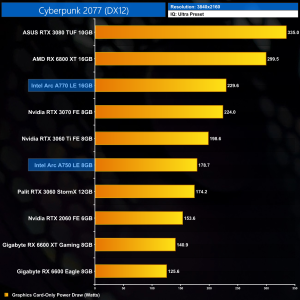

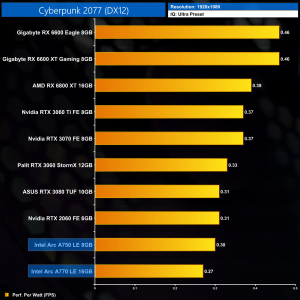

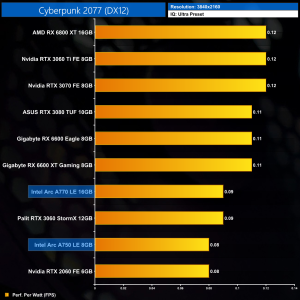

Cyberpunk 2077 is a 2020 action role-playing video game developed and published by CD Projekt. The story takes place in Night City, an open world set in the Cyberpunk universe. Players assume the first-person perspective of a customisable mercenary known as V, who can acquire skills in hacking and machinery with options for melee and ranged combat. Cyberpunk 2077 was released for Microsoft Windows, PlayStation 4, Stadia, and Xbox One on 10 December 2020. (Wikipedia).

Engine: REDengine 4. We test using the Ultra preset, DX12 API.

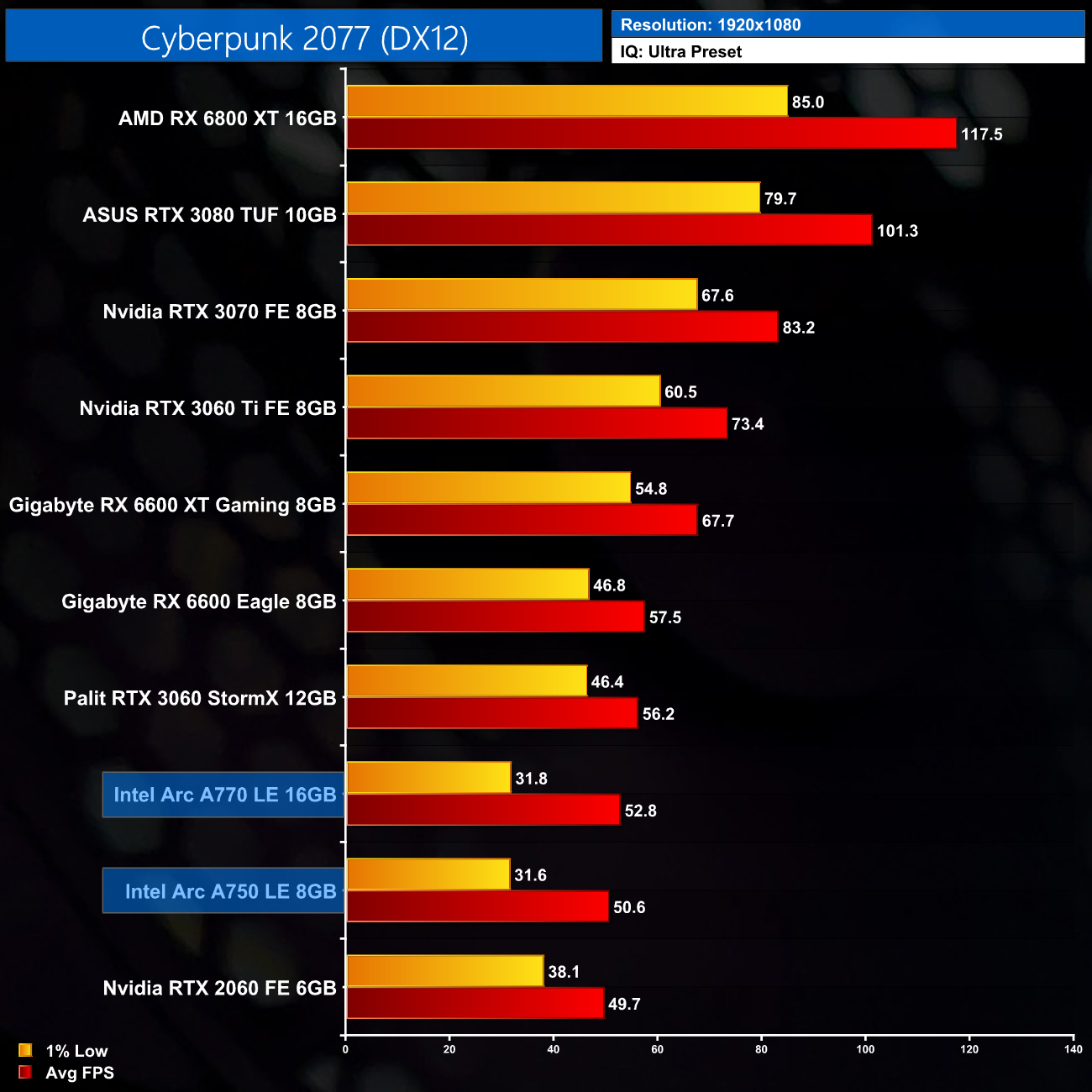

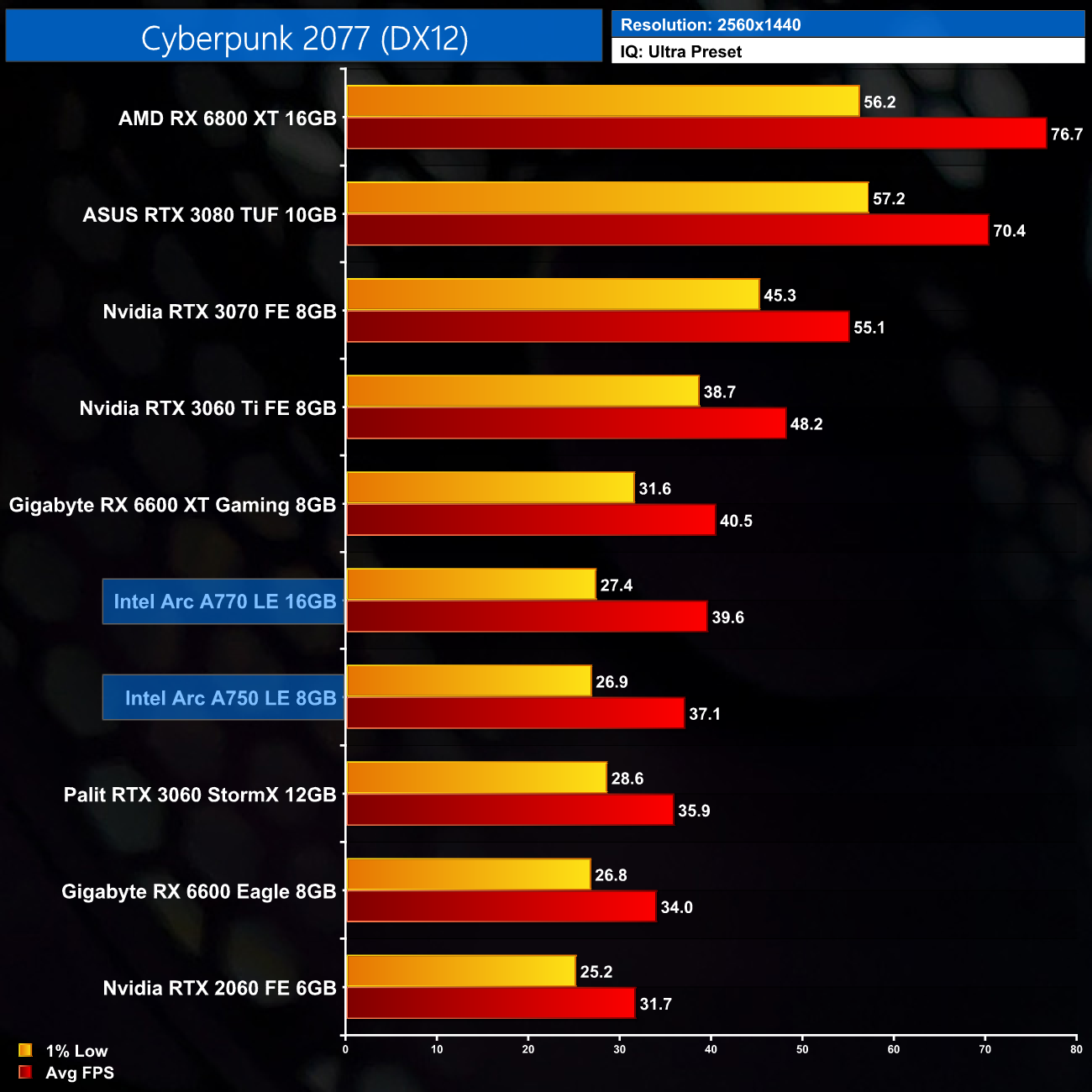

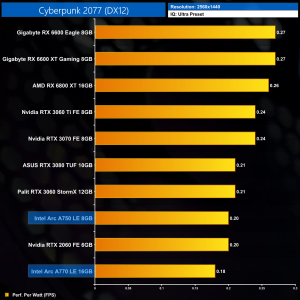

Cyberpunk 2077 brings us to the first of several driver-related issues we will discuss today, with more shown in the video. Frame time consistency is simply very poor at 1080p, resulting in 1% low performance that is significantly worse than even the RTX 2060. Weirdly, this does improve – relatively speaking – at 1440p, but this game with Ultra settings is still too heavy for this calibre of GPU at the higher resolution.

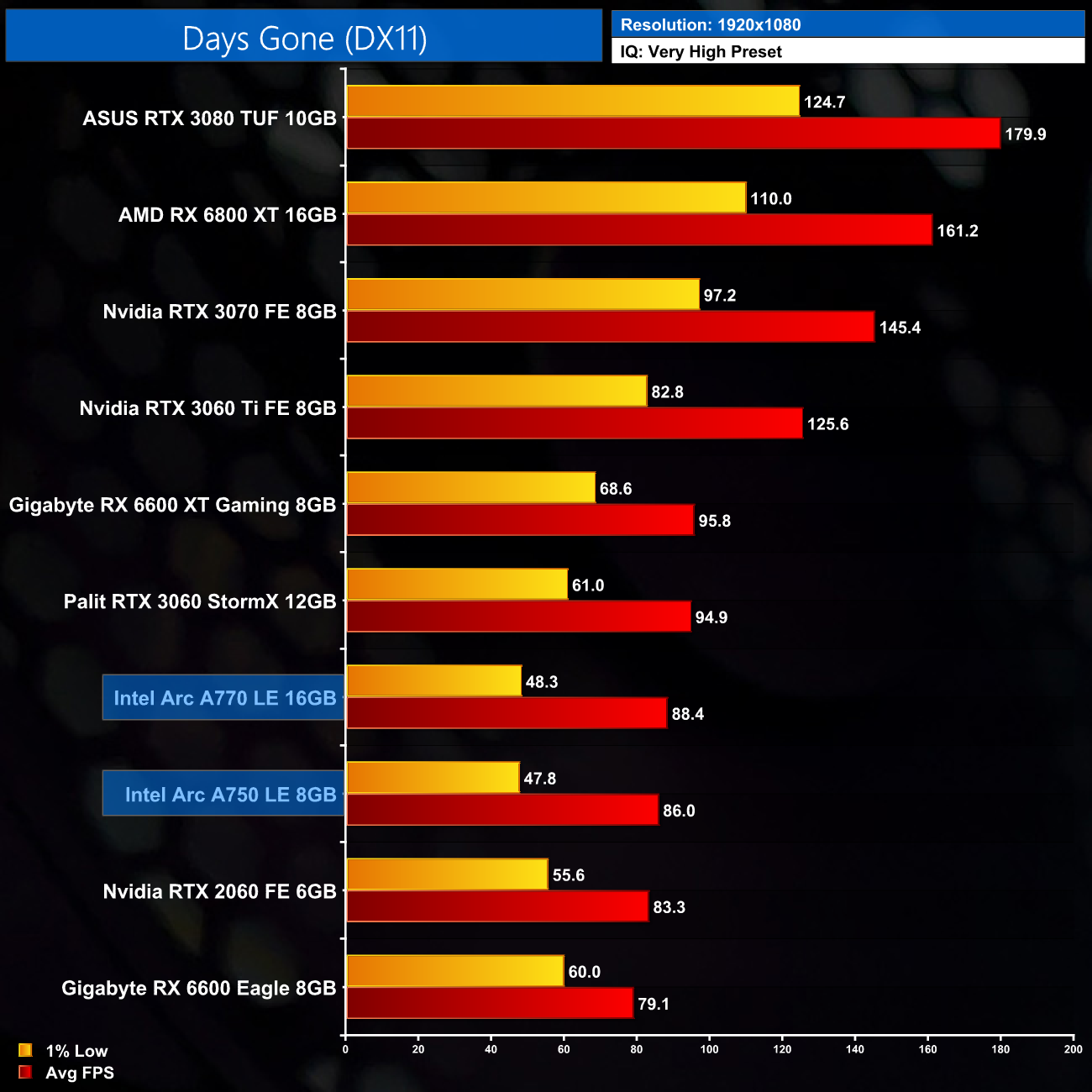

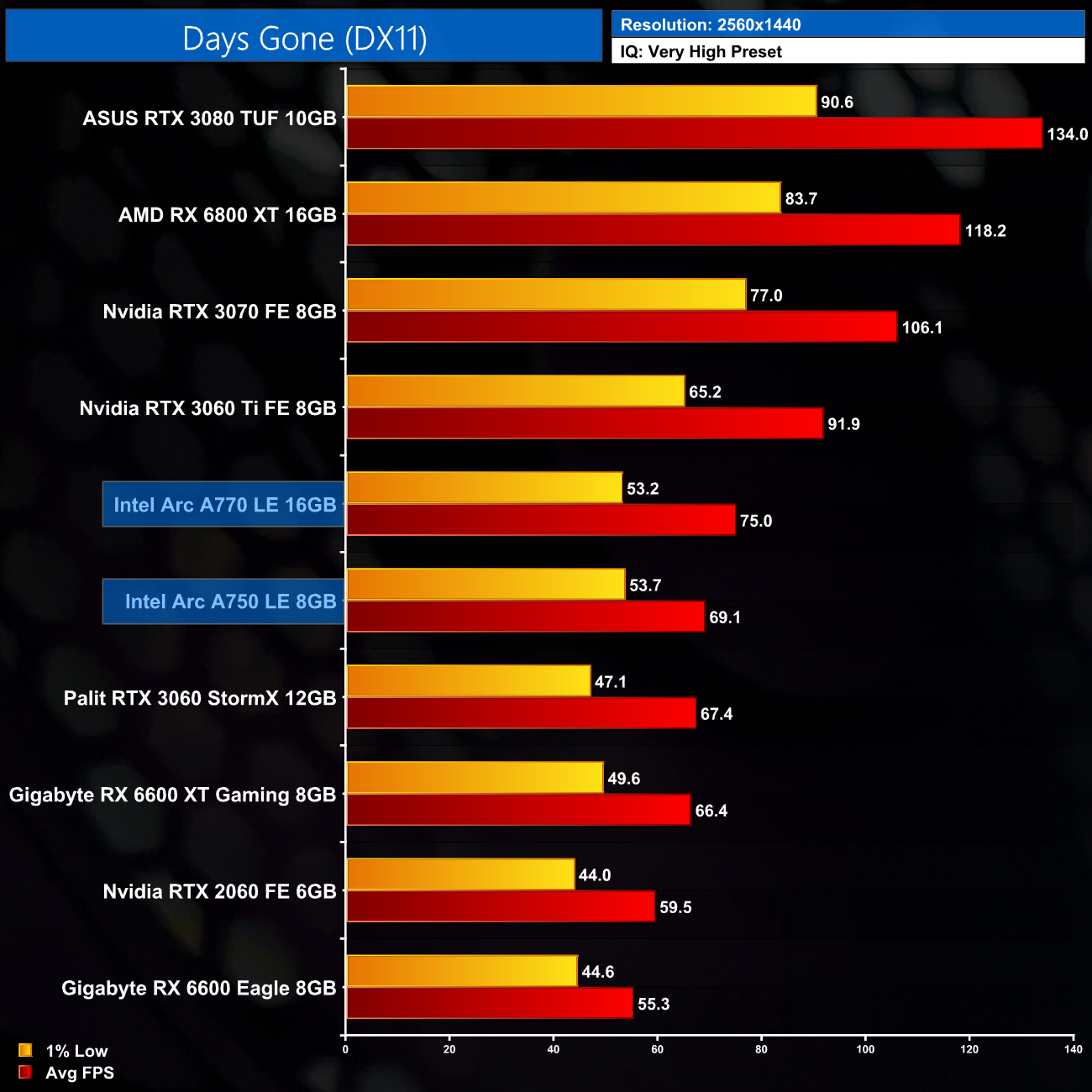

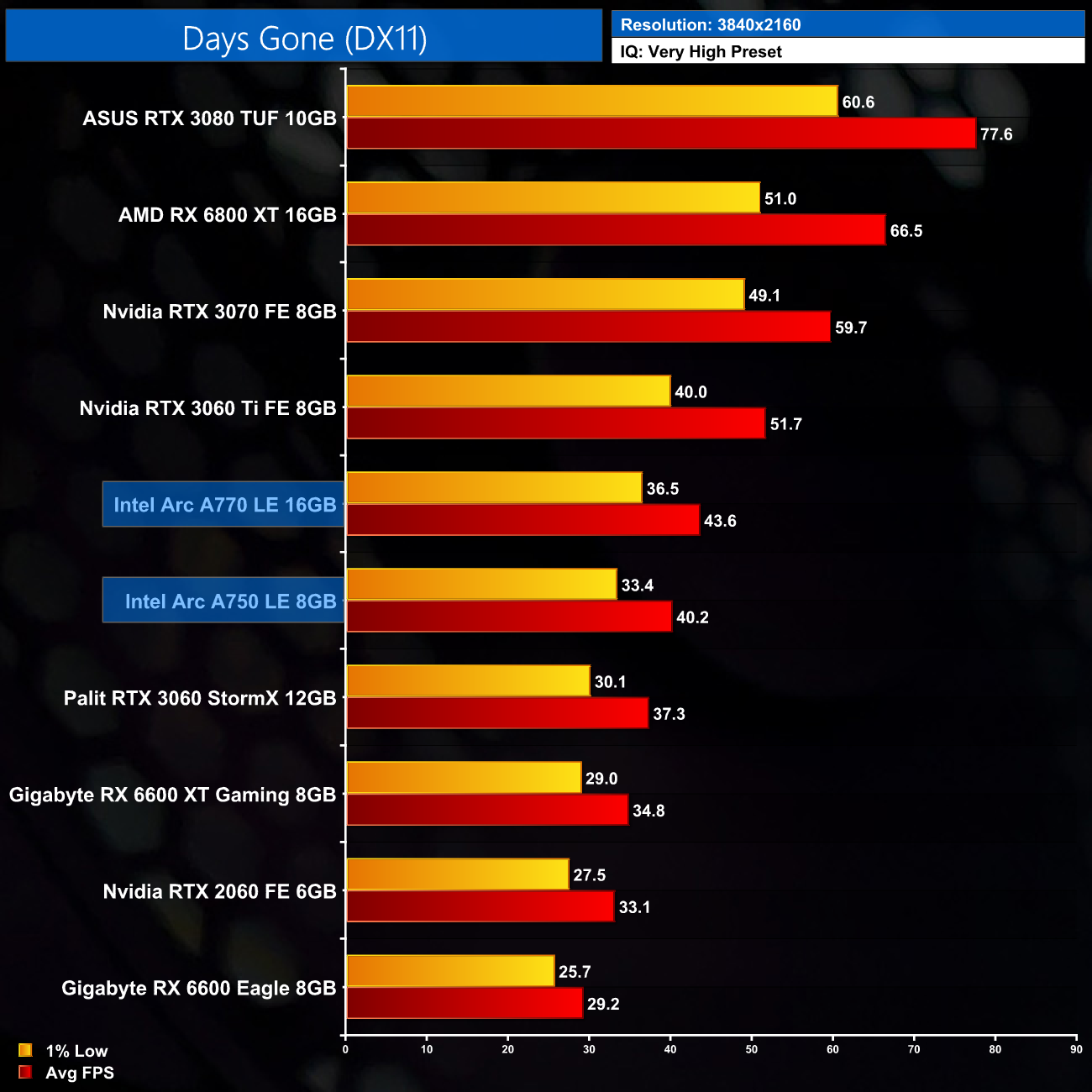

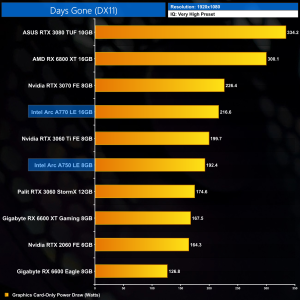

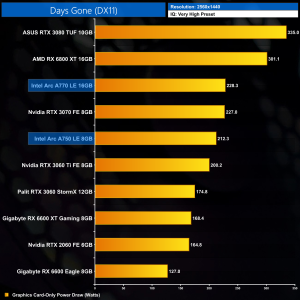

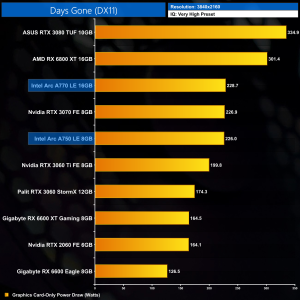

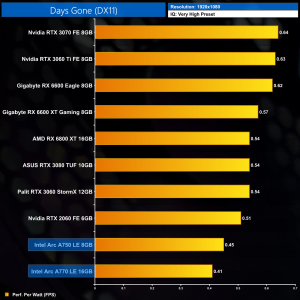

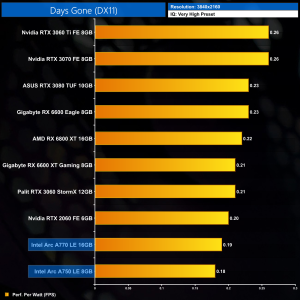

Days Gone is a 2019 action-adventure survival horror video game developed by Bend Studio and published by Sony Interactive Entertainment for the PlayStation 4 and Microsoft Windows. As part of Sony's efforts to bring more of its first-party content to Microsoft Windows following Horizon Zero Dawn, Days Gone released on Windows on May 18, 2021. (Wikipedia).

Engine: Unreal Engine 4. We test using the Very High preset, DX11 API.

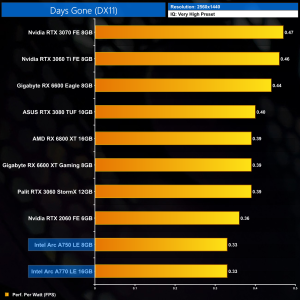

Just like Cyberpunk 2077, Days Gone exhibits awful frame times. The average frame rates are relatively strong, but the 1% lows make this a borderline unplayable experience. Once more things do improve at 1440p, and admittedly Days Gone is a DX11 title which Arc GPUs do struggle with (as we see in more detail later), but the behaviour is still very odd.

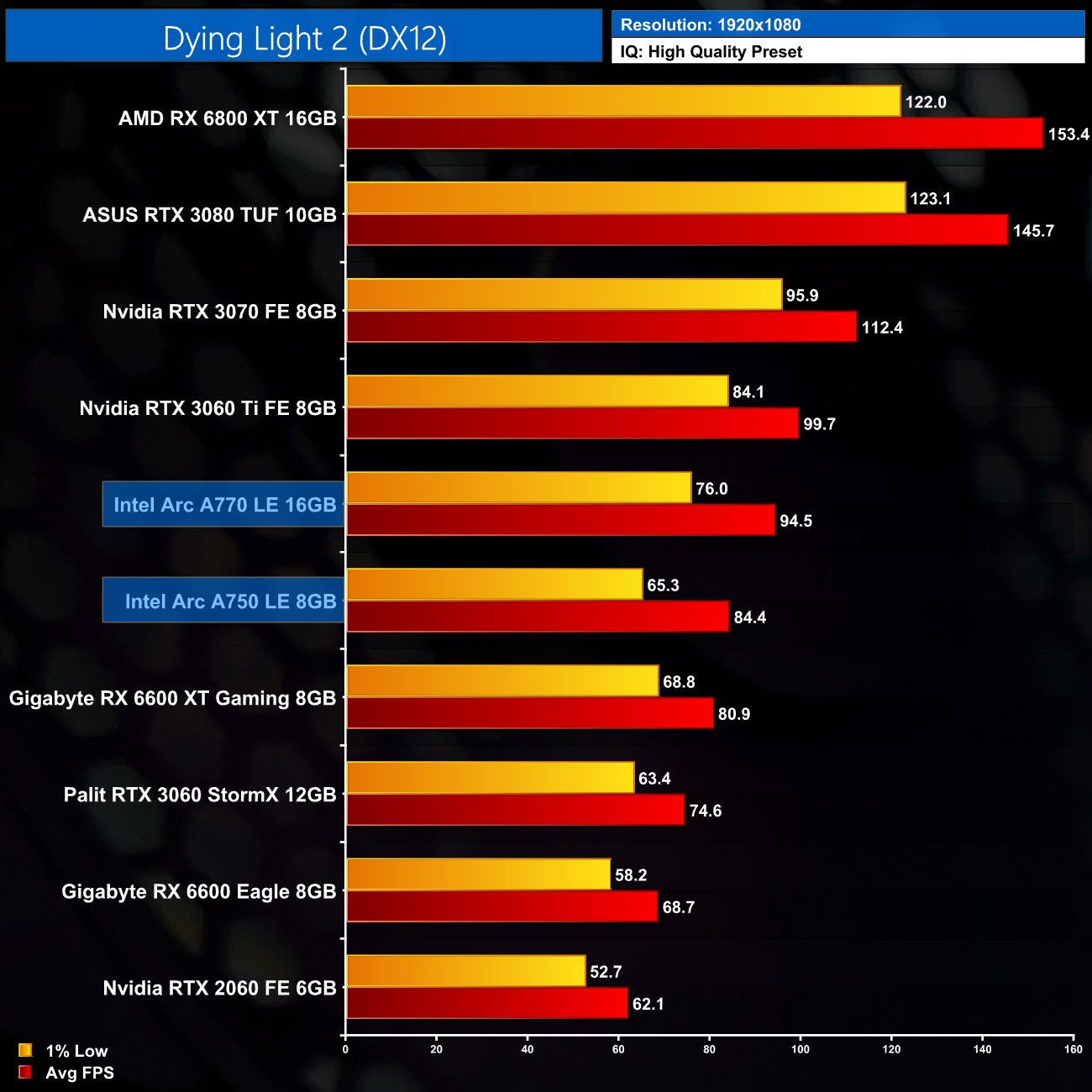

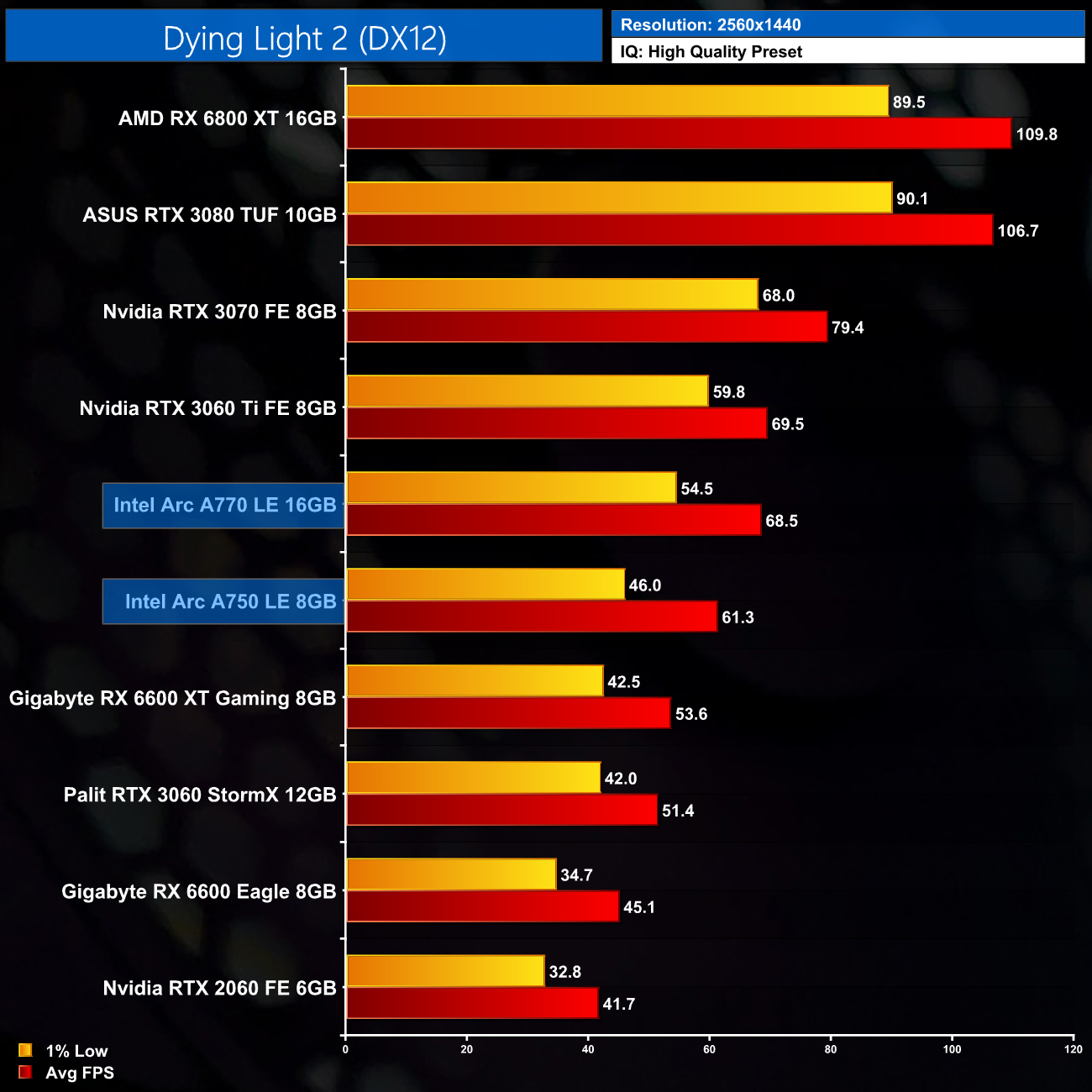

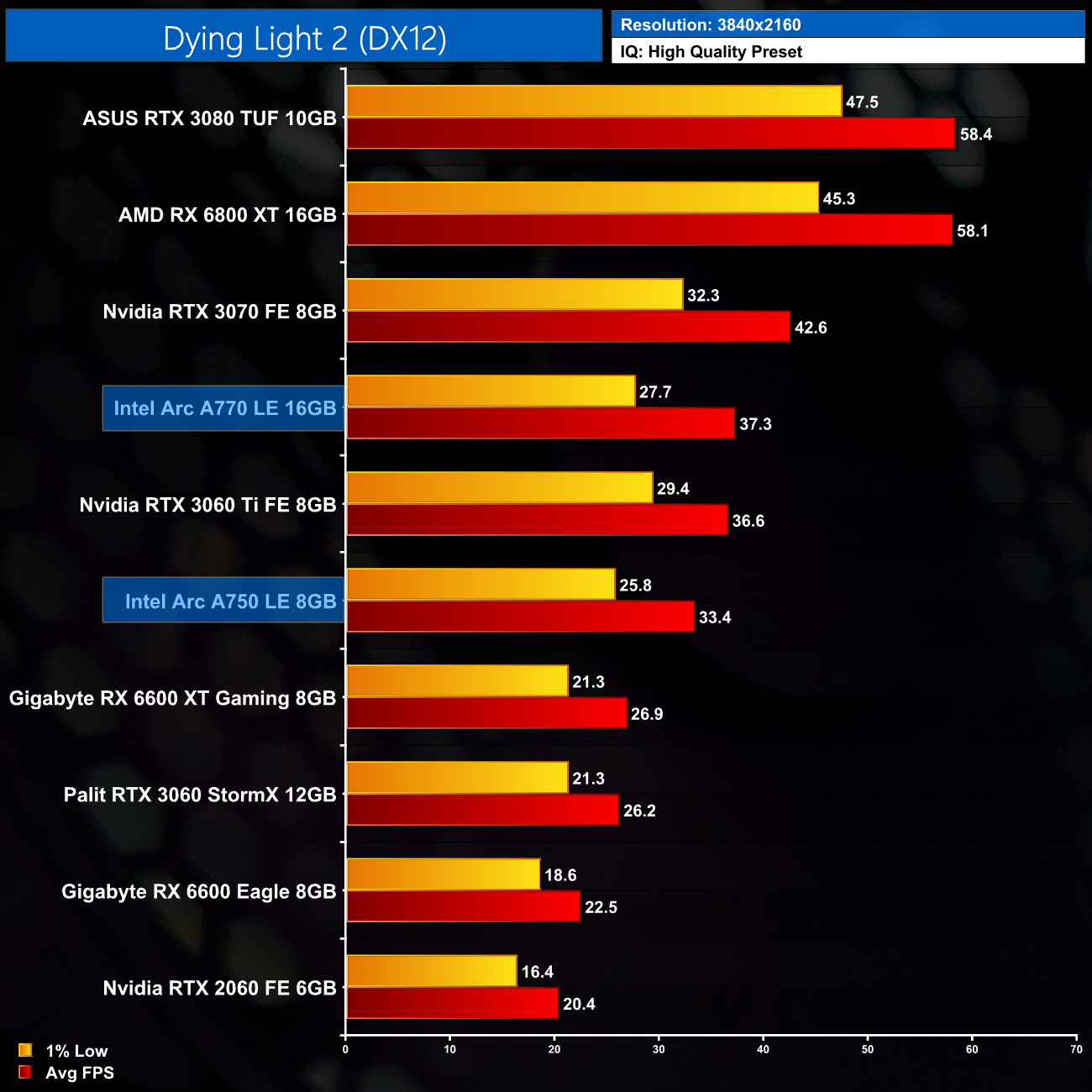

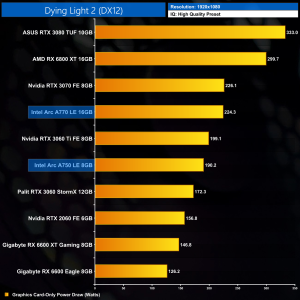

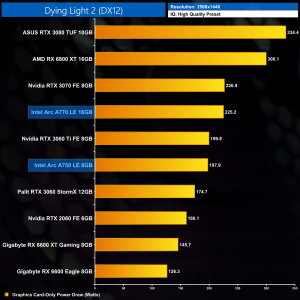

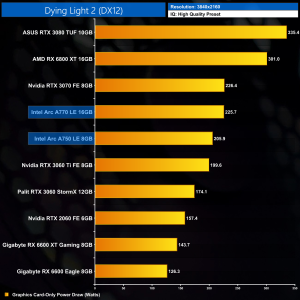

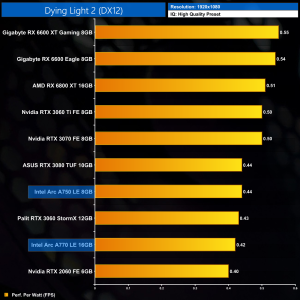

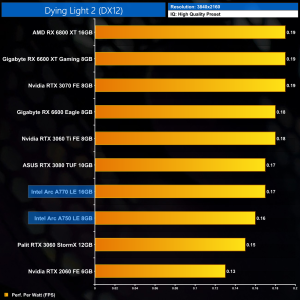

Dying Light 2: Stay Human is a 2022 action role-playing game developed and published by Techland. The sequel to Dying Light (2015), the game was released on February 4, 2022 for Microsoft Windows, PlayStation 4, PlayStation 5, Xbox One, and Xbox Series X/S. (Wikipedia).

Engine: C-Engine. We test using the High preset, DX12 API.

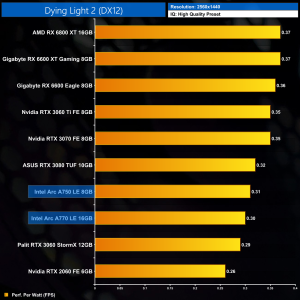

Dying Light 2 is one of the better performers for Intel Arc GPUs, and at 1080p the A750 is good for 84FPS on average, putting it 13% ahead of the RTX 3060 and 4% ahead of the RX 6600 XT. At 1440p it extends those margins further, coming in 19% faster than the 3060 and now 14% faster than the 6600 XT – very impressive stuff.
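The percentage leads quoted throughout this review are simple ratios of average frame rates. As a small sketch (the helper names are ours, and the back-calculated figures are only approximate because the published 84FPS and the percentage leads are rounded), the 1080p numbers above imply roughly the following averages for the competing cards:

```python
def lead_percent(card_fps: float, rival_fps: float) -> float:
    """How much faster card_fps is than rival_fps, as a percentage."""
    return (card_fps / rival_fps - 1.0) * 100.0


def implied_rival_fps(card_fps: float, lead_pct: float) -> float:
    """Invert lead_percent: the rival frame rate implied by a stated lead."""
    return card_fps / (1.0 + lead_pct / 100.0)


a750_1080p = 84.0  # Dying Light 2, 1080p average quoted above

rtx_3060 = implied_rival_fps(a750_1080p, 13.0)   # A750 is 13% ahead
rx_6600_xt = implied_rival_fps(a750_1080p, 4.0)  # A750 is 4% ahead

print(f"{rtx_3060:.1f}")    # 74.3
print(f"{rx_6600_xt:.1f}")  # 80.8
```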

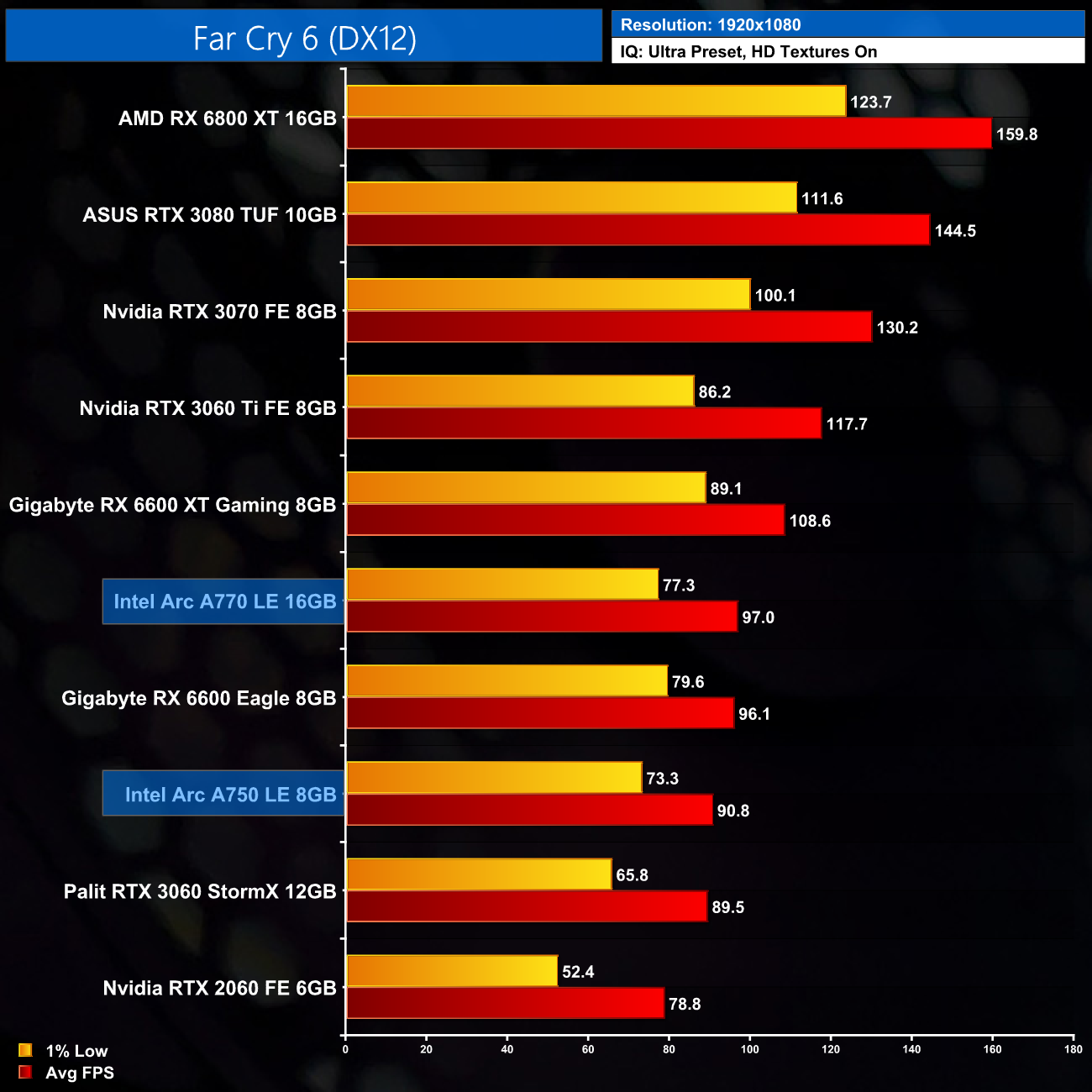

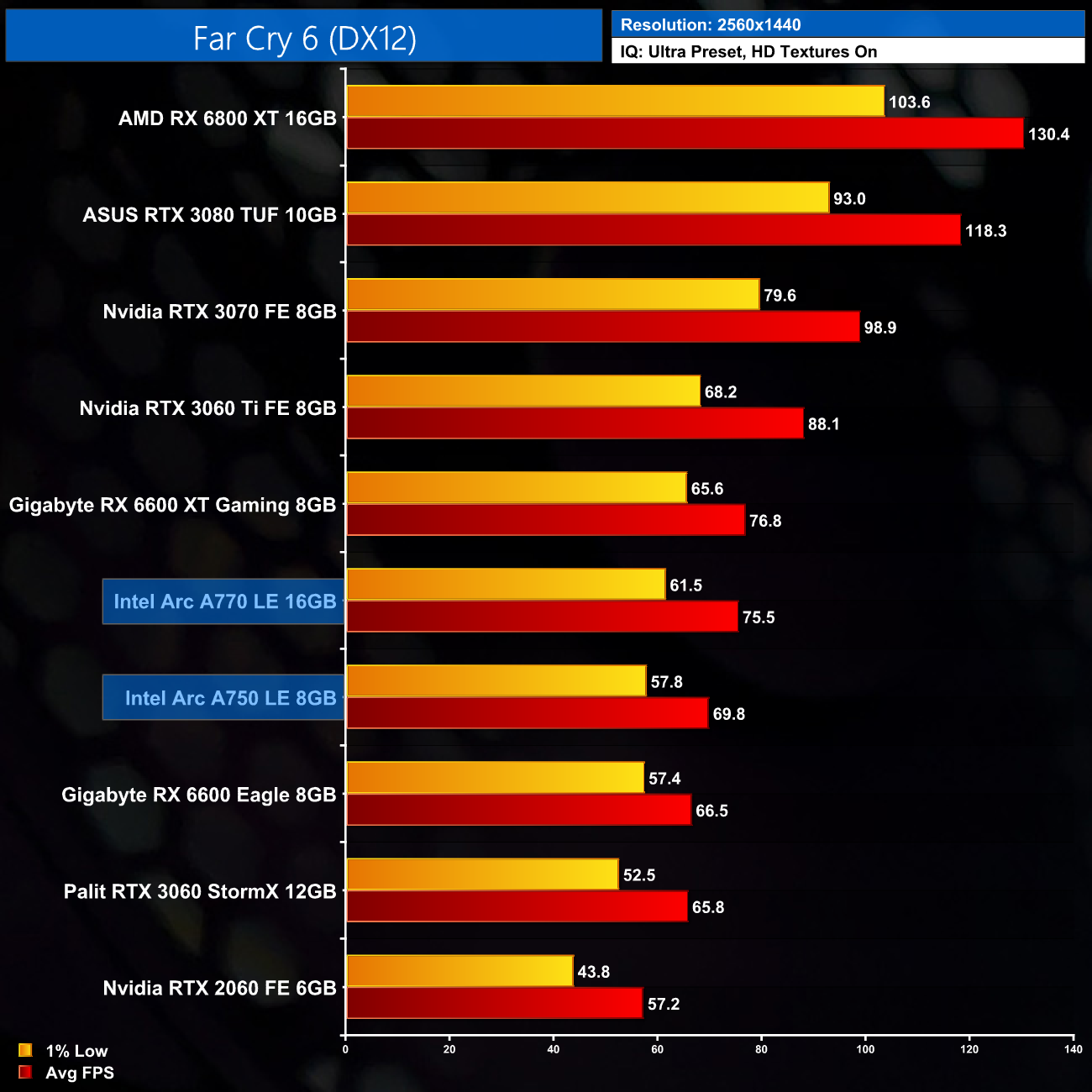

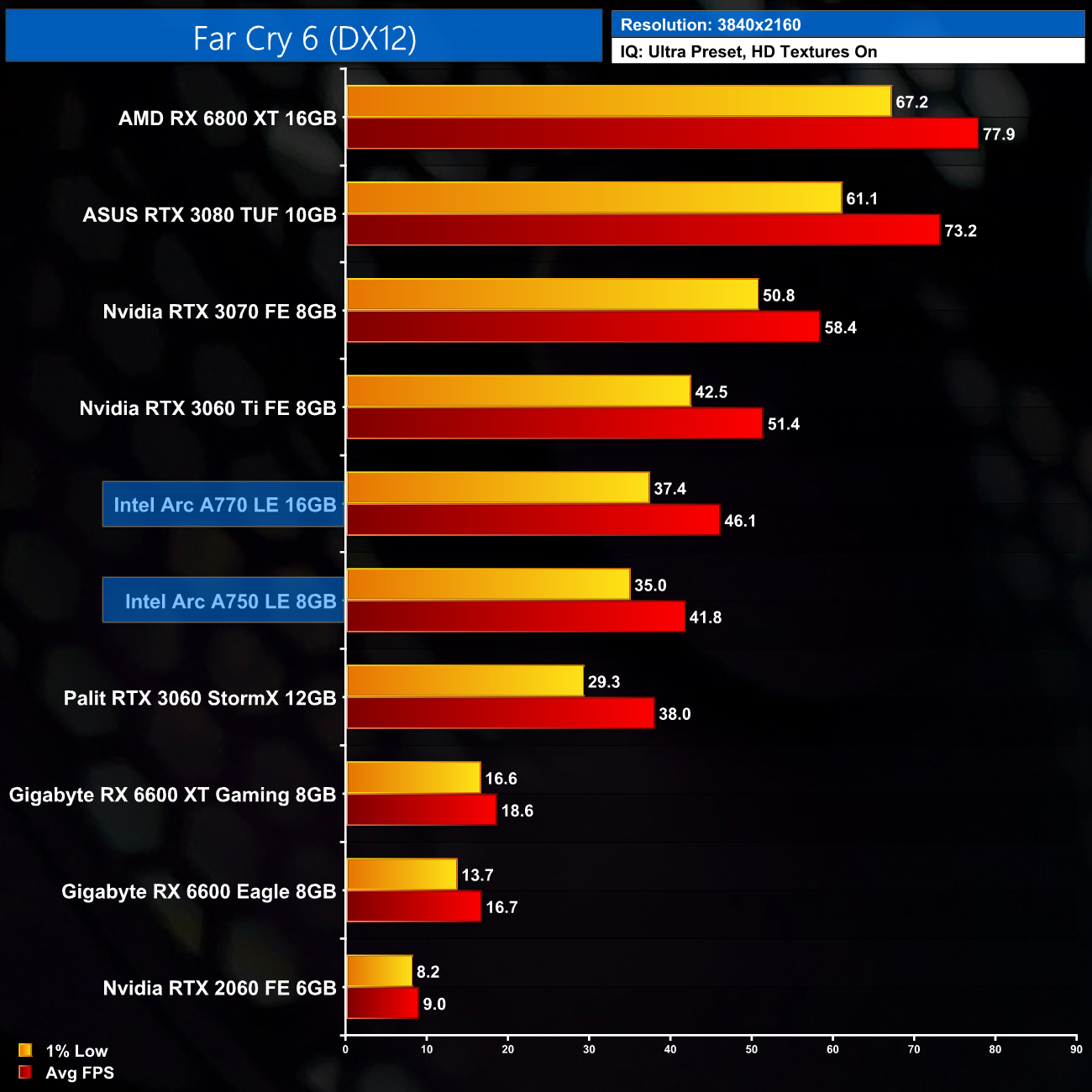

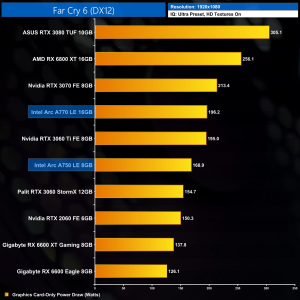

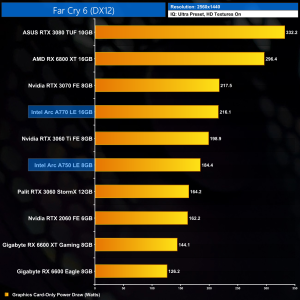

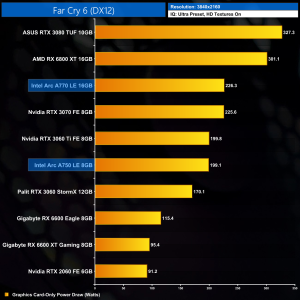

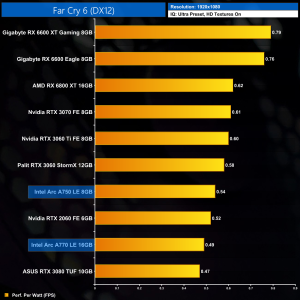

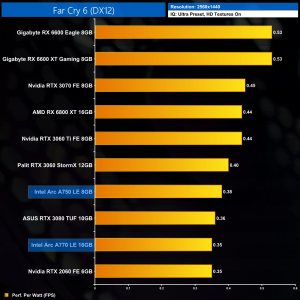

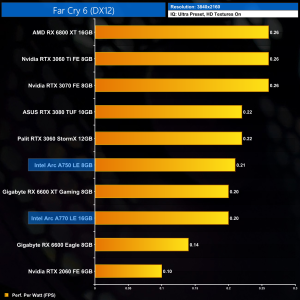

Far Cry 6 is a 2021 action-adventure first-person shooter game developed by Ubisoft Toronto and published by Ubisoft. It is the sixth main installment in the Far Cry series and the successor to 2018's Far Cry 5. The game was released on October 7, 2021, for Microsoft Windows, PlayStation 4, PlayStation 5, Xbox One, Xbox Series X/S, Stadia, and Amazon Luna. (Wikipedia).

Engine: Dunia Engine. We test using the Ultra preset, HD Textures enabled, DX12 API.

The A750 does well in Far Cry 6, too. It just pips the RTX 3060 to the post at 1080p, only by a single frame, though it is slower than the RX 6600. At 1440p, the A750 stretches its legs slightly, however, with a 6% lead over the RTX 3060, while it's just 9% behind the RX 6600 XT.

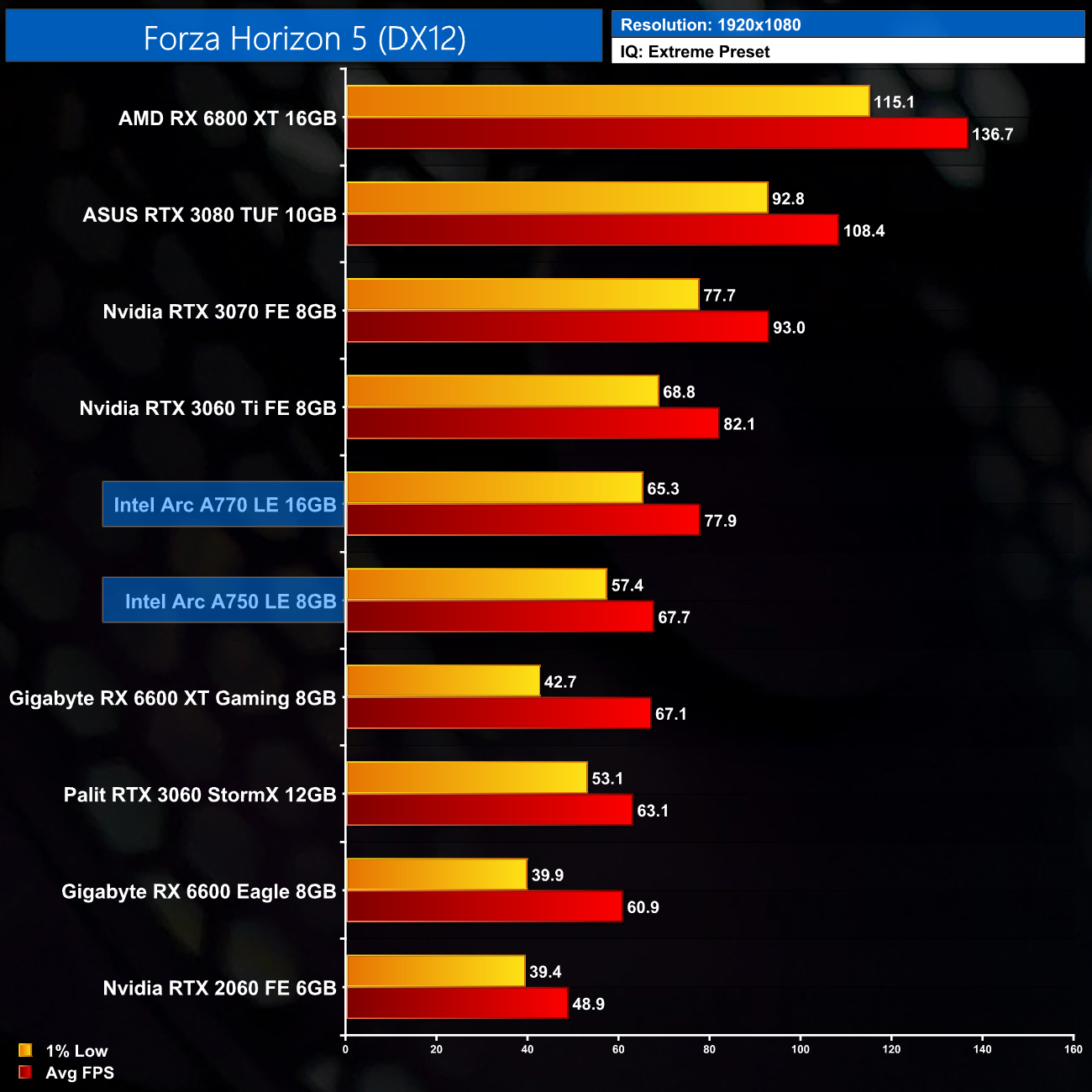

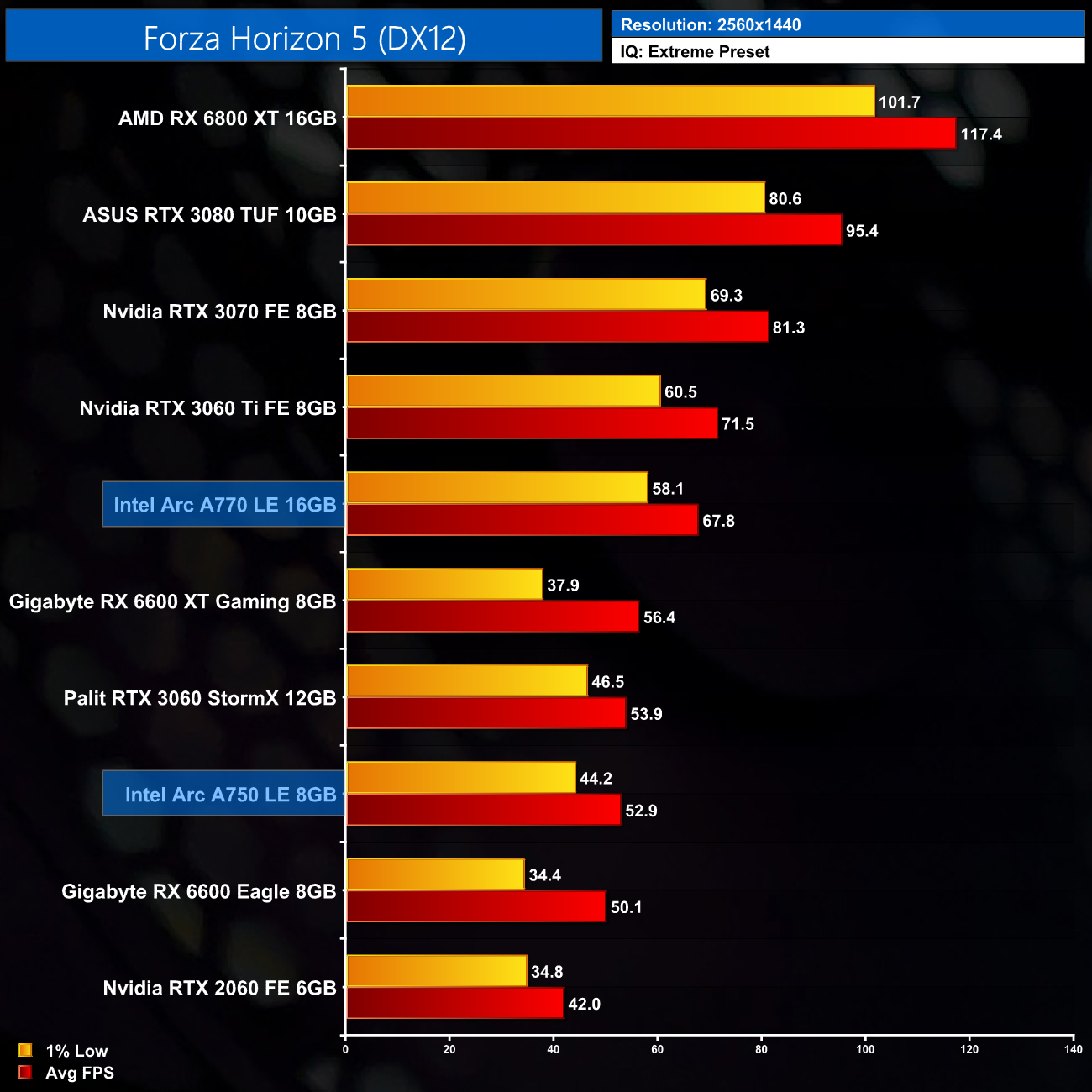

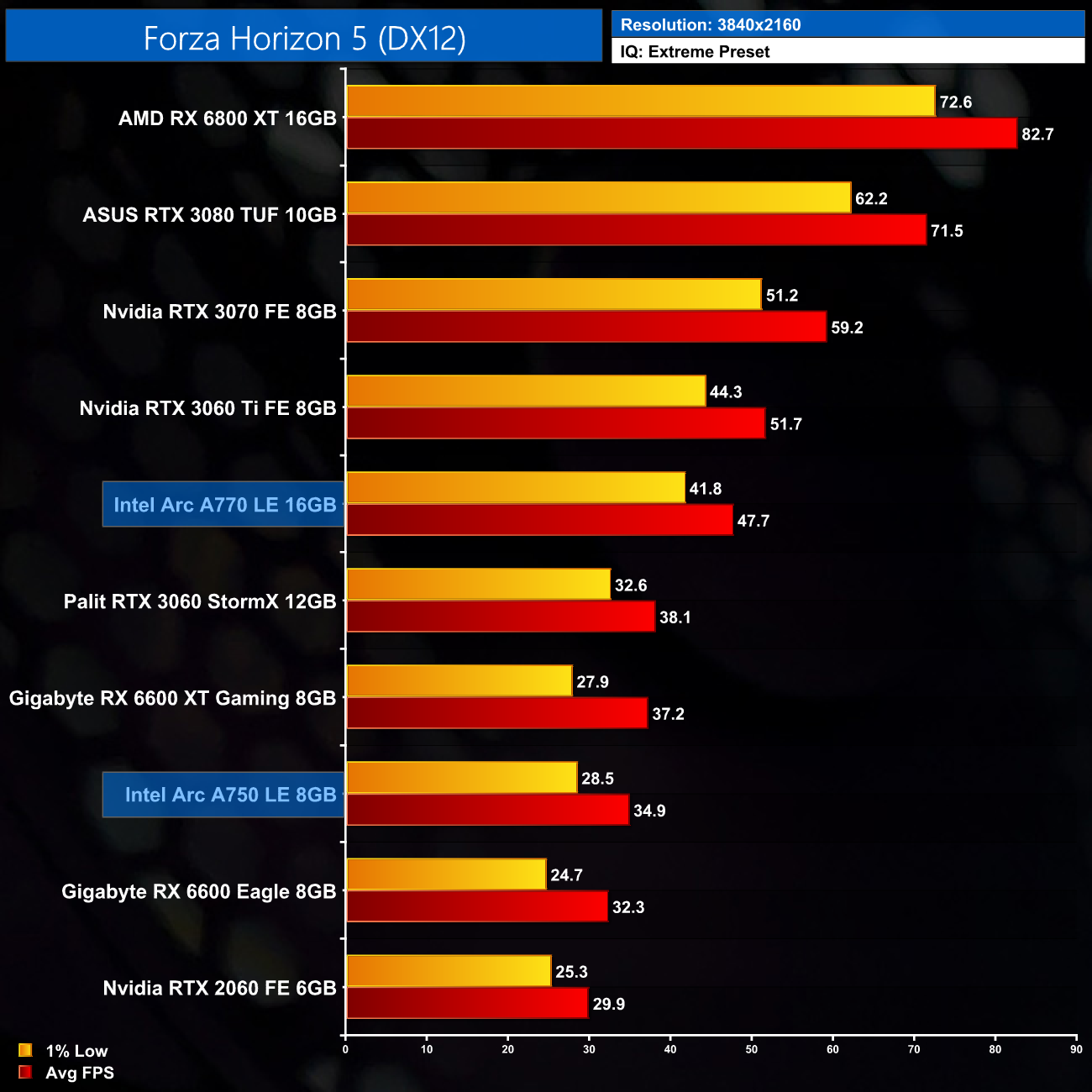

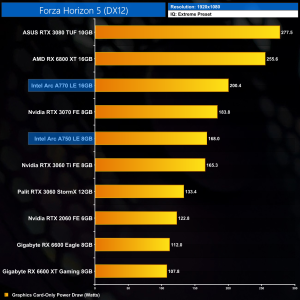

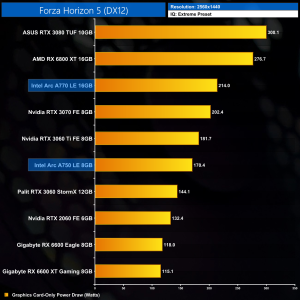

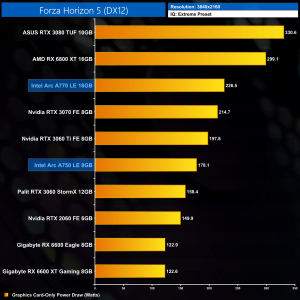

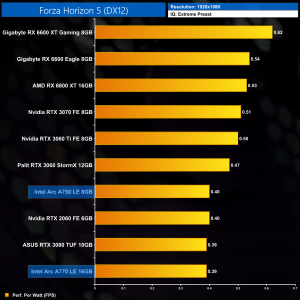

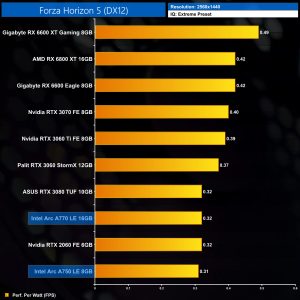

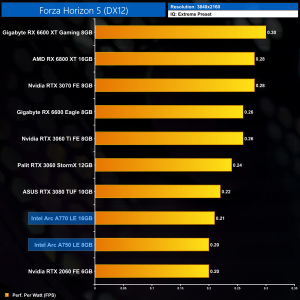

Forza Horizon 5 is a 2021 racing video game developed by Playground Games and published by Xbox Game Studios. The twelfth main instalment of the Forza series, the game is set in a fictionalised representation of Mexico. It was released on 9 November 2021 for Microsoft Windows, Xbox One, and Xbox Series X/S. (Wikipedia).

Engine: ForzaTech. We test using the Extreme preset, DX12 API.

Forza Horizon 5 is highly demanding using the Extreme preset and is particularly VRAM intensive even at 1080p. We can see the A750 fall 13% behind the A770 here – the biggest margin between the two we will see today – with VRAM a likely factor. At 1440p the A750 drops off even more and falls 22% behind the A770, so it does show there are at least some games which benefit from more than 8GB VRAM, even in this performance segment.

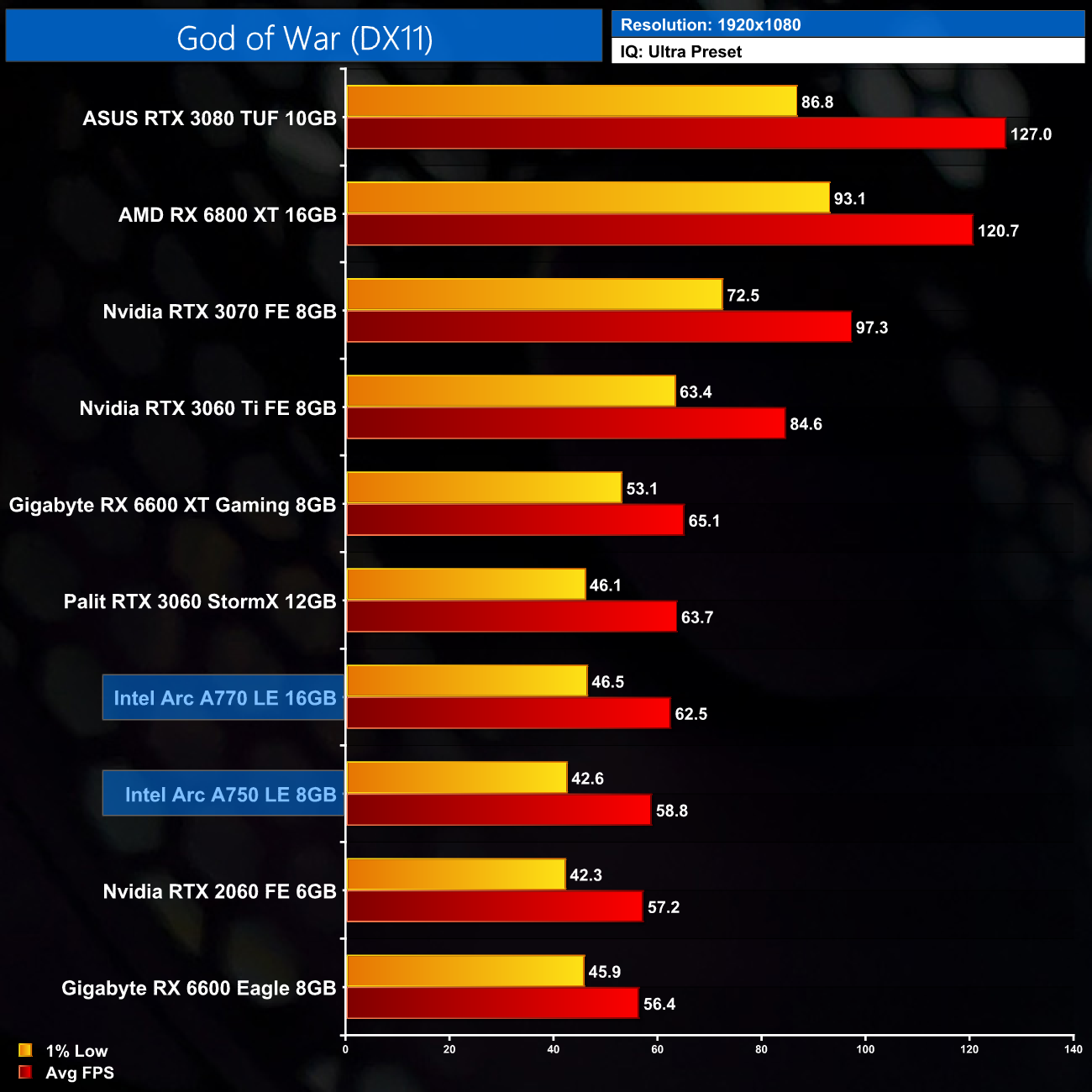

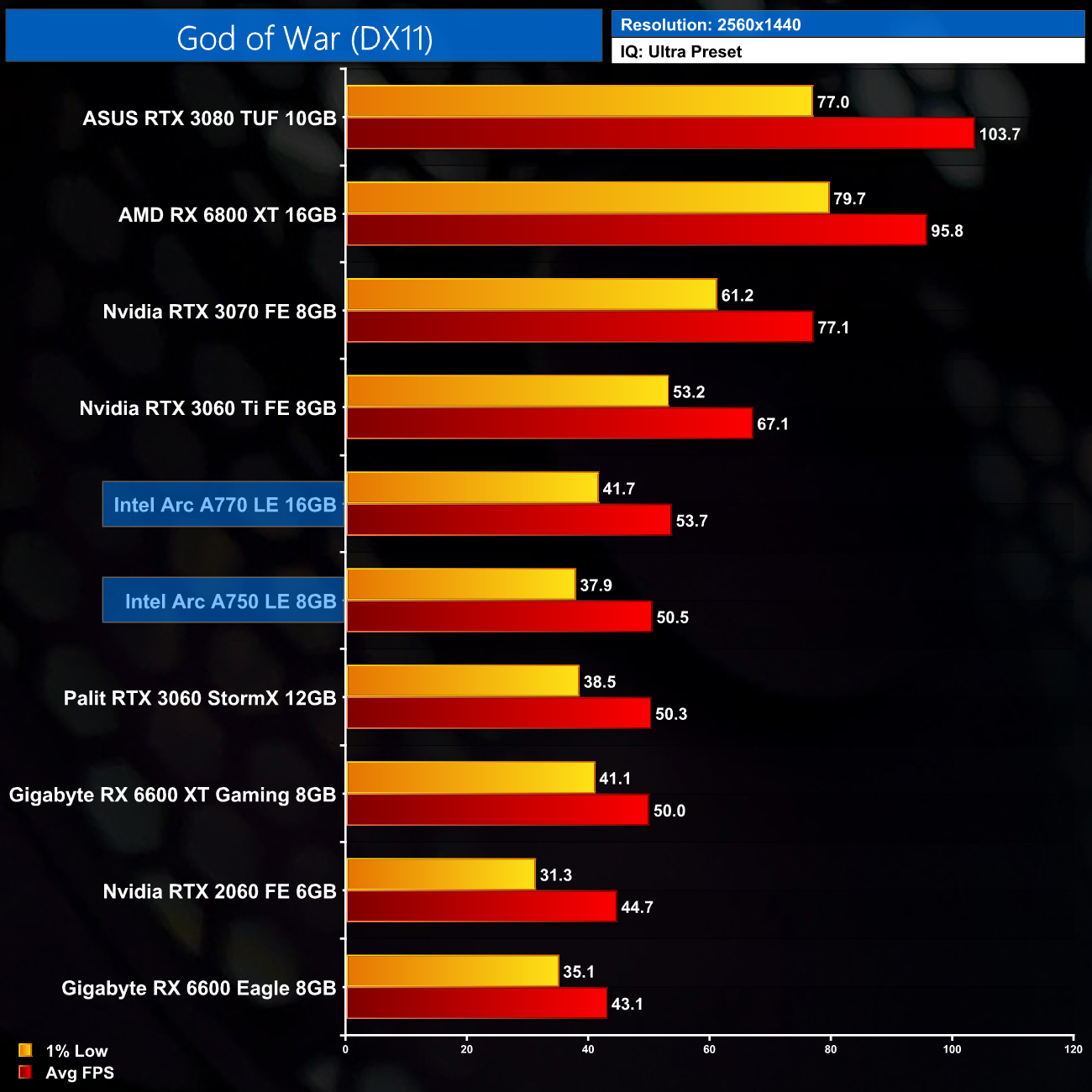

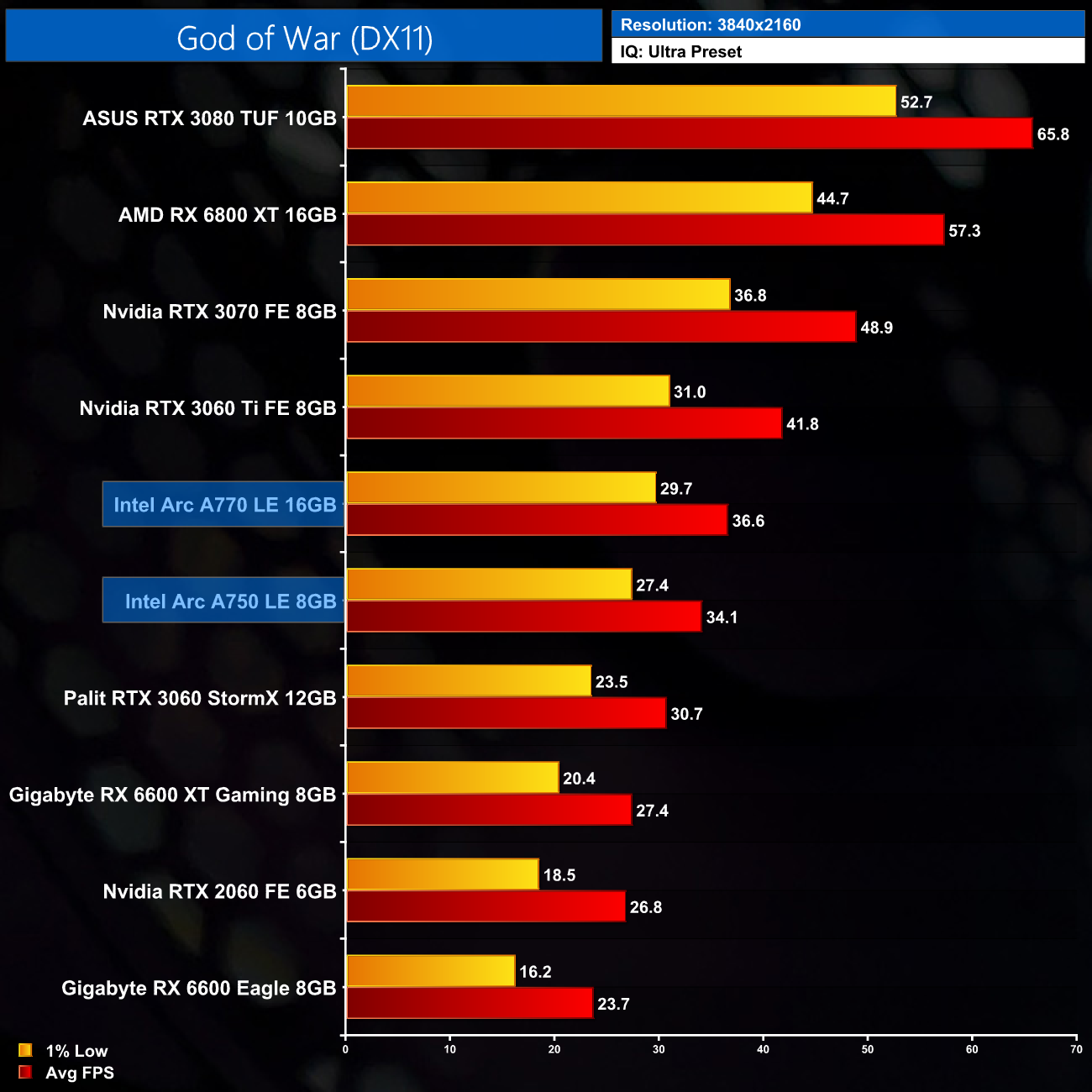

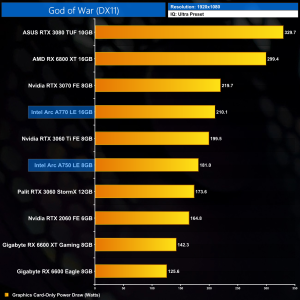

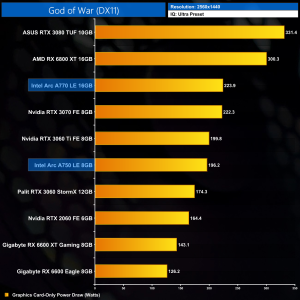

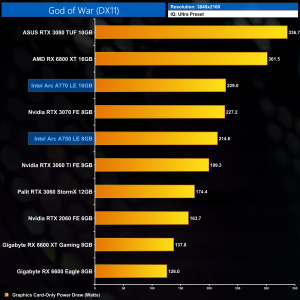

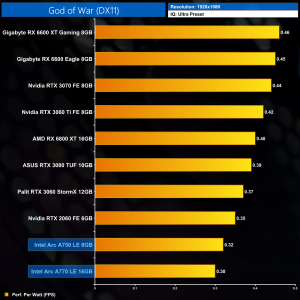

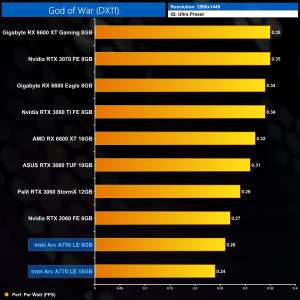

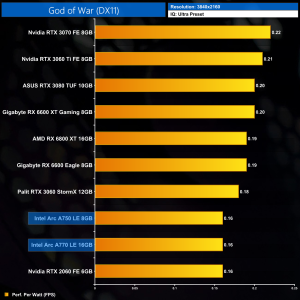

God of War is an action-adventure game developed by Santa Monica Studio and published by Sony Interactive Entertainment (SIE). It was released worldwide on April 20, 2018, for the PlayStation 4 with a Microsoft Windows version released on January 14, 2022. (Wikipedia).

Engine: Sony Santa Monica Proprietary. We test using the Ultra preset, DX11 API.

As a DX11 title, I was expecting worse performance from God of War, but things aren't too bad for the A750. It's only just faster than the RTX 2060 at 1080p, so it's hardly wonderful, but it could be a lot worse. As a major DX11 title released this year, I would imagine the Intel software team has optimised the driver for God of War, at least to a greater extent than some of the other examples that we will see later in the review.

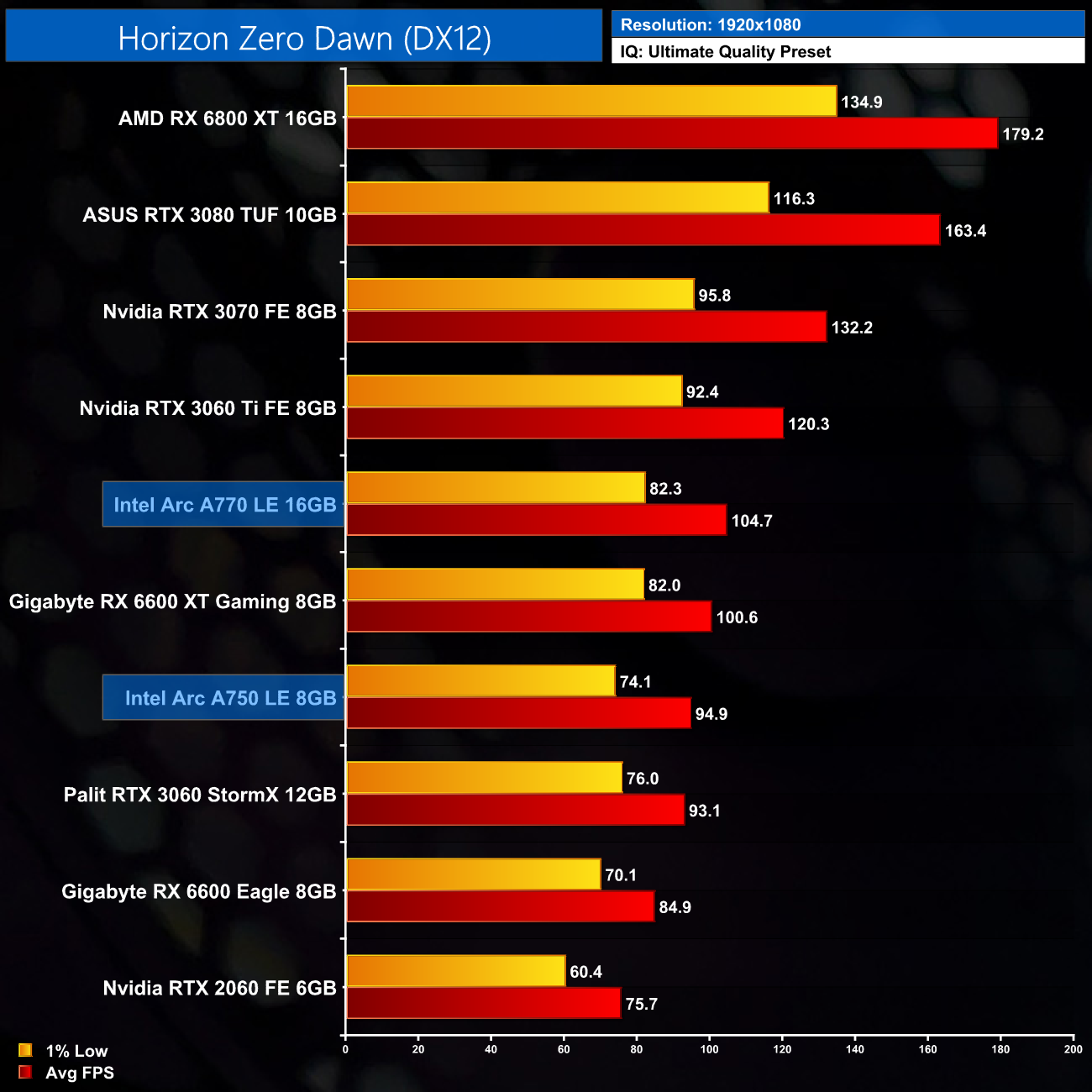

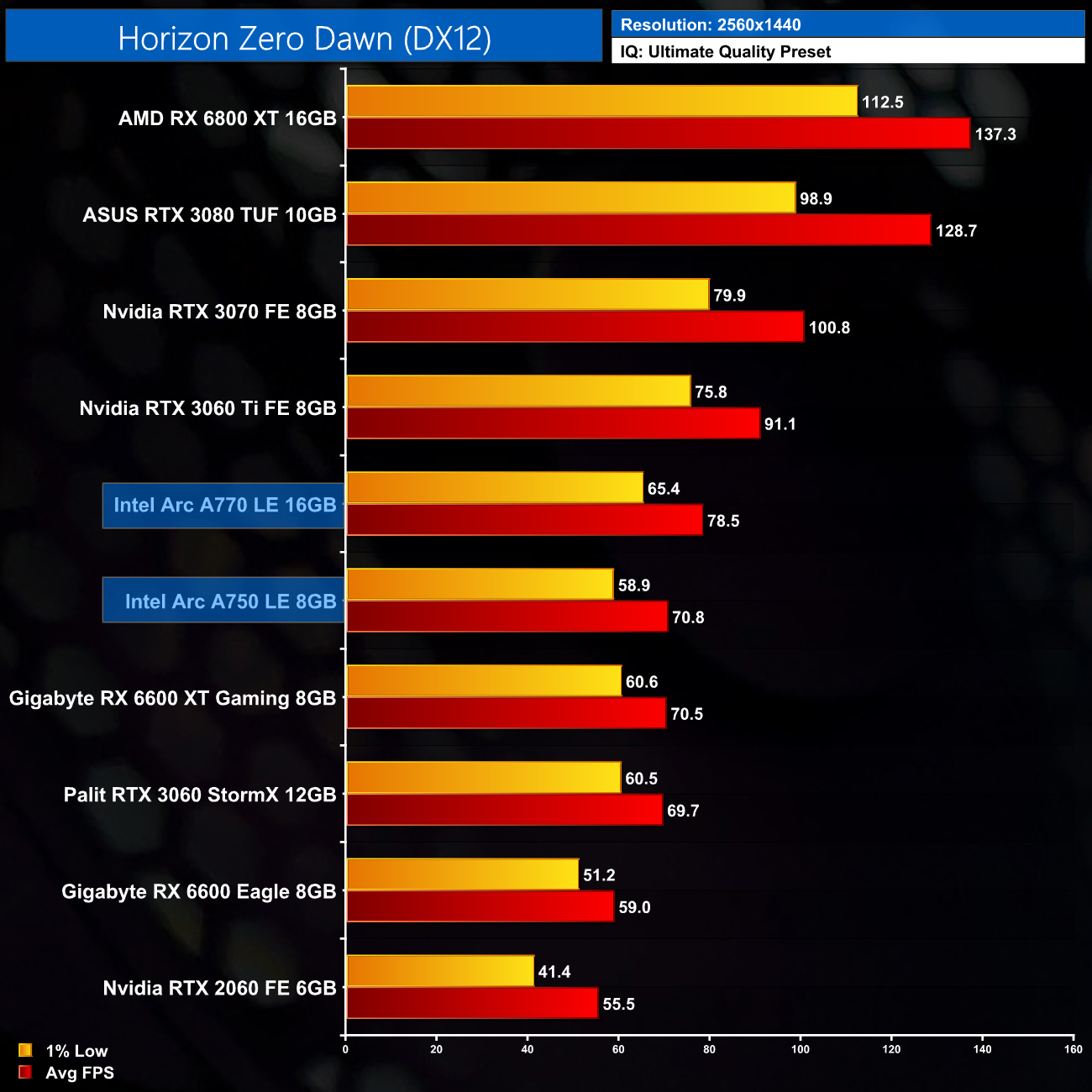

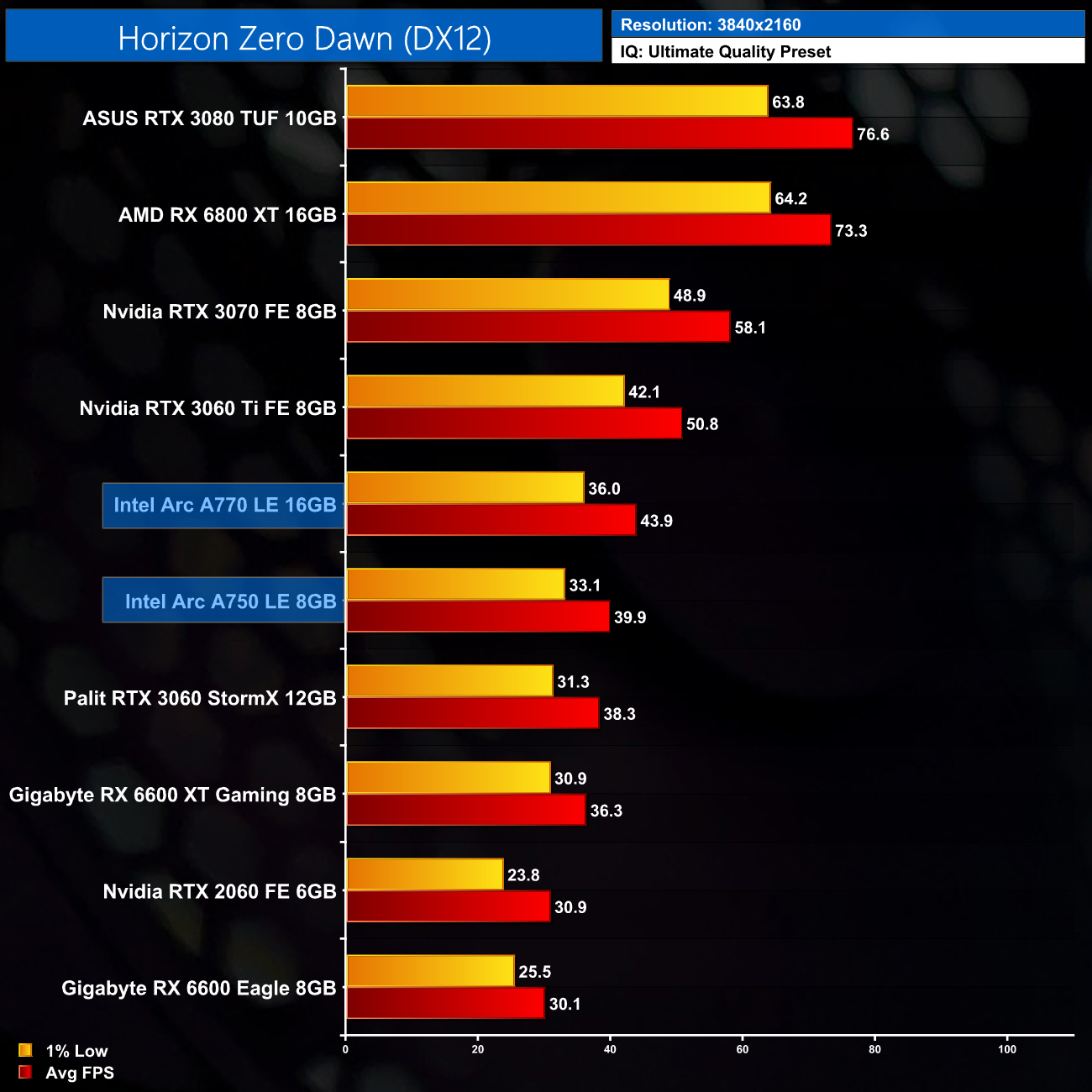

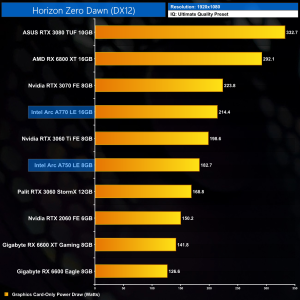

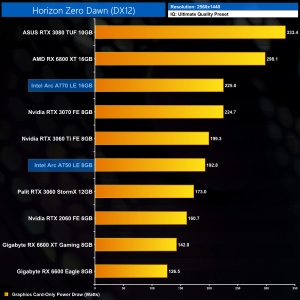

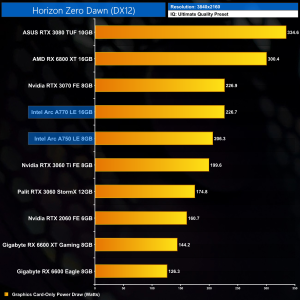

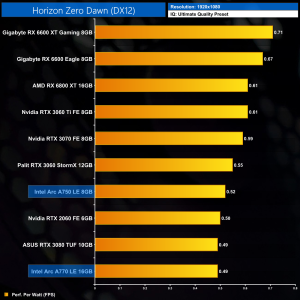

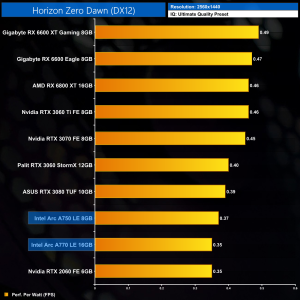

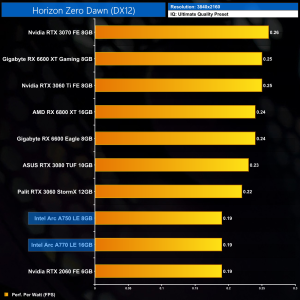

Horizon Zero Dawn is an action role-playing game developed by Guerrilla Games and published by Sony Interactive Entertainment. The plot follows Aloy, a hunter in a world overrun by machines, who sets out to uncover her past. It was released for the PlayStation 4 in 2017 and Microsoft Windows in 2020. (Wikipedia).

Engine: Decima. We test using the Ultimate Quality preset, DX12 API.

Horizon Zero Dawn is another competitive title for the A750. It closely matches the RTX 3060 at both 1080p and 1440p, while it is only 6% behind the RX 6600 XT at the former resolution, before drawing level at 1440p.

Marvel's Spider-Man Remastered is a 2018 action-adventure game developed by Insomniac Games and published by Sony Interactive Entertainment. A remastered version of Marvel's Spider-Man, featuring all previously released downloadable content, was released for the PlayStation 5 in November 2020 and for Microsoft Windows in August 2022. (Wikipedia).

Engine: Insomniac Games Proprietary. We test using the Very High preset, DX12 API.

Marvel's Spider-Man Remastered is – or would be – a new addition to our test suite, but currently exhibits a game-breaking lighting issue on Intel Arc GPUs, as shown in our video.

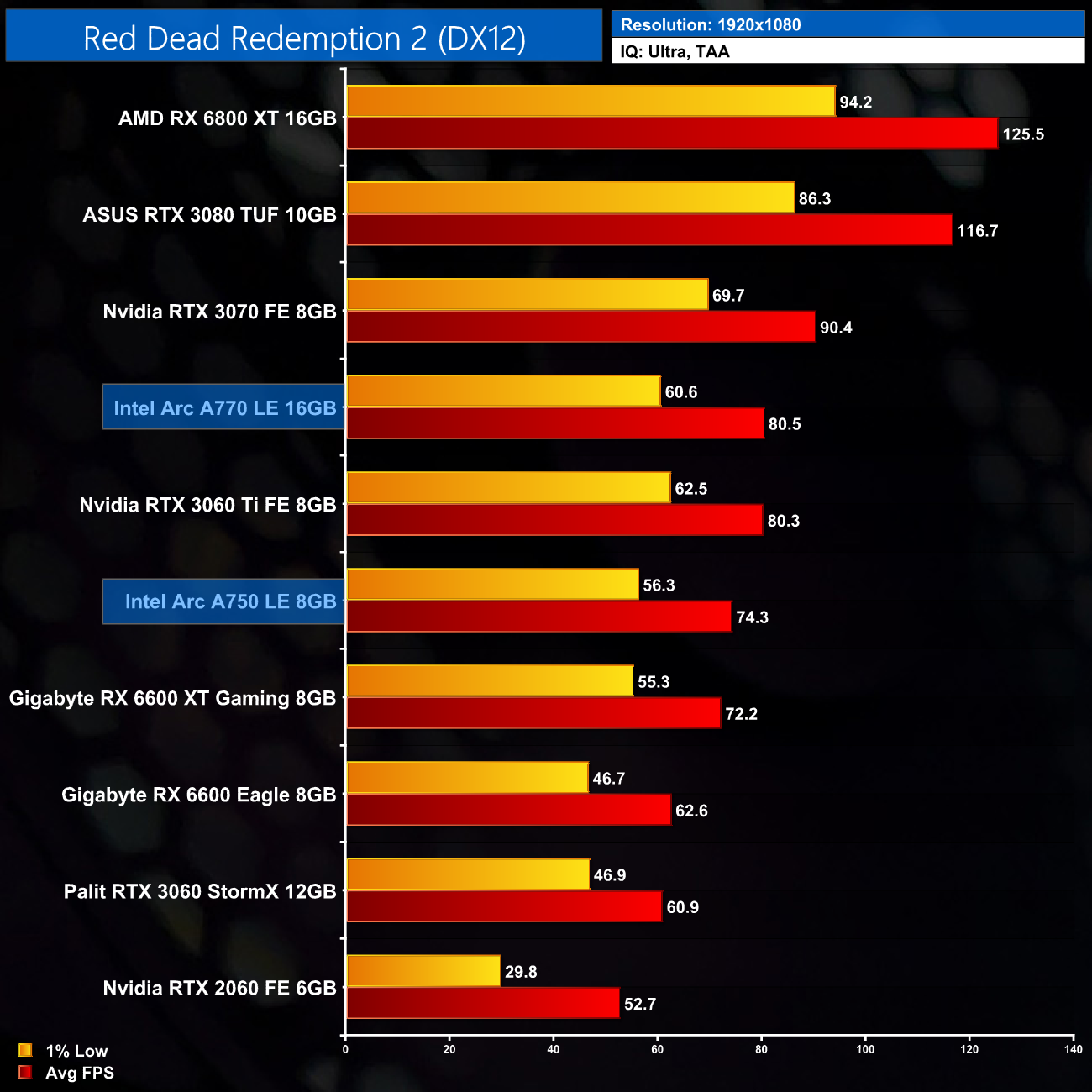

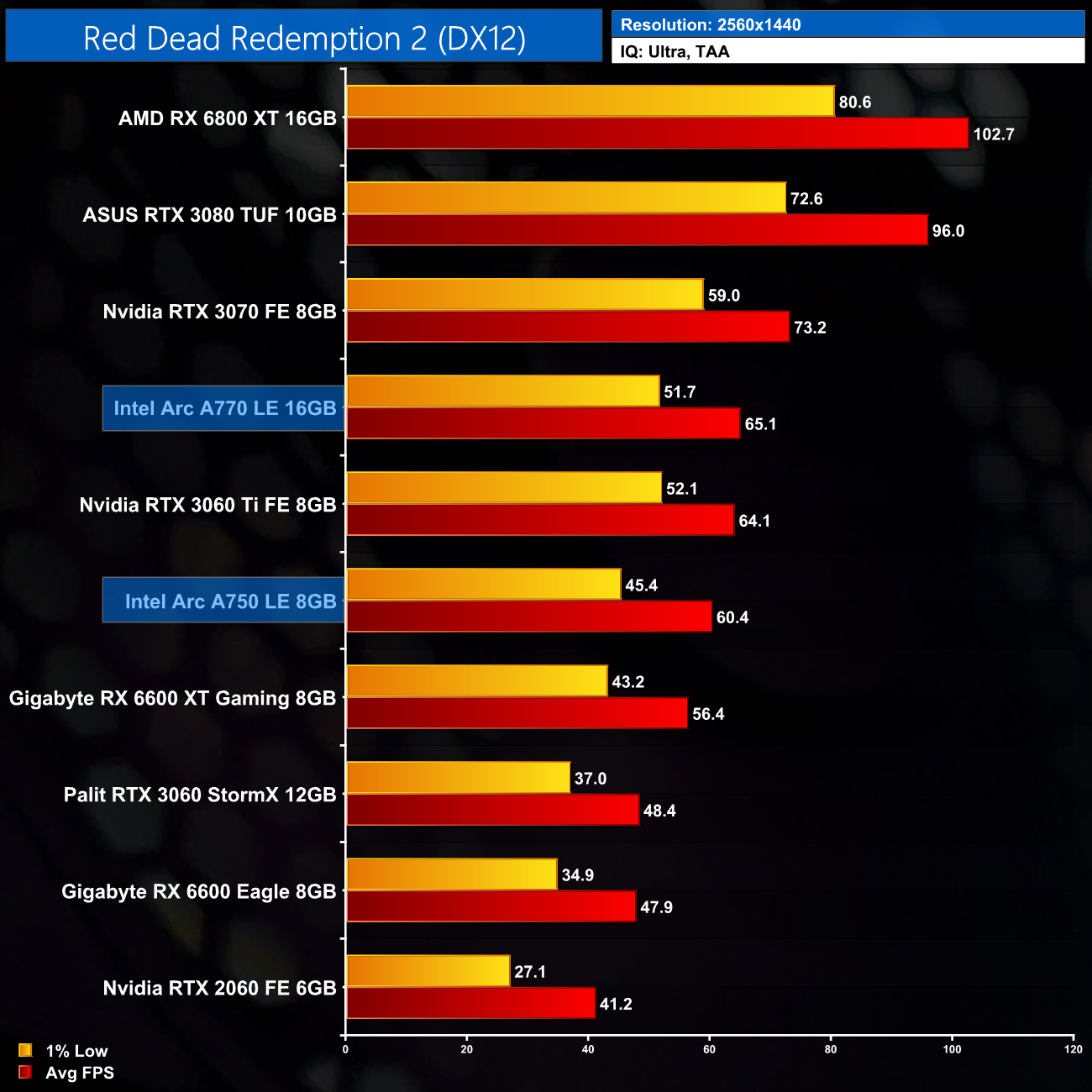

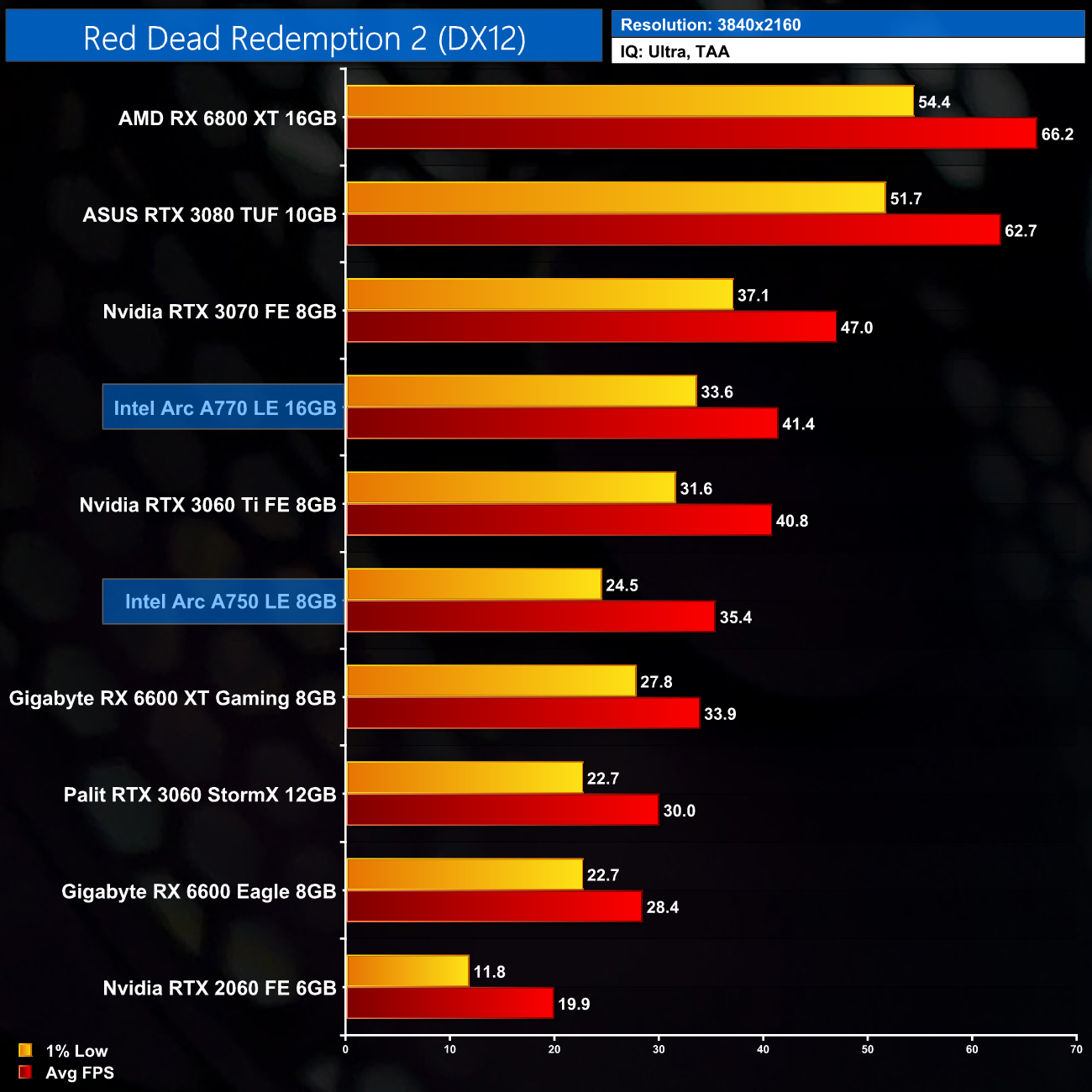

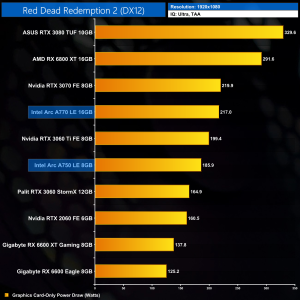

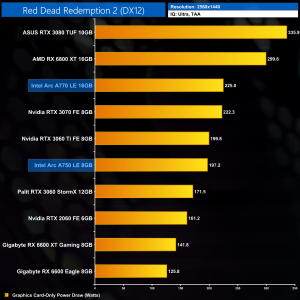

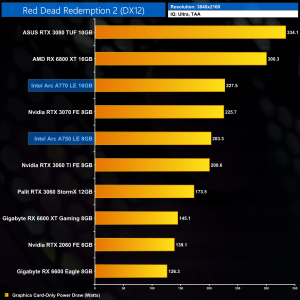

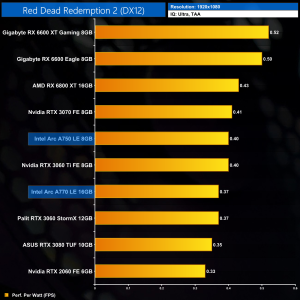

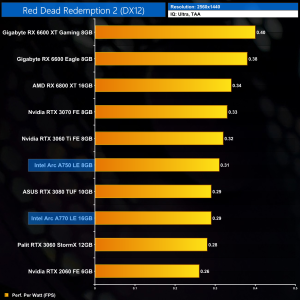

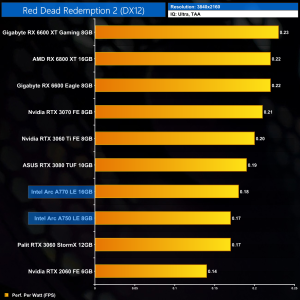

Red Dead Redemption 2 is a 2018 action-adventure game developed and published by Rockstar Games. The game is the third entry in the Red Dead series and is a prequel to the 2010 game Red Dead Redemption. Red Dead Redemption 2 was released for the PlayStation 4 and Xbox One in October 2018, and for Microsoft Windows and Stadia in November 2019. (Wikipedia).

Engine: Rockstar Advance Game Engine (RAGE). We test by manually selecting Ultra settings (or High where Ultra is not available), TAA, DX12 API.

Red Dead Redemption 2 is the best-case scenario for Intel Arc, based on our testing. The A750 absolutely flies at 1080p, hitting 74FPS at Ultra settings, making it 22% faster than the RTX 3060 while it's not far off the RTX 3060 Ti! At 1440p, it increases its lead over the 3060 to 25%, and it's 7% faster than the RX 6600 XT as well.

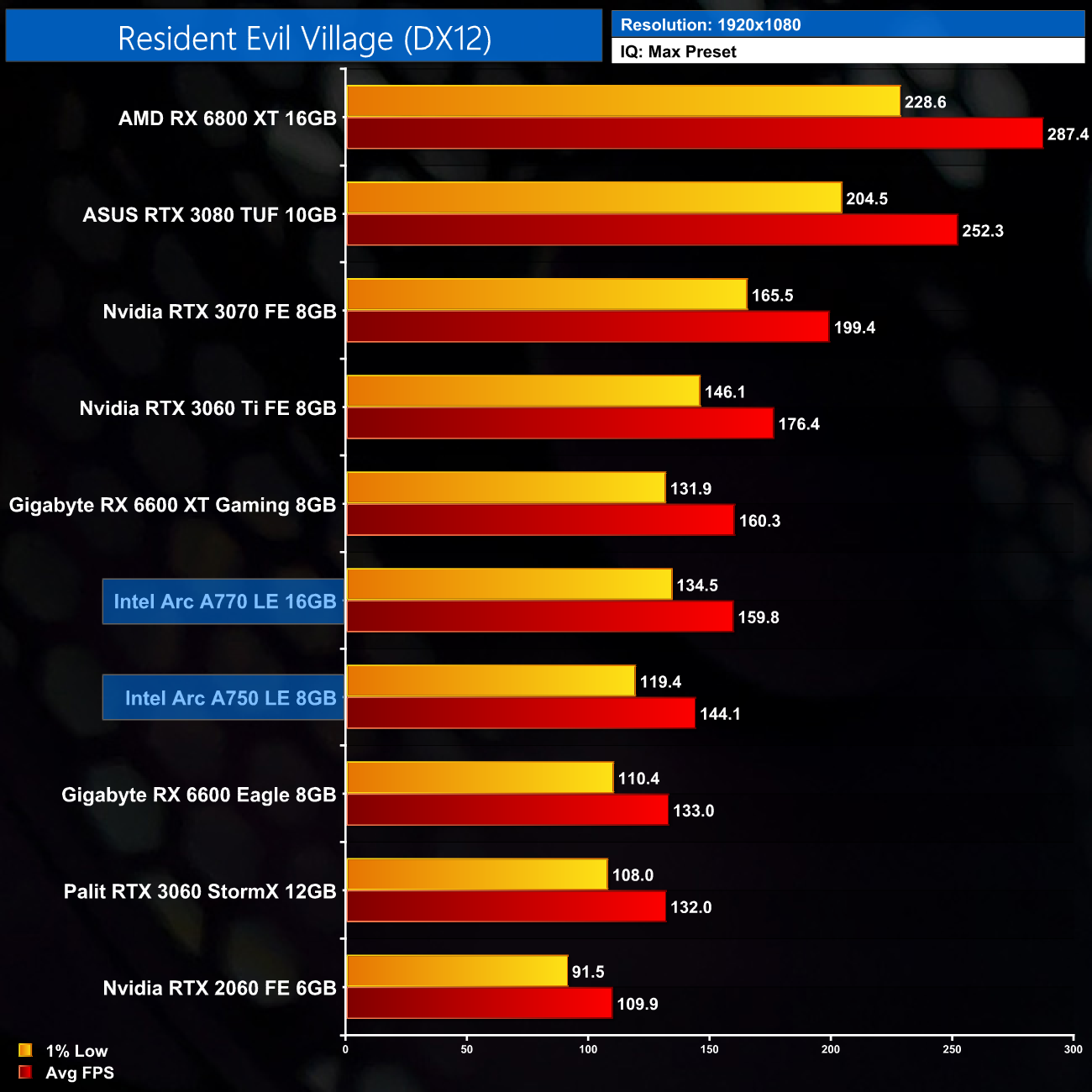

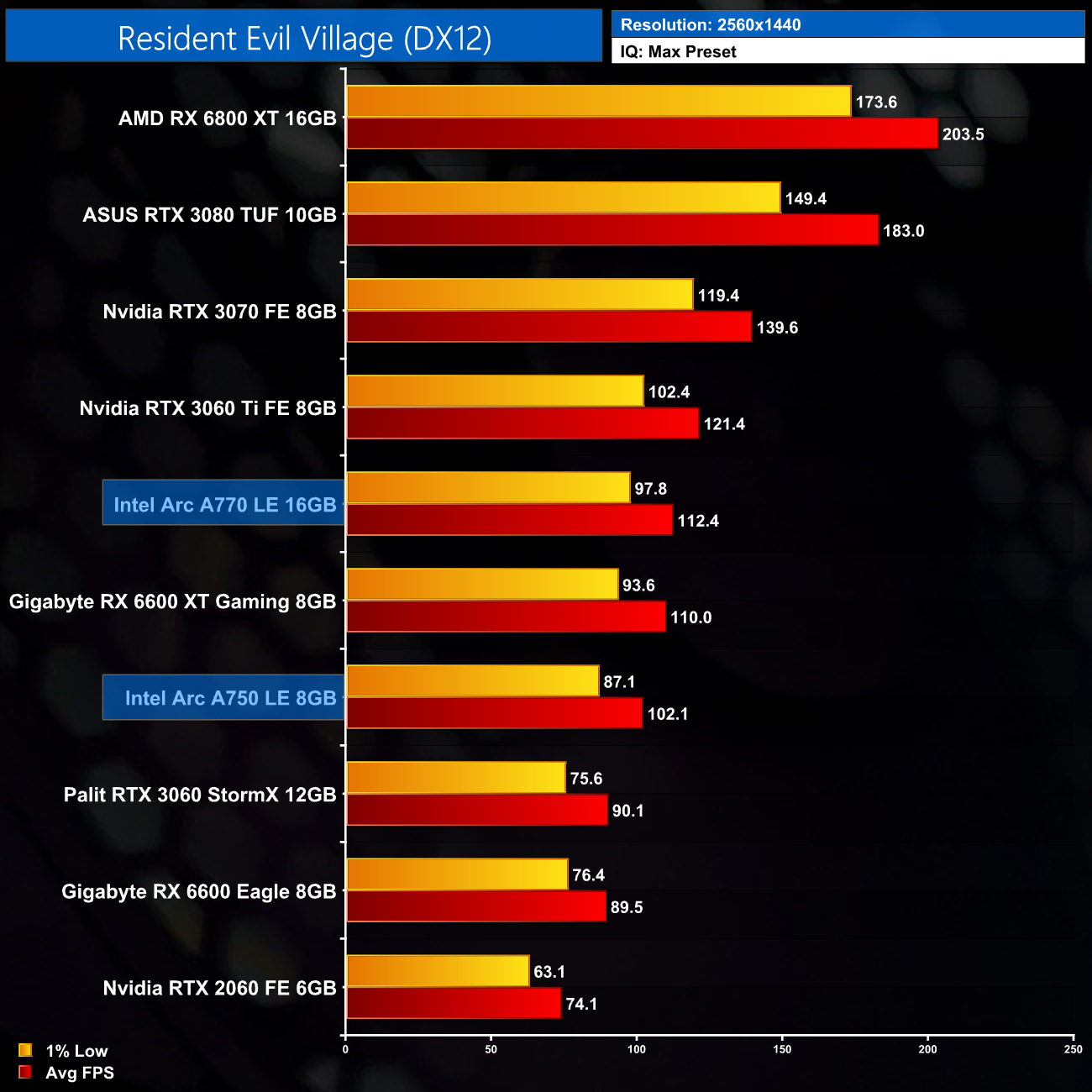

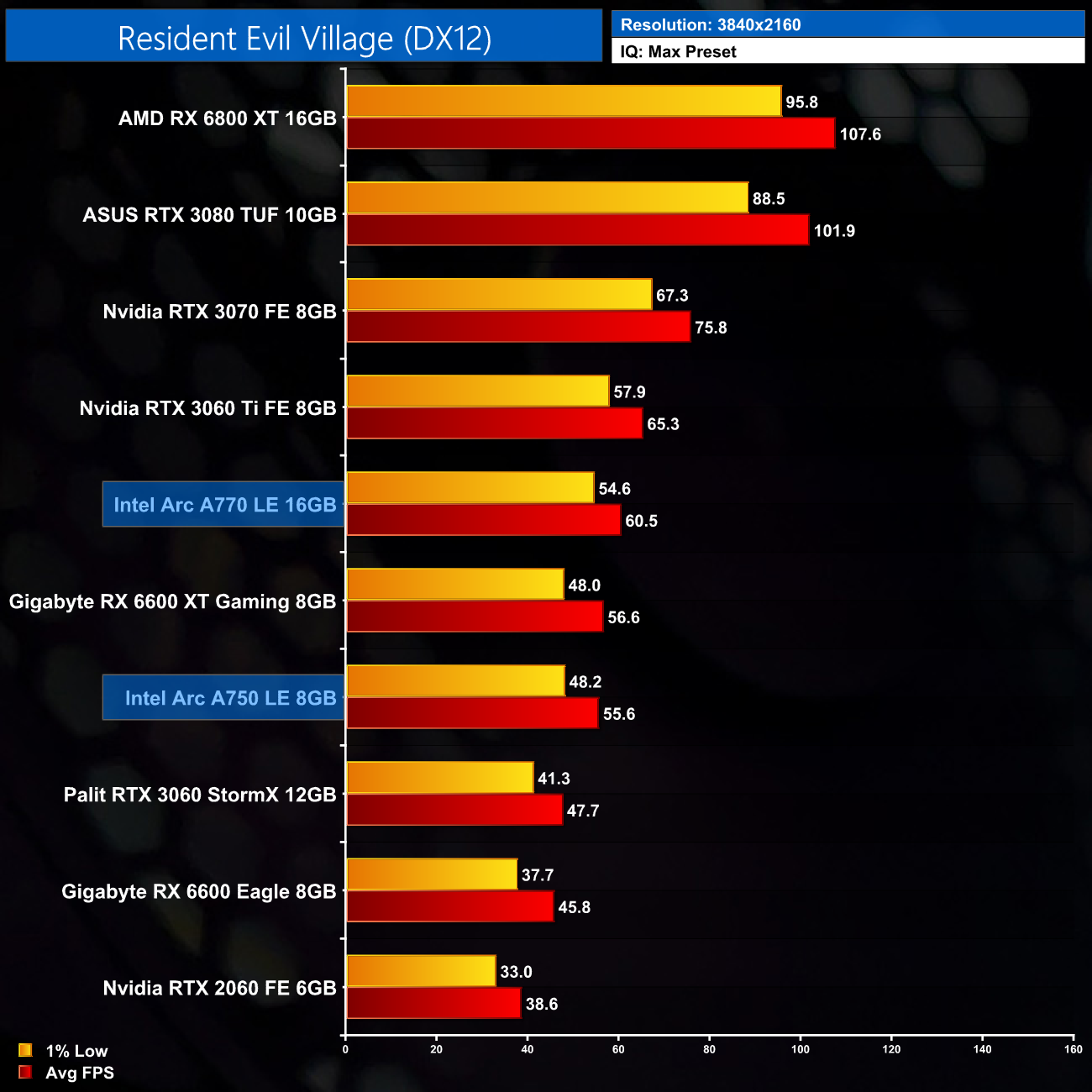

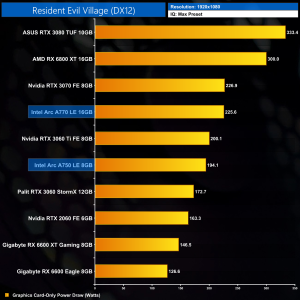

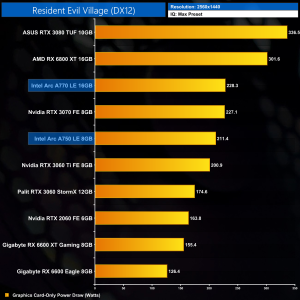

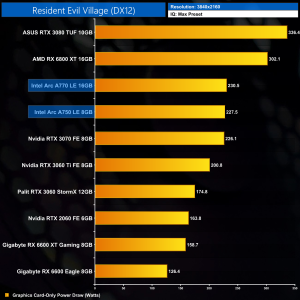

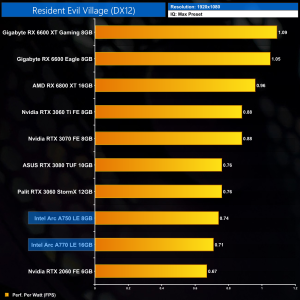

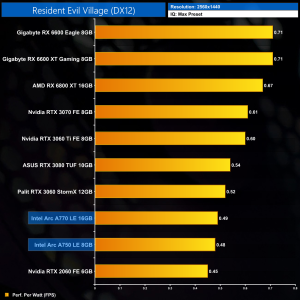

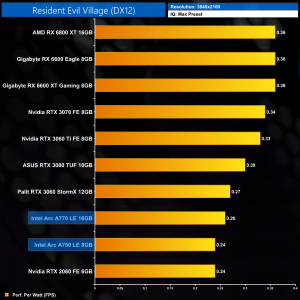

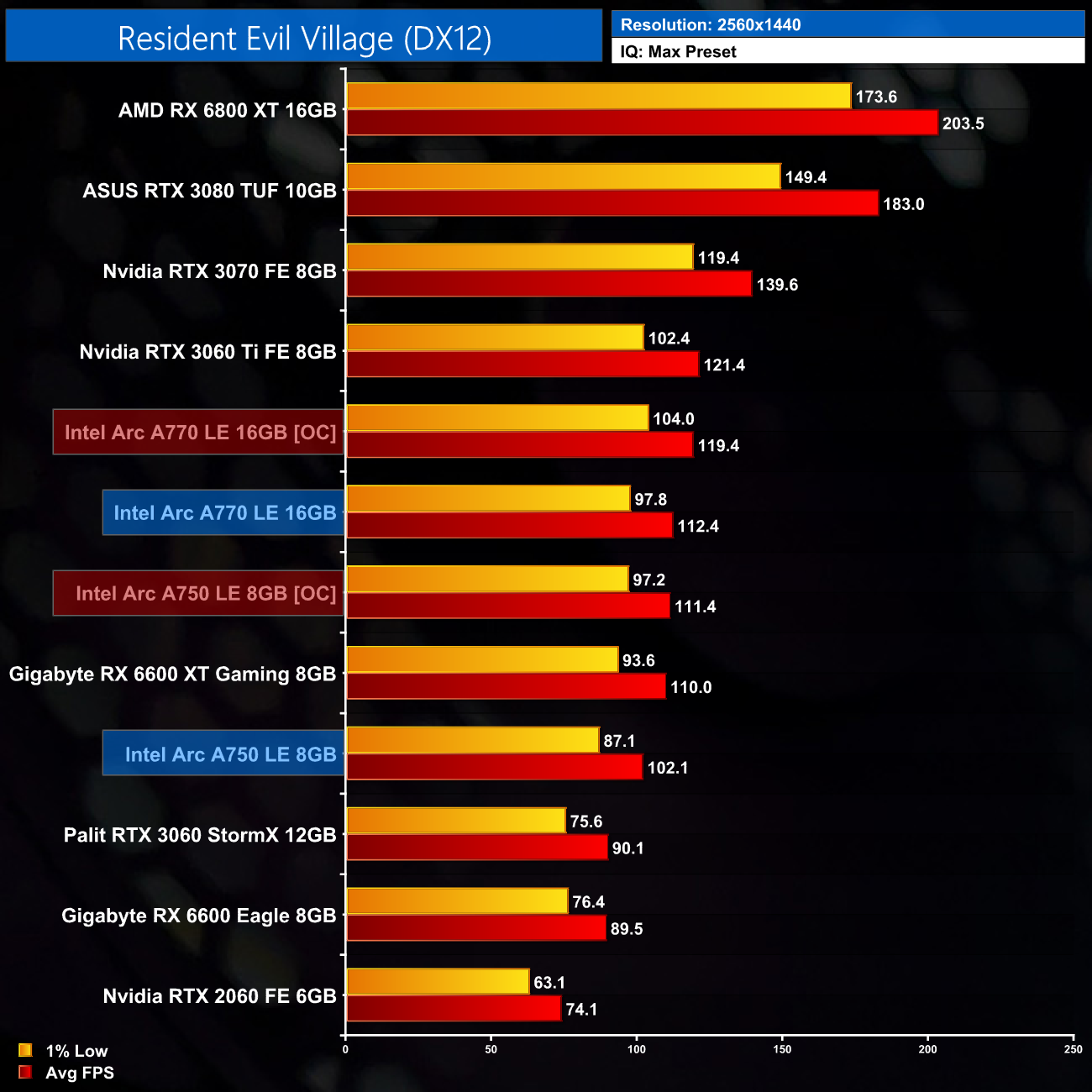

Resident Evil Village is a survival horror game developed and published by Capcom. The sequel to Resident Evil 7: Biohazard (2017), players control Ethan Winters, who is searching for his kidnapped daughter; after a fateful encounter with Chris Redfield, he finds himself in a village filled with mutant creatures. The game was announced at the PlayStation 5 reveal event in June 2020 and was released on May 7, 2021, for Windows, PlayStation 4, PlayStation 5, Xbox One, Xbox Series X/S and Stadia. (Wikipedia).

Engine: RE Engine. We test using the Max preset, with V-Sync disabled, DX12 API.

As for Resident Evil Village, the A750 puts in another decent showing. Delivering 144FPS at 1080p makes it 9% faster than the RTX 3060, though still 10% slower than the RX 6600 XT. At 1440p it does scale better than both of those GPUs however, as it is now 13% faster than the 3060 and just 7% behind the 6600 XT. Either way, delivering 100FPS results in a very smooth gaming experience.

Total War: Warhammer III is a turn-based strategy and real-time tactics video game developed by Creative Assembly and published by Sega. It is part of the Total War series, and the third to be set in Games Workshop's Warhammer Fantasy fictional universe (following 2016's Total War: Warhammer and 2017's Total War: Warhammer II). The game was announced on February 3, 2021, and was released on February 17, 2022. (Wikipedia).

Engine: TW Engine 3 (Warscape). We test using the Ultra preset, with unlimited video memory enabled, DX11 API.

Total War: Warhammer III would be the last game we test, but unfortunately there is more game-breaking visual corruption. Intel told us that this issue was meant to be fixed in the previous driver revision, but as you can see in the video, that is not the case.

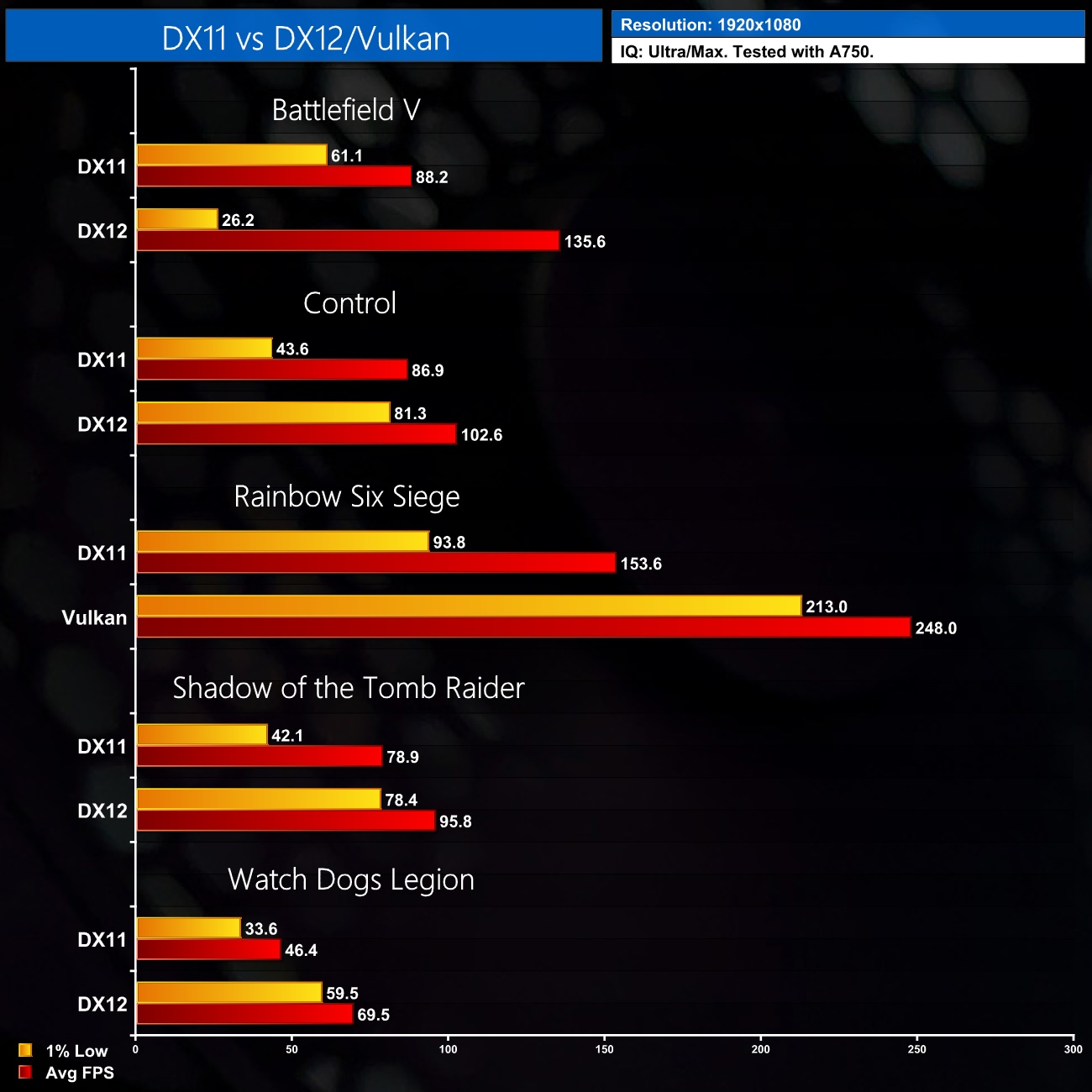

While three of the twelve games we tested (or hoped to test) today are DX11 titles, Intel was very upfront with us about the problem posed by games running on DX11 – and older – APIs. As these APIs place a much larger emphasis on the driver itself, which is an obvious problem considering Intel's newcomer status in the GPU market, performance is likely to suffer versus low-level APIs such as DX12 and Vulkan.

To put this to the test, we benchmarked five titles that support DX11, and either DX12 or Vulkan:

It's quite amusing that the first title tested – Battlefield V – delivers such poor frame times using DX12 that, although its average frame rate is 35% worse, DX11 actually offers a better gaming experience here. It's also worth pointing out that I did want to include Kena: Bridge of Spirits in these benchmarks, but it crashed several times when switching from DX11 to DX12, so it goes to show that DX12 isn't a guarantee of good performance for Intel Arc.

Still, DX11 is generally much worse across the board. Some titles, like Control, see fairly similar average frame rates but with a huge reduction to 1% low performance when using DX11. Other games, such as Rainbow Six Siege, get significantly slower across the board.

According to Intel's Tom Peterson, optimising DX11 titles is a task that will last ‘forever' for Intel Arc, and we can see why. It's a major red flag for Intel's first dGPUs.

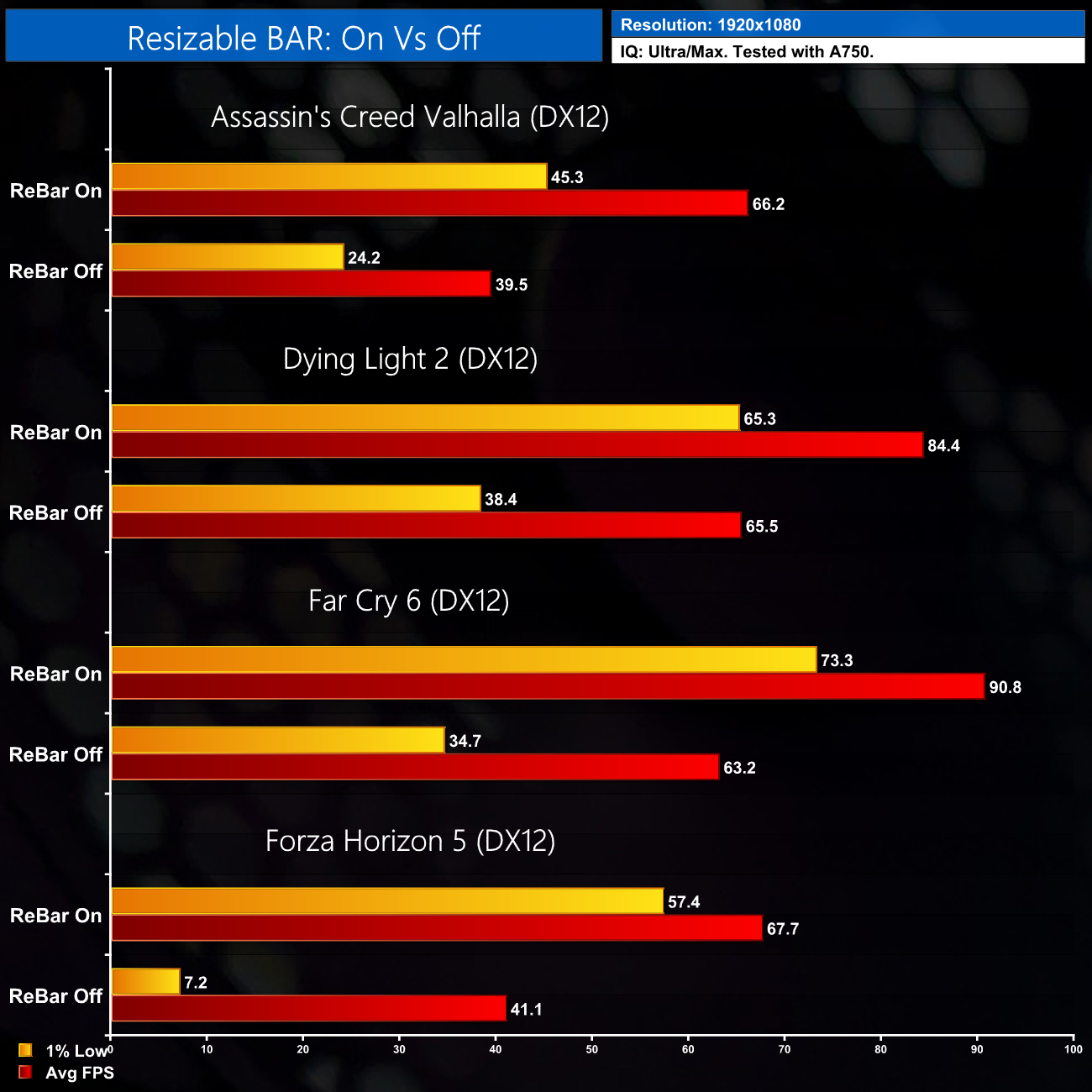

At KitGuru we have been running our benchmarks with Resizable BAR on since the beginning of the year. Intel has again been quite upfront about the fact that Resizable BAR, or Rebar, is essentially a requirement for its Arc GPUs; in a press briefing, Tom Peterson was as blunt as saying users who can't enable Rebar should just get a 3060 without even considering Arc!

We tested four games, with Rebar on and then with Rebar off, to take a closer look.

The results quickly show why Intel is more-or-less positioning Rebar as a requirement for Arc GPUs – the 1% lows are near enough cut in half in Assassin's Creed Valhalla and face significant reductions in both Dying Light 2 and Far Cry 6. Forza Horizon 5 is the worst offender however and becomes simply unplayable without Rebar.

While clearly a huge issue for those with systems that don't support Rebar, for me this isn’t as big of an issue as the DX11 performance is. My reasoning is that at least the last two motherboard generations from Intel and AMD do support Rebar, and in some cases even further back than that, so a fair number of PC enthusiasts should have a compatible system. And as we will get to, right now I don’t think many people who aren’t enthusiasts will be buying Arc as it’s not ready for the mainstream yet, though the Rebar situation is certainly something to keep in mind.

It's at this part of a GPU review where I usually show a performance summary, giving the average frame rate from across all 12 games tested so we can compare the card in question against the competition. However, I won’t be doing that for Intel Arc just yet.

For one, two of the twelve games that I wanted to test are visually broken, so there's that… But more fundamentally, I just don’t think you can really ‘boil down' the experience of using Arc into a single chart. I personally would be worried that someone could view such a chart and take the data at face value, which really wouldn't tell the whole story and could be misleading. I’ve found that the experience of using Arc can vary massively from game to game, so I didn’t want to try and distil the performance in a way that doesn’t really do it justice.

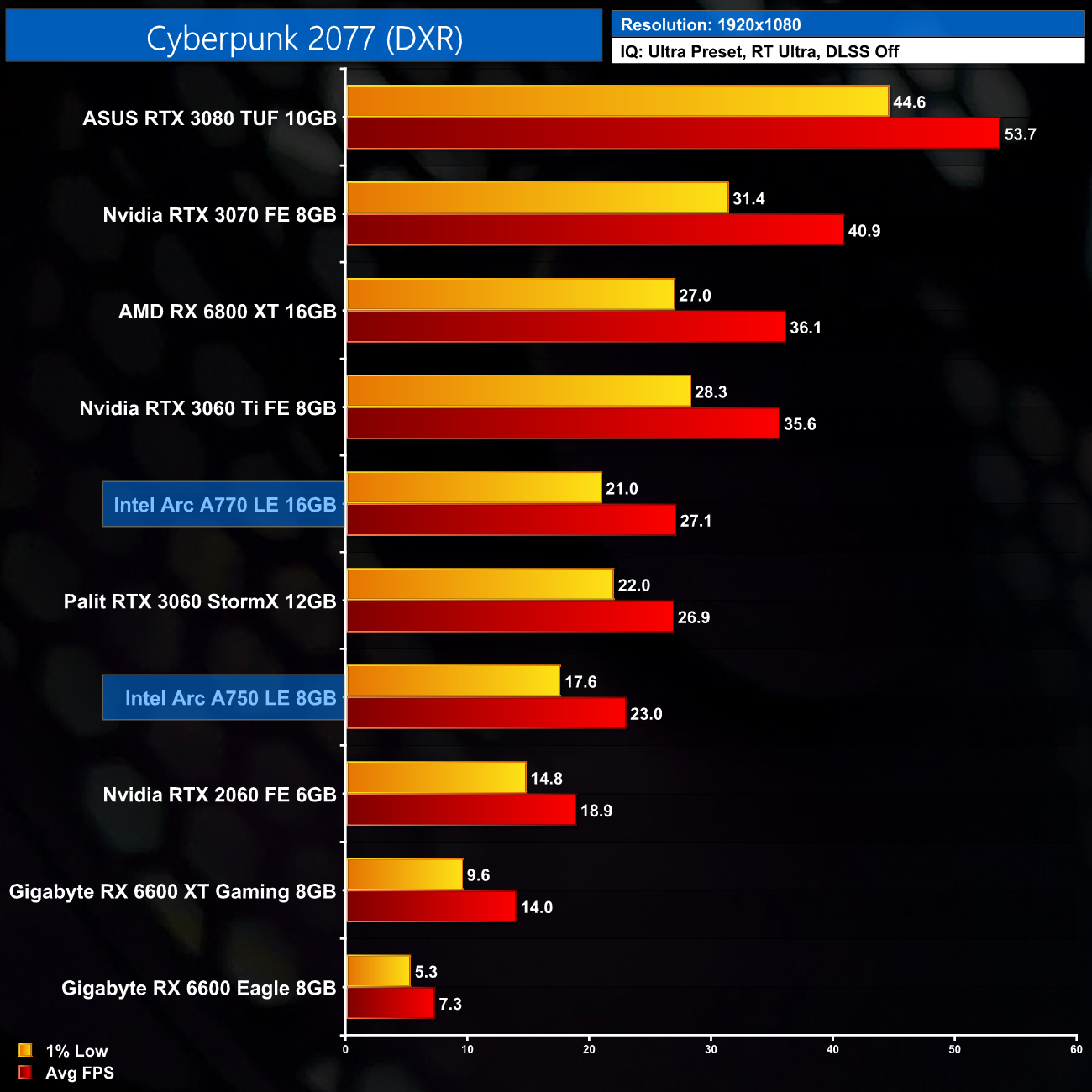

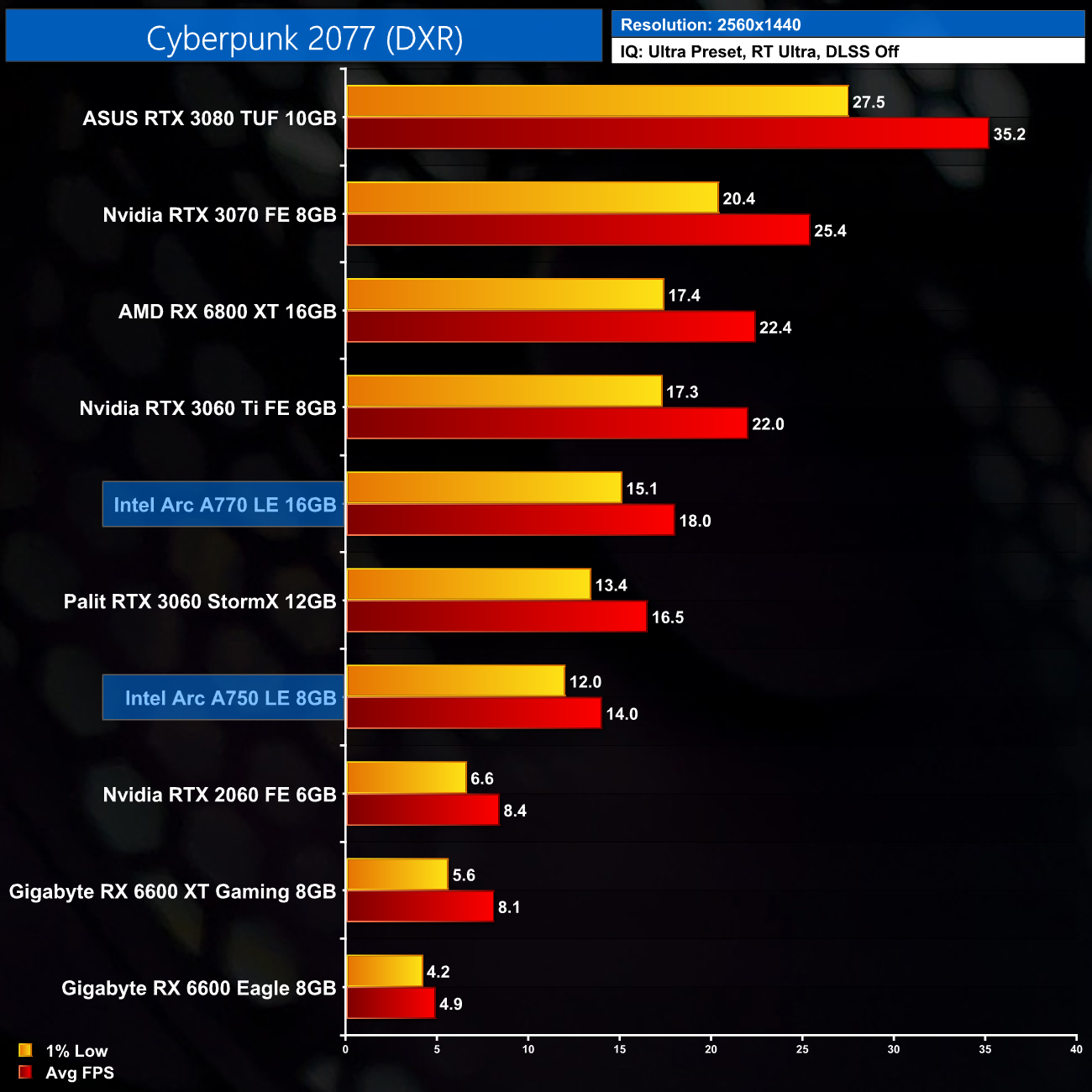

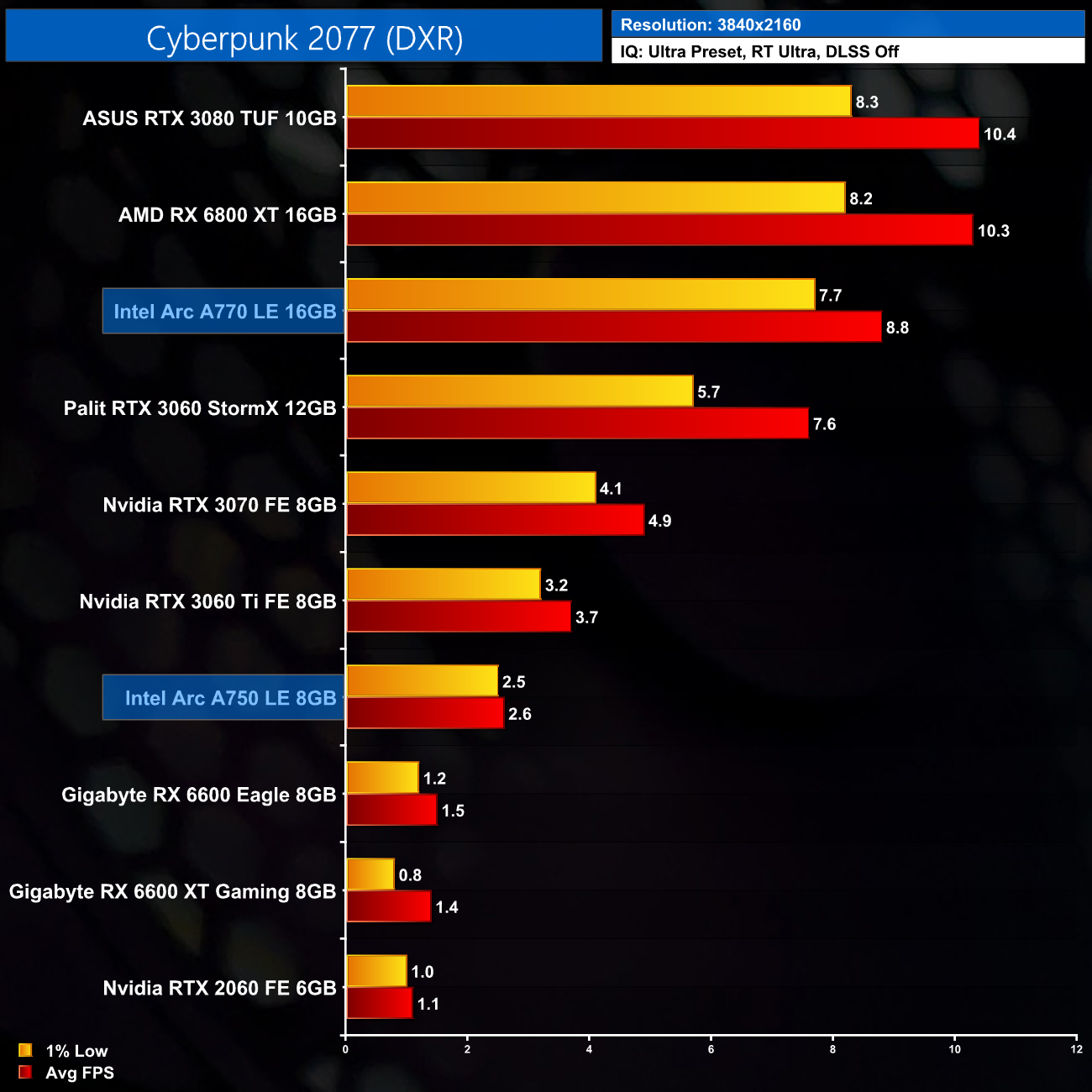

Here we test Cyberpunk 2077, with RT Lighting set to Ultra.

Ray tracing in Cyberpunk is impressive for the A750. It can't hold above 30FPS at Ultra settings, but it's only just behind the RTX 3060 at 1080p, which bodes well for easier-to-run ray tracing titles.

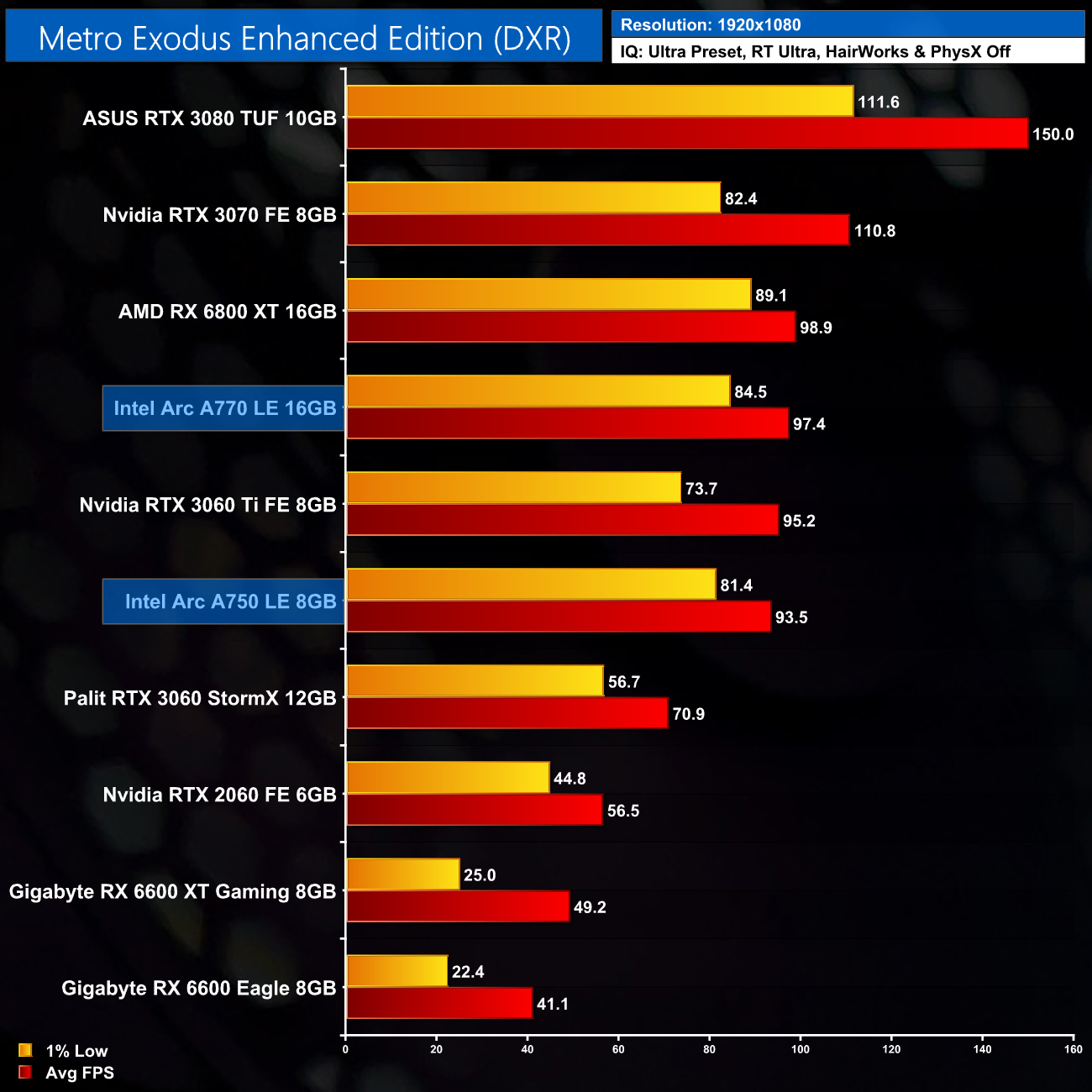

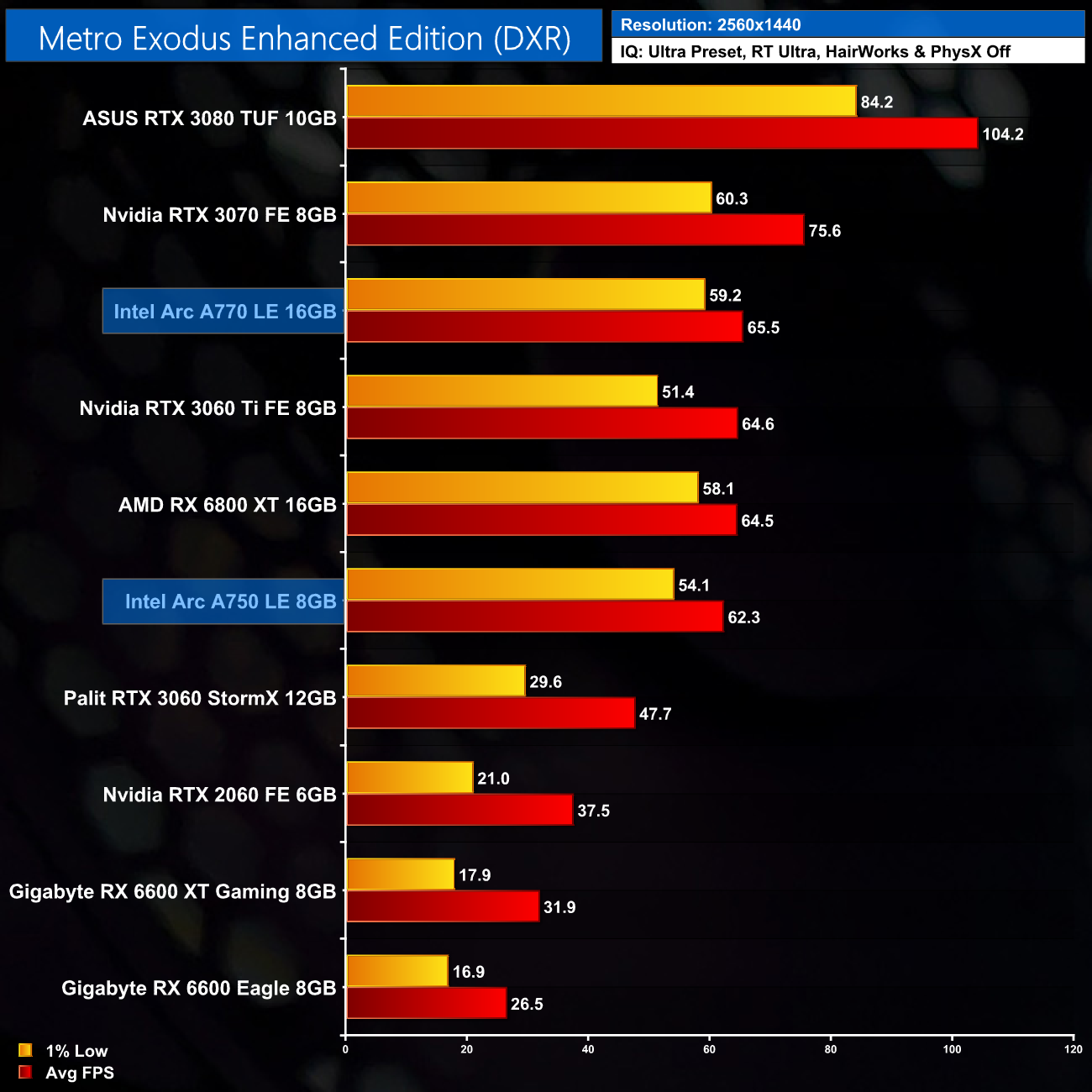

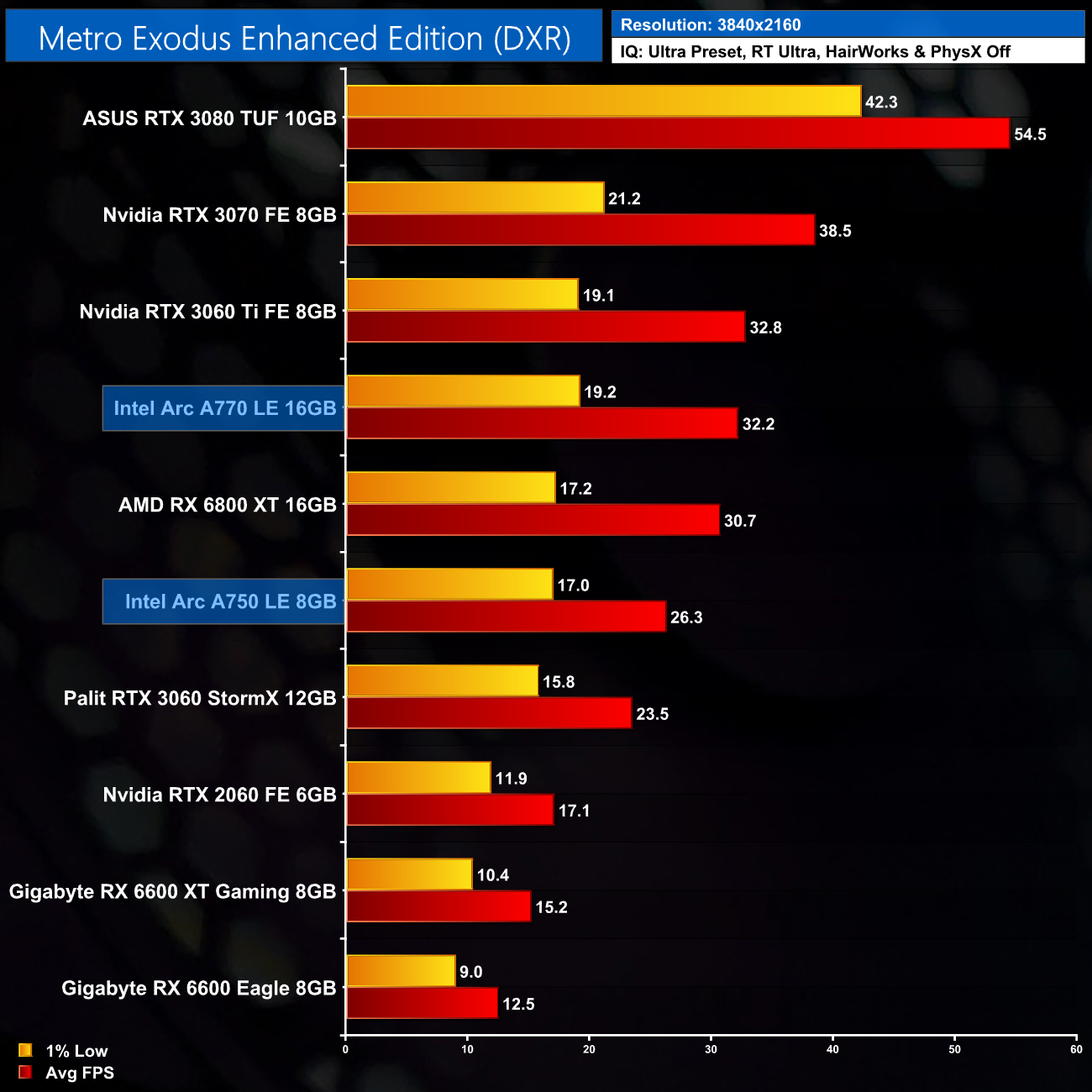

Here we test Metro Exodus Enhanced Edition, with the in-game ray tracing effects set to Ultra.

Metro Exodus Enhanced Edition is a very strong performer for the A750. At 1080p it delivers almost 94FPS, putting it on par with the RTX 3060 Ti which is quite incredible to see. At 1440p it's also just behind the RX 6800 XT, a card that launched at over twice the price of the A750.

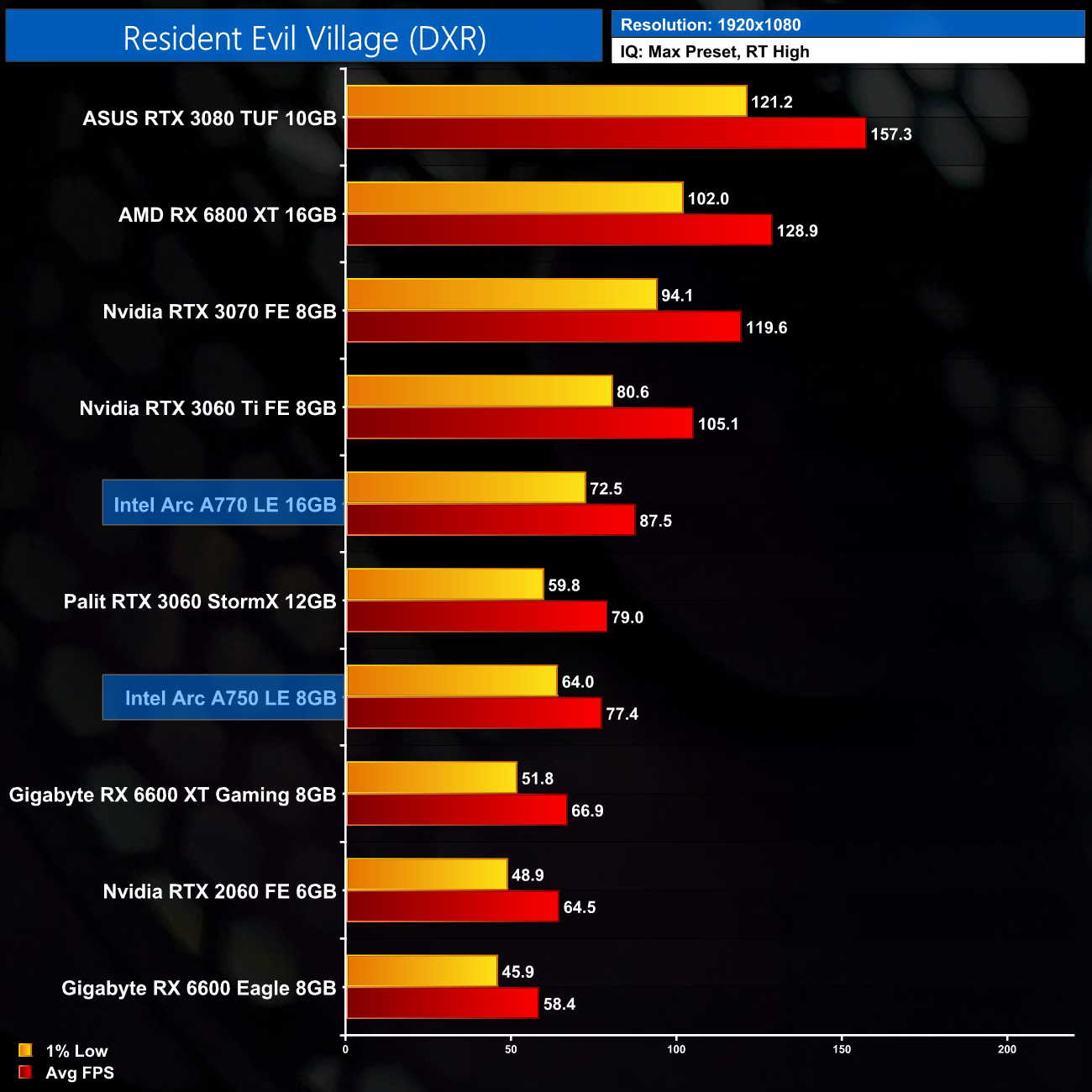

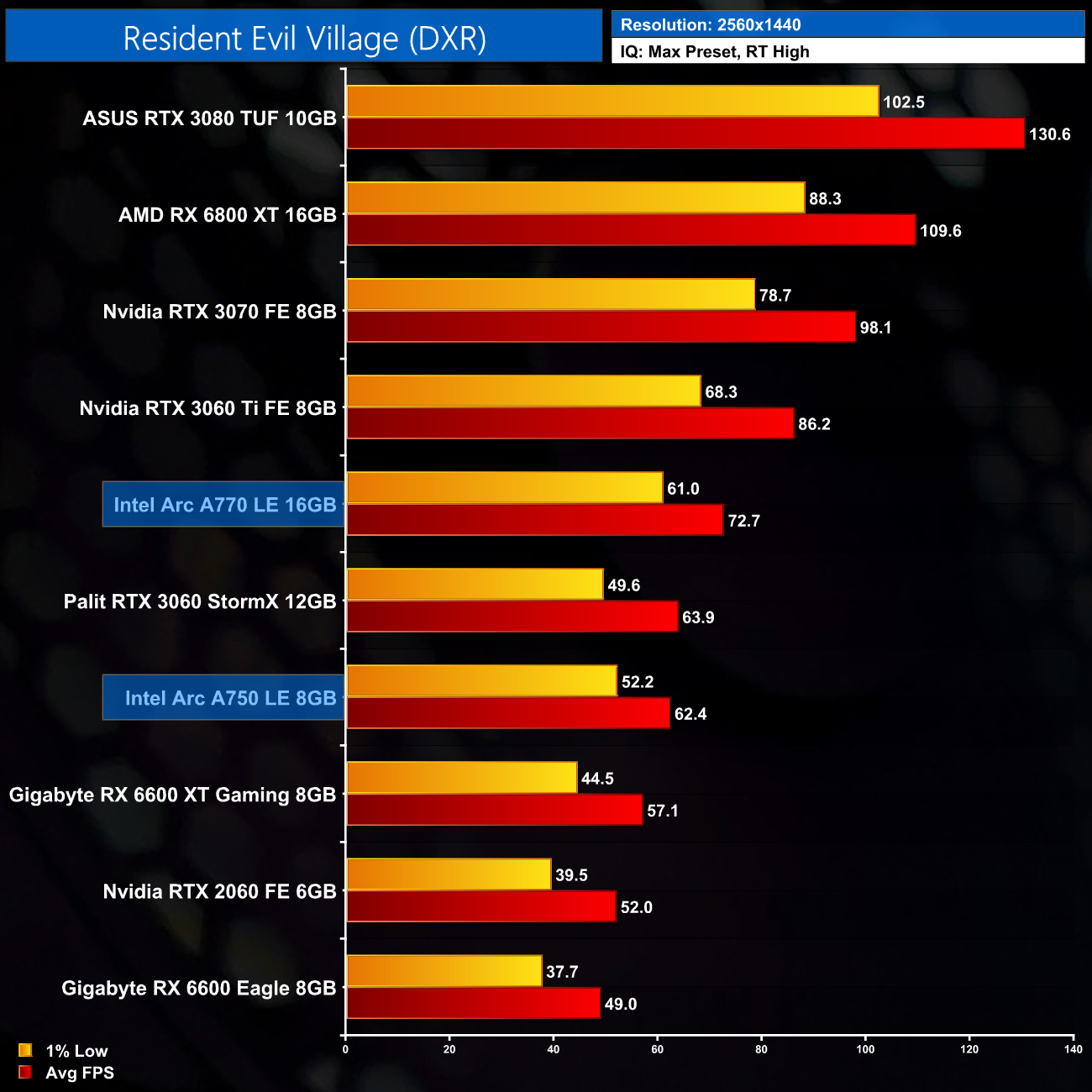

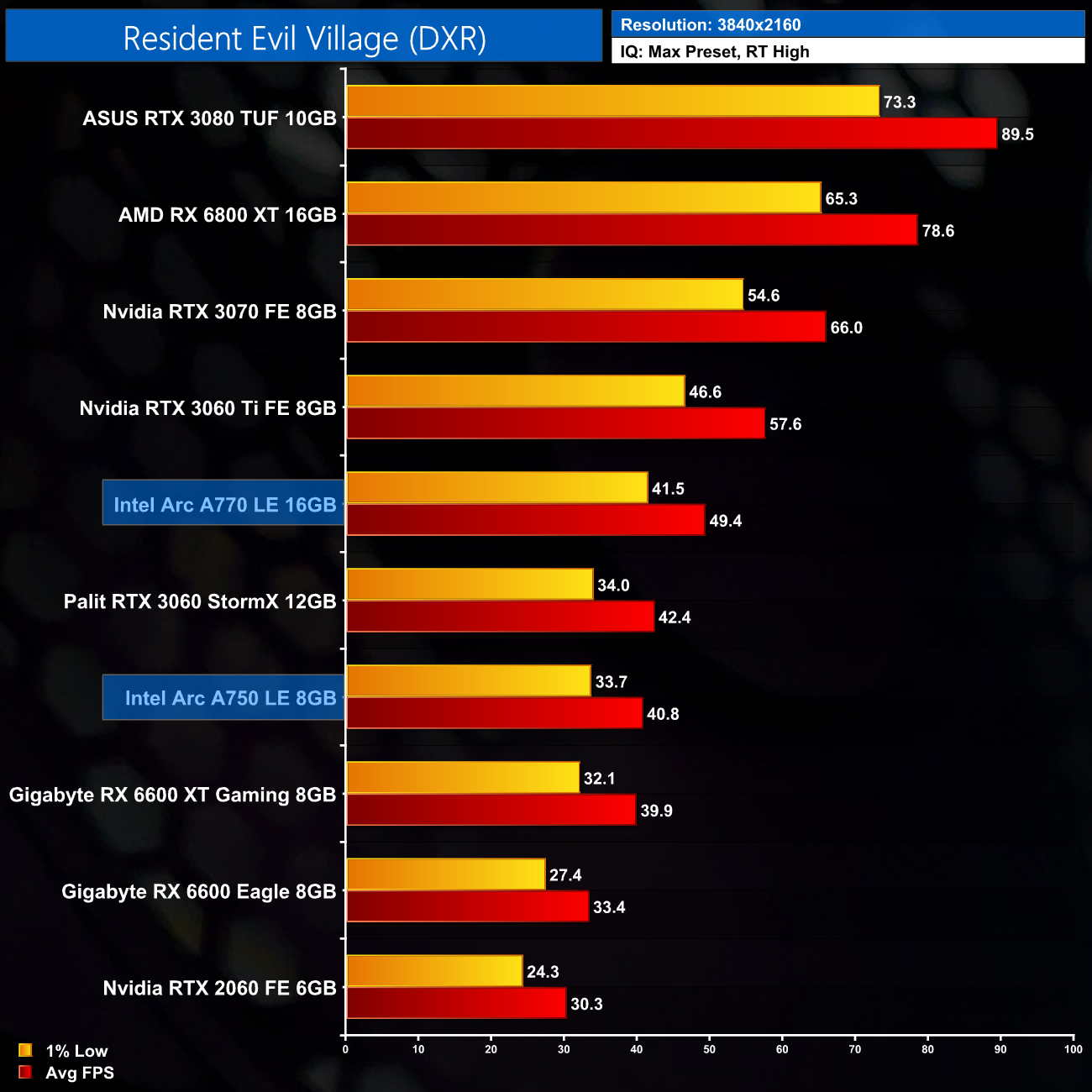

Here we test Resident Evil Village, this time testing with the in-game ray tracing effects set to High.

Resident Evil Village also shows the A750's ray tracing performance to be at a similar level to the RTX 3060, with both cards averaging just below 80FPS at 1080p, and around 60FPS at 1440p.

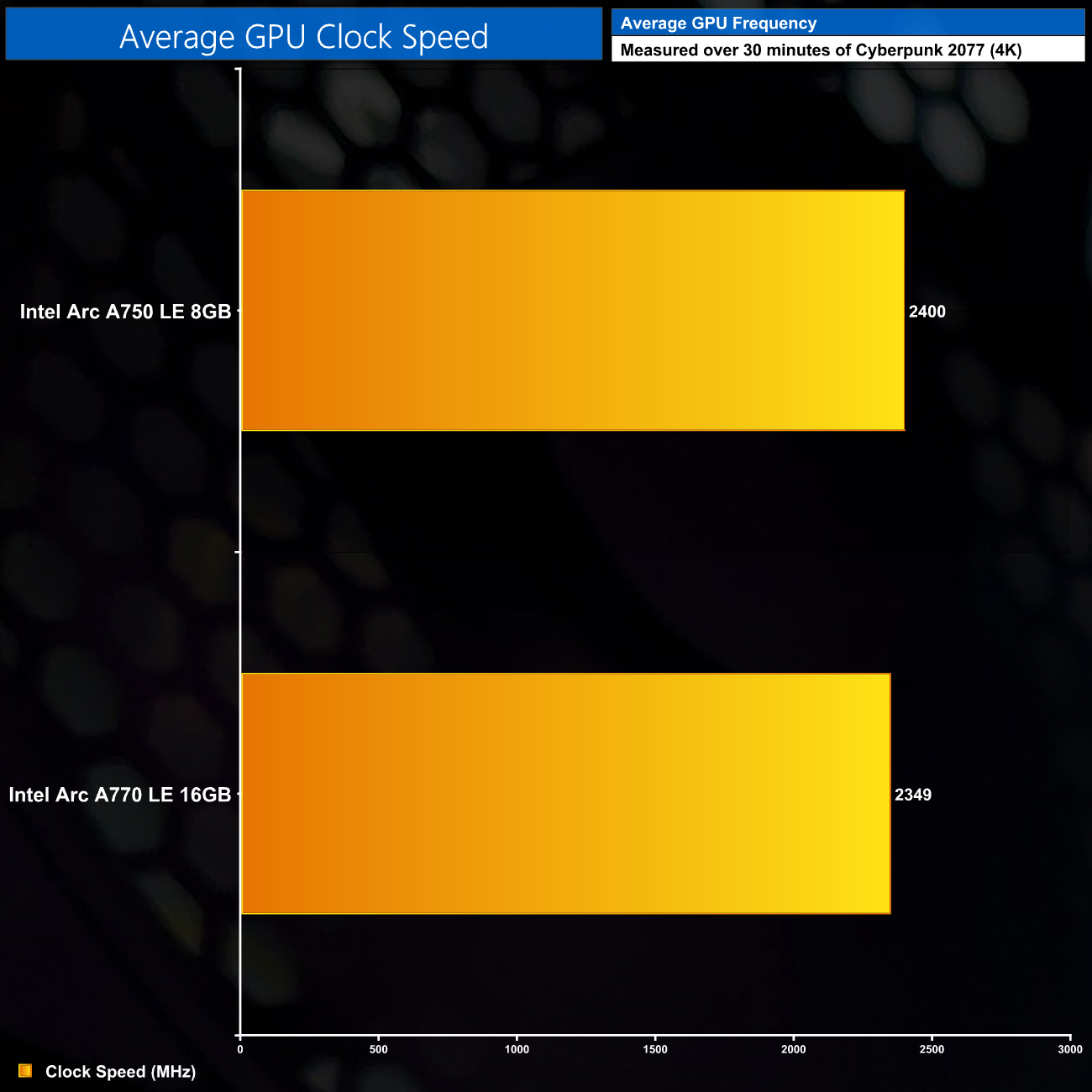

Here we present the average clock speed for each graphics card while running Cyberpunk 2077 for 30 minutes. We use GPU-Z to record the GPU core frequency during gameplay. We calculate the average core frequency during the 30-minute run to present here.

With only two Arc cards to test, operating clock speeds don't mean too much just yet, but over our 30-minute 4K workload, the A750 averaged 2400MHz exactly, with the A770 hitting 2349MHz. These figures are a fair bit higher than Intel's rated graphics clock speeds (the A750's average is roughly 17% above its 2050MHz rating), but the exact frequency will vary depending on the workload in question.
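Averaging a sensor log like this is straightforward. The sketch below assumes a CSV export with a column named `GPU Clock [MHz]`; that column name, the helper name and the three sample rows are placeholders for illustration, not our recorded data.

```python
import csv
import io
from statistics import mean


def average_clock_mhz(log_file, column="GPU Clock [MHz]"):
    """Mean of one numeric column in a CSV sensor log, skipping blank cells."""
    samples = []
    for row in csv.DictReader(log_file):
        for key, value in row.items():
            # Header names may be padded with spaces, so compare stripped keys.
            if key is not None and key.strip() == column and value and value.strip():
                samples.append(float(value))
    return mean(samples)


# Placeholder rows standing in for a real 30-minute log file.
sample_log = io.StringIO(
    "Timestamp,GPU Clock [MHz],GPU Temperature [C]\n"
    "00:00:00,2390.0,68.0\n"
    "00:00:01,2400.0,69.0\n"
    "00:00:02,2410.0,70.0\n"
)

average = average_clock_mhz(sample_log)
print(average)  # 2400.0
```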

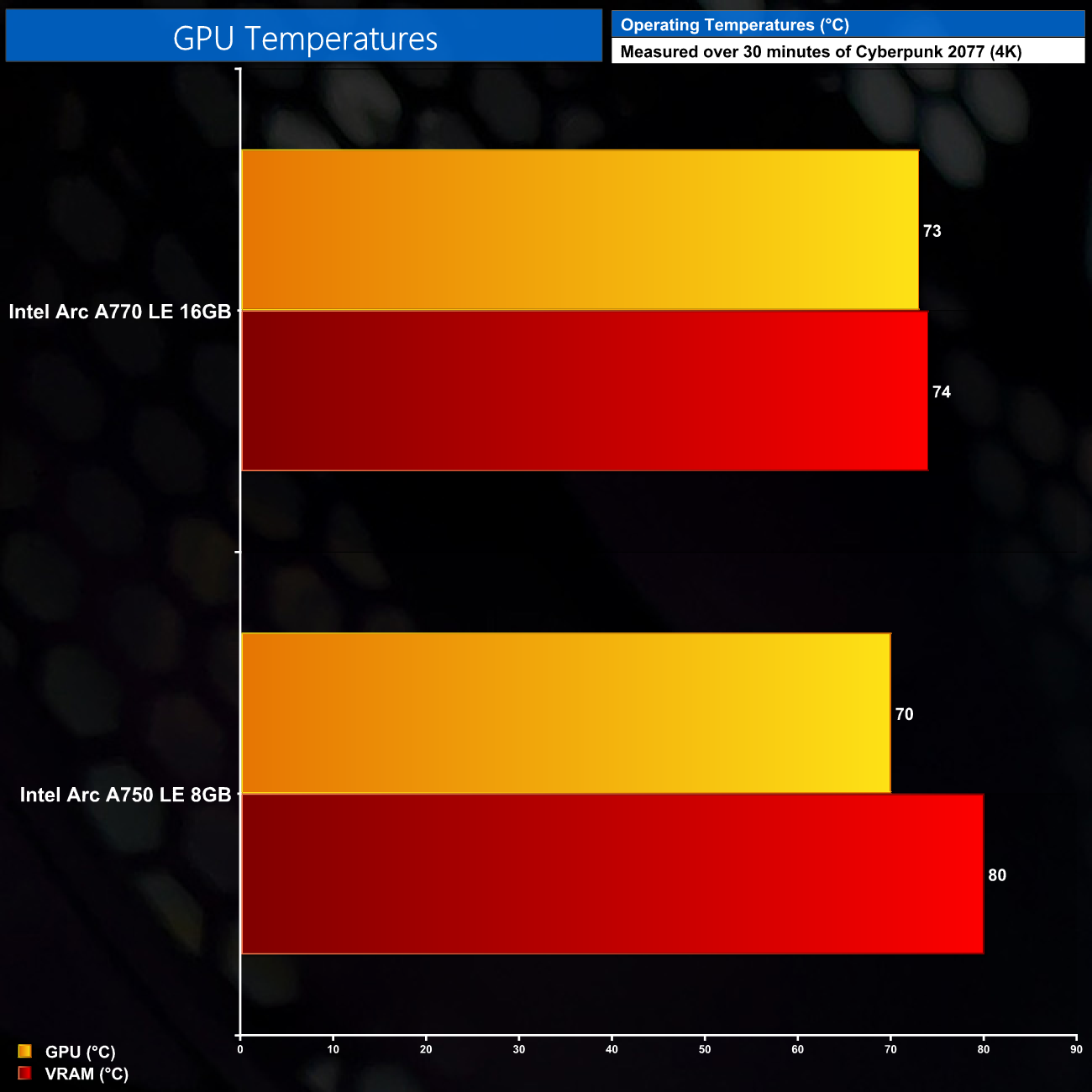

For our temperature testing, we measure the peak GPU core temperature under load. A reading under load comes from running Cyberpunk 2077 for 30 minutes.

Thermally, we have no complaints about the design of the Intel Arc Limited Edition cooler. Do note the chart above shows both GPU and VRAM temperatures, but both cards were able to keep the GPU temperatures at or around 70C under load. The VRAM on the A750 did run a bit hotter than that of the A770, but peaking at 80C is still absolutely fine.

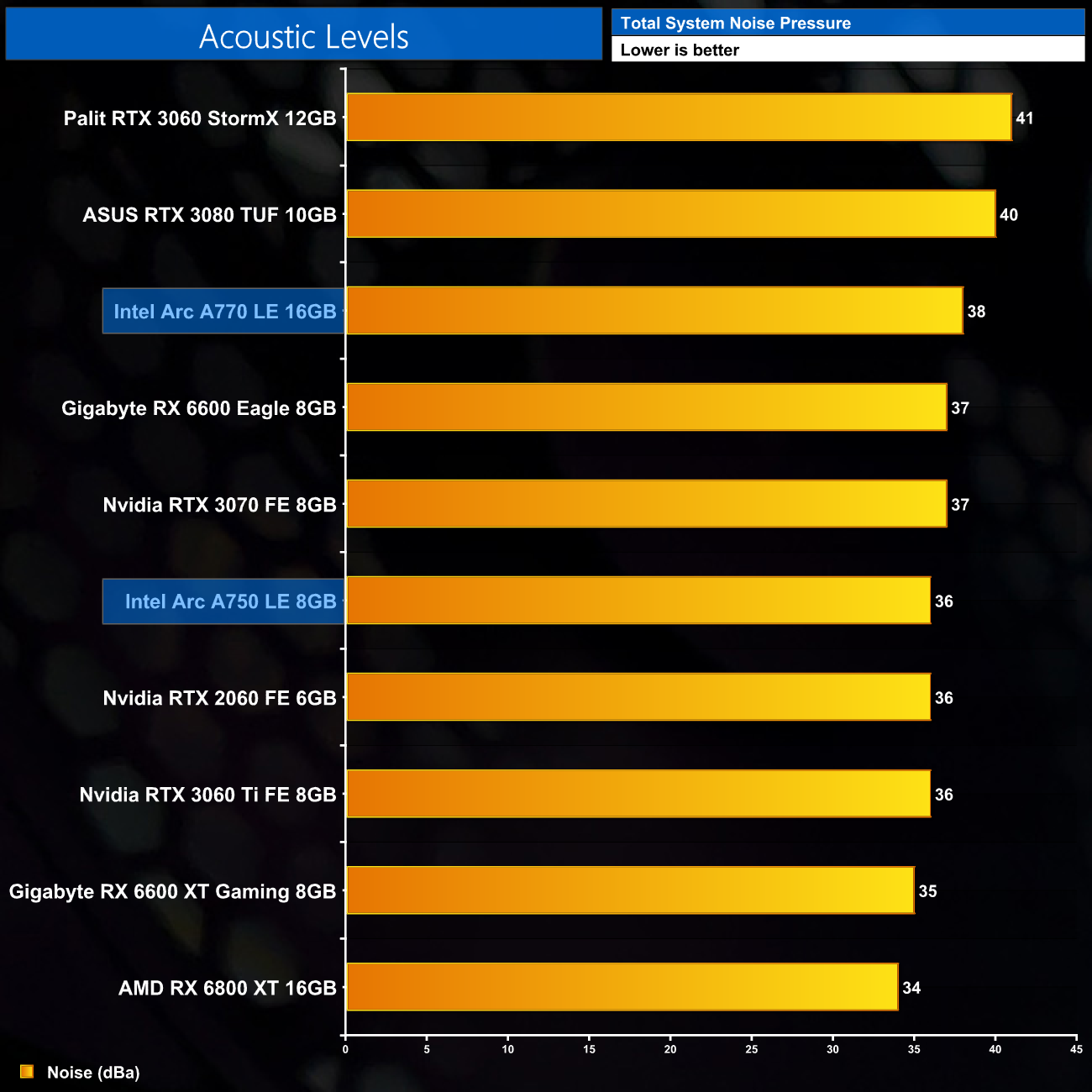

We take our noise measurements with the sound meter positioned 1 foot from the graphics card. I measured the noise floor to be 32 dBA, thus anything above this level can be attributed to the graphics cards. The power supply is passive for the entire power output range we tested all graphics cards in, while all CPU and system fans were disabled. A reading under load comes from running Cyberpunk 2077 for 30 minutes.

As for noise levels, both Arc GPUs are right in line with the competition. Both SKUs use the same cooler but the A770 is the faster chip, so as we'd expect it does run a bit louder, with its fans at about 1800rpm, producing 38dBA of noise. The A750 runs its fans slightly slower, at about 1600rpm in our testing, producing 36dBA of noise – equivalent to an RTX 2060 Founders Edition.

While fan noise is absolutely fine, I did notice some coil whine present, especially on the A750. This isn't too noticeable while gaming, but in certain game menus with an uncapped frame rate, a high-pitched squealing sound is audible. We provide a sound test of this in the video.

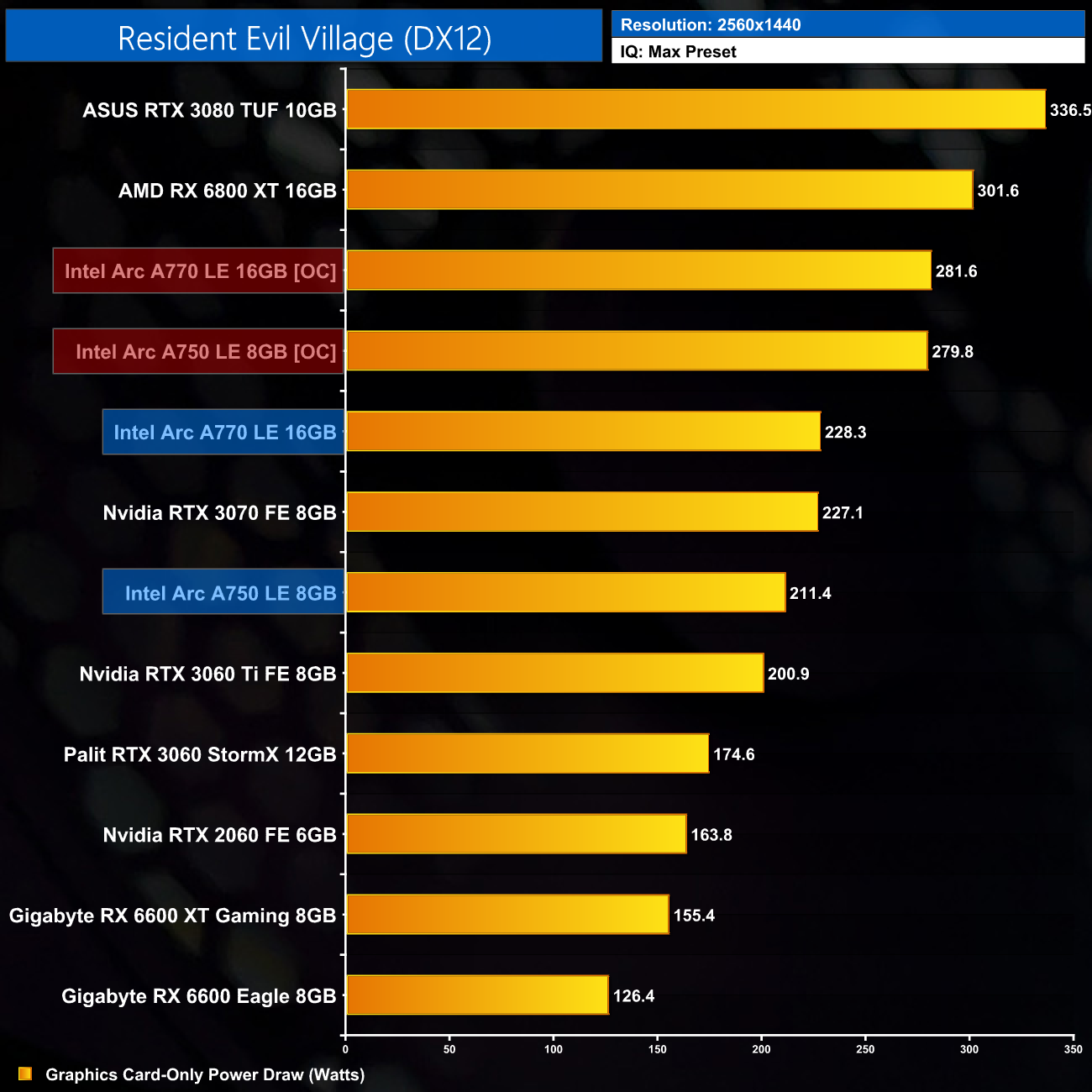

Here we present power draw figures for the graphics card-only, on a per-game basis for all twelve games we tested at 1080p. This is measured using Nvidia's Power Capture Analysis Tool, also known as PCAT. You can read more about our updated power draw testing methodology HERE.

Per-Game Results at 1080p:

Despite being rated at 225W board power, at 1080p the A750 typically draws much less than that. Above you can see the power draw results from every game tested, but it averaged 178.9W at 1080p.

Here we present power draw figures for the graphics card-only, on a per-game basis for all twelve games we tested at 1440p. This is measured using Nvidia's Power Capture Analysis Tool, also known as PCAT. You can read more about our updated power draw testing methodology HERE.

Per-Game Results at 1440p:

At 1440p, power draw for the A750 climbs slightly, with a new average of 192.4W, though this is still comfortably below the 225W rated power draw.

Here we present power draw figures for the graphics card-only, on a per-game basis for all twelve games we tested at 2160p (4K). This is measured using Nvidia's Power Capture Analysis Tool, also known as PCAT. You can read more about our updated power draw testing methodology HERE.

Per-Game Results at 2160p (4K):

Even at 4K, power draw for the A750 averaged just over 200W, hitting 200.2W, though some games do pull slightly more than that, including Resident Evil Village – as you can see above.

Using the graphics card-only power draw figures presented earlier in the review, here we present performance per Watt on a per-game basis for all twelve games we tested at 1080p.

Per-Game Results at 1080p:

Efficiency is not particularly competitive for the A750, though it is better than the A770 across the board. Typical performance per Watt is often equal to or slightly worse than the Turing-based RTX 2060.
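Performance per Watt is simply average frame rate divided by card-only power draw. A minimal sketch follows; the function name is ours, and because the per-game wattages live in the charts rather than the text, the example pairs the 74FPS Red Dead Redemption 2 result at 1080p with the 178.9W all-game 1080p average purely as a stand-in, so the output is indicative only.

```python
def fps_per_watt(avg_fps: float, board_power_w: float) -> float:
    """Frames per second delivered for each Watt of card-only power draw."""
    if board_power_w <= 0:
        raise ValueError("board_power_w must be positive")
    return avg_fps / board_power_w


# Stand-in pairing: RDR2 1080p average FPS with the all-game 1080p average power.
example = fps_per_watt(74.0, 178.9)
print(f"{example:.3f} FPS/W")  # 0.414 FPS/W
```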

Using the graphics card-only power draw figures presented earlier in the review, here we present performance per Watt on a per-game basis for all twelve games we tested at 1440p.

Per-Game Results at 1440p:

The 1440p data closely follows the trends shown at 1080p. Red Dead Redemption 2 is a best-case scenario for the A750, but even then it is behind the RTX 3060 Ti, and significantly behind the RX 6600 series in terms of performance per Watt.

Using the graphics card-only power draw figures presented earlier in the review, here we present performance per Watt on a per-game basis for all twelve games we tested at 2160p (4K).

Per-Game Results at 2160p (4K):

Lastly, we close our performance per Watt testing with the 4K data – it's not hugely relevant as this is not a 4K gaming card, but it's interesting nonetheless.

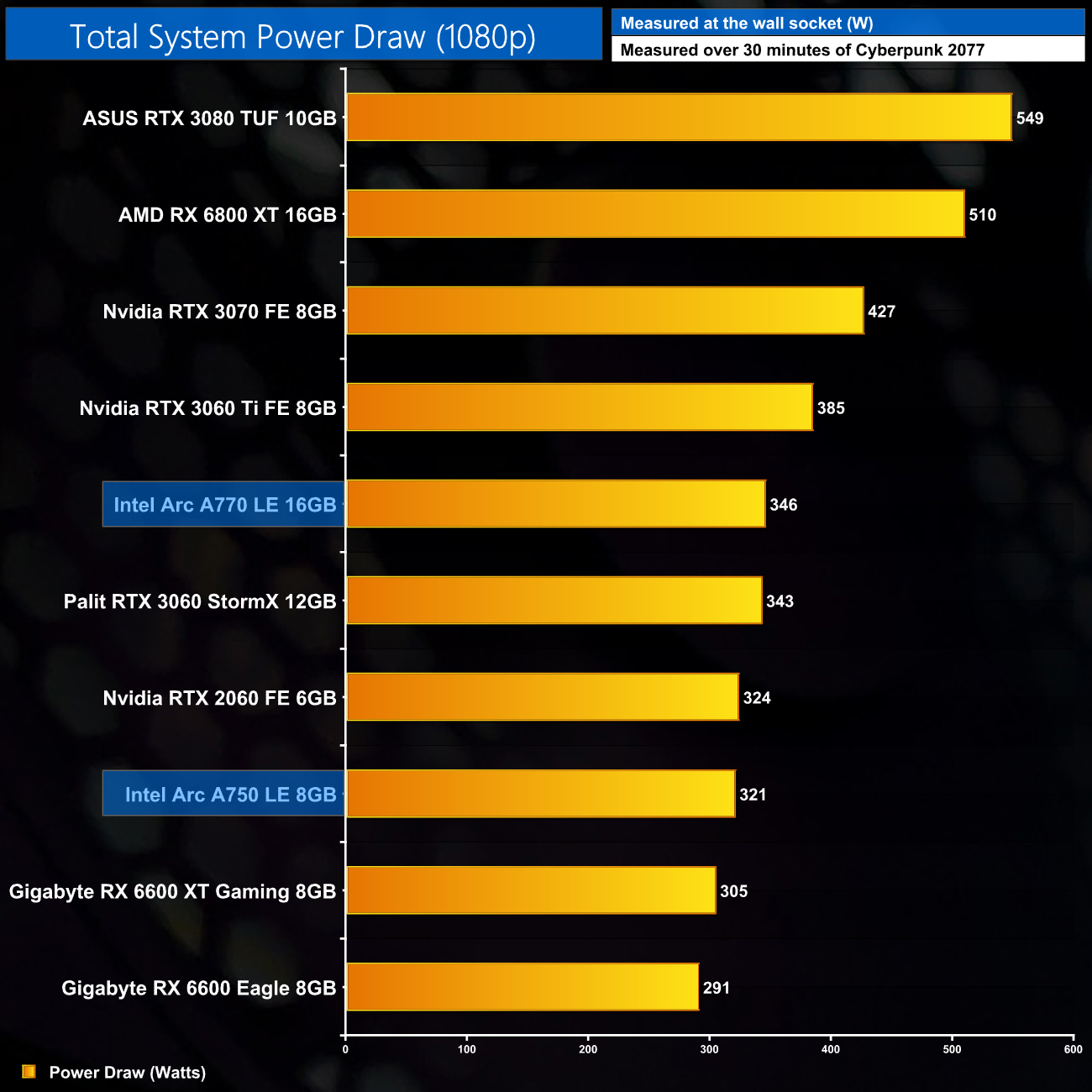

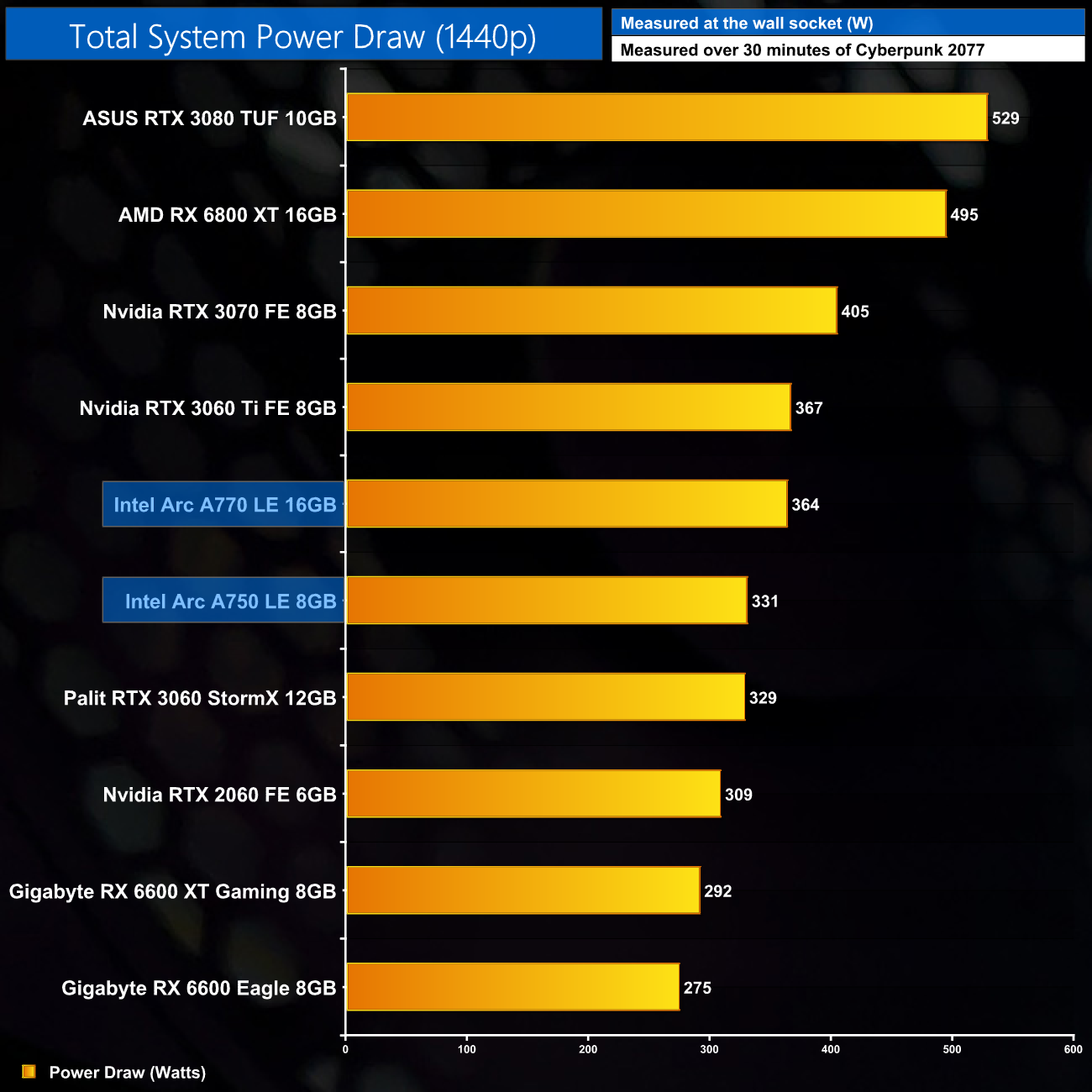

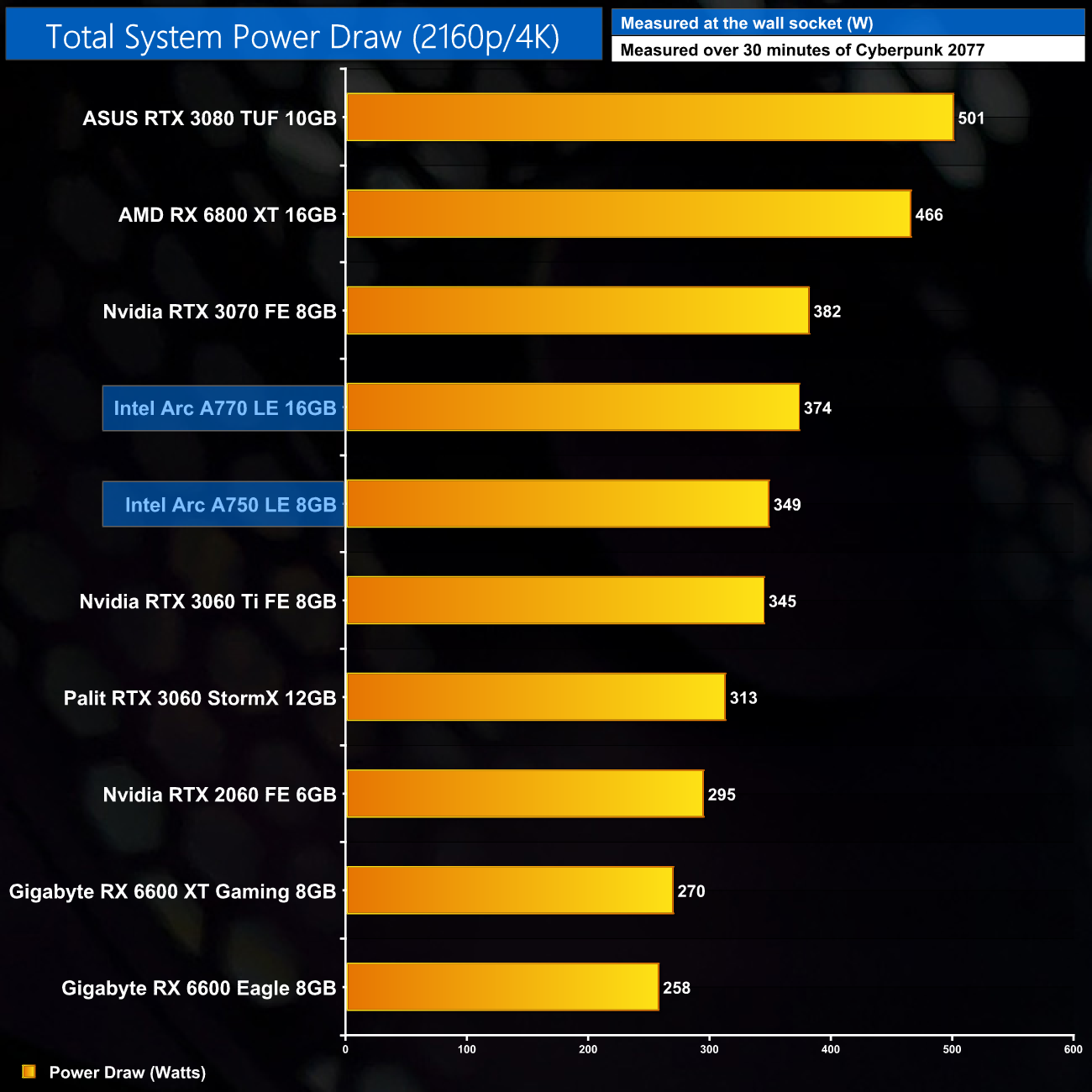

We measure system-wide power draw from the wall while running Cyberpunk 2077 for 30 minutes. We do this at 1080p, 1440p and 2160p (4K) to give you a better idea of total system power draw across a range of resolutions, where CPU power is typically higher at the lower resolutions.

To add to our detailed graphics card-only power draw testing, we also look at power of the entire system measured at the wall socket. Power draw for the Arc GPUs increases as we step up in resolution, peaking at 350W for the A750 at 4K. A 500W PSU should be enough to power this card.

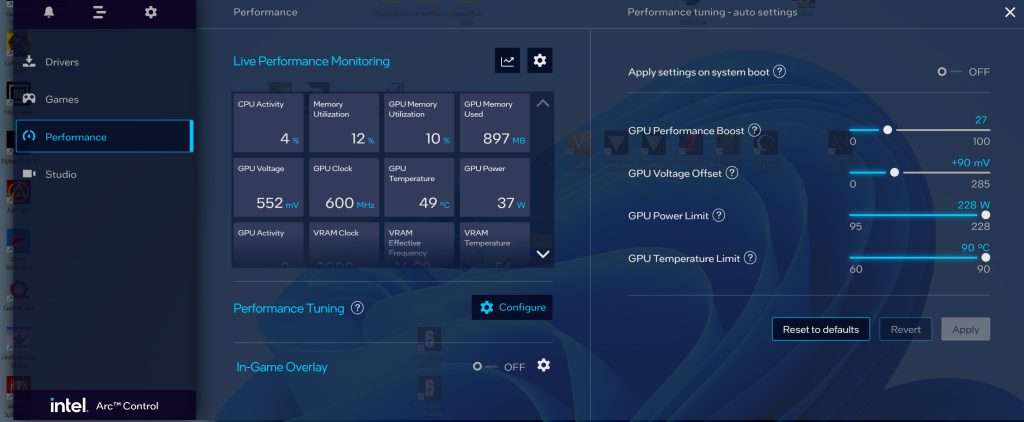

For our manual overclocking tests, we used Intel's Arc Control software. Our best results are as below.

Overclocking with Arc Control was surprisingly painless. We increased the power limit to its maximum value of 228W, and set the GPU voltage offset to +90mV, with the GPU performance boost set to 27.

This saw our Time Spy score increase by 10%, and offered a 9% boost to frame rates in Resident Evil Village, so it's not too shabby at all for a pretty painless OC.

Of course, power draw did also increase, hitting 279.8W, further reducing the A750's overall efficiency.

With the GPU market dominated by Nvidia and AMD for as long as I can remember, it is hugely exciting to see a third player breaking into the discrete graphics segment. After first launching the low-end A380 exclusively in China, Intel has, at last, launched its mainstream options to the western market. This review is focused on the $289 A750 Limited Edition, but we do also have a day-1 review of the A770 Limited Edition that you can find HERE.

In all honesty, I had very little idea of what to expect when the Arc GPUs arrived on my doorstep. After spending the last several days testing a multitude of different games, engines and APIs, it is safe to say we now have a clear picture. And in my view, these GPUs just aren't ready for the mainstream market. Yet.

I add that caveat as generally speaking, the Arc hardware is impressive. The glimpses we have seen are very promising indeed, particularly the performance on offer in games such as Red Dead Redemption 2 and Dying Light 2 which far outstrip the competition at this price point. Ray tracing is a clear high point too, with Intel's 1st gen architecture rivalling the RTX 3060 in all of the ray traced games we tested – the same could not be said for AMD's first ray tracing architecture.

Intel's design of the Limited Edition cards is deserving of praise. Not only is the A750 visually alluring with its sleek, matte black aesthetic, but it runs quiet and cool – impressively so considering its compact nature. We did find the VRAM ran slightly hotter than the A770, but not by much and it's certainly nothing to worry about.

Instead, what holds the Arc GPUs back right now is the driver and software side of things. Throughout my testing, I experienced incredibly poor frame times in certain games, visual glitches that affected two of the twelve games I wanted to benchmark, as well as game crashes and even system BSODs. Performance in DX11 titles is also a huge problem for Arc, while Rebar is absolutely essential for a hope of a smooth gaming experience. I'd add to that by saying I wasn't trying to go out of my way to find problems. I simply set out to benchmark a wide variety of titles, and this was my experience.

As much as the hardware does show potential, and while we certainly want to give Intel a fair bit of leeway here as this is the company's first dGPU launch, our buying advice has to put those two factors to one side. At the end of the day, a manufacturer has come to market with a product and is now asking for your money – but based on my experience, I can't recommend spending the cash that is being asked.

Certainly not at the $289 asking price, at least. While this is an aggressive play by Intel, it doesn't go far enough in my opinion, not when multiple RX 6650 XTs are currently selling on Newegg.com for between $300 and $329. If the A750 were priced at, say, $199 then it might – might – be worth a gamble for an experienced PC gamer who is happy to deal with the accompanying driver issues, given the strong performance seen in some games.

But to crack the mass market, Intel's GPU team has a long road ahead of them. We remain optimistic that Arc could be a success in the future – as we say, there certainly are glimpses of strong potential here – and we look forward to testing the A750 and A770 as major updates land and hopefully change the picture.

Right now, however, isn't the time to jump on the Arc.

The Intel Arc A750 Limited Edition has an MSRP of $289, and Intel is adamant that cards will be in stock and selling for that price on October 12. We're still unclear on UK availability and pricing but will update this article when we know more.

Pros

- Impressive ray tracing performance for a 1st gen architecture.

- Outperforms the RTX 3060 handily in certain games.

- Well-designed Limited Edition cooler.

- Overclocked fairly well.

- A750 offers better price-to-performance than A770 16GB.

Cons

- DX11 performance is woeful compared to DX12 or Vulkan.

- Resizable BAR support is an absolute must.

- We experienced numerous crashes, visual glitches and BSODs while testing.

- Frame times can be incredibly erratic in certain games.

- Overall efficiency is poor compared to RDNA 2.

- Pricing isn't currently aggressive enough to warrant the significant risk of purchase.

KitGuru says: We can't see Intel's entry to the dGPU market space as anything other than a good thing. Right now, however, the company has a lot of work to do before we can recommend purchasing an Arc graphics card.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards
