Today we take a look at the second Fury card to hit our labs, the Asus Radeon R9 Fury Strix. This card ships with the latest Asus DirectCU III cooler and a very modest overclock, activated directly via ASUS GPU Tweak 2 software. I was impressed with the Sapphire Tri-X R9 Fury when I tested it last week, so we will see today if Asus are able to bat at the same level.

There is no doubt that Fury is an important SKU for AMD: it is priced around £80 or more below the flagship Fury X, and so targets a broader enthusiast audience who might otherwise be interested in buying a custom GTX980.

If you want a brief recap of Fiji, the new architecture and HBM memory, visit this page.

| GPU | R9 390X | R9 290X | R9 390 | R9 290 | R9 380 | R9 285 | Fury X | Fury |
|---|---|---|---|---|---|---|---|---|
| Launch | June 2015 | Oct 2013 | June 2015 | Nov 2013 | June 2015 | Sep 2014 | June 2015 | June 2015 |
| DX Support | 12 | 12 | 12 | 12 | 12 | 12 | 12 | 12 |
| Process (nm) | 28 | 28 | 28 | 28 | 28 | 28 | 28 | 28 |
| Processors | 2816 | 2816 | 2560 | 2560 | 1792 | 1792 | 4096 | 3584 |
| Texture Units | 176 | 176 | 160 | 160 | 112 | 112 | 256 | 224 |
| ROPs | 64 | 64 | 64 | 64 | 32 | 32 | 64 | 64 |
| Boost GPU Clock (MHz) | 1050 | 1000 | 1000 | 947 | 970 | 918 | 1050 | 1000 |
| Memory Clock (MHz) | 6000 | 5000 | 6000 | 5000 | 5700 | 5500 | 500 | 500 |
| Memory Bus (bits) | 512 | 512 | 512 | 512 | 256 | 256 | 4096 | 4096 |
| Max Bandwidth (GB/s) | 384 | 320 | 384 | 320 | 182.4 | 176 | 512 | 512 |
| Memory Size (MB) | 8192 | 4096 | 8192 | 4096 | 4096 | 2048 | 4096 | 4096 |
| Transistors (millions) | 6200 | 6200 | 6200 | 6200 | 5000 | 5000 | 8900 | 8900 |
| TDP (watts) | 275 | 290 | 275 | 275 | 190 | 190 | 275 | 275 |

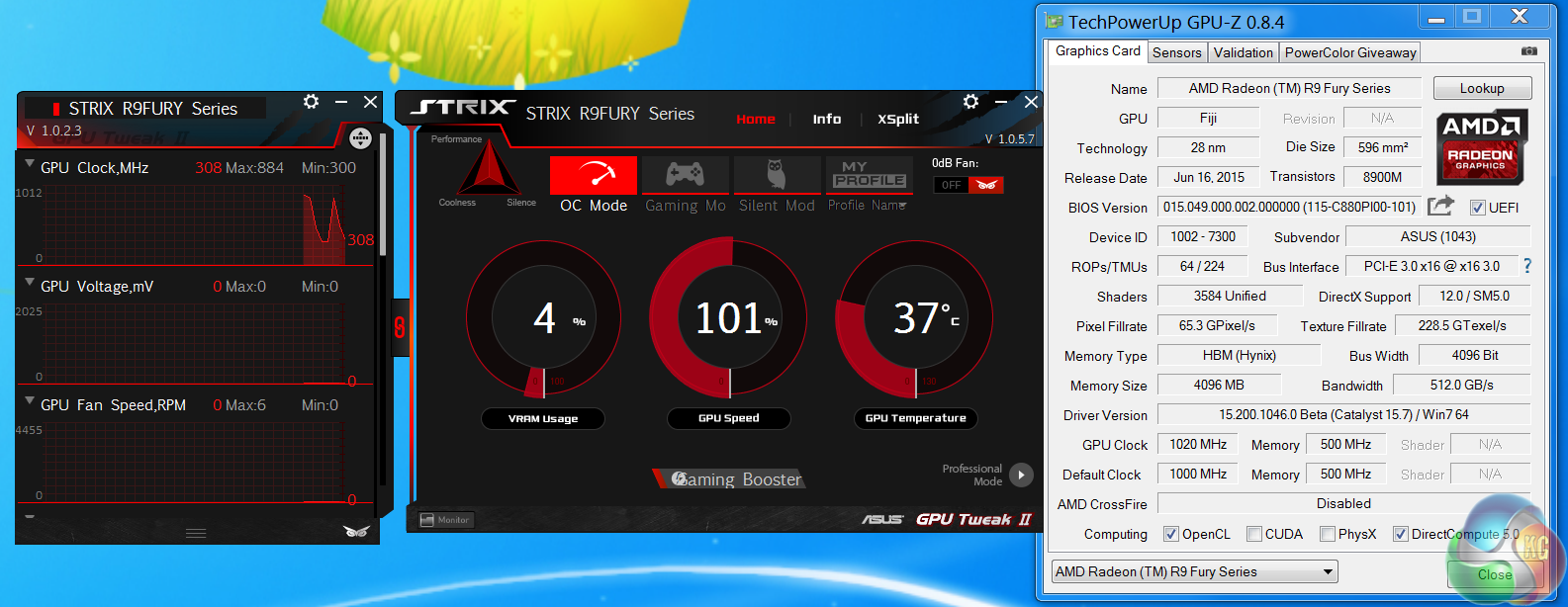

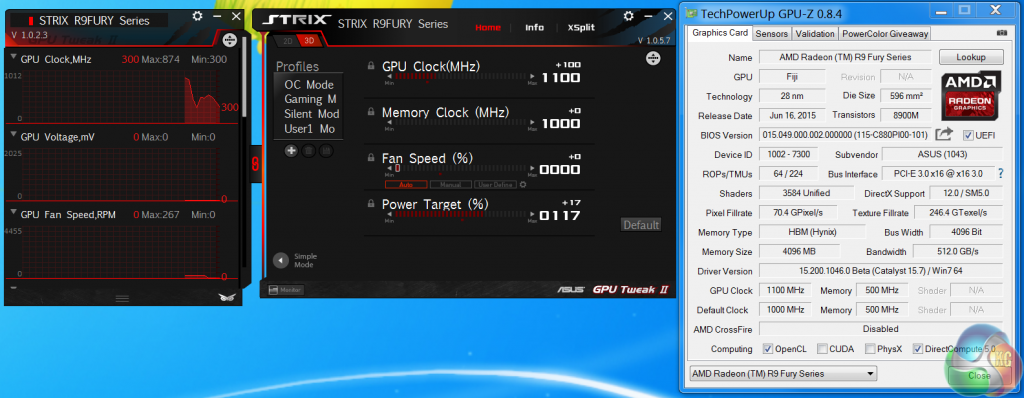

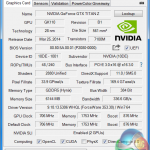

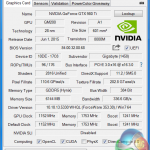

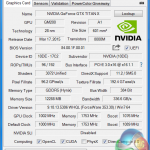

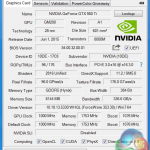

When we first started testing the Asus R9 Fury STRIX DC3 we noticed that the core speed was set at 1,000MHz, yet Overclockers UK have the card listed as an ‘OC’ model. I contacted ASUS UK about the 20MHz difference and they told me that the clock boost is tied into their latest version of GPU Tweak 2, shown in the image above. Yes, there is only one R9 Fury model available from ASUS.

ASUS could simply have set the BIOS clock speed to 1,020MHz and done away with the need for any software at all.

So is this card classed as an ‘OC’ model or not? If you install the proprietary software and click the OC button, then yes it is, but for those people who don't install the software, it's running at AMD's reference clock speed of 1,000MHz. We tested as Asus requested, at 1,020MHz with GPU Tweak 2 running in OC mode. Not a big deal in the grand scheme of things, but a little bewildering. On a brighter note, Asus GPU Tweak 2 is actually a really good piece of software, and appears to run in a very stable manner.

The AMD R9 Fury GPU is built on the 28nm process and is equipped with 64 ROPS, 224 texture units and 3,584 shaders. The more expensive Fury X has 64 ROPS, 256 Texture units and 4,096 shaders. They both have 4GB of HBM (Hynix) memory across a super wide 4096 bit memory interface. Bandwidth rating for both cards is 512 GB/s.
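To see where the 512 GB/s figure comes from, here is a minimal sketch of the usual peak-bandwidth arithmetic: bus width in bytes multiplied by the effective transfer rate. The function name is ours, and the sketch assumes the table's 500MHz HBM clock is double data rate (1,000 MT/s effective), while the GDDR5 figures in the table are already effective rates.

```python
def peak_bandwidth_gbs(bus_width_bits: int, effective_rate_mtps: float) -> float:
    """Peak memory bandwidth in GB/s: bytes per transfer x million transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * effective_rate_mtps / 1000


# (bus width in bits, effective rate in MT/s), taken from the spec table.
# Assumption: HBM at 500MHz double data rate = 1000 MT/s effective.
cards = {
    "R9 390X": (512, 6000),
    "R9 290X": (512, 5000),
    "R9 380": (256, 5700),
    "Fury / Fury X": (4096, 1000),
}

for name, (bus_bits, rate) in cards.items():
    print(f"{name}: {peak_bandwidth_gbs(bus_bits, rate):.1f} GB/s")
```

The point of the comparison is that HBM reaches its bandwidth through a very wide, slow bus rather than a narrow, fast one.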

The Asus Strix OWL makes another appearance on the front of the box, with some specifications underneath.

Details of the cooler are listed on the rear of the box.

Inside the box is a software disc, sticker, literature on the product, and a power converter cable.

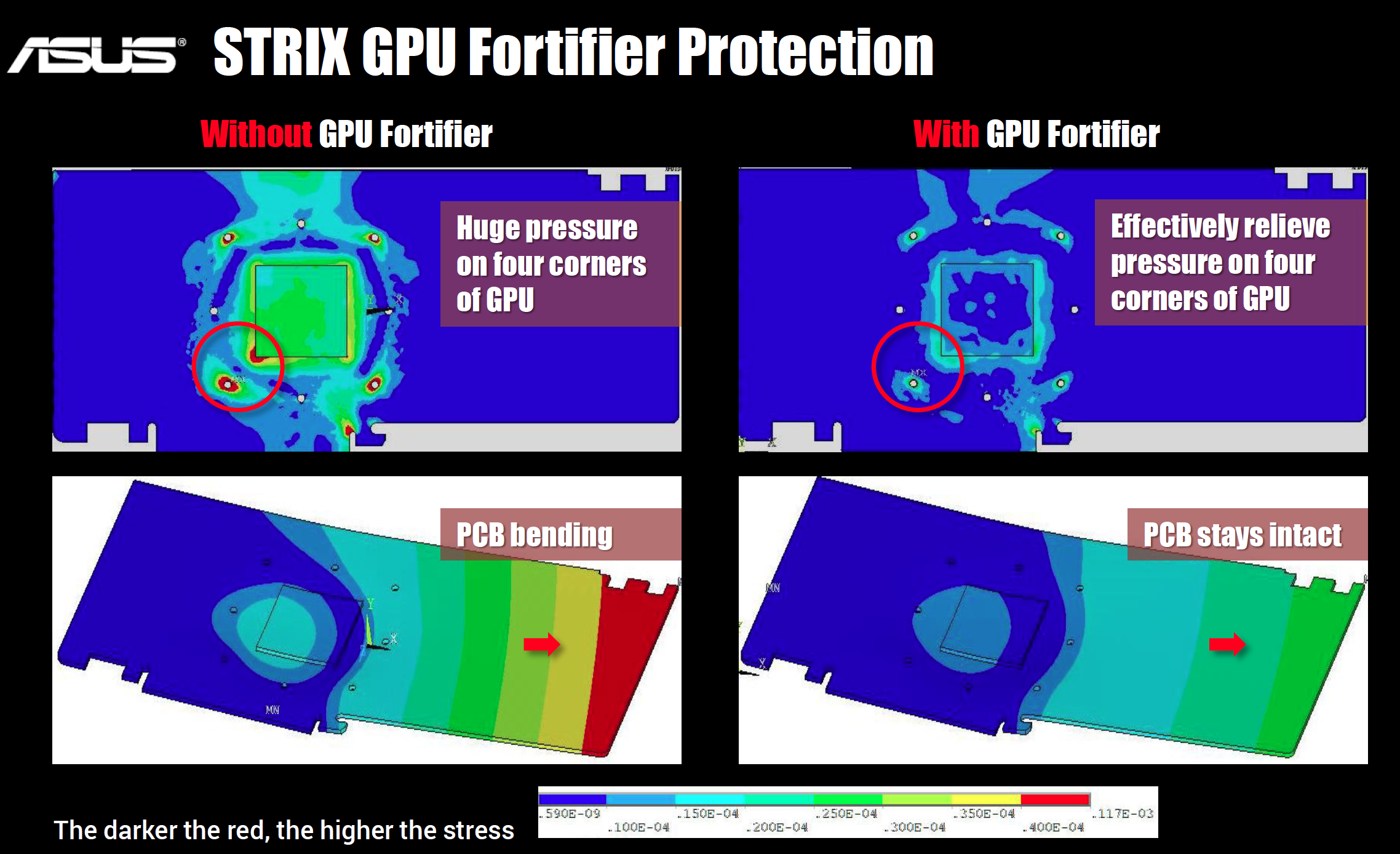

The Asus Radeon R9 Fury Strix is a big heavy card and it is fitted with a substantial back plate. They also install a ‘GPU Fortifier'. The triple fans are based on the ‘wing-blade’ technology which places more air pressure on the edge of the blades to increase maximum air flow without increasing noise levels.

This ‘GPU Fortifier' is said to give relief to the four corners of the GPU core on the PCB and to help ensure it doesn't bend either.

The card takes power from two eight pin power connectors.

AMD have ditched VGA and DVI connectivity on the rear I/O plate, opting for DisplayPort 1.2a and HDMI ports. We have no problems with this, especially as the card ships with a DVI converter cable. However, there is one rather niggling issue: AMD are still using HDMI 1.4a ports on their cards, which are limited to 30Hz at Ultra HD 4K.

If you want to game on a large Ultra HD 4K television it is likely it won’t have a DisplayPort connector, as only a small percentage do. You are therefore not able to get 60Hz at the native resolution, and no one wants to play games at 30Hz. Nvidia cards have had full HDMI 2.0 support for quite some time now. This may not be an issue for many, but we have already noticed enthusiast gamers complaining about AMD’s lack of HDMI 2.0 support on our Facebook page. I think this is a rather glaring oversight by AMD.
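The 30Hz ceiling comes down to pixel clock. The sketch below is a rough illustration rather than a full link-budget calculation: it assumes the standard CTA-861 timing for 3840×2160 (4400×2250 total pixels including blanking), 8-bit colour with no chroma subsampling, and the commonly quoted maximum TMDS clocks of 340MHz for HDMI 1.4 and 600MHz for HDMI 2.0. The constant and function names are ours.

```python
# Assumed maximum TMDS clocks per HDMI version (MHz), as commonly quoted.
HDMI_14_MAX_TMDS_MHZ = 340.0
HDMI_20_MAX_TMDS_MHZ = 600.0

# Assumed CTA-861 total timing for 3840x2160, including blanking intervals.
H_TOTAL, V_TOTAL = 4400, 2250


def pixel_clock_mhz(refresh_hz: float) -> float:
    """Pixel clock needed for 4K at the given refresh rate (8-bit RGB)."""
    return H_TOTAL * V_TOTAL * refresh_hz / 1e6


def fits(refresh_hz: float, max_tmds_mhz: float) -> bool:
    """True if the required pixel clock is within the link's TMDS limit."""
    return pixel_clock_mhz(refresh_hz) <= max_tmds_mhz


for hz in (30, 60):
    clock = pixel_clock_mhz(hz)
    print(
        f"4K @ {hz}Hz needs {clock:.0f}MHz: "
        f"HDMI 1.4 {'ok' if fits(hz, HDMI_14_MAX_TMDS_MHZ) else 'too slow'}, "
        f"HDMI 2.0 {'ok' if fits(hz, HDMI_20_MAX_TMDS_MHZ) else 'too slow'}"
    )
```

Under these assumptions 4K at 60Hz needs roughly twice the clock that an HDMI 1.4 link can carry, which is why the card tops out at 30Hz over HDMI.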

On a more positive note, the card supports 4 monitors natively and up to six monitors with an MST hub or by daisy chaining. There is full support for AMD Freesync, VSR, Liquid VR and bridgeless Crossfire.

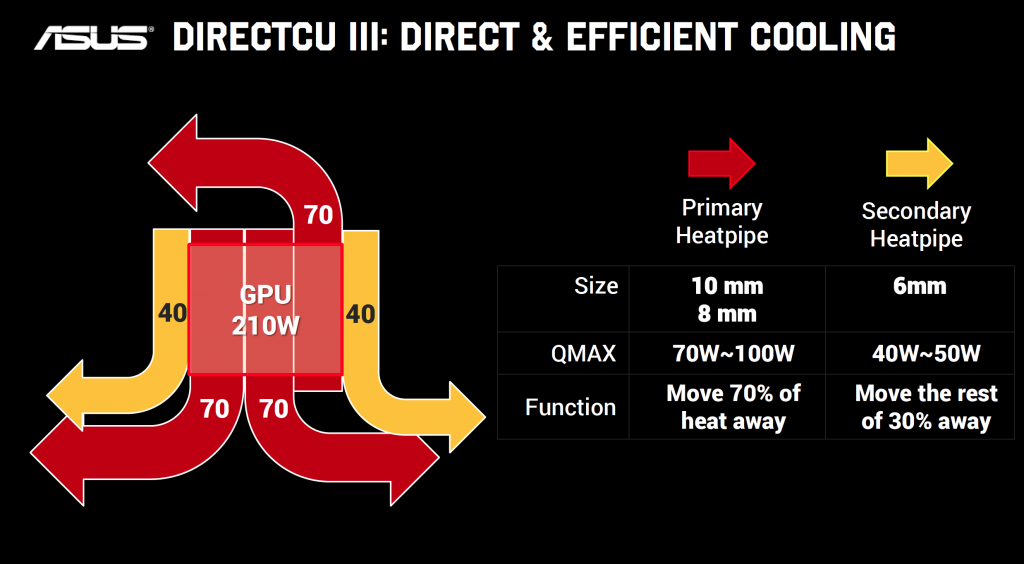

Sapphire didn't want us to disassemble their card, although ASUS seemed much more relaxed about us taking the card apart. After we had finished testing we disassembled the R9 Fury card. We can see the cooler comprises 5 thick nickel plated copper heatpipes running into two racks of aluminum fins on either side. Parts of the DirectCU III cooler also cool the VRM components underneath.

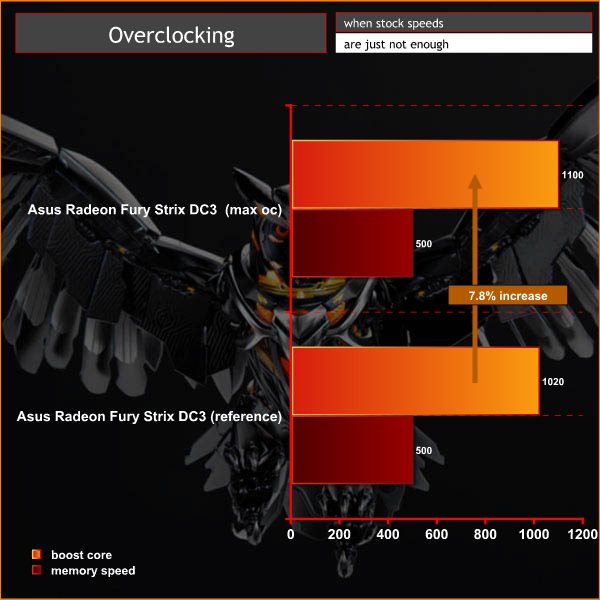

The diagram above came from ASUS and shows how their cooler design works.

As we spent a lot of time retesting all our cards with the latest AMD and NVIDIA drivers we felt it was worthwhile adding in results from the Fury 4GB with it overclocked to breaking point. How far did we manage to get it clocked?

We used the version of GPU Tweak 2 that ASUS supplied for this card – Ver 1057.

Right now overclocking the memory proves difficult: the option is locked by default and the slider has disappeared completely with Catalyst 15.7. For now we managed to overclock the core by 80MHz over the OC mode setting, to 1,100MHz. Increasing the power settings further didn’t help improve the GPU clocks.
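To put those clock speeds in relative terms, here is a small sketch of the percentage gains involved. It uses only the clocks quoted in this review (1,000MHz reference, 1,020MHz in GPU Tweak 2 OC mode, 1,100MHz manual overclock); the helper name is ours.

```python
def pct_gain(base_mhz: float, new_mhz: float) -> float:
    """Percentage increase of new_mhz over base_mhz."""
    return (new_mhz - base_mhz) / base_mhz * 100


REFERENCE_MHZ = 1000  # AMD reference clock, as shipped in the BIOS
OC_MODE_MHZ = 1020    # GPU Tweak 2 "OC" mode
MANUAL_OC_MHZ = 1100  # our manual overclock

print(f"OC mode over reference:   {pct_gain(REFERENCE_MHZ, OC_MODE_MHZ):.1f}%")
print(f"Manual OC over OC mode:   {pct_gain(OC_MODE_MHZ, MANUAL_OC_MHZ):.1f}%")
print(f"Manual OC over reference: {pct_gain(REFERENCE_MHZ, MANUAL_OC_MHZ):.1f}%")
```

Core clock gains rarely translate one-to-one into frame rate, so these figures are best read as an upper bound on what the overclock can deliver.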

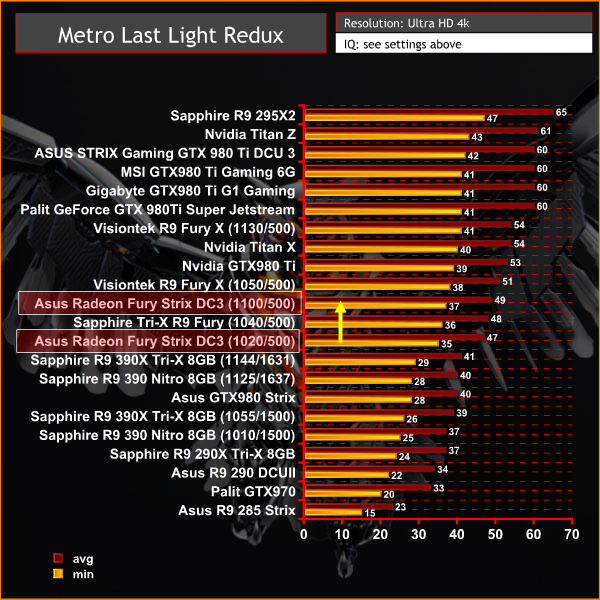

All our graphs today will show performance from the Fury 4GB at 1,020MHz, along with a yellow arrow pointing upwards to indicate additional results at 1,100MHz.

On this page we present some high resolution images of the product taken with a Canon 1DX and Canon 28-70mm F2.8 lens. These will take much longer to open due to the dimensions, especially on slower connections. If you use these pictures on another site or publication, please credit Kitguru.net as the owner/source.

For the last 20 days we have been testing and retesting all the video cards in this review with the latest 15.6 Catalyst and 353.30 Forceware drivers. We have also selected some new game sections to benchmark during our ‘real world runs’.

If you want to read more about our test system, or are interested in buying the same Kitguru Test Rig, check out our article with links on this page. We are using an Asus PB287Q 4k and Apple 30 inch Cinema HD monitor for this review today.

Due to reader feedback we have changed the 1600p tests to 1440p, and we have also disabled Nvidia specific features such as Hairworks in The Witcher 3: Wild Hunt as it can have such a negative impact on partnering hardware.

Anti Aliasing is also now disabled in our tests at Ultra HD 4K as readers have indicated they don’t need it at such a high resolution.

If you have other suggestions please email me directly at zardon(at)kitguru.net.

Cards on test:

Asus Radeon R9 Fury Strix DC3 OC (1,020MHz core / 500MHz memory) & (1,100MHz core)

Sapphire Tri-X Radeon R9 Fury 4GB (1,040MHz core / 500MHz memory)

Palit Geforce GTX980 Ti Super Jetstream (1,152MHz core / 1,753MHz memory)

MSI GTX980 Ti Gaming 6G (1,178MHz core / 1,774MHz memory)

ASUS STRIX Gaming GTX 980 Ti DirectCU 3 (1,216MHz core / 1,800MHz memory)

Visiontek Radeon R9 Fury X 4GB (1,050MHz core / 500MHz memory) & (1,130MHz core)

Sapphire R9 295X2 (1,018MHz core / 1,250MHz memory)

Nvidia Titan Z (706MHz core / 1,753MHz memory)

Gigabyte GTX980 Ti G1 Gaming (1,152MHz core / 1,753MHz memory)

Nvidia Titan X (1,002MHz core / 1,753MHz memory)

Nvidia GTX 980 Ti (1,000MHz core / 1,753MHz memory)

Asus GTX980 Strix (1,178MHz core / 1,753MHz memory)

Sapphire R9 390 X 8GB (1,055MHz core / 1,500MHz memory) & (1,144MHz core / 1,631MHz memory)

Sapphire R9 390 Nitro 8GB (1,010MHz core / 1,500MHz memory) & (1,125MHz core / 1,637MHz memory)

Sapphire R9 290 X 8GB (1,020MHz core / 1,375MHz memory)

Asus R9 290 Direct CU II (1,000MHz core / 1,250MHz memory)

Asus R9 285 Strix (954MHz core / 1,375MHz memory)

Palit GTX970 (1,051MHz core / 1,753MHz memory)

Software:

Windows 7 Enterprise 64 bit

Unigine Heaven Benchmark

Unigine Valley Benchmark

3DMark Vantage

3DMark 11

3DMark

Fraps Professional

Steam Client

FurMark

Games:

Grid AutoSport

Tomb Raider

Grand Theft Auto 5

The Witcher 3: Wild Hunt

Metro Last Light Redux

We test under real world conditions, meaning KitGuru runs each game across five closely matched runs and then averages the results to get a representative figure. If we use scripted benchmarks, they are mentioned on the relevant page.
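As an illustration of that averaging step, here is a minimal sketch. It is not our actual tooling: the function name, the 5% spread threshold used to decide whether runs are "closely matched", and the sample frame rates are all hypothetical.

```python
from statistics import mean, median


def summarise_runs(fps_runs, max_spread=0.05):
    """Average several benchmark runs, rejecting sets that are not closely matched.

    max_spread is a hypothetical threshold: (max - min) / mean must not exceed it.
    """
    if len(fps_runs) < 2:
        raise ValueError("need at least two runs")
    avg = mean(fps_runs)
    spread = (max(fps_runs) - min(fps_runs)) / avg
    if spread > max_spread:
        raise ValueError(f"runs differ by {spread:.1%}; retest before averaging")
    return {"mean": avg, "median": median(fps_runs), "spread": spread}


# Hypothetical average frame rates from five runs (not measured data).
runs = [71.2, 70.8, 71.5, 70.9, 71.1]
result = summarise_runs(runs)
print(
    f"mean {result['mean']:.1f} fps, "
    f"median {result['median']:.1f} fps, "
    f"spread {result['spread']:.1%}"
)
```

When the runs are closely matched, the mean and median land very near each other, so the choice between them matters little; the spread check is what guards against a stray run skewing the result.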

Game descriptions edited with courtesy from Wikipedia.

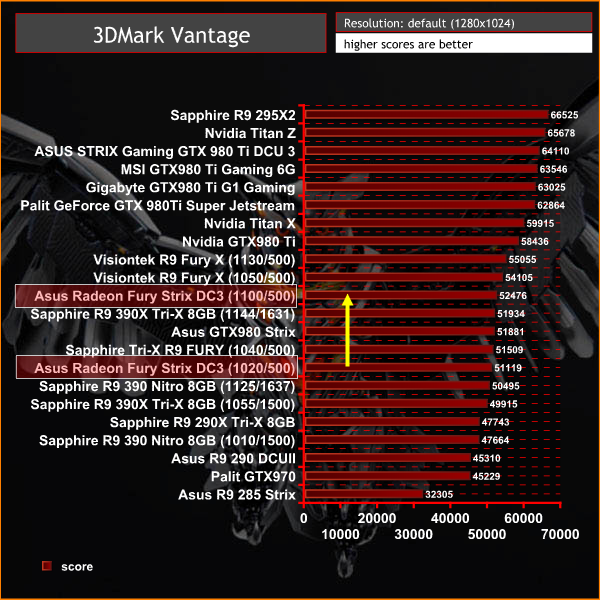

Futuremark released 3DMark Vantage, on April 28, 2008. It is a benchmark based upon DirectX 10, and therefore will only run under Windows Vista (Service Pack 1 is stated as a requirement) and Windows 7. This is the first edition where the feature-restricted, free of charge version could not be used any number of times. 1280×1024 resolution was used with performance settings.

The default scores are quite good, indicating strong performance with older DirectX 10 titles. When manually overclocked to 1,100MHz the scores increase, slotting in just behind the reference clocked Fury X.

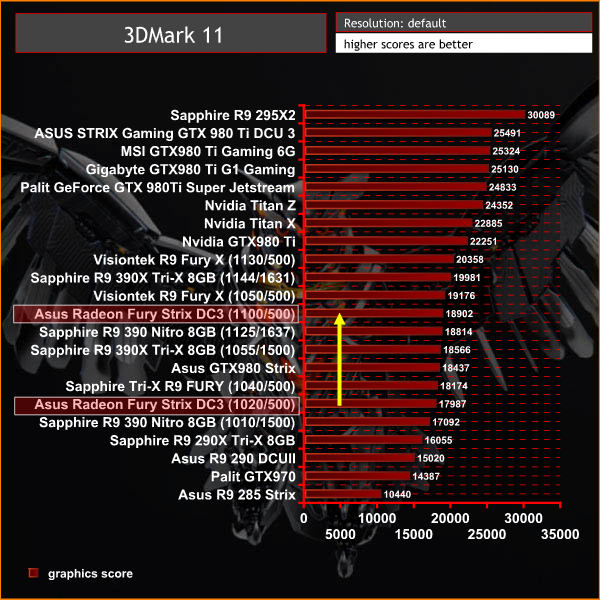

3DMark 11 is designed for testing DirectX 11 hardware running on Windows 7 and Windows Vista. The benchmark includes six all new benchmark tests that make extensive use of all the new features in DirectX 11, including tessellation, compute shaders and multi-threading. After running the tests 3DMark gives your system a score, with larger numbers indicating better performance. Trusted by gamers worldwide to give accurate and unbiased results, 3DMark 11 is the best way to test DirectX 11 under game-like loads.

Strong performance, again scoring just in behind the reference clocked R9 Fury X when overclocked to the limits.

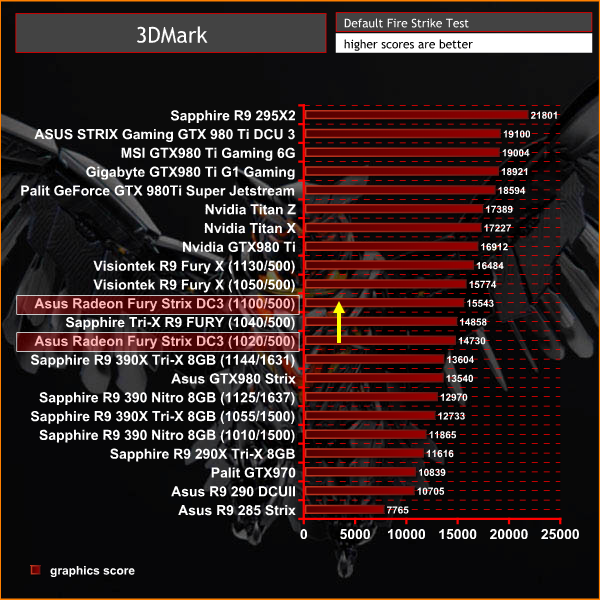

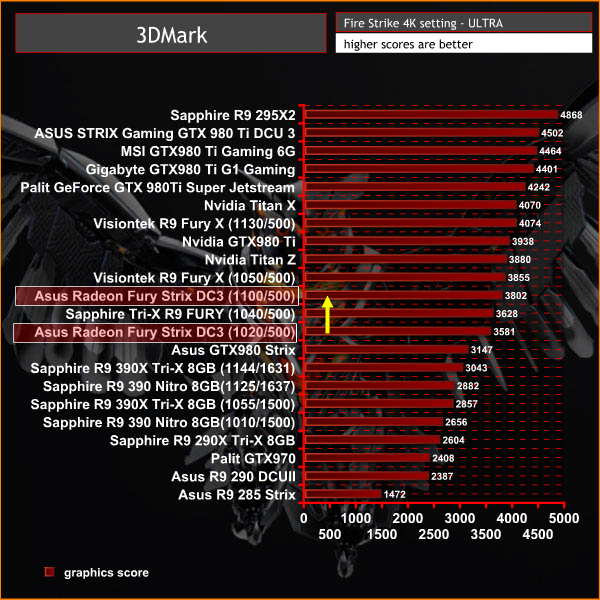

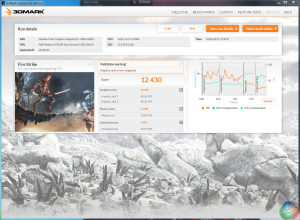

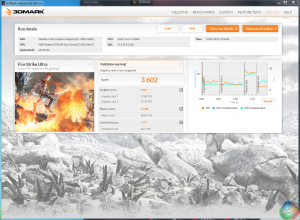

3DMark is an essential tool used by millions of gamers, hundreds of hardware review sites and many of the world’s leading manufacturers to measure PC gaming performance.

Futuremark say “Use it to test your PC’s limits and measure the impact of overclocking and tweaking your system. Search our massive results database and see how your PC compares or just admire the graphics and wonder why all PC games don’t look this good.

To get more out of your PC, put 3DMark in your PC.”

Excellent performance at 4K resolutions, placing the Asus Fury at 1,100MHz just in behind the reference clocked Fury X.

Unigine provides an interesting way to test hardware. It can be easily adapted to various projects due to its elaborated software design and flexible toolset. A lot of their customers claim that they have never seen such extremely-effective code, which is so easy to understand.

Heaven Benchmark is a DirectX 11 GPU benchmark based on advanced Unigine engine from Unigine Corp. It reveals the enchanting magic of floating islands with a tiny village hidden in the cloudy skies. Interactive mode provides emerging experience of exploring the intricate world of steampunk. Efficient and well-architected framework makes Unigine highly scalable:

- Multiple API (DirectX 9 / DirectX 10 / DirectX 11 / OpenGL) render

- Cross-platform: MS Windows (XP, Vista, Windows 7) / Linux

- Full support of 32bit and 64bit systems

- Multicore CPU support

- Little / big endian support (ready for game consoles)

- Powerful C++ API

- Comprehensive performance profiling system

- Flexible XML-based data structures

We test at 2560×1440 with quality setting at ULTRA, Tessellation at NORMAL, and Anti-Aliasing at x2.

AMD have made improvements with their Tessellation performance, although Nvidia still hold a clear lead in this area. Regardless, the Fury card scores well at these high image quality settings.

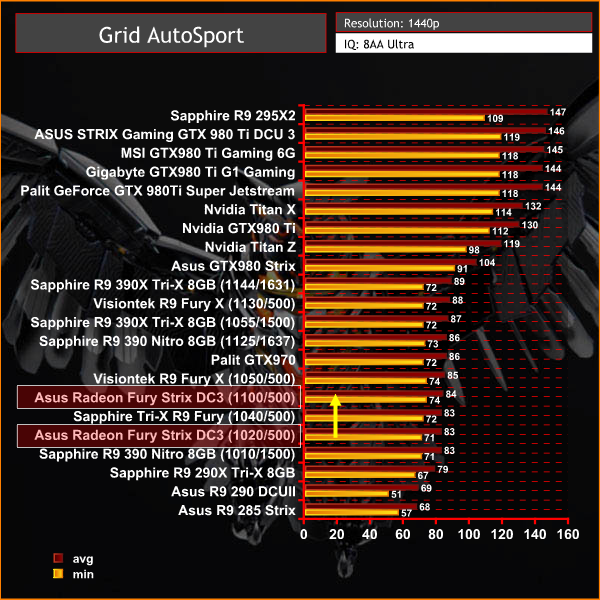

Grid Autosport (styled as GRID Autosport) is a racing video game by Codemasters and is the sequel to 2008′s Race Driver: Grid and 2013′s Grid 2. The game was released for Microsoft Windows, PlayStation 3 and Xbox 360 on June 24, 2014. (Wikipedia).

We test with the image quality on ULTRA and 8x anti aliasing enabled.

The Asus Fury is able to maintain a frame rate above 60 at all times, meaning perfectly smooth playback. When we compare against other solutions, we can see the card is underperforming a little at 1440p.

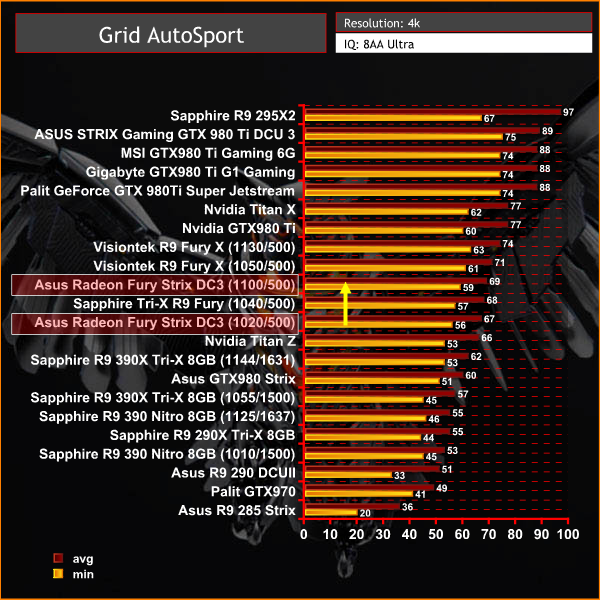

We test with the image quality on ULTRA and 8x anti aliasing enabled.

Super smooth at Ultra HD 4K, maintaining well in excess of 60 frames per second at all times and averaging 88 frames per second. It is clear to see that due to the high bandwidth nature of the architecture the Fury cards compete much better at higher resolutions.

Tomb Raider received much acclaim from critics, who praised the graphics, the gameplay and Camilla Luddington’s performance as Lara with many critics agreeing that the game is a solid and much needed reboot of the franchise. Much criticism went to the addition of the multiplayer which many felt was unnecessary. Tomb Raider went on to sell one million copies in forty-eight hours of its release, and has sold 3.4 million copies worldwide so far. (Wikipedia).

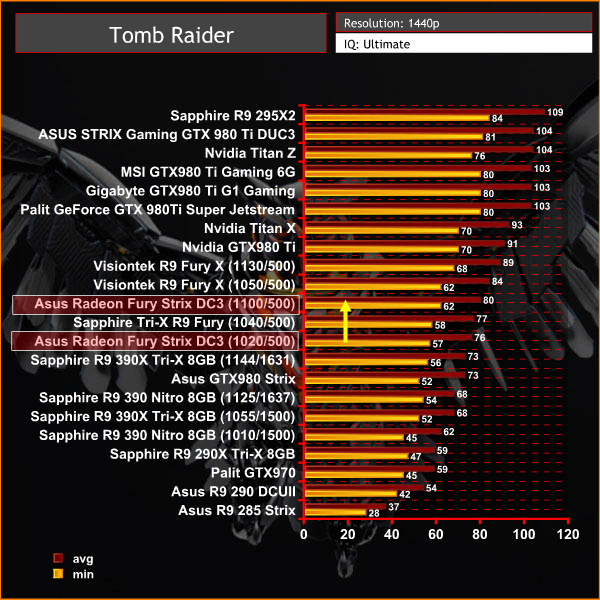

We test at 1440p with the ‘ULTIMATE’ image quality profile selected.

Tomb Raider is powered by a very capable engine and the Fury hardware has no problems powering the engine at 1440p, averaging well over 70 frames per second.

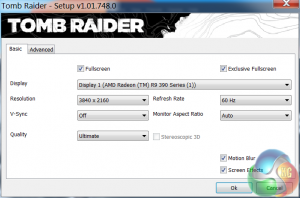

We test at 3840×2160 (4K) with the ‘ULTIMATE’ image profile selected. We normally reduce the image quality profile to ‘ULTRA’ at this resolution, but we decided to keep it at the highest image quality possible.

At these settings and at 4K resolution, the Fury card struggles to hold a minimum frame rate of 30 at all times. Lowering the image quality setting from Ultimate to Ultra would help improve the smoothness of the engine. Either that, or install another Fury card and run them in Crossfire.

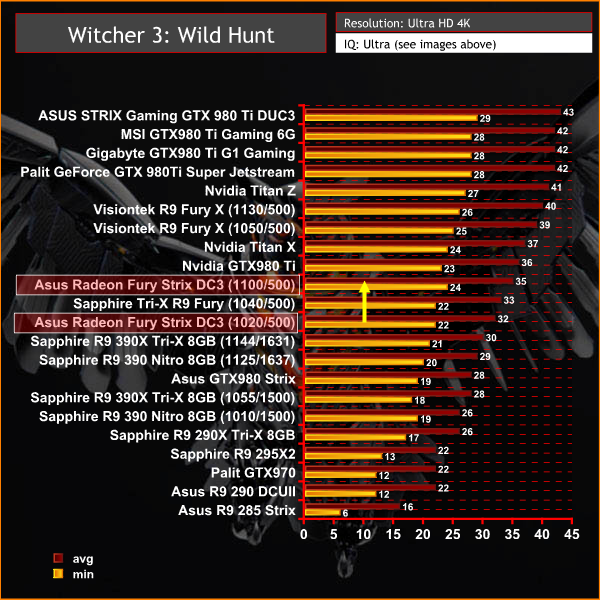

The Witcher 3: Wild Hunt (Polish: Wiedźmin 3: Dziki Gon) is an action role-playing video game set in an open world environment, developed by Polish video game developer CD Projekt RED. The Witcher 3: Wild Hunt concludes the story of the witcher Geralt of Rivia, the series’ protagonist, whose story to date has been covered in the previous versions. Continuing from The Witcher 2, the ones who sought to use Geralt are now gone. Geralt seeks to move on with his own life, embarking on a new and personal mission whilst the world order itself is coming to a change.

Geralt’s new mission comes in dark times as the mysterious and otherworldly army known as the Wild Hunt invades the Northern Kingdoms, leaving only blood soaked earth and fiery ruin in its wake; and it seems the Witcher is the key to stopping their cataclysmic rampage. (Wikipedia).

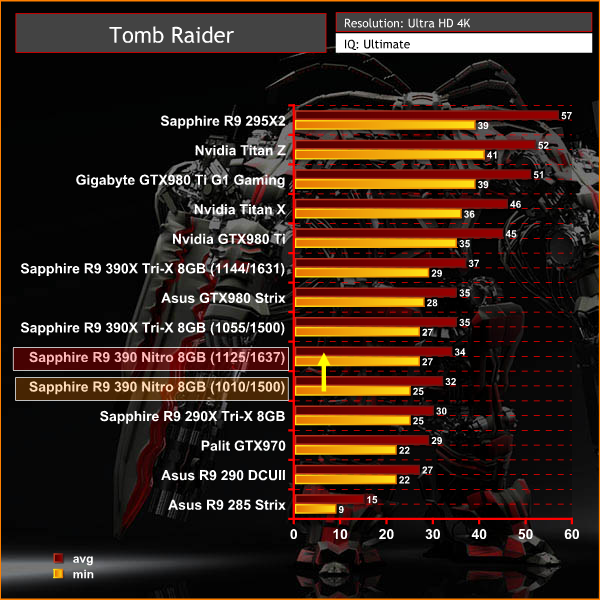

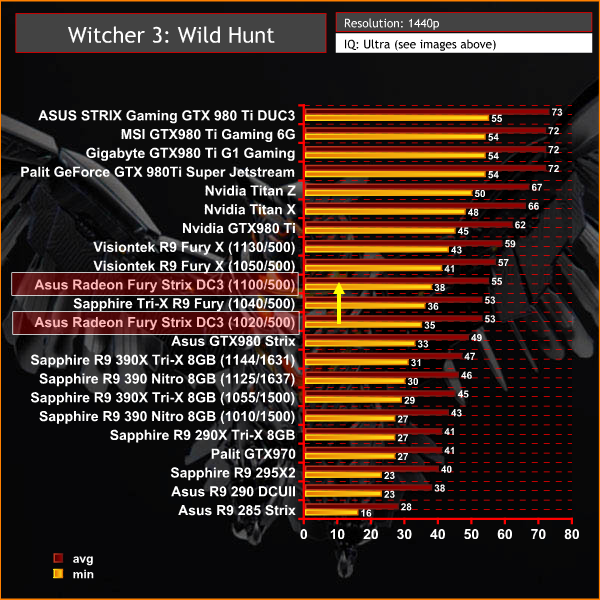

We test with the highest image quality settings, although I have disabled the Nvidia Hairworks option specifically as it does kill frame rate on many cards. Graphics Preset is on ULTRA and Postprocessing is on HIGH.

I have played The Witcher 3 for around 85 hours and I have completed the single player campaign. I tested the game today by playing 4 different save game stages for 5 minutes each, then averaging the frame rate results for a real world indication of performance – one of the map sections we tested is one of the most demanding in the game and our results can be considered strictly ‘worst case'. The Witcher 3 is a dynamic world, so it is important to run tests multiple times to remove any discrepancies.

This is one of the greatest PC games ever released in my opinion, so I spent around a total of 48 hours benchmarking it for this review alone – it should be on your must have list, if you don't have it already.

Perfectly playable at 1440p, and a smooth overall gaming experience.

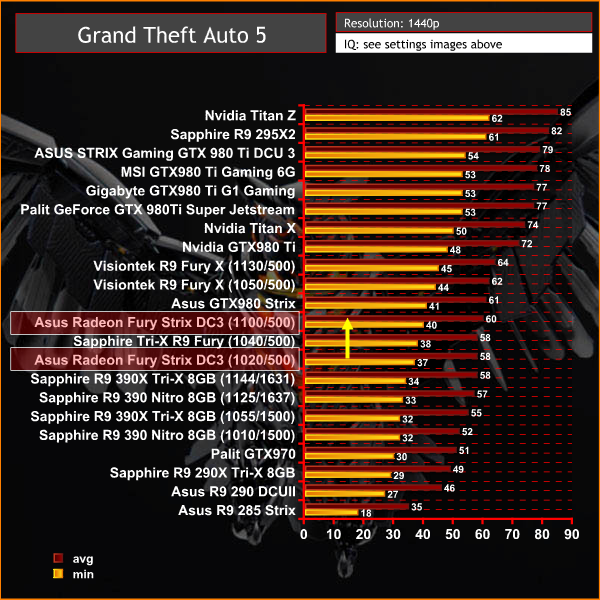

Too demanding really at these settings and resolution, dropping below 25 fps at times. The overclocked GTX980 Ti cards deliver better overall engine performance at 4K.

Grand Theft Auto V is an action-adventure game played from either a first-person or third-person view. Players complete missions (linear scenarios with set objectives) to progress through the story.

Outside of missions, players can freely roam the open world. Composed of the San Andreas open countryside area and the fictional city of Los Santos, the world of Grand Theft Auto V is much larger in area than earlier entries in the series.

The world may be fully explored from the beginning of the game without restrictions, although story progress unlocks more gameplay content. (Wikipedia).

We maximised every slider – FXAA was enabled, although we left all other Anti Aliasing settings disabled – based on reader feedback from previous reviews. ‘Ignore Suggested Limits’ was turned ‘ON’.

We found some intensive sections of the Grand Theft Auto 5 world and tested each card multiple times to confirm accuracy. The game demanded around 3.5GB of GPU memory at 1440p and just over 4GB at 4K.

A great game experience, very smooth at all times.

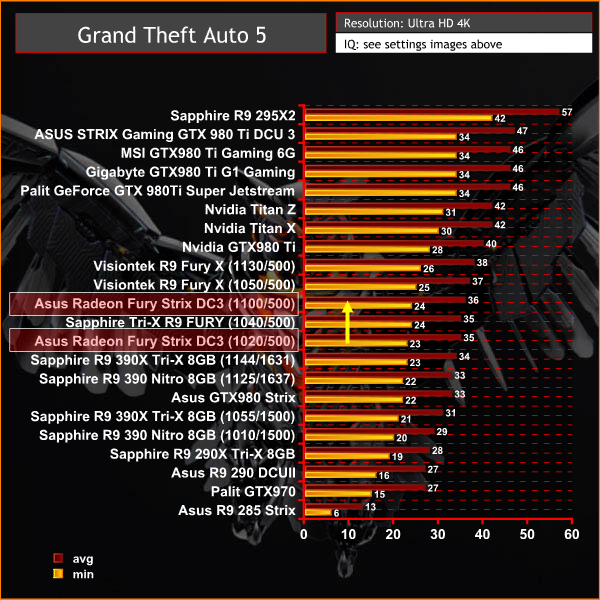

We wouldn't class this performance as playable, so some image quality settings would need to be reduced to raise the minimum frame rate. That said, the Fury cards score well at 4K, just in behind the GTX980 Ti solutions and Titan X.

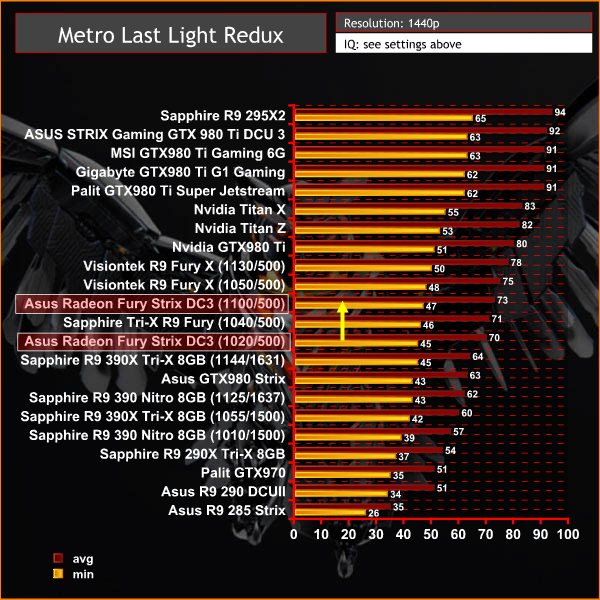

On May 22, 2014, a Redux version of Metro Last Light was announced. It was released on August 26, 2014 in North America and August 29, 2014 in Europe for the PC, PlayStation 4 and Xbox One. Redux adds all the DLC and graphical improvements. A compilation package, titled Metro Redux, was released at the same time which includes Last Light and 2033. (Wikipedia).

We test with the following settings: Quality-Very High, SSAA-off, Texture Filtering-16x, Motion Blur-Normal, Tessellation-Normal, Advanced PhysX-off.

Good performance from the Asus Fury card at 1440p, falling in behind the reference clocked GTX980 Ti and Fury X.

At 4K we test with the following settings: Quality-High, SSAA-off, Texture Filtering-16x, Motion Blur-Normal, Tessellation-Normal, Advanced PhysX-off.

4K performance from the Fury is excellent, holding above 35 frames per second at all times. The Fury X performs very well too, at a similar level to the Titan X.

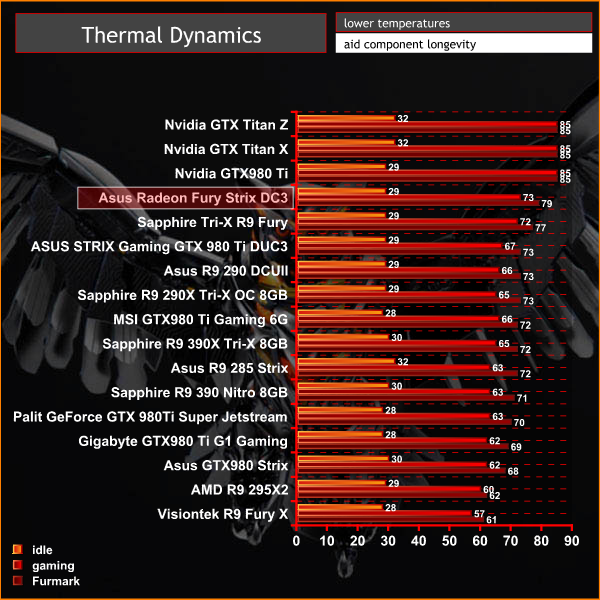

The tests were performed in a controlled air conditioned room with temperatures maintained at a constant 23c – a comfortable environment for the majority of people reading this.

Idle temperatures were measured after sitting at the desktop for 30 minutes. Load measurements were acquired by playing Crysis Warhead for 30 minutes and measuring the peak temperature. We also have included Furmark results, recording maximum temperatures throughout a 30 minute stress test. All fan settings were left on automatic.

The Direct CU III cooler performs well, holding gaming load at around 73c, just a single degree behind the Tri-X cooler fitted to the Sapphire model.

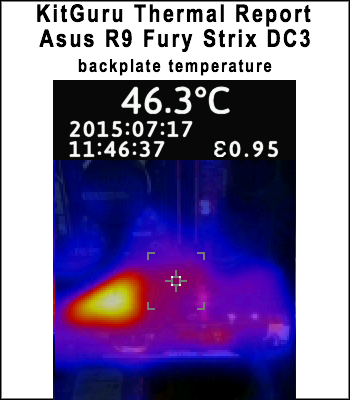

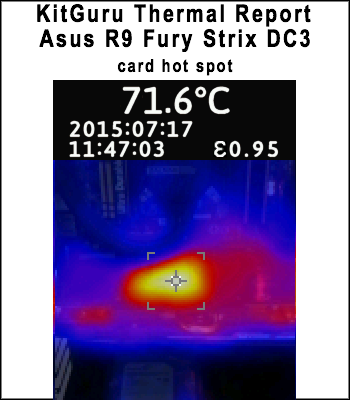

We install the graphics card into our system and measure temperatures on the back of the PCB with our Fluke Visual IR Thermometer/Infrared Thermal Camera. This is a real world running environment.

Details shown below.

We took a recording after 30 minutes of gaming in Witcher 3. The backplate on the R9 Fury helps to spread heat evenly across the full length of the PCB. Under extended load temperatures range between 43c and 48c.

The hottest part of the card is the exposed ‘GPU Fortifier' area – which peaks around 72c under full load.

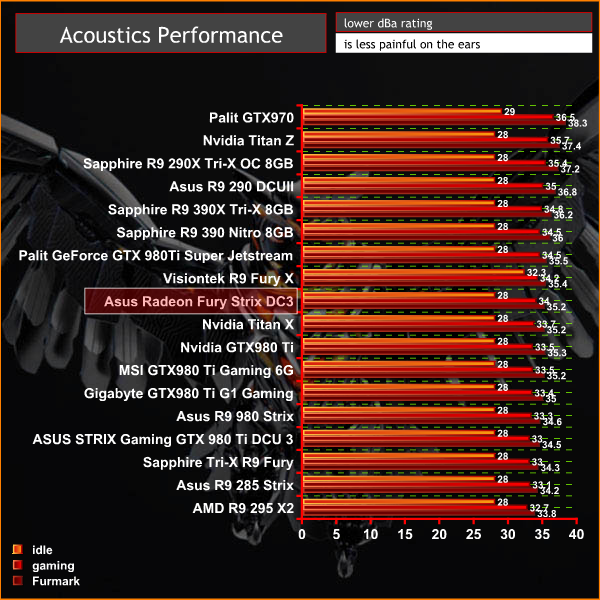

We have built a system inside a Lian Li chassis with no case fans and have used a fanless cooler on our CPU. The motherboard is also passively cooled. This gives us a build with almost completely passive cooling and it means we can measure noise of just the graphics card inside the system when we run looped 3DMark tests.

We measure from a distance of around 1 meter from the closed chassis and 4 feet from the ground to mirror a real world situation. Ambient noise in the room measures close to the limits of our sound meter at 28dBA. Why do this? Well this means we can eliminate secondary noise pollution in the test room and concentrate on only the video card. It also brings us slightly closer to industry standards, such as DIN 45635.

KitGuru noise guide

10dBA – Normal Breathing/Rustling Leaves

20-25dBA – Whisper

30dBA – High Quality Computer fan

40dBA – A Bubbling Brook, or a Refrigerator

50dBA – Normal Conversation

60dBA – Laughter

70dBA – Vacuum Cleaner or Hairdryer

80dBA – City Traffic or a Garbage Disposal

90dBA – Motorcycle or Lawnmower

100dBA – MP3 player at maximum output

110dBA – Orchestra

120dBA – Front row rock concert/Jet Engine

130dBA – Threshold of Pain

140dBA – Military Jet takeoff/Gunshot (close range)

160dBA – Instant Perforation of eardrum
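When reading the guide above, it helps to remember that the decibel scale is logarithmic: every 10dB step corresponds to a tenfold increase in sound power. The short sketch below illustrates that relationship; the function name is ours and the 38dBA reading is a hypothetical example, not a measurement from this review.

```python
def sound_power_ratio(level_a_db: float, level_b_db: float) -> float:
    """How many times more sound power level A represents than level B."""
    return 10 ** ((level_a_db - level_b_db) / 10)


AMBIENT_DBA = 28.0       # ambient noise floor of our test room
HYPOTHETICAL_DBA = 38.0  # hypothetical card-under-load reading, for illustration only

ratio = sound_power_ratio(HYPOTHETICAL_DBA, AMBIENT_DBA)
print(f"{HYPOTHETICAL_DBA:.0f}dBA carries about {ratio:.0f}x the sound power of {AMBIENT_DBA:.0f}dBA")
```

This is why a difference of only a few dBA between two coolers can be clearly audible.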

The card is almost silent when idle. The triple fans spin up under load and the card is audible, although never too intrusive. The fans on the Direct CU 3 cooler fitted to the GTX980 Ti don't spin as fast, so noise levels are reduced.

The Asus Radeon R9 Fury Strix DC3 OC doesn't emit coil whine under extreme stress situations.

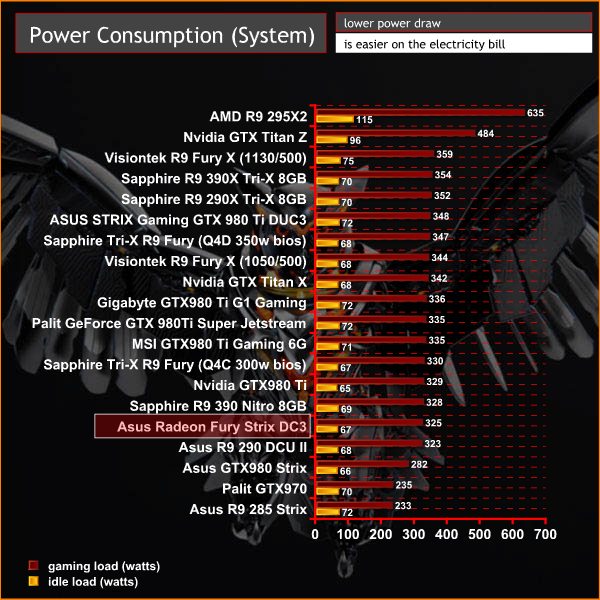

We measure power consumption from the whole system when idle and when gaming, excluding the monitor.

Under gaming load, the Asus Radeon R9 Fury Strix DC3 OC consumes around 325 watts of power, which is around 5 watts less than the Sapphire Tri-X R9 Fury card.

There is much to like about AMD's Fury X: the move to the new high bandwidth architecture has to be applauded and there is some indication that we are seeing signs of the potential at Ultra HD 4K, even at this early stage. We do feel this current generation of hardware has failed to truly inspire the enthusiast audience however, especially when custom GTX980 Ti cards from Nvidia partners are generally so far ahead at both 1440p and 4K resolutions.

AMD should improve performance via drivers over the coming months so we will revisit the Fiji hardware with new tests when Catalyst has matured a little more. We aren't keen to focus on ‘what if' situations though – we can only test what we have, right now. And right now, the Nvidia GTX980 Ti is still the high end card to buy.

The Fury X card we received was tainted with pump noise issues and horrible coil whine – and ours was delivered straight from the American retail channel. We have another Sapphire Fury X sample in our labs and pump noise and coil whine are clearly evident – we managed to get this card recently from Overclockers UK stock, before they sold out. We await another look at Fury X, when Revision 2 hits in the coming weeks. Sadly there is very limited, if any stock of either Rev 1 or Rev 2 Fury X cards in the UK right now.

We feel the air cooled Fury cards offer more than the Fury X – the custom coolers from Sapphire and ASUS are excellent, and the performance, when overclocked is very close to the ‘out of the box' Fury X. You can save a lot of money in the process too and we all like that.

Asus have cast away the ‘all in one' liquid cooler and have incorporated their Direct CU III cooler onto the PCB. This cooler is quiet and proficient – holding temperatures when gaming between 70c and 73c at all times. It didn't quite match the Sapphire Tri-X cooler in either noise emission or thermal tests, but it is very close indeed.

Asus have fitted their card with a substantial backplate and GPU Fortifier which helps flatten out the temperatures across the full length of the PCB, while enhancing rigidity. The only real hotspot is on the exposed PCB section at the rear of the Fiji core. It peaked at around 72c under full load, so well within safe parameters.

The lack of HDMI 2.0 support may not be critical for most gamers, but it matters for those of us gaming on large 4K television sets in the UK, many of which have yet to incorporate a DisplayPort connector. HDMI 1.4a is limited to 30Hz at the native resolution, unless you opt to pay more for a converter cable, which we haven't tested ourselves yet. I fail to see why AMD didn't try to work out a way to add native HDMI 2.0 support to their latest Fiji solutions.

Performance from the Fury card is not lacking, especially at 4K resolutions when the high bandwidth architecture shines – scaling to the highest resolutions is very impressive indeed. The direct comparison against the Asus GTX980 Strix (£420 at Amazon HERE) shows the Fury card winning all of the time at 3,840×2,160, and losing out only a couple of times in some game engines at 1440p.

We are a little surprised to see that the ASUS R9 Fury Strix DC3 is running at a reference 1,000MHz out of the box, with some retailers focusing on a 1,020MHz clock speed, which is only achievable by installing GPU Tweak 2 and then manually selecting the OC mode. Asus should have set the BIOS directly to this speed, or greater, without this reliance on proprietary software.

The AMD Fury is clearly designed to target and outperform the GTX980, and we intentionally selected an (almost) equally priced Asus GTX980 Strix for the comparison today. The problem for AMD is that Nvidia partners such as Palit have dropped their prices recently, and their Jetstream model is available for only £379.99 inc vat. The overclocked enhanced Palit model is only £10 more, at £389.99 inc vat. The excellent Gigabyte GTX980 Windforce model is also now £389.99 inc vat.

You can buy the Asus Radeon R9 Fury Strix DC3 from Overclockers UK for £455.99 inc vat. We do feel Fury hardware needs a little price reduction to become more tempting against competitively priced GTX980's – hopefully some deals will appear on big retailers in coming months.

Discuss on our Facebook page, over HERE.

Pros:

- looks attractive.

- high fan quality.

- backplate helps reduce hot spots.

- ASUS GPU Tweak 2.0 works well.

- excellent 4K performance.

Cons:

- It's a reference 1,000MHz out of the box, only GPU Tweak 2.0 software takes it to 1,020MHz on OC setting.

- no ability to overclock memory.

- Lacks HDMI 2.0 support.

- modest overclocking headroom.

- Pricing could be slightly more competitive against some GTX980 cards.

Kitguru says: The Asus R9 Fury Strix DC3 is a great performer, and offers good value when compared against the Fury X. It is quiet, cool and is faster than the GTX980.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

Wonder what settings were used for the Witcher 3. I can see horrible scaling for the 295×2. With the latest Catalyst drivers and game patch the 295×2 should be performing better then every single GPU in this review. Must be the issue with TemporalAA and crossfire.

The 295×2 results in those graphs is identical to the results in KG’s graphs before the latest 15.7 Catalyst drivers. My guess is, they have not re-tested the 295×2 with the new drivers yet, and are re-using the results from their previous testing, done with drivers that did not support Crossfire in Witcher 3 – thus why it looks remarkably like a 290X.

On the one hand, I understand, that would be a LOT of re-benchmarking to do on a lot of AMD cards with the new drivers. On the other hand, especially with Witcher 3, those new drivers are likely the difference between a third-to-last-place position and top-of-the-heap position for the 295×2.

I am currently updating tests with 15.7 – the text in this review states we are using 15.6 — ‘For the last 20 days we have been testing and retesting all the video cards in this review with the latest 15.6 Catalyst and 353.30 Forceware drivers.’

Unfortunately Catalyst 15.7 just appeared a short while ago and it takes a good amount of time to keep retesting with the latest drivers. A review next week will feature the new 15.7 driver results. thanks for your patience but it can be a full time job keeping these results always updated. thanks – Allan

Yeah I have no complaints about it, was just wondering the cause (since I only read parts of the review.). Doing benches all over again with each driver and update is PiA. Even doing personal benchmarks are PiA IMO.

Definitely not complaining, just wondering if it was due to using previous drivers, etc. Take you’re time. I know testing consumes a bunch of time even on a few pieces of hardware. Reviewers like you have too much on hand along with personal lives/jobs for people to be impatient.

it def adds performance. I just got a R9 380 4gb card as our 290 got unstable. its playing GTA V at 1440P at 60 FPS ALL day. with low AA and AF nothing crazy, but all high/ultra settings

I’m going into Pc gaming and leaving console gaming. I have done a lot of research and I decided to go with AMD because freesync monitors are cheaper. I want 1440p and well above 60fps. can some please recommend which card I should pick between Amd 390x , fury and fury x

https://pcpartpicker.com/forums/topic/95994-asus-fury-strix-or-390x

take the Fury, Asus model from this review

Very nice card. Hopefully prices will come down, of the Fury and 980, as supply of the Fury ramps up.

If you have the money take a fury i will say 🙂

If you like to save some i will take a AMD 290 🙂 it’s the same card as 390, and it’s only slight worse but the price is lower 🙂

And just be aware the freesync monitors only works from FPS X to X, like FPS 45 to 75. All over and all lower will freesync not work and not be running. I cannot give you the accurate frames from and to because it’s different on every freesync monitor. Just letting you know so you can look after that when you shop monitor 🙂

I’d wait for the fixed Fury X personally, From the air-cooled Furies currently available the Sapphire edges a win for me. If going for a Free-sync monitor you need to keep an eye on there working ranges, For example although a 144 hz monitor there free-sync range seems to be limited, For example many only work from a minimum of 40 hz or higher while another one I can think of works from 30 hz but tops out at 90 hz meaning if it goes anywhere outside that range Free-sync switches off. The G-sync monitors tend to have full range cover ie: 30- 120/144 but as you said they can often be a lot more expensive, For example a 27″ 144hz IPS monitor has a price difference of roughly 200 quid in the UK between Free-sync and G sync models. That is a big difference.

Nice performances givien the premature drivers. I’m ordering 2 fury strix.

id go dual r9 fury – the strix in other reviews is much more power efficient than the sapphire model

if you cross fire the 380 performance is on par with a titan x