It has been over two years since Nvidia announced the GTX 1080 in Texas. Those two years saw its GeForce 10 series, based on the Pascal architecture, dominate the graphics card market – if you wanted the fastest consumer card available, you had to go with Team Green.

Last month in Cologne, Germany, Nvidia took things a step further with the launch of its GeForce 20 series, and the unveiling of the new Turing architecture. Today we have reviews of both the RTX 2080 and RTX 2080 Ti, but this article is concerned with the former graphics card. Priced at £749 here in the UK – more than last generation's flagship GTX 1080 Ti – what can the RTX 2080 offer to those who don't want to spend over £1000 for a single graphics card?

For the last few weeks, the rumour mill has been spinning at a furious rate, focusing on the question: why didn't Nvidia talk about real-world gaming performance of its new GPUs? Well, today we can present a full array of game benchmarks to put that question to bed.

It is also worth noting right from the off that, although real-time ray tracing is the headline new feature of these cards, we cannot present ray tracing benchmarks in games today. The reason? Ray tracing support in games currently depends on Microsoft releasing DirectX Raytracing (DXR), its API update to DX12. This is currently scheduled for October, and that's all we know.

We have tested one demo utilising real-time ray tracing, which is detailed later in the review, but apart from that, all the benchmark figures and graphs are from games as we know them (i.e. games that use rasterisation, not ray tracing).

| GPU | RTX 2080 Ti (FE) | GTX 1080 Ti (FE) | RTX 2080 (FE) | GTX 1080 (FE) |

| SMs | 68 | 28 | 46 | 20 |

| CUDA Cores | 4352 | 3584 | 2944 | 2560 |

| Tensor Cores | 544 | N/A | 368 | N/A |

| Tensor FLOPS | 114 | N/A | 85 | N/A |

| RT Cores | 68 | N/A | 46 | N/A |

| Texture Units | 272 | 224 | 184 | 160 |

| ROPs | 88 | 88 | 64 | 64 |

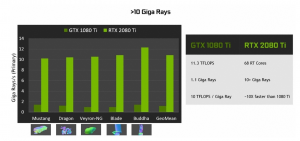

| Rays Cast | 10 Giga Rays/sec | 1.1 Giga Rays/sec | 8 Giga Rays/sec | 0.877 Giga Rays/sec |

| RTX Performance | 87 Trillion RTX-OPS | 11.3 Trillion RTX-OPS | 60 Trillion RTX-OPS | 8.9 Trillion RTX-OPS |

| GPU Boost Clock | 1635 MHz | 1582 MHz | 1800 MHz | 1733 MHz |

| Memory Clock | 7000 MHz | 5505 MHz | 7000 MHz | 5005 MHz |

| Total Video Memory | 11GB GDDR6 | 11GB GDDR5X | 8GB GDDR6 | 8GB GDDR5X |

| Memory Interface | 352-bit | 352-bit | 256-bit | 256-bit |

| Memory Bandwidth | 616 GB/sec | 484 GB/sec | 448 GB/sec | 320 GB/sec |

| TDP | 260W | 250W | 225W | 180W |
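The Memory Bandwidth row follows directly from the other memory rows: the effective per-pin data rate multiplied by the bus width, divided by eight bits per byte. The short Python sketch below reproduces the table's figures. It assumes that each listed memory clock corresponds to a per-pin data rate of twice that number in Mbps, as the review states for GDDR6 (7000 MHz equating to 14Gbps). The helper names are our own.

```python
def data_rate_gbps(memory_clock_mhz: float) -> float:
    # Assumption: the per-pin data rate is twice the listed memory clock,
    # matching the review's "7000 MHz equates to 14Gbps" for GDDR6.
    return memory_clock_mhz * 2 / 1000


def memory_bandwidth_gbs(rate_gbps: float, bus_width_bits: int) -> float:
    # Peak bandwidth in GB/s: per-pin rate (Gbit/s) x bus width (pins) / 8 bits per byte.
    return rate_gbps * bus_width_bits / 8


# (memory clock in MHz, memory interface width in bits), taken from the table above.
cards = {
    "RTX 2080 Ti": (7000, 352),
    "GTX 1080 Ti": (5505, 352),
    "RTX 2080": (7000, 256),
    "GTX 1080": (5005, 256),
}

for name, (clock_mhz, bus_bits) in cards.items():
    print(f"{name}: {memory_bandwidth_gbs(data_rate_gbps(clock_mhz), bus_bits):.0f} GB/s")

uplift = memory_bandwidth_gbs(data_rate_gbps(7000), 256) / memory_bandwidth_gbs(data_rate_gbps(5005), 256) - 1
print(f"RTX 2080 over GTX 1080: +{uplift:.0%}")
```

Rounded, this prints 616, 484, 448 and 320 GB/s, followed by a 40% uplift for the RTX 2080 over the GTX 1080. These match the table and the figure quoted later in the text.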

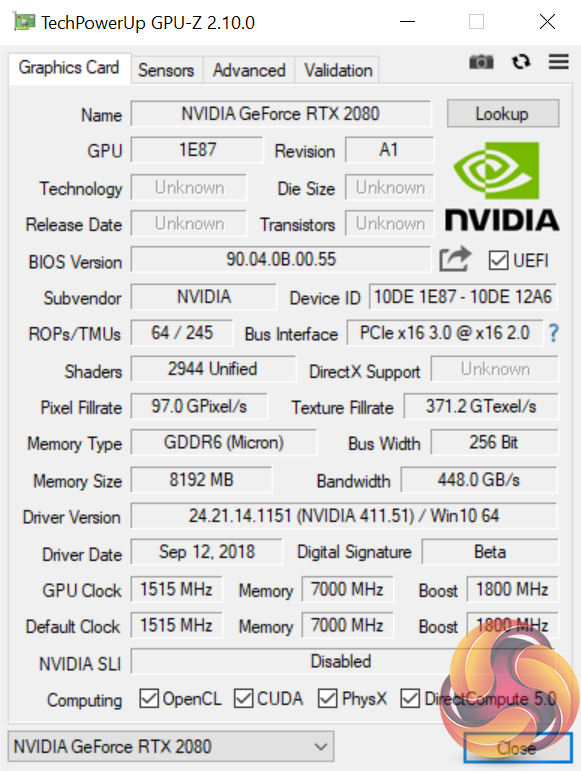

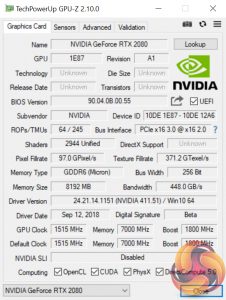

Note: GPU-Z has not yet been updated to show all of the RTX 20 series specs.

In terms of core specs, the Nvidia RTX 2080 sports 2944 CUDA cores with 368 Tensor Cores and 46 RT (ray tracing) cores. You may be unfamiliar with those last two features, but we address them in more detail on the next page. The chip itself is also built on a new 12nm process.

The RTX 2080 Founders Edition (FE) sports an aggressive clock speed out of the box, with a rated boost clock of 1800 MHz. GPU Boost technology is still present, now in its 4.0 iteration, and it will dynamically push this clock speed as high as conditions allow. We look at real-world clock speeds later in the review.

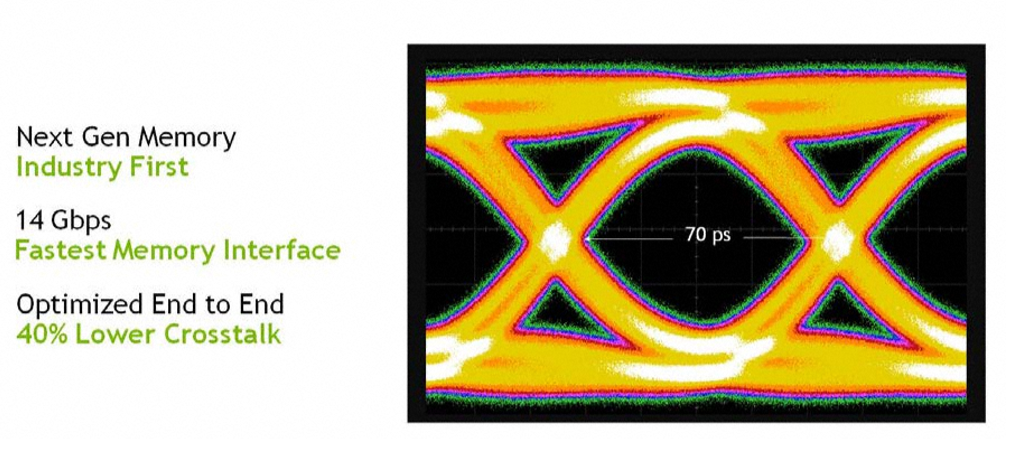

Lastly, the card comes with 8GB of memory in the form of new GDDR6 chips, rather than the GDDR5X memory of the previous generation. Its 7000 MHz clock equates to 14Gbps, and it operates over a 256-bit bus for a total memory bandwidth of 448 GB/s. That is a 40% increase over the GTX 1080.

Nvidia claims that the Turing architecture ‘represents the biggest architectural leap forward in over a decade', so here we detail what's new with Turing. We focus in particular on the technologies that will affect or improve your gaming experience.

Note: if you have come directly from our RTX 2080 Ti review, the information provided below is the same.

Turing GPUs

The first thing to note is that there are currently three Turing GPUs: TU102, TU104 and TU106. The RTX 2080 Ti uses the TU102 GPU, but it is not actually a full implementation of that chip. The full TU102, for instance, has more CUDA cores, RT cores and Tensor cores, and it even supports an extra GB of memory.

For the sake of reference, however, the explanation of the Turing architecture below is based on the full TU102 GPU. The architecture is the same on the TU104 (RTX 2080) and TU106 (RTX 2070) GPUs, which are essentially cut-back versions of TU102.

Turing Streaming Multiprocessor (SM) and what it means for today's games

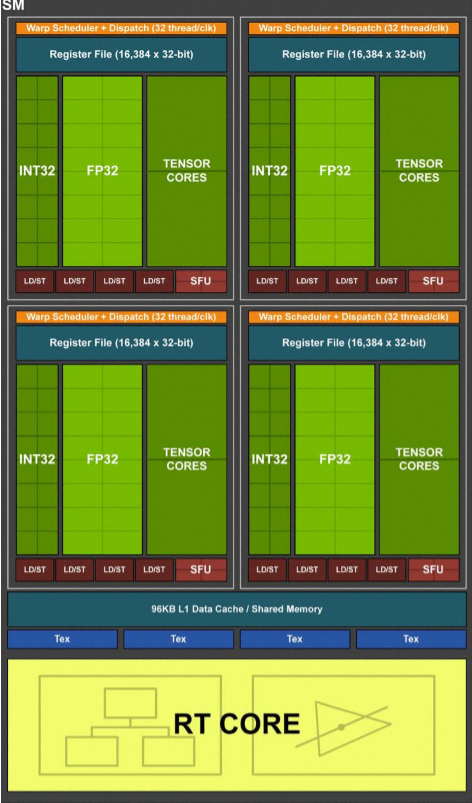

Compared to Pascal, the Turing SM sports quite a different design that builds on what we first saw with the Volta GV100 GPU. At a high level, Turing includes two SMs per Texture Processing Cluster (TPC), with each SM housing 64 FP32 cores and 64 INT32 cores. On top of that, a Turing SM also includes 8 Tensor Cores and one RT core.

Pascal, on the other hand, has just one SM per TPC, with 128 FP32 cores in each SM, and of course no Tensor or RT cores.
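Every unit type scales with the SM count, so the per-SM layouts described above account for most of the spec table. The sketch below multiplies them out. This review does not state the per-SM texture unit counts (four for Turing, eight for Pascal). They are our assumption, but they are consistent with the table's Texture Units row.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SMLayout:
    fp32_per_sm: int     # CUDA cores per SM
    tensor_per_sm: int
    rt_per_sm: int
    texture_per_sm: int  # assumption: not stated in the review, but matches the spec table


TURING = SMLayout(fp32_per_sm=64, tensor_per_sm=8, rt_per_sm=1, texture_per_sm=4)
PASCAL = SMLayout(fp32_per_sm=128, tensor_per_sm=0, rt_per_sm=0, texture_per_sm=8)


def unit_counts(sm_count: int, layout: SMLayout) -> dict:
    # Whole-GPU totals are simply the per-SM resources times the number of SMs.
    return {
        "cuda": sm_count * layout.fp32_per_sm,
        "tensor": sm_count * layout.tensor_per_sm,
        "rt": sm_count * layout.rt_per_sm,
        "texture": sm_count * layout.texture_per_sm,
    }


for name, sms, layout in [
    ("RTX 2080 Ti", 68, TURING),
    ("GTX 1080 Ti", 28, PASCAL),
    ("RTX 2080", 46, TURING),
    ("GTX 1080", 20, PASCAL),
]:
    print(name, unit_counts(sms, layout))
```

For the RTX 2080, 46 SMs give 2944 CUDA cores, 368 Tensor Cores, 46 RT cores and 184 texture units, matching the table.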

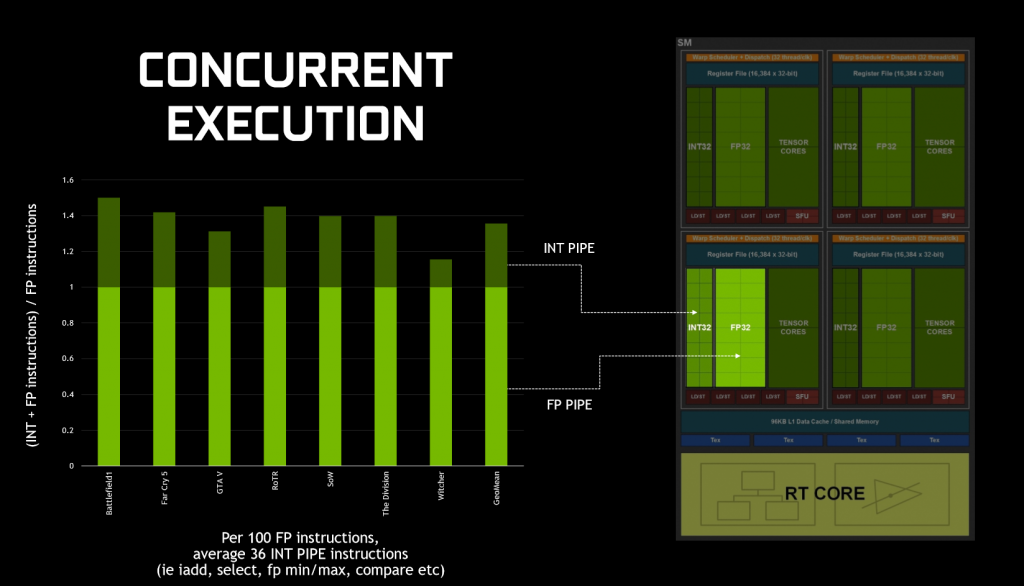

What makes Turing significant for today's games is how it executes instructions across its datapaths. In games, GPUs typically execute floating point (FP) arithmetic instructions alongside simpler integer instructions. To illustrate this, Nvidia claims that we ‘see about 36 additional integer pipe instructions for every 100 floating point instructions'. This figure will vary depending on which application or game you run.

Previous architectures, like Pascal, could not run FP and integer instructions simultaneously: whenever integer instructions were being executed, the FP datapath had to sit idle. As we've already mentioned, however, each Turing SM includes separate FP32 and INT32 cores. This means the GPU can execute integer instructions alongside (in parallel with) the FP maths.

In a nutshell, this should mean noticeably better gaming performance. FP and integer instructions can now be executed in parallel, whereas previously integer instructions stalled FP execution.
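A deliberately simplified model shows why concurrent execution matters. The sketch below makes two assumptions, neither of which holds for a real GPU: each datapath executes one instruction per cycle, and nothing else causes stalls. It uses Nvidia's quoted mix of 36 integer instructions per 100 FP instructions. The result is an idealised upper bound on the benefit, not a prediction of in-game gains. The function name is hypothetical.

```python
def issue_cycles(fp_instr: int, int_instr: int, concurrent: bool) -> int:
    # Toy model: one instruction per datapath per cycle, no other stalls.
    if concurrent:
        # Turing-style: separate FP32 and INT32 datapaths work side by side,
        # so the longer of the two streams sets the time taken.
        return max(fp_instr, int_instr)
    # Pascal-style (as described above): integer work leaves the FP path idle,
    # so the two streams effectively run one after the other.
    return fp_instr + int_instr


serial = issue_cycles(100, 36, concurrent=False)      # 136 cycles
overlapped = issue_cycles(100, 36, concurrent=True)   # 100 cycles
print(f"Idealised speedup from overlap: {serial / overlapped:.2f}x")  # 1.36x
```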

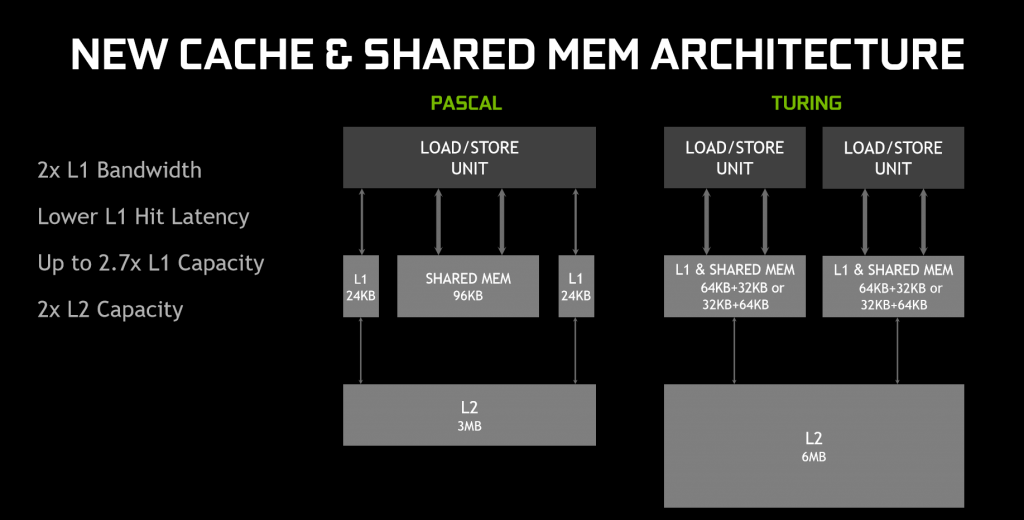

On top of that, Turing has also reworked the memory architecture. The L1 cache and shared memory are now unified, meaning the L1 cache can ‘leverage resources'. This leads to twice the bandwidth per Texture Processing Cluster (TPC) compared to Pascal. The split can even be reconfigured when shared memory is not using its full capacity. Each SM's 96KB of unified memory can be set up as 64KB of L1 cache with 32KB of shared memory, or as 32KB of L1 cache with 64KB of shared memory.
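As a rough illustration of the reconfigurable split, the hypothetical helper below chooses between the two 96KB configurations. It prefers the larger L1 cache whenever the requested shared memory still fits. In practice Nvidia's driver and runtime make this decision, not code like this. The function name and selection policy are assumptions for illustration only.

```python
UNIFIED_KB = 96
# (L1 cache KB, shared memory KB), ordered from most L1 to least.
SPLITS = [(64, 32), (32, 64)]


def choose_split(shared_kb_needed: int) -> tuple:
    # Hypothetical policy: keep as much L1 cache as possible while still
    # covering the shared memory request.
    for l1_kb, shared_kb in SPLITS:
        if shared_kb >= shared_kb_needed:
            return l1_kb, shared_kb
    raise ValueError(f"{shared_kb_needed}KB exceeds the largest shared memory carveout")


print(choose_split(16))  # (64, 32): a small request keeps the larger L1
print(choose_split(48))  # (32, 64): a large request shrinks L1 to make room
```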

In sum, Nvidia claims the memory and parallel execution improvements equate to 50% better performance per CUDA core (versus Pascal). We document real-world performance gains later in this review.

GDDR6

Turing also introduces GDDR6 memory, the first time such VRAM has been used with a GPU. With GDDR6, all three Turing GPUs can deliver 14 Gbps signalling rates while also improving power efficiency by 20% over GDDR5X, which was used with the GTX 1080 and 1080 Ti. GDDR6 also required Nvidia to rework the memory sub-system. This brought multiple improvements, including a claimed 40% reduction in signal crosstalk.

Alongside this, Turing builds on Pascal's memory compression algorithms, which push effective memory bandwidth beyond what the move to GDDR6 brings on its own. Nvidia claims that this compression (or traffic reduction), combined with the speed of GDDR6, results in 50% higher effective bandwidth compared to Pascal.

NVLink

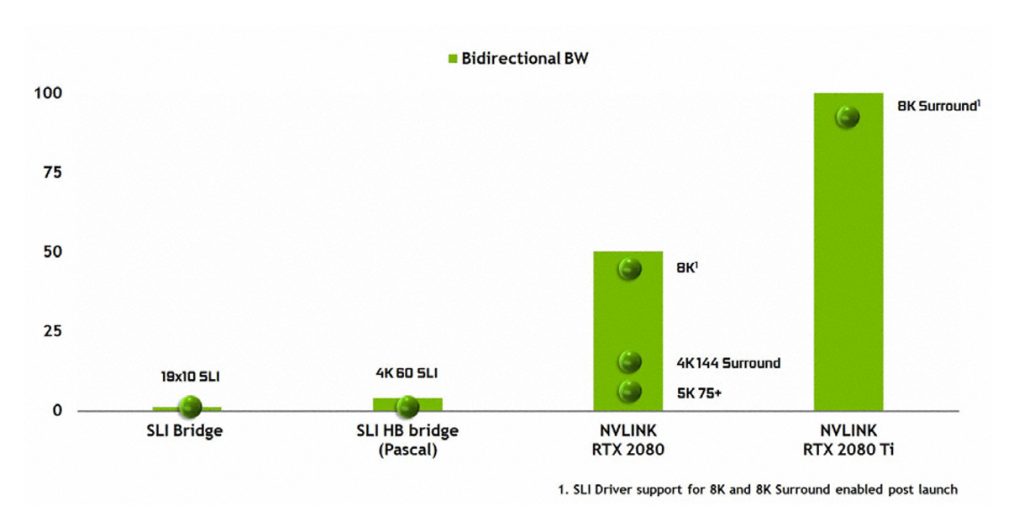

The last change to discuss here is NVLink. As we noted in our unboxing video, the traditional SLI finger has changed. Previous Nvidia GPUs used a Multiple Input/Output (MIO) interface for SLI. Turing (TU102 and TU104 only) instead uses NVLink, rather than the MIO and PCIe interfaces, for SLI GPU-to-GPU data transfers.

TU102 provides two x8 second-gen NVLinks, while TU104 provides just one. Each link provides 50GB/sec bidirectional bandwidth (or 25GB/sec per direction), so TU102 will provide up to 100GB/sec bidirectionally.
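The NVLink figures are simple multiplication. The sketch below assumes the per-link figure quoted above (25GB/sec in each direction per link). The function name is our own.

```python
def nvlink_bandwidth(links: int, gbs_per_direction: float = 25.0) -> dict:
    # Each link carries the same bandwidth in both directions at once.
    per_direction = links * gbs_per_direction
    return {"per_direction": per_direction, "bidirectional": 2 * per_direction}


print("TU102:", nvlink_bandwidth(2))  # 50GB/sec each way, 100GB/sec bidirectional
print("TU104:", nvlink_bandwidth(1))  # 25GB/sec each way, 50GB/sec bidirectional
```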

Nvidia did not provide an exact bandwidth figure for SLI with Pascal GPUs, but the graph above clearly shows NVLink providing a huge boost to bandwidth. It will be fascinating to test this with games in the near future.

Do remember, though, that NVLink is only supported with TU102 and TU104 – RTX 2080 Ti and RTX 2080 – so RTX 2070 buyers will not be able to take advantage of this technology.

Ray tracing and RT cores

When the GeForce 20 series was announced, almost the entire presentation was dedicated to ray tracing. How do the new GPUs do what even the GTX 1080 Ti cannot?

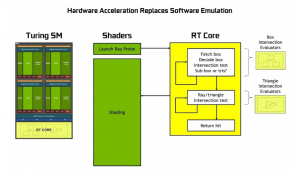

The secret lies with the new RT (ray tracing) cores. As mentioned, each SM features one RT core – RTX 2080 Ti has 68, and RTX 2080 has 46. These cores work together with denoising techniques, Bounding Volume Hierarchy (BVH) and compatible APIs (DXR and even Vulkan) to achieve real-time ray tracing on each Turing GPU.

As Nvidia puts it, ‘RT Cores traverse the BVH autonomously, and by accelerating traversal and ray/triangle intersection tests, they offload the SM, allowing it to handle other vertex, pixel, and compute shading work. Functions such as BVH building and refitting are handled by the driver, and ray generation and shading is managed by the application through new types of shaders.'

Without dedicated ray tracing hardware (i.e. on pre-Turing GPUs), each ray has to be traced in software using thousands of instruction slots. These test bounding boxes within the BVH structure until the ray either hits a triangle or is found to miss. The end result is that, without dedicated RT hardware, the work is too demanding to be done in real time. RT cores spare the SM from spending those thousands of instruction slots, significantly lessening its workload.

Each RT core is also made up of two units. One handles bounding box tests, while the other handles ray-triangle intersection tests. All the SM has to do is launch the ray probe. The RT core handles the rest of the work of determining whether there has been a hit, and returns that result to the SM.
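To give a sense of the work RT cores take off the SM, the sketch below performs the same two kinds of test in software. It runs a ray/box test at each BVH node and a ray/triangle test at the leaves. It uses the common slab test and Möller–Trumbore intersection algorithms. These are standard textbook techniques, and we are not suggesting they are exactly what Nvidia's hardware implements. The `Node` layout and the `trace` function are hypothetical.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]
Triangle = Tuple[Vec, Vec, Vec]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def ray_hits_box(origin: Vec, direction: Vec, lo: Vec, hi: Vec) -> bool:
    # Slab test ("box test"): narrow the ray's [t_near, t_far] interval axis by axis.
    t_near, t_far = 0.0, math.inf
    for o, d, a, b in zip(origin, direction, lo, hi):
        if d == 0.0:
            if o < a or o > b:  # parallel to this slab and outside it
                return False
            continue
        t1, t2 = (a - o) / d, (b - o) / d
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
    return t_near <= t_far


def ray_triangle_t(origin: Vec, direction: Vec, tri: Triangle, eps: float = 1e-9) -> Optional[float]:
    # Moller-Trumbore: solve for barycentric (u, v) and the distance t along the ray.
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle's plane
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv_det
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det
    return t if t > eps else None  # ignore hits behind the ray origin


@dataclass
class Node:
    lo: Vec
    hi: Vec
    children: List["Node"] = field(default_factory=list)
    triangles: List[Triangle] = field(default_factory=list)


def trace(root: Node, origin: Vec, direction: Vec) -> Optional[float]:
    # Iterative traversal: skip any subtree whose box the ray misses,
    # and keep the nearest triangle hit found so far.
    closest: Optional[float] = None
    stack = [root]
    while stack:
        node = stack.pop()
        if not ray_hits_box(origin, direction, node.lo, node.hi):
            continue
        for tri in node.triangles:
            t = ray_triangle_t(origin, direction, tri)
            if t is not None and (closest is None or t < closest):
                closest = t
        stack.extend(node.children)
    return closest


# A tiny two-level BVH: a root box holding one leaf in front of the ray and one off to the side.
near_leaf = Node(lo=(-1.0, -1.0, 5.0), hi=(1.0, 1.0, 5.0),
                 triangles=[((-1.0, -1.0, 5.0), (1.0, -1.0, 5.0), (0.0, 1.0, 5.0))])
far_leaf = Node(lo=(5.0, 5.0, 5.0), hi=(6.0, 6.0, 6.0),
                triangles=[((5.0, 5.0, 5.0), (6.0, 5.0, 5.0), (5.0, 6.0, 5.0))])
root = Node(lo=(-10.0, -10.0, -10.0), hi=(10.0, 10.0, 10.0), children=[near_leaf, far_leaf])

print(trace(root, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)))   # 5.0: hits the near triangle
print(trace(root, (0.0, 0.0, 0.0), (0.0, 0.0, -1.0)))  # None: pointing away from everything
```

The box test on `far_leaf` rejects that whole subtree before any triangle is tested. On a full scene with millions of triangles, that pruning is what keeps the work manageable. This loop is also the work that, on Turing, the RT core carries out in hardware instead of the SM running it as shader instructions.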

The end result, according to Nvidia, is a GPU that can ray trace up to 10 times faster than Pascal. The evidence is the number of Giga Rays per second each card can calculate: 1.1 Giga Rays per second for the GTX 1080 Ti, but 10 Giga Rays per second for the RTX 2080 Ti, a roughly ninefold difference.

Ray tracing and gaming

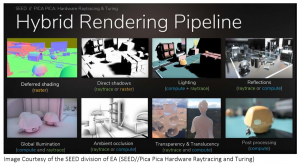

Turning now to gaming, the RT cores should allow real-time ray tracing to be built into games. Nvidia isn't yet pushing fully ray-traced games, where all lighting is the result of ray tracing. Instead, the company is pushing hybrid rendering. This combines ray tracing with rasterisation, the rendering technique games currently use.

This can be demonstrated with Shadow of the Tomb Raider, a game that was demoed at the RTX launch event in Germany. This game will use the hybrid method, utilising ray tracing for the portrayal of shadows in-game. While we can't test any fully ray traced games at this point – because they don't exist, and we still need DXR – using ray tracing exclusively is almost certainly going to be too demanding, even for Turing GPUs.

So we expect to see developers pick and choose how ray tracing is used within games. Nvidia says it should be used where there is ‘most visual benefit', like when ‘rendering reflections, refractions, and shadows'.

At the moment, games that will support ray tracing include:

- Assetto Corsa Competizione from Kunos Simulazioni / 505 Games

- Atomic Heart from Mundfish

- Battlefield V from EA / DICE

- Control from Remedy Entertainment / 505 Games

- Enlisted from Gaijin Entertainment / Darkflow Software

- MechWarrior 5: Mercenaries from Piranha Games

- Metro Exodus from 4A Games

- Shadow of the Tomb Raider from Square Enix / Eidos-Montréal / Crystal Dynamics / Nixxes

- Justice (Ni Shui Han) from NetEase

- JX3 from Kingsoft

Tensor Cores and DLSS

The Turing architecture also houses Tensor Cores, another feature first introduced with the Volta GV100. Turing's Tensor Cores add ‘INT8 and INT4 precision modes for inferencing workloads that can tolerate quantization.'

What the Tensor Cores do, though, is harness the power of deep learning for the purposes of gaming. They do this by accelerating certain aspects of Nvidia's NGX Neural Services in order to improve graphics, visual fidelity and rendering. For gaming this is primarily achieved via Deep Learning Super Sampling, or DLSS.

DLSS is a new method of anti-aliasing that aims to provide similar visual fidelity to TAA (temporal anti-aliasing) at a significantly lower performance cost. TAA renders at your set resolution. DLSS instead renders with a lower input sample count and then infers (upscales) the result to your set resolution. Nvidia claims this gives similar visual fidelity with half of the shading work.

Obviously that sounds great, but how is it achieved? That's where we circle back to the deep learning element of the Tensor Cores. Nvidia says training is the key. During training, the DLSS network is asked to match thousands of high-quality reference images rendered with 64x supersampling. Through an iterative training process using ‘back propagation', the network eventually learns to produce results that resemble the quality of the 64x supersampled images, without the blurring that TAA can introduce.
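The training idea can be illustrated at toy scale. The sketch below is not DLSS. Instead of a deep network working on 2D frames, it fits a three-tap 1D filter. The goal is for a one-sample-per-pixel rendering of a hard-edged "scene" to match a 64-samples-per-pixel reference. The fitting uses gradient descent, with the error gradient propagated back to each weight (the single-layer case of back propagation). The scene, filter size, learning rate and step count are all hypothetical choices.

```python
import math

PIXELS = 64


def scene(x: float) -> float:
    # Hypothetical hard-edged 1D "scene": alternating bright and dark bands.
    return 1.0 if math.sin(0.9 * x) > 0 else 0.0


def render(samples_per_pixel: int) -> list:
    # Average evenly spaced samples inside each pixel (a simple box filter).
    return [
        sum(scene(p + (s + 0.5) / samples_per_pixel) for s in range(samples_per_pixel)) / samples_per_pixel
        for p in range(PIXELS)
    ]


aliased = render(1)      # cheap input: one sample per pixel, hard jagged edges
reference = render(64)   # training target: 64 samples per pixel


def tap(img: list, i: int) -> float:
    # Read a pixel, clamping at the image edges.
    return img[min(max(i, 0), len(img) - 1)]


def apply(weights: list, img: list) -> list:
    # Three-tap filter centred on each pixel.
    return [sum(w * tap(img, i + k - 1) for k, w in enumerate(weights)) for i in range(len(img))]


def loss(weights: list) -> float:
    # Mean squared error against the supersampled reference.
    pred = apply(weights, aliased)
    return sum((p - r) ** 2 for p, r in zip(pred, reference)) / PIXELS


def train(steps: int = 500, lr: float = 0.2) -> list:
    weights = [0.0, 1.0, 0.0]  # start as a pass-through filter
    for _ in range(steps):
        pred = apply(weights, aliased)
        grads = [0.0, 0.0, 0.0]
        for i in range(PIXELS):
            # Chain rule: d(loss)/d(pred_i) times d(pred_i)/d(weight_k).
            err = 2.0 * (pred[i] - reference[i]) / PIXELS
            for k in range(3):
                grads[k] += err * tap(aliased, i + k - 1)
        weights = [w - lr * g for w, g in zip(weights, grads)]
    return weights


trained = train()
print(f"MSE before: {loss([0.0, 1.0, 0.0]):.4f}, after: {loss(trained):.4f}")
```

The learned filter softens the jagged edges so that they look more like the supersampled target. This is the same principle of learning from a high-quality reference, in a vastly simplified form.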

In a nutshell – Turing GPUs only need half the amount of samples (compared to TAA, for instance) for rendering and instead use AI and their Tensor Cores to provide the missing information and create the final image.

Now, because the network needs to be trained on each game's scenes, games do need to specifically support DLSS, although Nvidia claims it is ‘an easy integration' for game devs. We currently have a list of 25 games that will support DLSS upon release:

- Ark: Survival Evolved from Studio Wildcard

- Atomic Heart from Mundfish

- Dauntless from Phoenix Labs

- Final Fantasy XV from Square Enix

- Fractured Lands from Unbroken Studios

- Hitman 2 from IO Interactive/Warner Bros.

- Islands of Nyne: Battle Royale from Define Human Studios

- Justice (Ni Shui Han) from NetEase

- JX3 from Kingsoft

- MechWarrior 5: Mercenaries from Piranha Games

- PlayerUnknown’s Battlegrounds from PUBG Corp.

- Remnant: From the Ashes from Gunfire Games/Perfect World Entertainment

- Serious Sam 4: Planet Badass from Croteam/Devolver Digital

- Shadow of the Tomb Raider from Square Enix/Eidos-Montréal/Crystal Dynamics/Nixxes

- The Forge Arena from Freezing Raccoon Studios

- We Happy Few from Compulsion Games / Gearbox

- Darksiders 3 from Gunfire Games/THQ Nordic

- Deliver Us The Moon: Fortuna from KeokeN Interactive

- Fear the Wolves from Vostok Games / Focus Home Interactive

- Hellblade: Senua's Sacrifice from Ninja Theory

- KINETIK from Hero Machine Studios

- Outpost Zero from Symmetric Games / tinyBuild Games

- Overkill's The Walking Dead from Overkill Software / Starbreeze Studios

- SCUM from Gamepires / Devolver Digital

- Stormdivers from Housemarque

That does mean we can't test DLSS today with any current games, but we have been able to test a demo of FFXV provided early to press. More on that later in the review.

The Nvidia RTX 2080 Founders Edition card ships in a compact, rectangular box. RTX branding is prominent on the front of the box, while there is also the classic Nvidia green accenting.

Inside, the included accessories consist of: 1x support guide, 1x quick start guide, and 1x DisplayPort to DVI adapter. The display adapter is something we first saw included with the GTX 1080 Ti as Nvidia removed the DVI connector from that card.

That just leaves the card itself in the box. Both RTX 2080 and RTX 2080 Ti share the same cooler design, and I have to say I think it is a big improvement over previous reference cards, including the GTX 1080/1080 Ti Founders Edition cards.

Most obviously, it has ditched the single radial fan (blower-style fan) in favour of dual axial 13-blade fans. These measure 90mm and sit either side of a black aluminium section that is home to the RTX branding. The silver sections are also made of aluminium, and the card definitely feels very premium in the hand, weighing in at just under 1.3kg.

In terms of dimensions, it is relatively compact by today's standards: it measures 266.74mm long and 115.7mm high, and it is a dual-slot card.

The card is cooled by a full-length vapour chamber, and there is also an aluminium fin array visible from either side of the card. Air from the two fans is exhausted out of these sides and the back of the card. This does mean more hot air will be left in your case than with a previous-generation blower-style cooler, so bear that in mind.

New to the RTX 20 series is its iMON DrMOS power supply. With the RTX 2080, this is an 8-phase solution. A key feature of this iMON power supply design is its dynamic power management system, which can handle current monitoring and control at the sub-millisecond level. This gives it very tight control over the power flow to the GPU, allowing for improved power headroom, which should help with overclocking.

The other key feature of the iMON supply is its ability to dynamically use fewer power phases when not running under full load. This lets the power supply run at maximum efficiency when there is little load on the system, when your PC is idling, for instance.

As we mentioned, this is the first time we have seen a full-length vapour chamber on an Nvidia reference card (or on any card, for that matter). Its larger size brings the obvious benefit of greater heat dissipation. There is also an aluminium fin stack, which dissipates any remaining heat.

Looking at the card as it would be once installed in your case, the ‘GeForce RTX' branding is clearly visible and this lights up green once powered on. No RGB here.

As with previous Founders Editions, the RTX 2080 does sport a backplate, but it is now silver rather than black. I think it looks fantastic, and it should also help with heat dissipation. It is also made from aluminium, and has a subtle but interesting design with lines etched into the metal, which bend around the RTX 2080 branding in the middle of the backplate.

The RTX 2080 Founders Edition requires 1x 6-pin and 1x 8-pin power connectors. Interestingly, the 6-pin connector looks like it is just an 8-pin plug with two of its holes blocked off, which suggests Nvidia is re-using as much of the design from the RTX 2080 Ti as it can.

Lastly, display outputs are provided by 3x DisplayPort 1.4a, 1x HDMI 2.0b and even 1x VirtualLink (USB-C) connector. This connector is aimed at next-gen virtual reality HMDs, which should only require a single cable to connect to your PC. You could also use the connector now if your monitor has a Type-C input.

Our newest GPU test procedure has been built with the intention of benchmarking high-end graphics cards. We test at 1920×1080 (1080p), 2560×1440 (1440p), and 3840×2160 (4K UHD) resolutions.

We try to test using the DX12 API if titles offer support. This gives us an insight into the graphics card performance hierarchy now and in the near future, when DX12 becomes more prevalent. After all, graphics cards this expensive may stay in a gamer’s system for a number of product generations/years before being upgraded.

We tested the RX Vega 64 and Vega 56 using the ‘Turbo' power mode in AMD’s WattMan software. This prioritises all-out performance over power efficiency, noise output, and lower thermals.

Driver Notes

- AMD graphics cards were benchmarked with the Adrenalin 18.9.1 driver.

- Nvidia graphics cards (apart from RTX 20 series cards) were benchmarked with the Nvidia 399.24 driver.

- RTX 20 series cards were benchmarked with the Nvidia 411.51 driver, supplied to press.

Test System

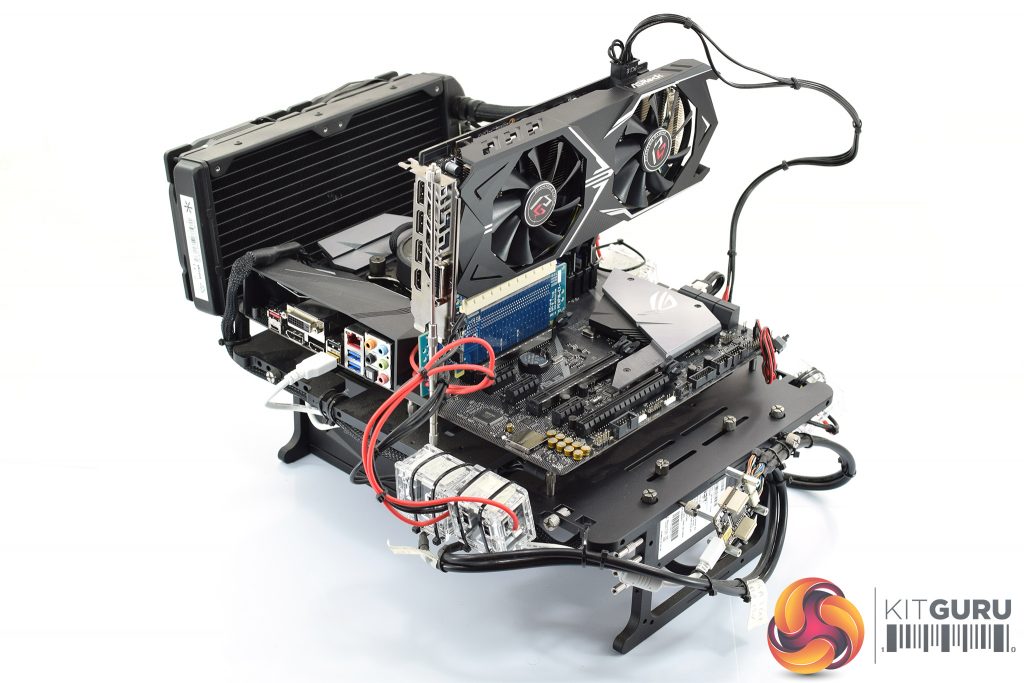

We test using the Overclockers UK Germanium pre-built system. You can read more about it over HERE. It is important to note we have had to re-house the components to an open-air test bench to accommodate our new GPU power testing (more on that later) but the core of the system is unchanged and the performance figures you see presented here are what you can expect from the Germanium.

| Component | Specification |
| --- | --- |
| CPU | Intel Core i7-8700K, overclocked to 4.8GHz |
| Motherboard | ASUS ROG Strix Z370-F Gaming |
| Memory | Team Group Dark Hawk RGB 16GB (2x8GB) @ 3200MHz 16-18-18-38 |
| Graphics Card | Varies |
| System Drive | Patriot Wildfire 240GB |
| Games Drive | Crucial M4 512GB |
| Chassis | Streacom ST-BC1 Bench |
| CPU Cooler | OCUK TechLabs 240mm AIO |
| Power Supply | SuperFlower Leadex II 850W 80Plus Gold |
| Operating System | Windows 10 Professional |

Comparison Graphics Cards List

- Nvidia RTX 2080 Ti Founders Edition (FE) 11GB

- Nvidia GTX 1080 Ti Founders Edition (FE) 11GB

- Gigabyte GTX 1080 G1 Gaming 8GB

- Palit GTX 1070 Ti Super Jetstream 8GB

- Nvidia GTX 1070 Founders Edition (FE) 8GB

- Nvidia GTX 1060 Founders Edition (FE) 6GB

- Gigabyte GTX 980 Ti XTREME Gaming 6GB

- AMD RX Vega 64 Air 8GB

- AMD RX Vega 56 8GB

- Sapphire RX 580 Nitro+ Limited Edition (LE) 8GB

- ASUS RX 570 ROG Strix Gaming OC 4GB

Software and Games List

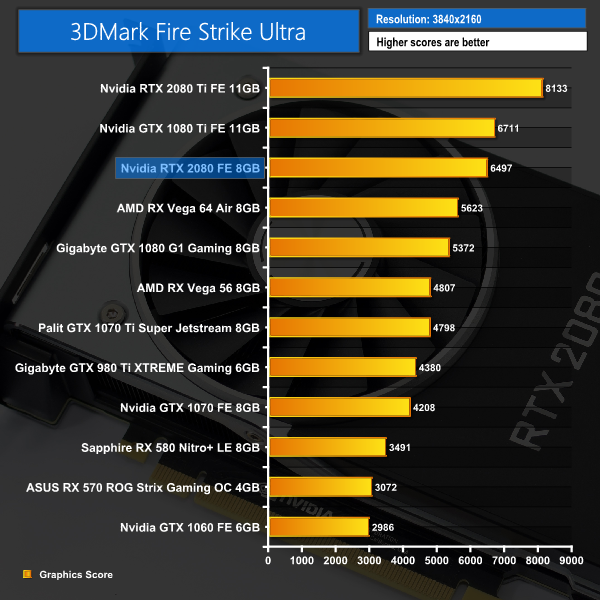

- 3DMark Fire Strike & Fire Strike Ultra (DX11 Synthetic)

- 3DMark Time Spy (DX12 Synthetic)

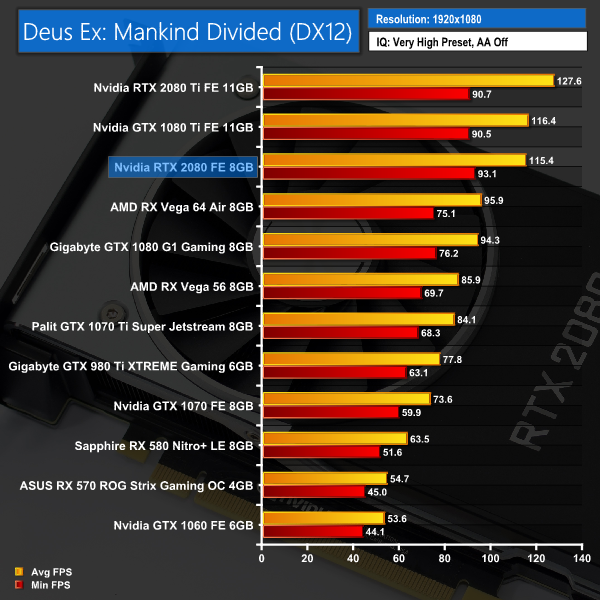

- Deus Ex: Mankind Divided (DX12)

- Far Cry 5 (DX11)

- Tom Clancy’s Ghost Recon: Wildlands (DX11)

- Middle Earth: Shadow of War (DX11)

- Shadow of the Tomb Raider (DX12)

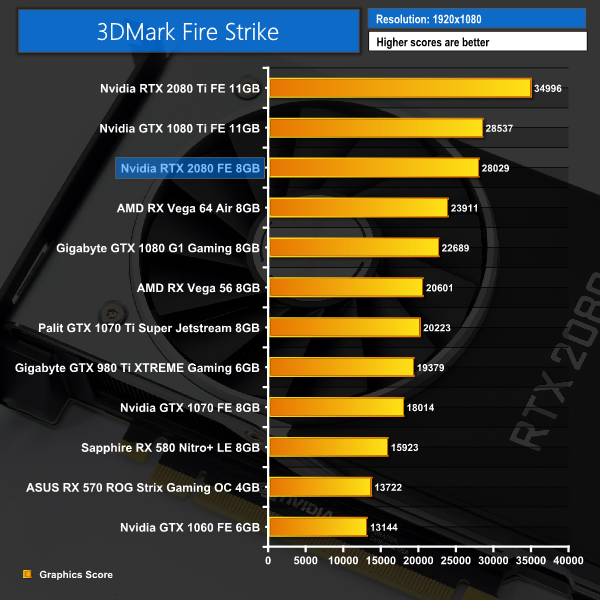

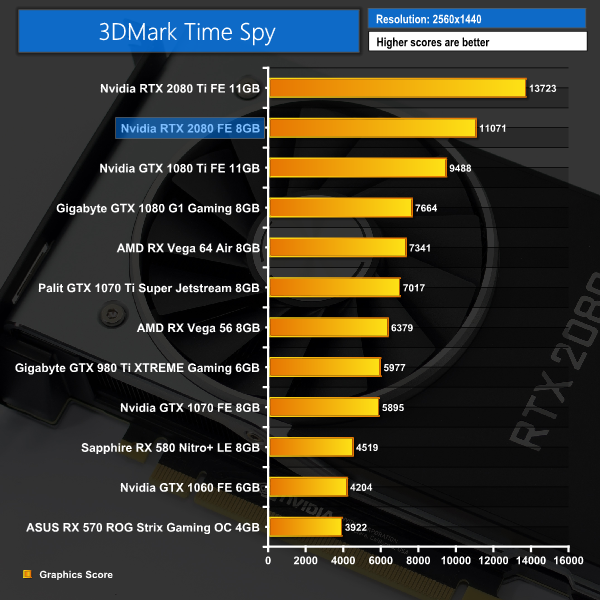

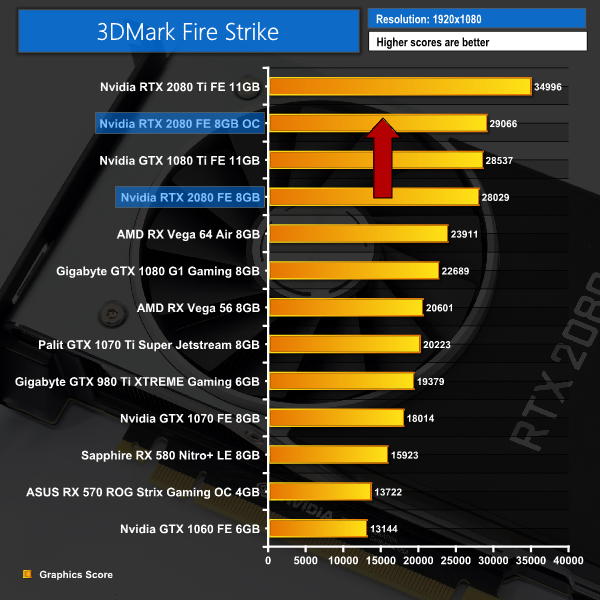

We run each benchmark/game three times, and present averages in our graphs.

3DMark Fire Strike is a showcase DirectX 11 benchmark designed for today’s high-performance gaming PCs. It is our [Futuremark’s] most ambitious and technical benchmark ever, featuring real-time graphics rendered with detail and complexity far beyond what is found in other benchmarks and games today.

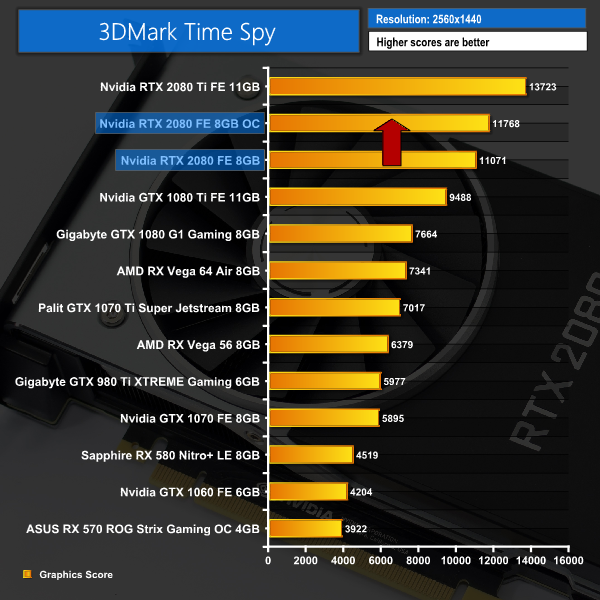

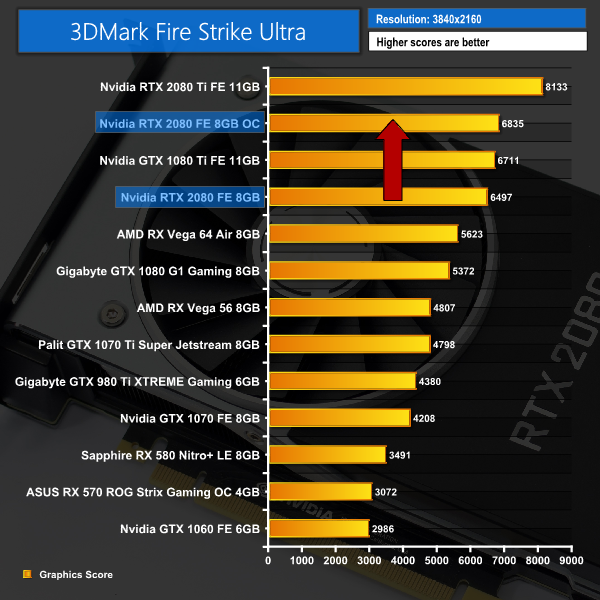

It's a fascinating start for the RTX 2080. It is barely 500 points behind the GTX 1080 Ti in Fire Strike, yet it storms ahead in the DX12 Time Spy benchmark, before again dropping just below the 1080 Ti in Fire Strike Ultra.

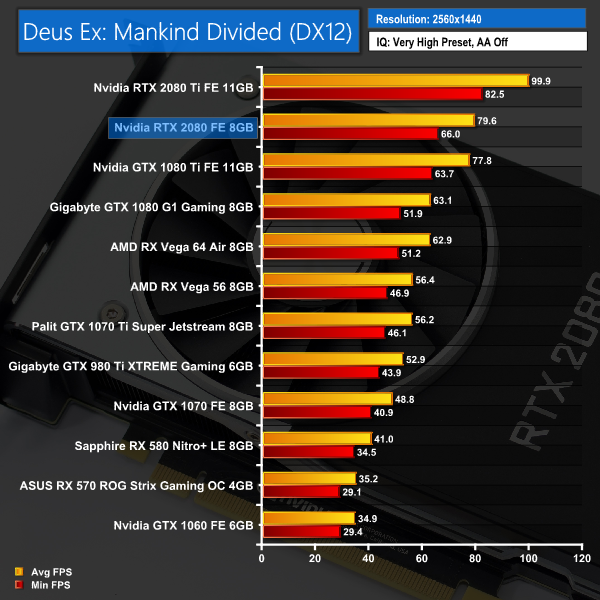

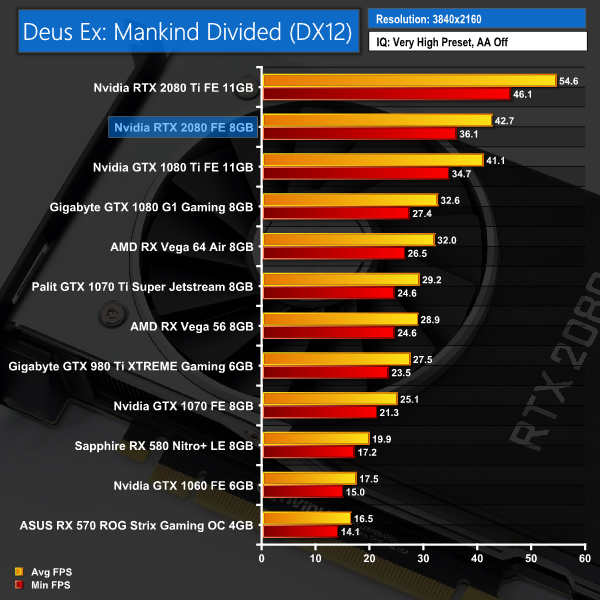

If these benchmarks are anything to go by, DX12 performance could be key for the RTX cards. Let's see if that trend continues.

Deus Ex: Mankind Divided is set in the year 2029, two years after the events of Human Revolution and the “Aug Incident”—an event in which mechanically augmented humans became uncontrollable and lethally violent. Unbeknownst to the public, the affected augmented received implanted technology designed to control them by the shadowy Illuminati, which is abused by a rogue member of the group to discredit augmentations completely. (Wikipedia).

We test using the Very High preset, with MSAA disabled. We use the DirectX 12 API.

There's very little in it while playing Deus Ex: Mankind Divided. The GTX 1080 Ti takes a 1FPS lead at 1080p, before dropping back at 1440p. The gap at 2160p (4K) is 1.6FPS.

Far Cry 5 is an action-adventure first-person shooter game developed by Ubisoft Montreal and Ubisoft Toronto and published by Ubisoft for Microsoft Windows, PlayStation 4 and Xbox One. It is the eleventh entry and the fifth main title in the Far Cry series, and was released on March 27, 2018.

The game takes place in the fictional Hope County, Montana, where charismatic preacher Joseph Seed and his cult Project at Eden’s Gate holds a dictatorial rule over the area. The story follows an unnamed junior deputy sheriff, who becomes trapped in Hope County and works alongside factions of a resistance to liberate the county from Eden’s Gate. (Wikipedia).

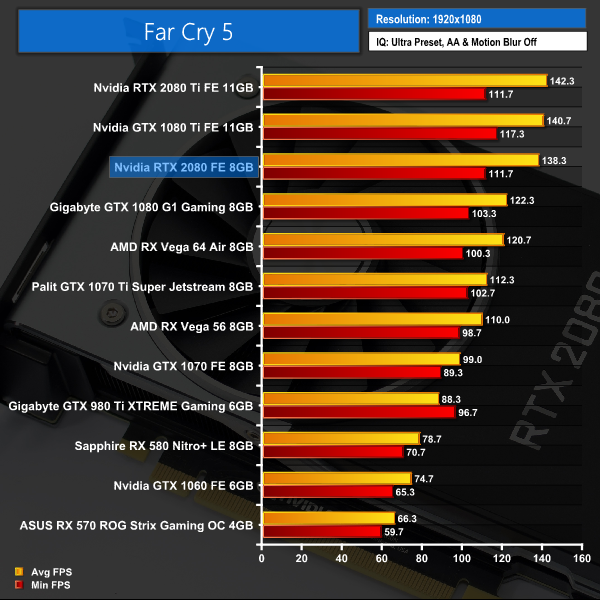

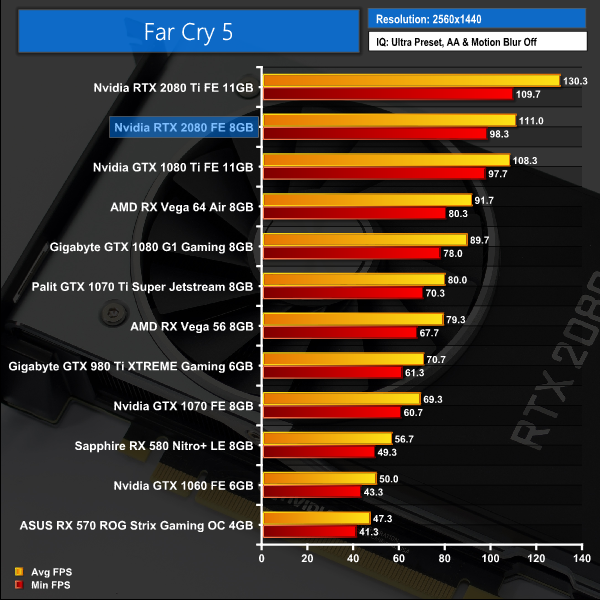

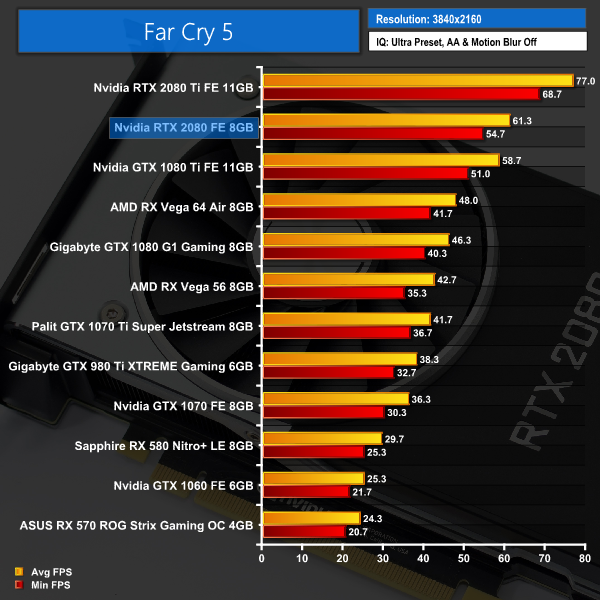

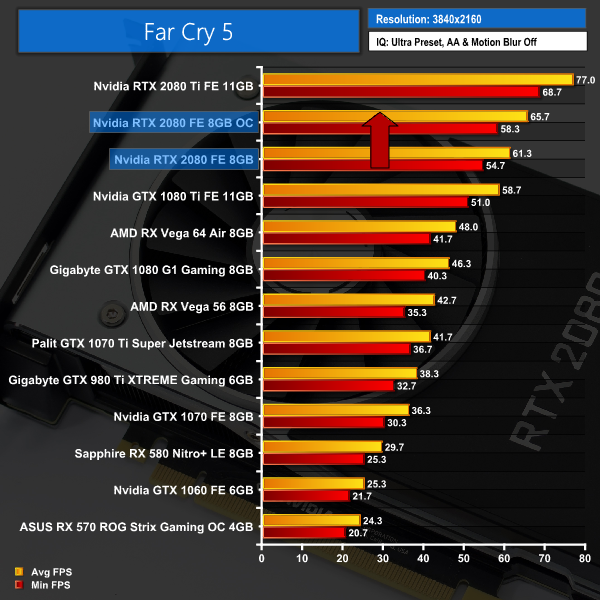

We test using the Ultra preset, with AA and motion blur disabled.

We can see clear signs of bottlenecking at 1080p when testing Far Cry 5 – the GTX 1080 Ti, RTX 2080 and RTX 2080 Ti all average well over 100FPS, yet they perform within just 4FPS of each other. The performance drop when going up to 1440p is also less than expected, which just goes to show – even with a hexacore i7-8700K, overclocked to 4.8GHz, you can still run into bottlenecking if you use one of the fastest cards on the market.
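One rough way to spot the pattern described above is to check how tightly very different GPUs cluster at a given resolution. The helper below is a hypothetical heuristic, not part of our test methodology. The 5% threshold is an arbitrary assumption. The example frame rates are illustrative values only, chosen to match "well over 100FPS, within 4FPS"; they are not our measured results.

```python
def likely_cpu_bound(fps_by_card: dict, max_spread: float = 0.05) -> bool:
    # If cards of very different GPU power finish within max_spread of the
    # fastest result, something other than the GPU is probably the limit.
    values = list(fps_by_card.values())
    fastest, slowest = max(values), min(values)
    return (fastest - slowest) / fastest <= max_spread


# Illustrative numbers only, not measured results.
example_1080p = {"GTX 1080 Ti": 120.0, "RTX 2080": 122.0, "RTX 2080 Ti": 124.0}
print(likely_cpu_bound(example_1080p))  # True
```

A second sign, also noted above, is a smaller-than-expected drop when moving up a resolution. A GPU-limited card should slow down roughly in step with the extra pixels it has to render.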

Still, at 1440p and 4K the RTX 2080 takes another small lead over the GTX 1080 Ti.

Tom Clancy’s Ghost Recon Wildlands is a tactical shooter video game developed by Ubisoft Paris and published by Ubisoft. It was released worldwide on March 7, 2017, for Microsoft Windows, PlayStation 4 and Xbox One, as the tenth installment in the Tom Clancy’s Ghost Recon franchise and is the first game in the Ghost Recon series to feature an open world environment. (Wikipedia).

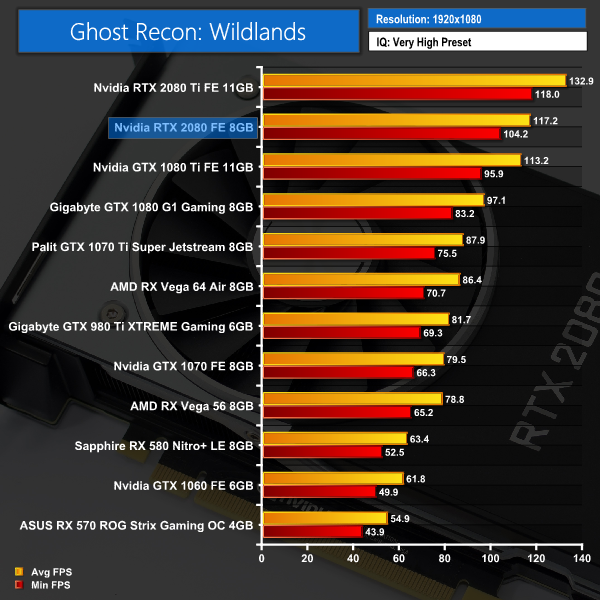

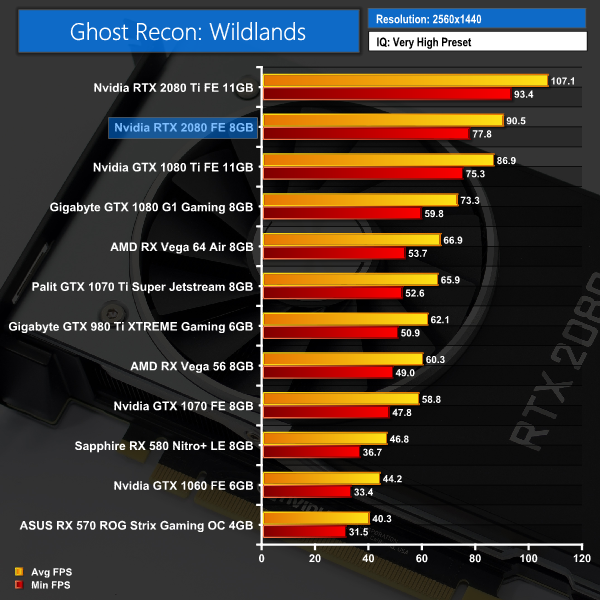

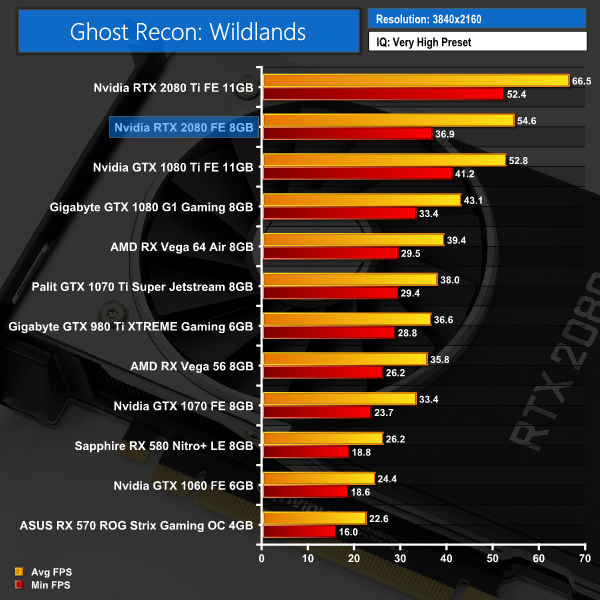

We test using the Very High preset.

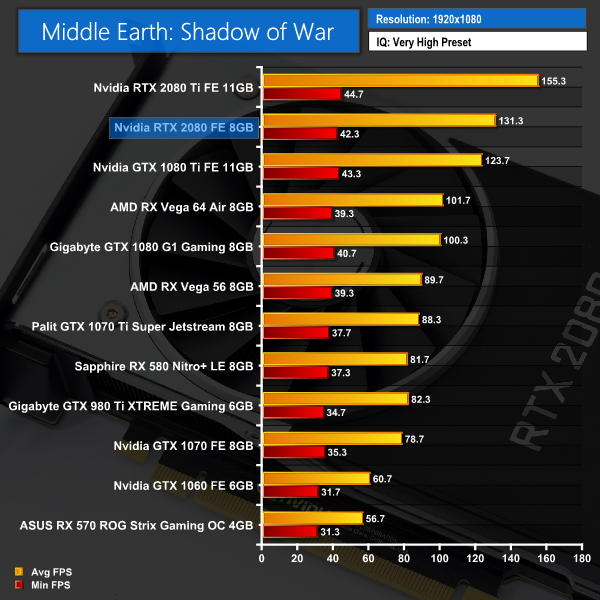

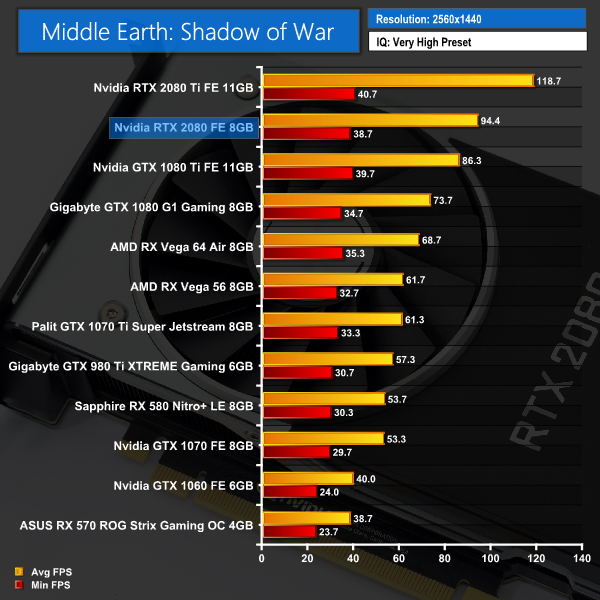

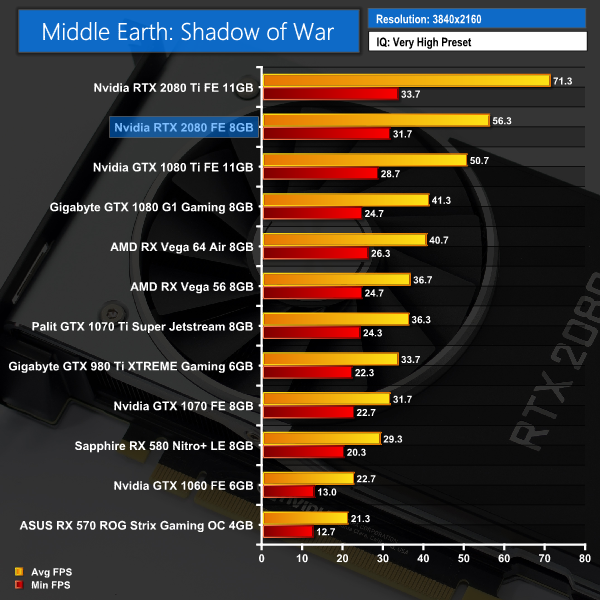

With Ghost Recon: Wildlands, the RTX 2080 proves faster than the GTX 1080 Ti at all resolutions, with the difference being 3.6FPS at 1440p, and 1.8FPS at 4K.

Middle-earth: Shadow of War is an action role-playing video game developed by Monolith Productions and published by Warner Bros. Interactive Entertainment. It is the sequel to 2014’s Middle-earth: Shadow of Mordor, and was released worldwide for Microsoft Windows, PlayStation 4, and Xbox One on October 10, 2017. (Wikipedia).

We test using the Very High preset.

Middle Earth: Shadow of War shows the biggest lead for the RTX 2080 over the GTX 1080 Ti so far. The gap is 5.6FPS even at 4K, while at 1440p the difference is 8.1FPS.
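Absolute FPS gaps are easier to compare across games as percentages. The sketch below converts a gap into the leading card's percentage advantage. It uses the Shadow of War 4K numbers from this review: the RTX 2080's 56.3FPS average and its 5.6FPS lead. The helper name is our own.

```python
def percent_lead(leader_fps: float, gap_fps: float) -> float:
    # The trailing card's average is the leader's average minus the gap.
    trailing_fps = leader_fps - gap_fps
    return 100 * gap_fps / trailing_fps


# Shadow of War at 4K: RTX 2080 at 56.3FPS, 5.6FPS ahead of the GTX 1080 Ti.
print(f"RTX 2080 lead: {percent_lead(56.3, 5.6):.1f}%")  # RTX 2080 lead: 11.0%
```

Dividing by the trailing card's figure, rather than the leader's, answers the question "how much faster is the RTX 2080?". Dividing by the leader's figure would instead give how much slower the GTX 1080 Ti is, a slightly smaller number.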

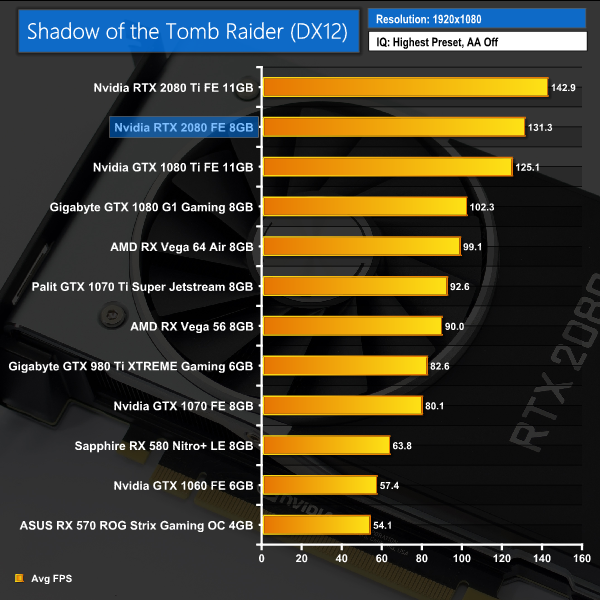

Taken on its own, the 4K average FPS figure of 56.3 is very respectable.

Shadow of the Tomb Raider is an action-adventure video game developed by Eidos Montréal in conjunction with Crystal Dynamics and published by Square Enix. It continues the narrative from the 2013 game Tomb Raider and its sequel Rise of the Tomb Raider, and is the twelfth mainline entry in the Tomb Raider series. The game released worldwide on 14 September 2018 for Microsoft Windows, PlayStation 4 and Xbox One. (Wikipedia).

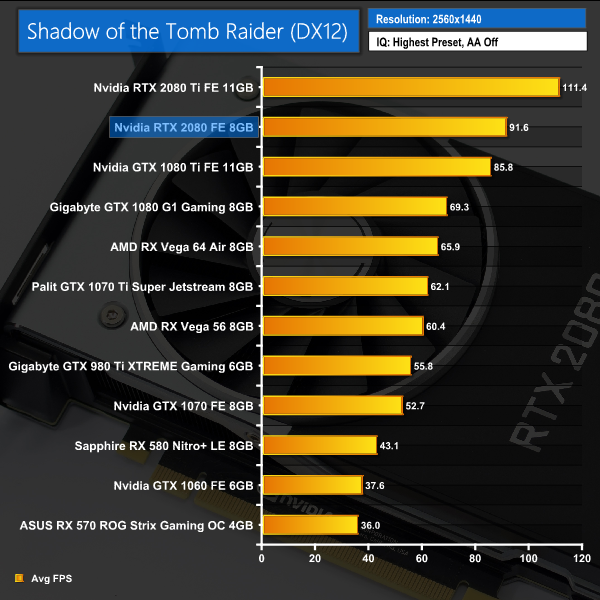

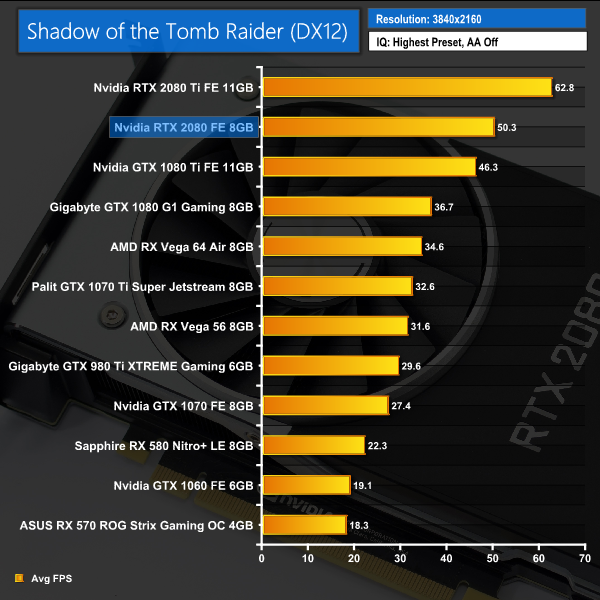

We test using the Highest preset, with AA disabled. We test using the DX12 API.

Our newest game to join the roster, Shadow of the Tomb Raider, shows a slim but healthy lead for the RTX 2080 over the GTX 1080 Ti. Its lead is exactly 4FPS at 4K, while the average figure of 50.3FPS at 4K is also impressive when taken on its own.

As mentioned at the beginning of this review, we are unable to test real-time ray tracing in games until the release of DXR in October. Nvidia did release one demo for the press to use when testing the ray tracing capabilities of the Turing GPUs. It's named ‘Reflections', and you will likely have seen it plenty of times: it's the short clip showing two stormtroopers mocking Captain Phasma. You can watch it on YouTube HERE.

This demo displays an FPS counter as it runs, but there is sadly no way to record average and minimum frame rates. The difference in frame rate between cards is clearly noticeable, though. In the absence of exact benchmark figures, we filmed a short video to show you how the ray tracing demo performs with different GPUs:

As we can observe from the video, here are the trends exhibited by our four test cards:

- GTX 1080: The slowest card, the GTX 1080 ran the demo at 6-8FPS, with one or two moments where it jumped to 10FPS but no higher. It also dipped to 5FPS during the elevator scene.

- GTX 1080 Ti: This card ran the demo at between 8-10FPS. It did occasionally peak above 10FPS, but also dipped as low as 6FPS during the elevator scene. It did not look very smooth.

- RTX 2080: A much better experience here, the RTX 2080 ran the demo between 43-48FPS, with some peaks into the mid 50s. It did drop down to 35FPS during the intensive elevator scene, but overall a smooth viewing experience.

- RTX 2080 Ti: Proving even better still, the RTX 2080 Ti ran the demo around the 55-60FPS mark, with peaks almost at 70FPS. It again dipped during the elevator scene, to 46FPS, but the trend is clear to see.

So, there we have it. There's no doubt that ray tracing works far better with Turing hardware than it does with Pascal. The GTX 1080 Ti looked like a slideshow when running the demo, whereas the RTX 2080 Ti was averaging close to the 60FPS mark based on the frame counter that I could see. Comparing the typical ranges above, the RTX 2080 (43-48FPS) is roughly four to six times as fast as the GTX 1080 Ti (8-10FPS) when it comes to ray tracing in this demo.
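The Reflections demo figures are ranges read off an on-screen counter, not recorded averages. The fairest comparison is therefore a range of ratios. The sketch below takes two ratios: the lowest typical RTX figure divided by the highest typical GTX 1080 Ti figure, and the highest RTX figure divided by the lowest GTX 1080 Ti figure. It uses the typical ranges listed for each card in this review and leaves out the brief peaks and dips. The function name is our own.

```python
def speedup_range(fast: tuple, slow: tuple) -> tuple:
    # fast and slow are (low, high) FPS ranges; returns (worst case, best case) ratio.
    return fast[0] / slow[1], fast[1] / slow[0]


# Typical ranges read off the demo's frame counter in this review.
rtx_2080 = (43, 48)
rtx_2080_ti = (55, 60)
gtx_1080_ti = (8, 10)

lo, hi = speedup_range(rtx_2080, gtx_1080_ti)
print(f"RTX 2080 vs GTX 1080 Ti: {lo:.1f}x to {hi:.1f}x")      # 4.3x to 6.0x
lo, hi = speedup_range(rtx_2080_ti, gtx_1080_ti)
print(f"RTX 2080 Ti vs GTX 1080 Ti: {lo:.1f}x to {hi:.1f}x")   # 5.5x to 7.5x
```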

Of course, this is only one demo, and it comes from Nvidia directly – they wouldn't give us something that made ray tracing look bad. Because of that, we can't conclusively say how the RTX 20 series is going to handle ray tracing when it comes to real-world game performance. At the same time, it would be foolish to dismiss the only benchmark for ray tracing performance that we currently have.

We will certainly know more when DXR is released next month and we can test actual games that use ray tracing. Still, for now – this demo does give a glimpse of the potential of the Turing architecture.

We are in a similar situation with Deep Learning Super Sampling (DLSS) as we are with ray tracing: no game on the market currently supports it. That should change soon, though, as games do not have to wait for a Microsoft update to use the new technology. It is a Turing-specific feature, however, so it won't be available to those with Pascal (or older) GPUs.

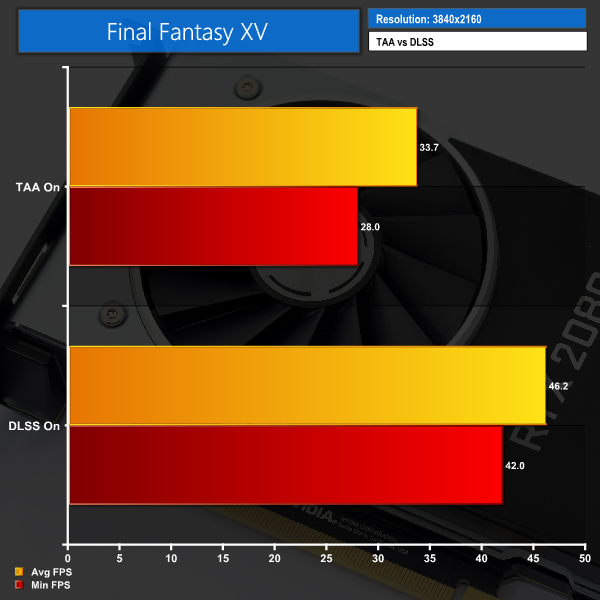

Nvidia supplied another demo for the purposes of this review, this time from Final Fantasy XV. We were able to run the demo at 4K with both TAA and DLSS anti-aliasing solutions. We used FRAPS to record minimum and average FPS figures over a 1-minute run.

In the FFXV demo, DLSS certainly seems to make a big difference. Average frame rate improves by a huge 37%, while minimum FPS jumps by a whole 50%. Again, it's only one demo, provided by Nvidia, but it does suggest DLSS could offer significantly better performance than TAA with anti-aliasing enabled. This is exciting.
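For readers who want to reproduce this kind of comparison, the sketch below works from a list of cumulative frame timestamps in milliseconds. It computes an average FPS and a per-second minimum FPS, then expresses a change between two runs as a percentage. We assume FRAPS-style output here: cumulative timestamps, with the minimum taken over whole one-second windows. The function names are hypothetical, and the example data is synthetic rather than our FFXV results.

```python
def fps_summary(timestamps_ms: list) -> tuple:
    # timestamps_ms: cumulative time of each frame, starting from the first frame.
    start = timestamps_ms[0]
    duration_s = (timestamps_ms[-1] - start) / 1000
    whole_seconds = int(duration_s)
    if whole_seconds < 1:
        raise ValueError("need at least one full second of frames")
    # Average FPS: completed frame intervals divided by the run length.
    average = (len(timestamps_ms) - 1) / duration_s
    # Minimum FPS: the fewest frames landing in any complete one-second window.
    counts = [0] * whole_seconds
    for t in timestamps_ms:
        second = int((t - start) // 1000)
        if second < whole_seconds:
            counts[second] += 1
    return average, min(counts)


def percent_gain(before: float, after: float) -> float:
    return 100 * (after - before) / before


# Synthetic run: 40FPS for the first second, then 100FPS for the second.
synthetic = [i * 25.0 for i in range(40)] + [1000.0 + i * 10.0 for i in range(101)]
print(fps_summary(synthetic))  # (70.0, 40)
```

Running `fps_summary` on a TAA log and on a DLSS log, then passing the two averages (or the two minimums) to `percent_gain`, gives improvement figures of the kind quoted above.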

So, that's the performance from the demo, what about visual fidelity?

TAA on, left, versus DLSS on, right

The above screenshots were taken directly from the FFXV demo running on our RTX 2080. On the left we have the demo running 4K TAA on, and on the right the demo is at 4K with DLSS on instead. From this big-picture view, it may not look like there is much between the two.

When we really crop in close, however, I think the difference is clear to see. TAA has noticeably more ‘jaggies', and there are also some artifacts around the blonde hair. DLSS does look a bit softer, but it is noticeably smoother and a better-looking image overall.

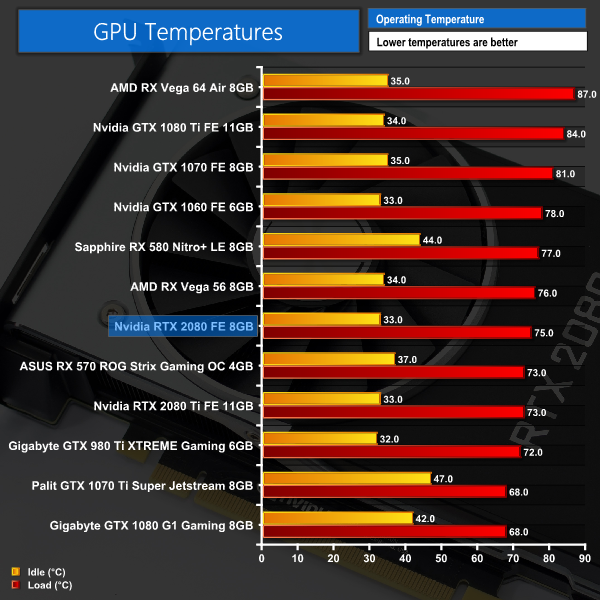

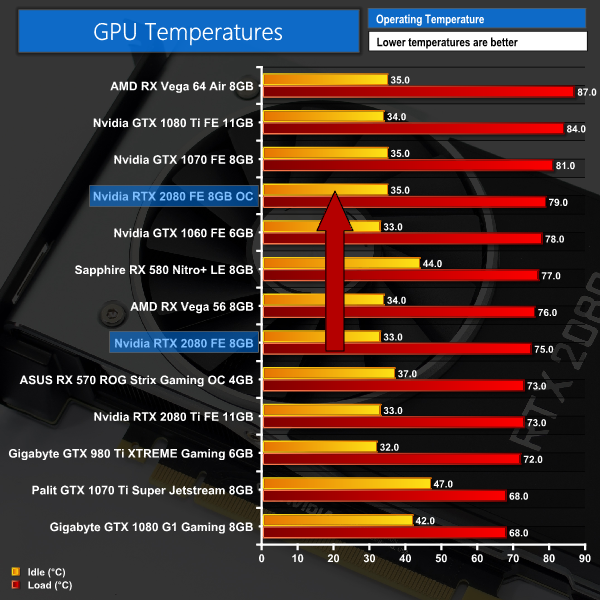

I look forward to testing this further in a real-world in-game environment. This demo has certainly given us some insight, but again – it is a single demo. We can't really judge DLSS on a single example just yet – but just like ray tracing, the potential is there to see for DLSS and Turing GPUs.

For our temperature testing, we measure the peak GPU core temperature under load, as well as the GPU temperature with the card idling on the desktop. A reading under load comes from running the 3DMark Fire Strike Ultra stress test 20 times. An idle reading comes after leaving the system on the Windows desktop for 30 minutes.

I have to say, the new Founders Edition card has impressed me greatly. The RTX 2080 has a maximum operating temperature of 88C, and considering previous Founders Edition cards would run as hot as they were allowed to, seeing it peak at just 75C on the GPU core is fantastic for a reference card. This really rivals the aftermarket solutions we are used to seeing from the likes of MSI, Gigabyte and ASUS.

It will be fascinating to see how cards with beefier cooling solutions perform. Indeed, I have already seen some cards revert to the older blower-style cooler, so it will be interesting to see how those perform as well.

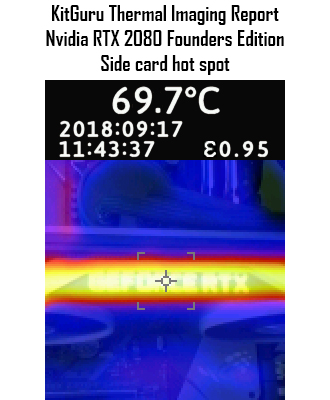

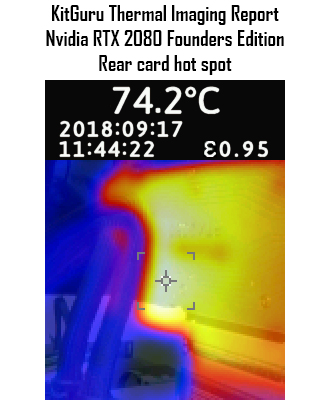

In terms of the thermal gun testing, there is no cause for concern. The hot spot on the side of the card is directly behind the GeForce logo, but at under 70C it is nothing to worry about.

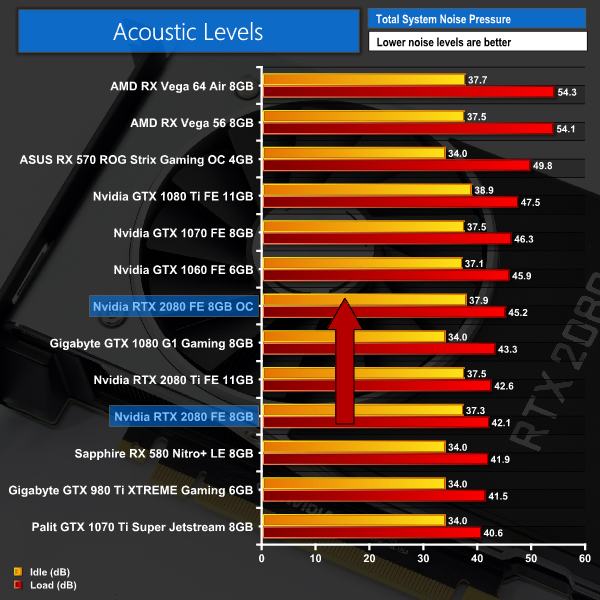

The area just behind the GPU core peaked at 74.2C, which is again a top result. The aluminium backplate is clearly doing a good job of spreading the heat across its surface area.

We take our noise measurements with the sound meter positioned 1 foot from the graphics card. I measured the noise floor at 34 dBA, so anything above this level can be attributed to the graphics card. The power supply runs passively across the entire power output range in which we tested every graphics card, and all CPU and system fans were disabled.

A reading under load comes from running the 3DMark Fire Strike Ultra stress test 20 times. An idle reading comes after leaving the system on the Windows desktop for 30 minutes.
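Decibel readings are logarithmic, so a card's own contribution is not simply "reading minus floor". The review treats anything above the 34 dBA floor as card noise; as an optional refinement that is not part of the method described here, the standard approach converts both levels to relative power, subtracts, and converts back. The 42.1 input below is the RTX 2080's peak reading quoted in the conclusion; the function name is hypothetical.

```python
import math


def card_only_dba(measured_dba, floor_dba):
    """Remove a steady background level from a reading by subtracting in the power domain."""
    if measured_dba <= floor_dba:
        raise ValueError("reading is not above the noise floor")
    measured_power = 10 ** (measured_dba / 10)  # relative sound power of the reading
    floor_power = 10 ** (floor_dba / 10)        # relative sound power of the room
    return 10 * math.log10(measured_power - floor_power)


print(round(card_only_dba(42.1, 34.0), 1))
```

With the reading about 8 dB above the floor, the correction is under 1 dB, so attributing everything above the floor to the card is a reasonable approximation at these levels.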

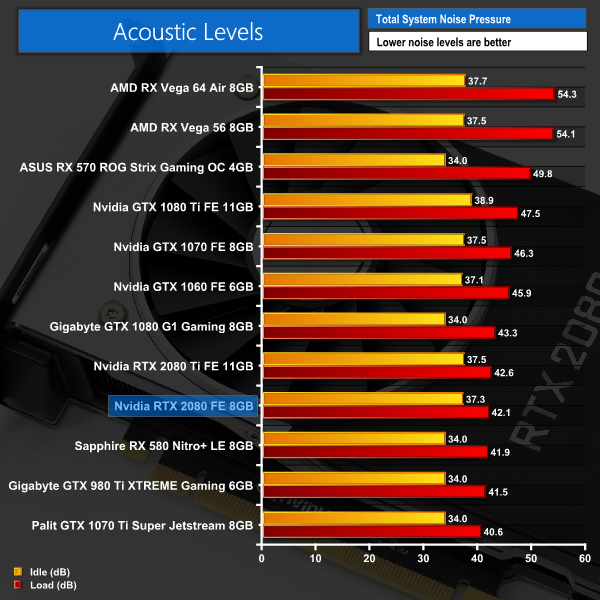

Following on from the impressive temperature testing, just look at the noise emissions of the Founders Edition RTX 2080! It's the quietest reference card we've ever tested, coming in even quieter than the triple-fan Gigabyte G1 Gaming GTX 1080, an aftermarket card. Nvidia's decision to ditch the blower-style fan in favour of a dual axial fan setup has clearly paid dividends; the stereotype of the noisy reference card is certainly over.

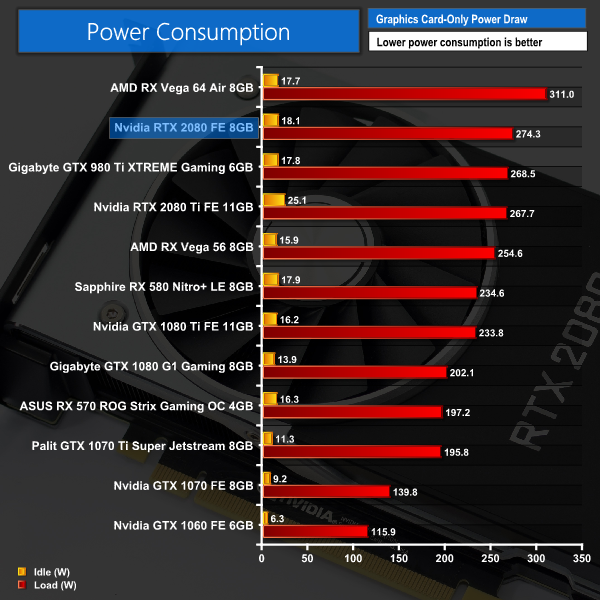

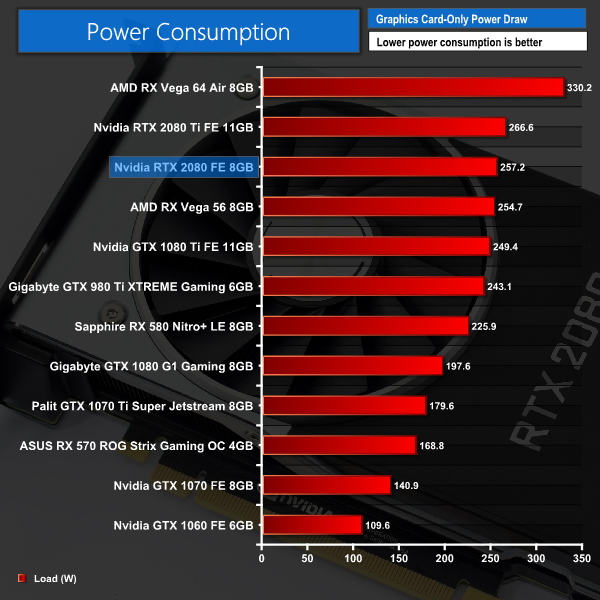

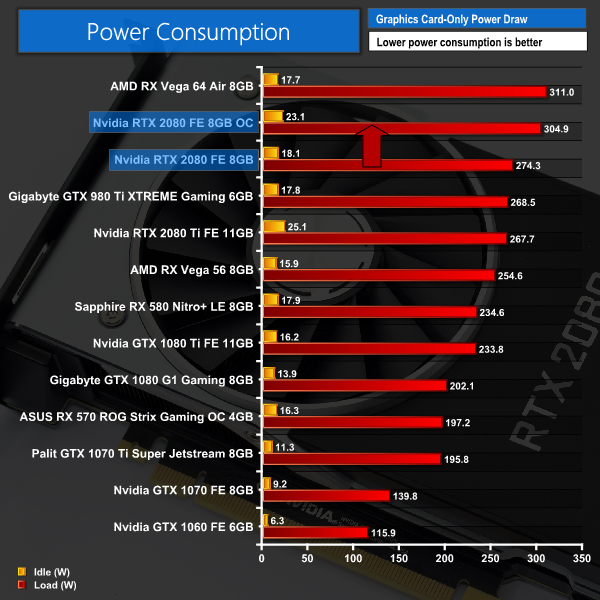

Things could be better, though, and I am talking about a fan-stop mode, where the fans stop spinning under light loads. With the RTX 2080, the fans spin no matter what. They are obviously a lot quieter at idle than when gaming, but I am certain these coolers could get away with stopping the fans in idle or low-load situations. It could even be something Nvidia adds later with a driver update.

We have recently revamped our GPU power consumption testing. Previously, we would measure the total system power draw with each graphics card installed. Given that the rest of the components did not change, this gave us an idea of the relative power consumption of each graphics card, but we could not be more specific than that.

Now, however, thanks to Cybenetics Labs and its Powenetics Project, we are able to measure the power consumption of the graphics card alone, giving much more precise and accurate data. Essentially, this works by installing sensors in the PCIe power cables, as well as in the PCIe slot itself via a special riser card. The data is recorded using specialist software provided by Cybenetics Labs, and because it polls multiple times a second (between 6 and 8 times a second, based on my observations), we can track power consumption in incredible detail over any given period.
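The principle behind card-only measurement is that every watt the card draws arrives through either the slot or a PCIe power cable, so summing voltage times current across all inputs gives board power at each polling instant. The Powenetics software's actual data format is not described here, so the rail names, the `RailSample` type and the readings below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RailSample:
    """One reading from one power input to the card (labels are hypothetical)."""
    rail: str
    volts: float
    amps: float


def board_power_w(readings):
    """Card-only power at one polling instant: the sum of V x I across every input.

    The riser covers the PCIe slot and sensors sit on each PCIe power cable,
    so summing all inputs accounts for everything the card draws.
    """
    return sum(r.volts * r.amps for r in readings)


# Made-up readings for a single polling instant, purely for illustration.
sample = [
    RailSample("slot 12V", 12.1, 4.0),
    RailSample("slot 3.3V", 3.3, 0.5),
    RailSample("cable A 12V", 12.0, 9.0),
    RailSample("cable B 12V", 12.0, 9.5),
]
print(round(board_power_w(sample), 2))
```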

As with previous testing, a reading under load comes from running the 3DMark Fire Strike Ultra stress test 20 times, and an idle reading comes after leaving the system on the Windows desktop for 30 minutes. The 20-run stress test produces approximately 4300 data entries in the Powenetics software, which we then export to an Excel file for further analysis. Here we present the average continuous power consumption of each graphics card across the entire 20-run test.
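How the spreadsheet analysis handles the uneven 6-8 readings per second isn't stated, so the time-weighted mean below is one reasonable approach, not the method used here: each reading is held until the next arrives, so stretches that happened to be sampled more often don't skew the average. The snippet also shows that roughly 4300 entries at that polling rate corresponds to about 9-12 minutes of testing. Function names are hypothetical.

```python
def run_duration_s(n_samples, polls_per_second):
    """Approximate test length implied by a sample count and polling rate."""
    return n_samples / polls_per_second


def time_weighted_mean_w(times_s, watts):
    """Average power where each reading is held until the next one arrives."""
    if len(times_s) != len(watts) or len(times_s) < 2:
        raise ValueError("need matching timestamps and readings, at least two of each")
    # Energy in joules: each reading multiplied by the interval it covers.
    energy_j = sum(w * (t1 - t0) for w, t0, t1 in zip(watts, times_s, times_s[1:]))
    return energy_j / (times_s[-1] - times_s[0])


# ~4300 entries at 8 and 6 polls per second, expressed in minutes.
print(run_duration_s(4300, 8) / 60, run_duration_s(4300, 6) / 60)
```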

Now, this is really fascinating – our power testing shows the RTX 2080 drawing more power than the RTX 2080 Ti while running Fire Strike Ultra. As a reminder, the RTX 2080 has a TDP of 225W, whereas the RTX 2080 Ti has a TDP of 260W – so we would expect the RTX 2080 to draw 35-40W less power under load. But it simply did not work out that way in our testing.

We have reached out to Nvidia about this, but at the time of publication neither party has found a clear explanation.
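To put numbers on the anomaly, the snippet below combines the stated TDPs with the Fire Strike Ultra averages reported in the conclusion (274W for the RTX 2080, 7W more than the RTX 2080 Ti, so 267W). Treating TDP as a proxy for expected draw is an assumption: it is a board power target, not a measurement.

```python
# TDPs as stated in the review; measured averages from the Fire Strike Ultra runs.
tdp_w = {"RTX 2080": 225, "RTX 2080 Ti": 260}
measured_w = {"RTX 2080": 274, "RTX 2080 Ti": 274 - 7}

expected_gap = tdp_w["RTX 2080 Ti"] - tdp_w["RTX 2080"]            # Ti expected to draw more
observed_gap = measured_w["RTX 2080 Ti"] - measured_w["RTX 2080"]  # negative: Ti drew less
discrepancy = expected_gap - observed_gap

for card in tdp_w:
    over_tdp_pct = (measured_w[card] / tdp_w[card] - 1) * 100
    print(f"{card}: {measured_w[card]}W measured, {over_tdp_pct:.1f}% above TDP")
print(f"expected gap {expected_gap}W, observed {observed_gap}W, off by {discrepancy}W")
```

Framed this way, the RTX 2080 Ti lands close to its TDP while the RTX 2080 sits roughly a fifth above its own, which is where the oddity lies.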

Shadow of the Tomb Raider Power Testing

We did delve further, wondering whether 3DMark might be to blame, although all the other results were as expected, so we couldn't see why that would be the case. Still, we wanted to try something else, so the figures you can see above are from running the Shadow of the Tomb Raider benchmark at 4K with the Highest preset.

Here, we do see the RTX 2080 drawing less power, but it is again higher than we would have expected. If any readers have ideas as to why this might be, please leave them below or over on Facebook; we would love to hear your opinions.

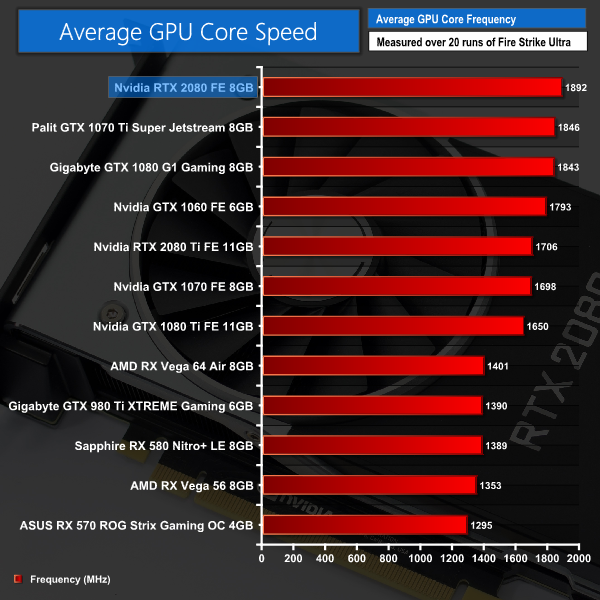

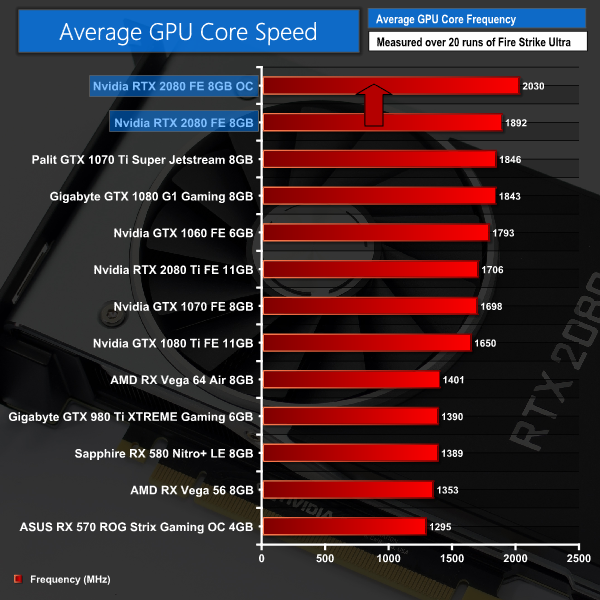

In any case, I don't think the power consumption is anything to worry about. 260-270W is a fair amount for a single graphics card to draw, but when you're spending this much money, the extra electricity cost is unlikely to bother you. Certainly, I am not worried that anything is wrong with our RTX 2080 sample.

Here we present the average clock speed for each graphics card while running the 3DMark Fire Strike Ultra stress test 20 times. We use GPU-Z in tandem with the Powenetics Project (see the previous page) to record the GPU core frequency during the Fire Strike Ultra runs, leaving us with around 4300 data entries at the end.

We calculate the average core frequency during the entire 20-run test to present here.
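GPU-Z can write its sensor readings to a log file. As a hedged sketch of the averaging step, the Python below reads a comma-separated log and averages one column. The column header is a hypothetical placeholder (check the header row of your own log), and fields are stripped of whitespace because sensor logs often pad them.

```python
import csv
import io
from statistics import fmean

CLOCK_COLUMN = "GPU Clock [MHz]"  # hypothetical header name; match it to your own log


def average_column(csv_text, column=CLOCK_COLUMN):
    """Mean of one numeric column in a CSV log, ignoring padding and blank cells."""
    values = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        cleaned = {
            key.strip(): (value or "").strip()
            for key, value in row.items()
            if key is not None  # surplus fields are grouped under a None key; skip them
        }
        cell = cleaned.get(column, "")
        if cell:
            values.append(float(cell))
    if not values:
        raise ValueError(f"no readings found in column {column!r}")
    return fmean(values)
```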

Given the RTX 2080 is built on the new Turing architecture, we cannot make any meaningful clock speed comparisons with previous-generation or AMD cards. Still, this data will prove more useful over the coming weeks and months when we test a variety of aftermarket RTX solutions, where the clock speeds will be directly comparable.

The first thing to note when discussing manual overclocking of the RTX cards is that Turing introduces a new version of GPU Boost: we are now at GPU Boost 4.0, whereas Pascal used GPU Boost 3.0.

The primary difference is that, where GPU Boost 3.0 dynamically adjusted core frequency based on temperature points that were locked away from the end user, GPU Boost 4.0 gives users control over these so-called inflection points.

As Nvidia explains:

The algorithms used with GPU Boost 3.0 were completely inside the driver, and were not exposed to users. However, GPU Boost 4.0 now exposes the algorithms to users so they can manually modify the various curves themselves to increase performance in the GPU. The biggest benefit is in the temp domain where new inflection points have been added. Where before it was a straight line that dropped directly down to the Base Clock, the clock now holds the Boost Clock where it can be set to run longer at higher temperatures before a second temp target (T2) is reached where it will drop the clocks.
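Nvidia has not published the GPU Boost algorithm, so the following is only a simplified model of the behaviour described in the quote: clock speed as a piecewise-linear function of temperature, defined by (temperature, clock) inflection points. The temperatures and clocks are illustrative values, not Turing's actual defaults, and the function name is hypothetical.

```python
from bisect import bisect_right


def clock_at(temp_c, points):
    """Clock (MHz) at a temperature, interpolating linearly between
    (temperature C, clock MHz) inflection points sorted by temperature."""
    temps = [t for t, _ in points]
    if temp_c <= temps[0]:
        return points[0][1]   # below the first point: full clock
    if temp_c >= temps[-1]:
        return points[-1][1]  # past the last point: floor clock
    i = bisect_right(temps, temp_c)
    (t0, c0), (t1, c1) = points[i - 1], points[i]
    return c0 + (c1 - c0) * (temp_c - t0) / (t1 - t0)


# Illustrative curves only (values are hypothetical).
BOOST, BASE = 1800, 1515
single_slope = [(60, BOOST), (88, BASE)]            # one straight line down to base
two_stage = [(60, BOOST), (80, BOOST), (88, BASE)]  # hold boost until a second target

for t in (70, 84):
    print(t, round(clock_at(t, single_slope)), round(clock_at(t, two_stage)))
```

In this model, dragging the inflection points to the right simply pushes the drop-off to higher temperatures, so the card holds its boost clock for longer.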

To do this, the press was supplied with a new version of EVGA's Precision software, named Precision X1. If you are trying to get the most from your graphics card, the easiest thing to do is drag all the inflection points (seen below in graph form) to the right-hand side, meaning the graphics core will stay uncapped until it hits its maximum temperature.

This software is still in beta, and I did encounter some bugs here and there. We will revisit manual overclocking in the future to see if a finalised software package helps things further.

But taking Precision X1 as is, what was the best overclock I could dial in?

Well, first of all I maximised both the power limit and temperature target sliders. I also set the voltage slider to its maximum level. Then, I was able to add +145MHz to the GPU core, and +400MHz to the memory.

3DMark and Games Testing

The gains are certainly there with this overclock applied – a 4.4FPS boost playing Far Cry 5 at 4K is not to be sniffed at. The Fire Strike score also rose by 1000 points.

On the next page, we assess the implications this has for power draw, thermals, acoustics and average clock speed.

Here, we take a further look at the impact of our overclock, examining the increased temperatures, acoustics and power draw, and lastly, the effect it had on average clock speed.

Temperatures

Acoustics

Power consumption

Average clock speed under load

Overview

As expected, GPU core temperature, noise levels and power consumption all rise as a result of our overclock. I would say, however, that the increased noise is not huge – it just makes the overclocked RTX 2080 Founders Edition sound like a stock-clocked Founders Edition from the previous generation! Peak GPU temperature only rose by 4C as well, keeping it below 80C despite the increased power limit and voltage.

Average GPU frequency hit over 2GHz, which is a fantastic result and one that will have overclockers rubbing their hands together with excitement. The average clock speed we achieved, 2030MHz, reflects almost the full +145MHz offset we dialled in, so I would call that a very good result.

Today we have published reviews of both the Nvidia RTX 2080 and RTX 2080 Ti. This particular review has been concerned with the former, however, and it is safe to say we have a lot of good things to say about the card.

First of all, the new Founders Edition card really is excellent. Not only is it built exceptionally well, but it also runs very cool, peaking at just 75C, something unheard of for an Nvidia-built card until now. This is undoubtedly thanks to the new dual axial fans, which replace the traditional blower-style (radial) fan seen on previous Nvidia reference cards, as well as the new full-length vapour chamber.

It is also the quietest Nvidia card we have tested to date, with a peak of just 42.1 dBA in our noise emission testing. To put that into perspective, it is the fourth quietest card on test today, meaning it is quieter than some aftermarket cards. Nvidia has well and truly stepped up its game with its Founders Edition. In fact, I expect many consumers who might otherwise have bought a cheaper aftermarket card will go for one of the new Turing Founders Edition cards, as they are that good.

We did, however, spot an irregularity in our power consumption testing, which showed the RTX 2080 drawing 274W under load, 7W more than the RTX 2080 Ti. We are still in discussions with Nvidia as to why this might be, but running Shadow of the Tomb Raider did show the RTX 2080 drawing marginally less power than the RTX 2080 Ti. In either case, it's not as power hungry as Vega 64, but neither is it as frugal as the GTX 1080 or GTX 1080 Ti.

The main thing is, of course, real-world performance, the one element Nvidia hardly addressed either during the launch of the RTX 20 series or in the weeks that followed. So how fast is the RTX 2080? In a nutshell, it is very close to the previous-generation GTX 1080 Ti. Looking at the average FPS difference across our tested games, the RTX 2080 is almost exactly 5% faster at 1440p and just over 6% faster at 4K.

The reason we are comparing the RTX 2080 to the GTX 1080 Ti is pricing. Using current prices from Nvidia, the GTX 1080 Ti can be had for £669, while the RTX 2080 costs £749. That makes the RTX 2080 almost 12% more expensive, while being only 5-6% faster.
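Putting the two figures together as a quick value check: the price premium is 749/669 - 1, or just under 12%, so a 5-6% speed advantage works out to roughly 5-6% less performance per pound than the GTX 1080 Ti at these prices. This sketch ignores overclocking headroom and any future value from ray tracing or DLSS.

```python
price_gbp = {"GTX 1080 Ti": 669, "RTX 2080": 749}

# Fractional price premium of the RTX 2080 over the GTX 1080 Ti.
premium = price_gbp["RTX 2080"] / price_gbp["GTX 1080 Ti"] - 1

for speedup in (0.05, 0.06):  # 1440p and 4K average leads from the review's testing
    perf_per_pound = (1 + speedup) / (1 + premium)  # relative to the GTX 1080 Ti
    print(f"{speedup:.0%} faster -> {perf_per_pound - 1:+.1%} performance per pound")
```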

You will see this performance gap widen when overclocking, however, as we found the RTX 2080 to be very amenable to a hefty overclock. Thanks in part to the extra control GPU Boost 4.0 gives end users, we were able to add +145MHz to the GPU core and +400MHz to the memory. This gave us a real-world operating frequency of 2030MHz that held steady under sustained load, resulting in an extra 7% performance when testing Far Cry 5 at 4K.
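As a rough consistency check between the two overclocking figures quoted (a 4.4FPS gain in Far Cry 5 at 4K, and about 7% more performance), the sketch below back-calculates the implied stock frame rate. It assumes the 7% is measured against the stock result and rounded to the nearest whole percent, so the answer is a range rather than a single number; the function name is hypothetical.

```python
def implied_baseline_fps(gain_fps, gain_pct, rounding_pct=0.5):
    """Stock FPS implied by an absolute gain and a rounded percentage gain.

    Returns (low, central, high): any true gain within +/- rounding_pct of the
    quoted percentage would round to it.
    """
    central = gain_fps / (gain_pct / 100)
    low = gain_fps / ((gain_pct + rounding_pct) / 100)
    high = gain_fps / ((gain_pct - rounding_pct) / 100)
    return low, central, high


low, central, high = implied_baseline_fps(4.4, 7)
print(f"stock ~{central:.1f}FPS (between {low:.1f} and {high:.1f})")
```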

The big talking point about these new cards, however, is of course Turing's ray tracing capability, with Deep Learning Super Sampling (DLSS) another new feature we have looked at. The problem is that until DirectX Raytracing (DXR) is released in October, we can't say just how good the ray tracing element is, and the same goes for DLSS: we need games to support it before we can give a proper verdict.

Still, we have been able to run the Stormtrooper ‘Reflections’ demo, which shows the potential for ray tracing with these cards: the GTX 1080 Ti could barely manage 8-10FPS, whereas the RTX 2080 ran at around 33-38FPS. It is important not to read too much into a single demo, and we will of course have to see what real-world gaming is like with ray tracing added in. Still, based on what we can see, the potential is clearly there for ray tracing in games to run well on these cards, and Turing is undoubtedly far superior to Pascal when it comes to ray tracing capabilities.

As for DLSS, this is a neural-network-based approach to anti-aliasing which not only provides similar (or, based on our testing, better) visual fidelity to Temporal Anti-Aliasing (TAA), but does so at a significantly lower performance penalty. Again, we could only test one demo, but with DLSS turned on instead of TAA, average frame rate rose by 37% when testing FFXV at 4K. A close crop of two screenshots comparing TAA and DLSS image quality also suggested that DLSS provided the better visuals.

Where do we go from here for Turing and ray tracing? Apart from one ray tracing demo, as of right now, the RTX 2080 may as well be called the GTX 2080 – we simply can't play games with ray tracing just yet. That does make it hard to offer concrete buying advice – Nvidia has sent us a product which is paving the way for real-time ray tracing at home, but we can't test it in any games that are currently available.

So, should you buy the RTX 2080? On one hand, GTX 1080 Ti owners may see little reason to upgrade – and we are of course still waiting to see concrete ray tracing performance from games you can go out and buy.

But on the other hand, if you were already in the market for a new high-end graphics card, Turing launch or not, then the RTX 2080 is the one to go for. That's because it is faster than the GTX 1080 Ti, and at £80 more than a 1080 Ti, it is not wildly out of reach if you were going to drop about £670 on a new graphics card anyway.

On top of that, what we have seen of ray tracing is promising. We do of course need to see more from actual games, not just one demo. Either way, we can't ignore what we have seen with regard to Turing GPUs and ray tracing: Pascal just won't cut it in that department.

The smart thing to do is wait until there are ray-traced games we can actually test. But if you are looking to buy a new GPU now, at only £80 more than the GTX 1080 Ti, the RTX 2080 has the upper hand.

You can buy the Nvidia RTX 2080 directly from Nvidia for £749. Overclockers UK also has a range of aftermarket cards starting at £749.99.

Pros

- Founders Edition card looks lovely.

- Quietest reference card we've tested.

- There are impressive gains to be had from overclocking.

- Faster than GTX 1080 Ti.

- The ray tracing testing we could do shows a clear benefit to Turing GPUs.

- FFXV ran noticeably better with DLSS when compared to TAA.

Cons

- We don't know how the card will perform with ray tracing in actual games.

- Not wildly faster than a GTX 1080 Ti.

- Fans keep spinning even under light loads.

KitGuru says: All of the talk has been about ray tracing – and what we have seen is certainly very promising. Current GTX 1080 Ti owners may not see much reason to upgrade, but for anyone else spending around this much cash on a new graphics card – the RTX 2080 is the one to go for.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards
